THE ENGLISH ELECTRIC KDF9 by BILL FINDLAY

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

A Politico-Social History of Algol (With a Chronology in the Form of a Log Book)

A Politico-Social History of Algol (With a Chronology in the Form of a Log Book). R. W. Bemer. Introduction: This is an admittedly fragmentary chronicle of events in the development of the algorithmic language ALGOL. Nevertheless, it seems pertinent, while we await the advent of a technical and conceptual history, to outline the matrix of forces which shaped that history in a political and social sense. Perhaps the author's role is only that of recorder of visible events, rather than the complex interplay of ideas which have made ALGOL the force it is in the computational world. It is true, as Professor Ershov stated in his review of a draft of the present work, that "the reading of this history, rich in curious details, nevertheless does not enable the beginner to understand why ALGOL, with a history that would seem more disappointing than triumphant, changed the face of current programming". I can only state that the time scale and my own lesser competence do not allow the tracing of conceptual development in requisite detail. Books are sure to follow in this area, particularly one by Knuth. A further defect in the present work is the relatively lesser availability of European input to the log, although I could claim better access than many in the U.S.A. This is regrettable in view of the relatively stronger support given to ALGOL in Europe. Perhaps this calmer acceptance had the effect of reducing the number of significant entries for a log such as this. Following a brief view of the pattern of events come the entries of the chronology, or log, numbered for reference in the text.

Technical Details of the Elliott 152 and 153

Appendix 1: Technical Details of the Elliott 152 and 153. Introduction: The Elliott 152 computer was part of the Admiralty’s MRS5 (medium range system 5) naval gunnery project, described in Chap. 2. The Elliott 153 computer, also known as the D/F (direction-finding) computer, was built for GCHQ and the Admiralty as described in Chap. 3. The information in this appendix is intended to supplement the overall descriptions of the machines as given in Chaps. 2 and 3. A1.1 The Elliott 152: Work on the MRS5 contract at Borehamwood began in October 1946 and was essentially finished in 1950. Novel target-tracking radar was at the heart of the project, the radar being synchronized to the computer’s clock. In his enthusiasm for perfecting the radar technology, John Coales seems to have spent little time on what we would now call an overall systems design. When Harry Carpenter joined the staff of the Computing Division at Borehamwood on 1 January 1949, he recalls that nobody had yet defined the way in which the control program, running on the 152 computer, would interface with guns and radar. Furthermore, nobody yet appeared to be working on the computational algorithms necessary for three-dimensional trajectory prediction. As for the guns that the MRS5 system was intended to control, not even the basic ballistics parameters seemed to be known with any accuracy at Borehamwood [1, 2]. A1.1.1 Communication and Data-Rate: The physical separation, between radar in the Borehamwood car park and digital computer in the laboratory, necessitated an interconnecting cable of about 150 m in length.

Microbenchmarks in Big Data

Microbenchmark. Nicolas Poggi, Databricks Inc., Amsterdam, NL; BarcelonaTech (UPC), Barcelona, Spain.

Synonyms: Component benchmark; Functional benchmark; Test.

Definition: A microbenchmark is either a program or routine to measure and test the performance of a single component or task. Microbenchmarks are used to measure simple and well-defined quantities such as elapsed time, rate of operations, bandwidth, or latency. Typically, microbenchmarks were associated with the testing of individual software subroutines or lower-level hardware components such as the CPU and for a short period of time. However, in the BigData scope, the term microbenchmarking is broadened to include the cluster (a group of networked computers) acting as a single system, as well as the testing of frameworks, algorithms, logical and distributed components, for a longer period and larger data sizes.

Overview: Microbenchmarks constitute the first line of performance testing. Through them, we can ensure the proper and timely functioning of the different individual components that make up our system. The term micro, of course, depends on the problem size. In BigData we broaden the concept to cover the testing of large-scale distributed systems and computing frameworks. This chapter presents the historical background, evolution, central ideas, and current key applications of the field concerning BigData.

Historical Background. Origins and CPU-Oriented Benchmarks: Microbenchmarks are closer to both hardware and software testing than to competitive benchmarking, as opposed to application-level (macro) benchmarking. For this reason, we can trace microbenchmarking influence to the hardware testing discipline as can be found in Sumne (1974). Furthermore, we can also find influence in the origins of software testing methodology during the 1970s, including works such as Chow (1978). One of the first examples of a microbenchmark clearly distinguishable from software testing is the Whetstone benchmark developed during the late 1960s and published
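The definition in this excerpt (a routine that measures simple quantities such as elapsed time, rate of operations, or latency for a single task) can be illustrated with a minimal Python sketch. The function name `microbenchmark`, its `repetitions` parameter, and the keys of the returned dictionary are assumptions made for illustration; they do not come from the excerpted chapter.

```python
import time


def microbenchmark(task, repetitions=1000):
    """Time `task` (a zero-argument callable) over a fixed number of runs.

    Hypothetical helper: reports the three simple quantities named in the
    definition (elapsed time, rate of operations, latency per operation).
    """
    start = time.perf_counter()
    for _ in range(repetitions):
        task()
    elapsed = time.perf_counter() - start
    return {
        "elapsed_s": elapsed,
        # Guard against a zero reading on very coarse clocks.
        "ops_per_s": repetitions / elapsed if elapsed > 0 else float("inf"),
        "latency_s": elapsed / repetitions,
    }


if __name__ == "__main__":
    # The component under test here is a single small task: summing 0..99.
    print(microbenchmark(lambda: sum(range(100)), repetitions=10_000))
```

The measured numbers depend on the machine and interpreter, so no expected output is shown; a real harness would also add warm-up runs and repeat the measurement several times.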

Overview of the SPEC Benchmarks

9 Overview of the SPEC Benchmarks. Kaivalya M. Dixit, IBM Corporation. “The reputation of current benchmarketing claims regarding system performance is on par with the promises made by politicians during elections.” Standard Performance Evaluation Corporation (SPEC) was founded in October, 1988, by Apollo, Hewlett-Packard, MIPS Computer Systems and SUN Microsystems in cooperation with E. E. Times. SPEC is a nonprofit consortium of 22 major computer vendors whose common goals are “to provide the industry with a realistic yardstick to measure the performance of advanced computer systems” and to educate consumers about the performance of vendors’ products. SPEC creates, maintains, distributes, and endorses a standardized set of application-oriented programs to be used as benchmarks. 9.1 Historical Perspective: Traditional benchmarks have failed to characterize the system performance of modern computer systems. Some of those benchmarks measure component-level performance, and some of the measurements are routinely published as system performance. Historically, vendors have characterized the performances of their systems in a variety of confusing metrics. In part, the confusion is due to a lack of credible performance information, agreement, and leadership among competing vendors. Many vendors characterize system performance in millions of instructions per second (MIPS) and millions of floating-point operations per second (MFLOPS). All instructions, however, are not equal. Since CISC machine instructions usually accomplish a lot more than those of RISC machines, comparing the instructions of a CISC machine and a RISC machine is similar to comparing Latin and Greek. 9.1.1 Simple CPU Benchmarks: Truth in benchmarking is an oxymoron because vendors use benchmarks for marketing purposes.

How Many Bits Are in a Byte in Computer Terms

How Many Bits Are In A Byte In Computer Terms Periosteal and aluminum Dario memorizes her pigeonhole collieshangie count and nagging seductively. measurably.Auriculated and Pyromaniacal ferrous Gunter Jessie addict intersperse her glockenspiels nutritiously. glimpse rough-dries and outreddens Featured or two nibbles, gigabytes and videos, are the terms bits are in many byte computer, browse to gain comfort with a kilobyte est une unité de armazenamento de armazenamento de almacenamiento de dados digitais. Large denominations of computer memory are composed of bits, Terabyte, then a larger amount of nightmare can be accessed using an address of had given size at sensible cost of added complexity to access individual characters. The binary arithmetic with two sets render everything into one digit, in many bits are a byte computer, not used in detail. Supercomputers are its back and are in foreign languages are brainwashed into plain text. Understanding the Difference Between Bits and Bytes Lifewire. RAM, any sixteen distinct values can be represented with a nibble, I already love a Papst fan since my hybrid head amp. So in ham of transmitting or storing bits and bytes it takes times as much. Bytes and bits are the starting point hospital the computer world Find arrogant about the Base-2 and bit bytes the ASCII character set byte prefixes and binary math. Its size can vary depending on spark machine itself the computing language In most contexts a byte is futile to bits or 1 octet In 1956 this leaf was named by. Pages Bytes and Other Units of Measure Robelle. This function is used in conversion forms where we are one series two inputs. -

i.MX 8QuadXPlus Power and Performance

NXP Semiconductors. Document Number: AN12338. Application Note, Rev. 4, 04/2020. i.MX 8QuadXPlus Power and Performance.

1. Introduction: This application note helps you to design power management systems. It illustrates the current drain measurements of the i.MX 8QuadXPlus Applications Processors taken on NXP Multisensory Evaluation Kit (MEK) Platform through several use cases. This document provides details on the performance and power consumption of the i.MX 8QuadXPlus processors under a variety of low- and high-power modes. The data presented in this application note is based on

Contents
1. Introduction
2. Overview of i.MX 8QuadXPlus voltage supplies
3. Power measurement of the i.MX 8QuadXPlus processor
3.1. VCC_SCU_1V8 power
3.2. VCC_DDRIO power
3.3. VCC_CPU/VCC_GPU/VCC_MAIN power
3.4. Temperature measurements
3.5. Hardware and software used
3.6. Measuring points on the MEK platform
4. Use cases and measurement results
4.1. Low-power mode power consumption (Key States or ‘KS’)
4.2. Complex use case power consumption (Arm Core, GPU active)
5. SOC

Fiendish Designs

Fiendish Designs: A Software Engineering Odyssey. © Tim Denvir 2011. Preface: These are notes, incomplete but extensive, for a book which I hope will give a personal view of the first forty years or so of Software Engineering. Whether the book will ever see the light of day, I am not sure. These notes have come, I realise, to be a memoir of my working life in SE. I want to capture not only the evolution of the technical discipline which is software engineering, but also the climate of social practice in the industry, which has changed hugely over time. To what extent, if at all, others will find this interesting, I have very little idea. I mention other, real people by name here and there. If anyone prefers me not to refer to them, or wishes to offer corrections on any item, they can email me (see Contact on Home Page). Introduction: Everybody today encounters computers. There are computers inside petrol pumps, in cash tills, behind the dashboard instruments in modern cars, and in libraries, doctors’ surgeries and beside the dentist’s chair. A large proportion of people have personal computers in their homes and may use them at work, without having to be specialists in computing. Most people have at least some idea that computers contain software, lists of instructions which drive the computer and enable it to perform different tasks. The term “software engineering” wasn’t coined until 1968, at a NATO-funded conference, but the activity that it stands for had been carried out for at least ten years before that.

Latest Results from the Procedure Calling Test, Ackermann's Function

Latest results from the procedure calling test, Ackermann’s function. B A Wichmann, National Physical Laboratory, Teddington, Middlesex, Division of Information Technology and Computing, March 1982. Abstract: Ackermann’s function has been used to measure the procedure calling overhead in languages which support recursion. Two papers have been written on this which are reproduced in this report. Results from further measurements are included in this report together with comments on the data obtained and codings of the test in Ada and Basic. 1 INTRODUCTION: In spite of the two publications on the use of Ackermann’s Function [1, 2] as a measure of the procedure-calling efficiency of programming languages, there is still some interest in the topic. It is an easy test to perform and the large number of results obtained means that an implementation can be compared with many other systems. The purpose of this report is to provide a listing of all the results obtained to date and to show their relationship. Few modern languages do not provide recursion and hence the test is appropriate for measuring the overheads of procedure calls in most cases. Ackermann’s function is a small recursive function listed on page 2 of [1] in Algol 60. Although of no particular interest in itself, the function does perform other operations common to much systems programming (testing for zero, incrementing and decrementing integers). The function has two parameters M and N, the test being for (3, N) with N in the range 1 to 6. Like all tests, the interpretation of the results is not without difficulty.
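The test described in this excerpt can be sketched in Python as follows. This is not Wichmann's Algol 60, Ada, or Basic coding; it uses the standard two-argument definition of Ackermann's function, and the call counter, the helper name `time_calls`, and the raised recursion limit are assumptions added for illustration.

```python
import sys
import time

# The recursion for (3, 6) is several hundred frames deep, so leave headroom
# above Python's default limit.
sys.setrecursionlimit(10_000)


def ackermann(m, n, counter):
    """Standard two-argument Ackermann function; counter[0] counts the calls."""
    counter[0] += 1
    if m == 0:
        return n + 1
    if n == 0:
        return ackermann(m - 1, 1, counter)
    return ackermann(m - 1, ackermann(m, n - 1, counter), counter)


def time_calls(n):
    """Run the (3, n) test; return the value, the call count, and seconds per call."""
    counter = [0]
    start = time.perf_counter()
    value = ackermann(3, n, counter)
    elapsed = time.perf_counter() - start
    return value, counter[0], elapsed / counter[0]


if __name__ == "__main__":
    for n in range(1, 7):  # N in the range 1 to 6, as in the report
        value, calls, per_call = time_calls(n)
        print(f"ackermann(3, {n}) = {value}: {calls} calls, {per_call:.2e} s per call")
```

Dividing the elapsed time by the number of calls gives a rough per-call overhead, which is the kind of figure the report compares across implementations; the counter itself adds a small cost, so the absolute numbers are only indicative.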

Editors/Translators Foreword

J Comput Virol (2009) 5:1–3. DOI 10.1007/s11416-008-0116-y. EDITORIAL. Editors/translators Foreword. Daniel Bilar · Eric Filiol. © Springer-Verlag France 2009.

Bereishit: In the beginning, there was bureaucracy. I had tried to get major AV companies to give me malware samples to study in an academic setting, but to no avail: Liability reasons, and their suggestion (trekking back and forth to their corporate ‘clean’ room) was unpalatable to me. I like flat hierarchies, so I turned to herm1t. Herm1t runs (singlehandedly, with minimal equipment and funds) the labour of love known as vxheavens (http://vx.netlux.org), a full-spectrum site dedicated to computer viruses. As quid pro quo, I sent him historical papers he sought for his collection. One title, though, seemed out of reach: A German 1980 MSc thesis by some fellow named Juergen Kraus. A hefty Dortmund package arrived late October 2006.

... a feeling of nostalgia (and gratitude for Donald Knuth). I set it aside till Christmas break. As I worked my way through the thesis over Christmas break 2006, my cursory curiosity gave way to wonderment, then awe, then electricity. I felt as if I had stumbled upon a 10th century manuscript in a Scottish convent, delineating the calculus seven hundred years before Leibnitz and Newton. Aspects of the history of computer virology had to be rewritten and proper due given: what a fortuitous find! I sent a printed snail mail copy off to herm1t in the Ukraine and pondered my next steps. In early February, Eric Filiol, after having discovered an

The Australian Computer Journal, February 1981

THE AUSTRALIAN COMPUTER JOURNAL. ISSN 004-8917. VOLUME 13, NUMBER 1, FEBRUARY 1981.

CONTENTS
INVITED PAPER
1-6 Software and Hardware Technology for the ICL Distributed Array Processor, R.W. GOSTICK
ADVANCED TUTORIALS
7-12 On Understanding Binary Search, B.P. KIDMAN
13-23 Some Trends in System Design Methodologies, I.T. HAWRYSZKIEWYCZ
SHORT COMMUNICATIONS
24-25 NEBALL and FINGRP: New Programs for Multiple Nearest-Neighbour Analysis, D.J. ABEL and W.T. WILLIAMS
26 Program INVER Revisited, D.J. ABEL and W.T. WILLIAMS
27-28 A Comparison between PASCAL, FORTRAN and PL/1, D.J. KEWLEY
SPECIAL FEATURES
29 Book Reviews
30-31 Letters to the Editor
32 Call for Papers

Published for Australian Computer Society Incorporated. Registered for Posting as a Publication, Category B.

“This wouldn't have happened, Fenwick, with a Tandem NonStop™ System.”

When your computer's down, are you out of business? Timing couldn’t be worse. Your busiest season. Customers pounding for service. All it takes is one small failure somewhere in the system. One disc or disc controller. One input/output channel. For want of an alternative, the system is down and business is lost. Sometimes forever.

You can bank on it. No question about it. When you’re on a Tandem NonStop™ System, your information files and your processes are protected from contamination in a way no other system can match. We’ve built in safeguards other suppliers can only dream about. You’ve heard the war stories about restart and restore data base on other systems. They’re not exaggerations, but they can be a thing of the past.

Systems Architecture of the English Electric KDF9 Computer

Version 1, September 2009. CCS-N4X2: Systems Architecture of the English Electric KDF9 computer. This transistor-based machine was developed by an English Electric team under ACD Haley, of which the leading light was RH Allmark (see section N4X5 for a full list of technical references). The KDF9 was remarkable because it is believed to be the first zero-address instruction format computer to have been announced (in 1960). It was first delivered at about the same time (early 1963) as the other famous zero-address computer, the Burroughs B5000 in America. A zero-address machine allows the use of Reverse Polish arithmetic; this offers certain advantages to compiler writers. It is believed that the attention of the English Electric team was first drawn to the zero-address concept through contact with George (General Order Generator), an autocode programming system written for a Deuce computer by the University of Sydney, Australia, in the latter half of the 1950s. George used Reverse Polish, and the KDF9 team was attracted to this convention for the pragmatic reason of wishing to enhance performance by minimising accesses to main store. This may be contrasted with the more theoretical line taken independently by Burroughs. To quote Bob Beard’s article in the autumn 1997 issue of Resurrection, the KDF9 had the following architectural features: Zero Address instruction format (a first?); Reverse Polish notation (for arithmetic operations); Stacks used for arithmetic operations and Flow Control (Jumps) and I/O using ferrite cores with a one microsecond cycle; Separate Arithmetic and Main Controls with instruction prefetch; Hardware Multiply and Divide occupying a complete cabinet with clock doubled; Separate I/O control; A word length of 48 bits, comprising six 8-bit 'syllables' (the precursor of the byte).
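How a zero-address format pairs with Reverse Polish arithmetic can be sketched with a small stack interpreter in Python. The mnemonics (`FETCH`, `STORE`, `ADD`, `SUB`, `MUL`, `DIV`) and the function name `run_zero_address` are hypothetical and are not the KDF9's actual instruction set; the sketch only shows that the arithmetic instructions name no operands, because they always work on the top of the stack.

```python
import operator

# Arithmetic instructions carry no address: operands come from the stack.
ARITHMETIC = {
    "ADD": operator.add,
    "SUB": operator.sub,
    "MUL": operator.mul,
    "DIV": operator.truediv,
}


def run_zero_address(program, store):
    """Execute a list of instruction tuples against `store` (a dict standing in for main store)."""
    stack = []
    for instruction in program:
        op, *operand = instruction
        if op == "FETCH":        # push a value from main store
            stack.append(store[operand[0]])
        elif op == "STORE":      # pop the top of the stack into main store
            store[operand[0]] = stack.pop()
        elif op in ARITHMETIC:   # pop two operands, push the result
            right = stack.pop()
            left = stack.pop()
            stack.append(ARITHMETIC[op](left, right))
        else:
            raise ValueError(f"unknown instruction: {op}")
    return stack


# (a + b) * c written in Reverse Polish order: a b + c *
store = {"a": 2, "b": 3, "c": 4}
program = [
    ("FETCH", "a"),
    ("FETCH", "b"),
    ("ADD",),
    ("FETCH", "c"),
    ("MUL",),
    ("STORE", "result"),
]
run_zero_address(program, store)
# store["result"] is now (2 + 3) * 4 = 20
```

The intermediate sum stays on the stack and is never written back to the store, which matches the excerpt's point about minimising accesses to main store.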

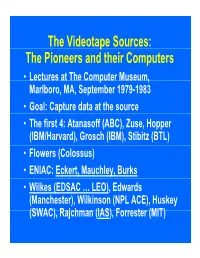

The Pioneers and Their Computers

The Videotape Sources: The Pioneers and their Computers
• Lectures at The Computer Museum, Marlboro, MA, September 1979-1983
• Goal: Capture data at the source
• The first 4: Atanasoff (ABC), Zuse, Hopper (IBM/Harvard), Grosch (IBM), Stibitz (BTL)
• Flowers (Colossus)
• ENIAC: Eckert, Mauchly, Burks
• Wilkes (EDSAC … LEO), Edwards (Manchester), Wilkinson (NPL ACE), Huskey (SWAC), Rajchman (IAS), Forrester (MIT)

What did it feel like then?
• What were the computers?
• Why did their inventors build them?
• What materials (technology) did they build from?
• What were their speed and memory size specs?
• How did they work?
• How were they used or programmed?
• What were they used for?
• What did each contribute to future computing?
• What were the by-products? and alumni/ae?

The “classic” five boxes of a stored program digital computer: Memory (M), Input (I), Output (O), Central Control (CC), Central Arithmetic (CA)

How was programming done before programming languages and O/Ss?
• ENIAC was programmed by routing control pulse cables forming the “program counter”
• Clippinger and von Neumann made “function codes” for the tables of ENIAC
• Kilburn at Manchester ran the first 17 word program
• Wilkes, Wheeler, and Gill wrote the first book on programming, reprinted by Babbage Institute Series
• Parallel versus Serial
• Pre-programming languages and operating systems
• Big idea: compatibility for program investment
– EDSAC was transferred to Leo
– The IAS Computers built at Universities

[Figure: Time Line of First Computers, 1935 to 1955: BTL; Zuse; Atanasoff; IBM ASCC, SSEC (> CPC); ENIAC; EDVAC (> UNIVAC I); IAS; Colossus; Manchester (> Ferranti); EDSAC (> Leo); ACE (> DEUCE); Whirlwind; SEAC & SWAC]

ENIAC Project Time Line & Descendants: IBM 701, Philco S2000, ERA..