1Pipe: Scalable Total Order Communication in Data Center Networks

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Eakta Jain A. Professional Preparation: B. Appointments: C. Publications

Eakta Jain, Department of Computer and Information Science and Engineering, University of Florida. Phone: (352) 562-3979. E-mail: [email protected]. Webpage: jainlab.cise.ufl.edu

A. Professional Preparation: Indian Institute of Technology Kanpur, India, B.Tech. (Electrical Engineering), 2006; Carnegie Mellon University, M.S. (Robotics), 2010; Carnegie Mellon University, Ph.D. (Robotics), 2012.

B. Appointments: 02/14 – present, Assistant Professor, Department of Computer and Information Science and Engineering, University of Florida, Gainesville, FL; 12/12-02/14, Member of Technical Staff, Texas Instruments Embedded Signal Processing Lab, Computer Vision Branch, Dallas, TX; 07/12-12/12, Systems Software Engineer, Natural User Interfaces Team, Texas Instruments, Dallas, TX; 09/11-01/12, Lab Associate, Disney Research Pittsburgh, PA; 06/10-08/10, Lab Associate, Disney Research Pittsburgh, PA; 06/08-08/08, Lab Associate, Disney Research Pittsburgh, PA; 06/07-08/07, Walt Disney Animation Studios, Burbank, CA.

C. Publications:

Is the Motion of a Child Perceivably Different from the Motion of an Adult? Jain, E., Anthony, L., Aloba, A., Castonguay, A., Cuba, I., Shaw, A. and Woodward, J. (2016). ACM Transactions on Applied Perception (TAP), 13(4), Article 22. DOI: 10.1145/2947616

Decoupling Light Reflex from Pupillary Dilation to Measure Emotional Arousal in Videos, Raiturkar, P., Kleinsmith, A., Keil, A., Banerjee, A., and Jain, E. (2016), ACM Symposium on Applied Perception (SAP), 89-96.

Gaze-driven Video Re-editing, Jain, E., Sheikh, Y., Shamir, A. and Hodgins, J., (2015), ACM Transactions on Graphics (TOG) (34, p21:1-p21:12). DOI: 10.1145/2699644

3D Saliency from Eye Tracking with Tomography, Ma, B., Jain, E., and Entezari, A. -

Department of Computer Science and Engineering

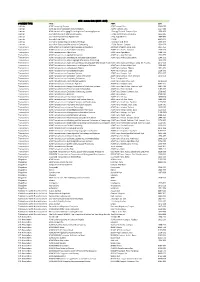

Department of Computer Science and Engineering: Journals (SJR)

| Title | ISSN | SJR | Quartile | Country |
| --- | --- | --- | --- | --- |
| Molecular Systems Biology | 17444292 | 8.87 | Q1 | United Kingdom |
| IEEE Transactions on Pattern Analysis and Machine Intelligence | 01628828 | 7.653 | Q1 | United States |
| MIS Quarterly: Management Information Systems | 02767783 | 6.984 | Q1 | United States |
| Wiley Interdisciplinary Reviews: Computational Molecular Science | 17590884, 17590876 | 6.715 | Q1 | United States |
| Foundations and Trends in Machine Learning | 19358237 | 6.194 | Q1 | United States |
| IEEE Transactions on Fuzzy Systems | 10636706 | 5.8 | Q1 | United States |
| International Journal of Computer Vision | 09205691 | 5.633 | Q1 | Netherlands |
| Journal of Supply Chain Management | 15232409 | 5.343 | Q1 | United Kingdom |
| Journal of Operations Management | 02726963 | 5.052 | Q1 | Netherlands |
| IEEE Transactions on Smart Grid | 19493053 | 4.784 | Q1 | United States |
| Bioinformatics | 13674811, 13674803 | 4.643 | Q1 | United Kingdom |
| Information Systems Research | 15265536, 10477047 | 4.397 | Q1 | United States |
| IEEE Transactions on Evolutionary Computation | 1089778X | 4.308 | Q1 | United States |
| IEEE Transactions on Automatic Control | 00189286 | 4.238 | Q1 | United States |
| International Journal of Robotics Research | 02783649 | 4.184 | Q1 | United States |
| Briefings in Bioinformatics | 14774054, 14675463 | 4.086 | Q1 | United Kingdom |
| SIAM Journal on Optimization | 10526234, 10957189 | 3.943 | Q1 | United States |
| IEEE Transactions on Systems, Man and Cybernetics Part B: Cybernetics | 10834419 | 3.921 | Q1 | United States |
| Internet and Higher Education | 10967516 | - | | |

A ACM Transactions on Trans. 1553 TITLE ABBR ISSN ACM Computing Surveys ACM Comput. Surv. 0360‐0300 ACM Journal

ACM - list of titles (2016 - 2019)

| CONTENT TYPE | TITLE | ABBR | ISSN |
| --- | --- | --- | --- |
| Journals | ACM Computing Surveys | ACM Comput. Surv. | 0360‐0300 |
| Journals | ACM Journal of Computer Documentation | ACM J. Comput. Doc. | 1527‐6805 |
| Journals | ACM Journal on Emerging Technologies in Computing Systems | J. Emerg. Technol. Comput. Syst. | 1550‐4832 |
| Journals | Journal of Data and Information Quality | J. Data and Information Quality | 1936‐1955 |
| Journals | Journal of Experimental Algorithmics | J. Exp. Algorithmics | 1084‐6654 |
| Journals | Journal of the ACM | J. ACM | 0004‐5411 |
| Journals | Journal on Computing and Cultural Heritage | J. Comput. Cult. Herit. | 1556‐4673 |
| Journals | Journal on Educational Resources in Computing | J. Educ. Resour. Comput. | 1531‐4278 |
| Transactions | ACM Letters on Programming Languages and Systems | ACM Lett. Program. Lang. Syst. | 1057‐4514 |
| Transactions | ACM Transactions on Accessible Computing | ACM Trans. Access. Comput. | 1936‐7228 |
| Transactions | ACM Transactions on Algorithms | ACM Trans. Algorithms | 1549‐6325 |
| Transactions | ACM Transactions on Applied Perception | ACM Trans. Appl. Percept. | 1544‐3558 |
| Transactions | ACM Transactions on Architecture and Code Optimization | ACM Trans. Archit. Code Optim. | 1544‐3566 |
| Transactions | ACM Transactions on Asian Language Information Processing | | 1530‐0226 |
| Transactions | ACM Transactions on Asian and Low‐Resource Language Information Proce | ACM Trans. Asian Low‐Resour. Lang. Inf. Process. | 2375‐4699 |
| Transactions | ACM Transactions on Autonomous and Adaptive Systems | ACM Trans. Auton. Adapt. Syst. | 1556‐4665 |
| Transactions | ACM Transactions on Computation Theory | ACM Trans. Comput. Theory | 1942‐3454 |
| Transactions | ACM Transactions on Computational Logic | ACM Trans. Comput. Logic | 1529‐3785 |
| Transactions | ACM Transactions on Computer Systems | ACM Trans. Comput. Syst. | 0734‐2071 |
| Transactions | ACM Transactions on Computer‐Human Interaction | ACM Trans. - | |

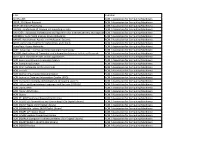

ACM JOURNALS S.No. TITLE PUBLICATION RANGE :STARTS PUBLICATION RANGE: LATEST URL 1. ACM Computing Surveys Volume 1 Issue 1

ACM JOURNALS

| S.No. | TITLE | PUBLICATION RANGE: STARTS | PUBLICATION RANGE: LATEST | URL |
| --- | --- | --- | --- | --- |
| 1. | ACM Computing Surveys | Volume 1 Issue 1 (March 1969) | Volume 49 Issue 3 (October 2016) | http://dl.acm.org/citation.cfm?id=J204 |
| 2. | ACM Journal of Computer Documentation | Volume 24 Issue 1 (Feb. 1, 2000) | Volume 26 Issue 4 (November 2002) | http://dl.acm.org/citation.cfm?id=J24 |
| 3. | ACM Journal on Emerging Technologies in Computing Systems | Volume 1 Issue 1 (April 2005) | Volume 13 Issue 2 (October 2016) | http://dl.acm.org/citation.cfm?id=J967 |
| 4. | Journal of Data and Information Quality | Volume 1 Issue 1 (June 2009) | Volume 8 Issue 1 (October 2016) | http://dl.acm.org/citation.cfm?id=J1191 |
| 5. | Journal on Educational Resources in Computing | Volume 1 Issue 1es (March 2001) | Volume 16 Issue 2 (March 2016) | http://dl.acm.org/citation.cfm?id=J814 |
| 6. | Journal of Experimental Algorithmics | Volume 1 (1996) | Volume 21 (2016) | http://dl.acm.org/citation.cfm?id=J430 |
| 7. | Journal of the ACM | Volume 1 Issue 1 (Jan. 1954) | Volume 63 Issue 4 (October 2016) | http://dl.acm.org/citation.cfm?id=J401 |
| 8. | Journal on Computing and Cultural Heritage | Volume 1 Issue 1 (June 2008) | Volume 9 Issue 3 (October 2016) | http://dl.acm.org/citation.cfm?id=J1157 |
| 9. | ACM Letters on Programming Languages and Systems | Volume 1 Issue 1 (March 1992) | Volume 2 Issue 1-4 (March–Dec. 1993) | http://dl.acm.org/citation.cfm?id=J513 |
| 10. | ACM Transactions on Accessible Computing | Volume 1 Issue 1 (May 2008) | Volume 9 Issue 1 (October 2016) | http://dl.acm.org/citation.cfm?id=J1156 |
| 11. | - | | | |

1 Acmsmall Author Submission Guide: Setting up Your Latex2ε Files

acmsmall Author Submission Guide: Setting Up Your LaTeX 2ε Files. DONALD E. KNUTH, Stanford University; LESLIE LAMPORT, Microsoft Corporation.

The LaTeX 2ε acmsmall document class formats articles in the style of the ACM small size journals and transactions. Users who have prepared their document with LaTeX 2ε can, with very little effort, produce camera-ready copy for these journals.

Categories and Subject Descriptors: D.2.7 [Software Engineering]: Distribution and Maintenance—documentation; H.4.0 [Information Systems Applications]: General; I.7.2 [Text Processing]: Document Preparation—languages; photocomposition

General Terms: Documentation, Languages

Additional Key Words and Phrases: Document preparation, publications, typesetting

ACM Reference Format: Donald E. Knuth and Leslie Lamport. 2010. acmsmall Author submission guide: setting up your LaTeX 2ε files. ACM Comput. Surv. 2, 3, Article 1 (July 2010), 14 pages. DOI:http://dx.doi.org/10.1145/0000000.0000000

1. INTRODUCTION

This article is a description of the LaTeX 2ε acmsmall document class for typesetting articles in the format of the ACM small size transactions and journals—Transactions on Programming Languages and Systems, Journal of the ACM, etc. It has, of course, been typeset using this document class, so it is a self-illustrating article. The reader is assumed to be familiar with LaTeX, as described by Lamport [1986]. This document also describes the acmsmall bibliography style. LaTeX 2ε is a document preparation system implemented as a macro package in Donald Knuth’s TeX typesetting system [Knuth 1984]. It is based upon the premise that the user should describe the logical structure of his document and not how the document is to be formatted. -

Distributed Event Routing in Publish/Subscribe Communication Systems: a Survey

Distributed Event Routing in Publish/Subscribe Communication Systems: a Survey. Roberto Baldoni, Leonardo Querzoni, and Antonino Virgillito, Dipartimento di Informatica e Sistemistica, Università di Roma “La Sapienza”.

Abstract. Distributed event routing has emerged as a key technology for achieving scalable information dissemination. In particular it has been used as preferential communication backbone within publish/subscribe communication system. Its aim is to reduce the network and computational overhead per event diffusion to a set (possibly large) of interested recipients. This paper introduces an unifying framework, namely a publish/subscribe architecture, that points out the functional decomposition between event-based routing layer, the overlay infrastructure layer and the network protocols layer. Hence the paper, firstly, surveys current algorithms for event based routing and possible overlay infrastructures in wired and mobile systems and, secondly, it discusses how and when single solutions at each level can be combined in the publish/subscribe architecture. Finally the paper positions existing publish/subscribe systems within the proposed architecture.

1 Introduction

Since the early nineties, anonymous and asynchronous dissemination of information has been a basic building block for typical distributed application such as stock exchanges, news tickers and air-traffic control. With the advent of ubiquitous computing and of the ambient intelligence, information dissemination solutions have to face challenges such as the exchange of huge amounts of information, large and dynamic number of participants possibly deployed over a large network (e.g. peer-to-peer systems), mobility and scarcity of resources (e.g. mobile ad-hoc and sensor networks) [1]. Publish/Subscribe (pub/sub) systems are a key technology for information dissemination. -

Operating Systems and Middleware: Supporting Controlled Interaction

Operating Systems and Middleware: Supporting Controlled Interaction. Max Hailperin, Gustavus Adolphus College. Revised Edition 1.1, July 27, 2011. Copyright © 2011 by Max Hailperin. This work is licensed under the Creative Commons Attribution-ShareAlike 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by-sa/3.0/ or send a letter to Creative Commons, 171 Second Street, Suite 300, San Francisco, California, 94105, USA.

Bibliography

[1] Atul Adya, Barbara Liskov, and Patrick E. O’Neil. Generalized isolation level definitions. In Proceedings of the 16th International Conference on Data Engineering, pages 67–78. IEEE Computer Society, 2000.

[2] Alfred V. Aho, Peter J. Denning, and Jeffrey D. Ullman. Principles of optimal page replacement. Journal of the ACM, 18(1):80–93, 1971.

[3] AMD. AMD64 Architecture Programmer’s Manual Volume 2: System Programming, 3.09 edition, September 2003. Publication 24593.

[4] Dave Anderson. You don’t know jack about disks. Queue, 1(4):20–30, 2003.

[5] Dave Anderson, Jim Dykes, and Erik Riedel. More than an interface—SCSI vs. ATA. In Proceedings of the 2nd Annual Conference on File and Storage Technology (FAST). USENIX, March 2003.

[6] Ross Anderson. Security Engineering: A Guide to Building Dependable Distributed Systems. Wiley, 2nd edition, 2008.

[7] Apple Computer, Inc. Kernel Programming, 2003. Inside Mac OS X.

[8] Apple Computer, Inc. HFS Plus volume format. Technical Note TN1150, Apple Computer, Inc., March 2004.

[9] Ozalp Babaoglu and William Joy. Converting a swap-based system to do paging in an architecture lacking page-referenced bits. -

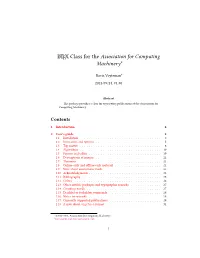

LATEX Class for the Association for Computing Machinery∗

LaTeX Class for the Association for Computing Machinery. Boris Veytsman. 2021/09/24, v1.80. ©2016–2021, Association for Computing Machinery. [email protected], [email protected]

Abstract

This package provides a class for typesetting publications of the Association for Computing Machinery.

Contents

1 Introduction
2 User’s guide
2.1 Installation
2.2 Invocation and options
2.3 Top matter
2.4 Algorithms
2.5 Figures and tables
2.6 Descriptions of images
2.7 Theorems
2.8 Online-only and offline-only material
2.9 Note about anonymous mode
2.10 Acknowledgments
2.11 Bibliography
2.12 Colors
2.13 Other notable packages and typographic remarks
2.14 Counting words
2.15 Disabled or forbidden commands
2.16 Notes for wizards
2.17 Currently supported publications
2.18 A note about sigchi-a format

1 Introduction

The Association for Computing Machinery is the world’s largest educational and scientific computing society, which delivers resources that advance computing as a science and a profession. It was one of the early adopters of TeX for its typesetting. It provided several different classes for a number of journals and conference proceedings. Unfortunately during the years since these classes were written, the code was patched many times, and supporting different versions of the classes became difficult. This package provides the uniform interface for all ACM publications. It is intended to replace all the different classes and packages and provide an up-to-date LaTeX package. -

Title Publisher 3C ON-LINE ACM / Association for Computing Machinery 3DOR

| Title | Publisher |
| --- | --- |
| 3C ON-LINE | ACM / Association for Computing Machinery |
| 3DOR: 3D Object Retrieval | ACM / Association for Computing Machinery |
| 3DVP: 3D Video Processing | ACM / Association for Computing Machinery |
| A2CWiC: Conference of Women in Computing in India | ACM / Association for Computing Machinery |
| AAA-IDEA : Advanced Architectures and Algorithms for Internet Delivery and Applications | ACM / Association for Computing Machinery |
| AADEBUG: Automated analysis-driven debugging | ACM / Association for Computing Machinery |
| AAMAS: Autonomous Agents and Multiagent Systems | ACM / Association for Computing Machinery |
| ACDC: Automated Control for Datacenters and Clouds | ACM / Association for Computing Machinery |
| AcessNets: Access Networks | ACM / Association for Computing Machinery |
| ACET: Advances in Computer Enterntainment Technology | ACM / Association for Computing Machinery |
| ACISNR: Applications of Computer and Information Sciences to Nature Research | ACM / Association for Computing Machinery |
| ACL2: ACL2 Theorem Prover and its Applications | ACM / Association for Computing Machinery |
| ACM Communications in Computer Algebra | ACM / Association for Computing Machinery |
| ACM Computing Surveys | ACM / Association for Computing Machinery |
| ACM DEV: Computing for Development | ACM / Association for Computing Machinery |
| ACM Inroads | ACM / Association for Computing Machinery |
| ACM Journal of Computer Documentation | ACM / Association for Computing Machinery |
| ACM Journal of Data and Information Quality (JDIQ) | ACM / Association for Computing Machinery |
| ACM journal on emerging technologies in computing - | |

Natalie Enright Jerger

Natalie Enright Jerger

Contact Information: The Edward S. Rogers Department of Electrical and Computer Engineering, University of Toronto, 10 King’s College Rd, Toronto, ON M5S 3G4 Canada. Voice: (416) 978-5056. Fax: (416) 971-2326. E-mail: [email protected]. Webpage: www.eecg.toronto.edu/~enright

Education: University of Wisconsin, Madison, Wisconsin USA. Ph.D., Electrical Engineering, December 2008. Dissertation: “Chip Multiprocessor Coherence and Interconnect System Design”. Advisors: Mikko H. Lipasti and Li-Shiuan Peh. M.S., Electrical and Computer Engineering, May 2004. Purdue University, West Lafayette, Indiana USA. B.S., Computer Engineering, May 2002.

Appointment: University of Toronto, Electrical and Computer Engineering, Toronto, Ontario, Canada. Professor, July 2017 - Present; Associate Professor, July 2014 - July 2017; Assistant Professor, January 2009 - July 2014.

Research Interests: Computer architecture, many-core architectures, approximate computing, on-chip networks, cache coherence protocols, interconnection networks, emerging applications for many-core architectures.

Industrial Experience: Advanced Micro Devices, Visiting Scholar, Bellevue, WA, July 2015-December 2016; IBM, Intern, Rochester, MN, June 2006 - Oct 2006; Intel, Intern, Microarchitecture Research Lab, Santa Clara, CA, May 2004 - December 2004; Hewlett Packard, Intern, Vancouver, WA, May 2001 - August 2001; Hewlett Packard, Intern, Vancouver, WA, May 2000 - August 2000.

Honors and Awards: Gordon R. Slemon Teaching of Design Award, 2017; IEEE MICRO Top Picks Honorable Mention for “The Anytime Automaton”, -

The ACM Digital Library

The ACM Digital Library. Presented by AJITESH MOY GHOSH.

Contents: Overview; History of ACM Digital Library; Products/Contents enhancement; ACM Publications in Digital Library; ACM Digital Library Platform; Full Text Content in ACM Digital Library; ACM Magazines Included in Digital Library; Pricing.

Overview: All of the ACM journals and proceedings online. Constantly growing and expanding content. Searching and browsing is free to the world. See it at: http://www.acm.org/dl

History of ACM Digital Library: Began creating electronic files in January 1997. Launched a demo version in July 1997 that was free to the world, including unlimited downloading of full text. Went “live” on 1 January 1998 (for pay). Originally contained 120K pages of full text from 1991 to 1997.

Products/Contents enhancement: ACM journals going back to 1947. All proceedings going back to 1947. All Newsletters (34) to 1977. Usage Stats are available.

ACM Publications in Digital Library: The Premier Publisher of Scholarly Information in the field of Computing. 7 Journals - High Impact Titles according to ISI / Web of Science. 10 Magazines - Including Flagship Communications of the ACM. 25+ Transactions. 270+ Proceedings Titles - Archive contains over 2,000 annual volumes. 58 Newsletters – Published by ACM & various ACM Special Interest Groups. 43 Special Interest Groups contributing content to ACM Digital Library. 24 Publications from Affiliate Publishers included in ACM Digital Library. 800 videos, animations, movies, etc. 12 Oral History Interviews. The Guide -

Automatic Software Repair: a Bibliography Martin Monperrus

Automatic Software Repair: a Bibliography. Martin Monperrus.

To cite this version: Martin Monperrus. Automatic Software Repair: a Bibliography. ACM Computing Surveys, Association for Computing Machinery, 2017, 51, pp.1-24. 10.1145/3105906. hal-01206501

HAL Id: hal-01206501. https://hal.archives-ouvertes.fr/hal-01206501. Submitted on 29 Sep 2015.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Automatic Software Repair: a Bibliography. Martin Monperrus, University of Lille & Inria, [email protected]. Accepted for publication in ACM Computing Surveys on June 3rd 2017.

Abstract: This article presents a survey on automatic software repair. Automatic software repair consists of automatically finding a solution to software bugs, without human intervention. This article considers all kinds of repair. First, it discusses behavioral repair where test-suites, contracts, models, crashing inputs are taken as oracle. Second, it discusses state repair, also known as runtime repair or runtime recovery, with techniques such as checkpoint and restart, reconfiguration, invariant restoration. The uniqueness of this article is that it spans the research communities that contribute to this body of knowledge: software engineering, dependability, operating systems, programming languages and security.