Symposium Preface

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Stylistic Evolution of Jazz Drummer Ed Blackwell: the Cultural Intersection of New Orleans and West Africa

STYLISTIC EVOLUTION OF JAZZ DRUMMER ED BLACKWELL: THE CULTURAL INTERSECTION OF NEW ORLEANS AND WEST AFRICA David J. Schmalenberger Research Project submitted to the College of Creative Arts at West Virginia University in partial fulfillment of the requirements for the degree of Doctor of Musical Arts in Percussion/World Music Philip Faini, Chair Russell Dean, Ph.D. David Taddie, Ph.D. Christopher Wilkinson, Ph.D. Paschal Younge, Ed.D. Division of Music Morgantown, West Virginia 2000 Keywords: Jazz, Drumset, Blackwell, New Orleans Copyright 2000 David J. Schmalenberger ABSTRACT Stylistic Evolution of Jazz Drummer Ed Blackwell: The Cultural Intersection of New Orleans and West Africa David J. Schmalenberger The two primary functions of a jazz drummer are to maintain a consistent pulse and to support the soloists within the musical group. Throughout the twentieth century, jazz drummers have found creative ways to fulfill or challenge these roles. In the case of Bebop, for example, pioneers Kenny Clarke and Max Roach forged a new drumming style in the 1940’s that was markedly more independent technically, as well as more lyrical in both time-keeping and soloing. The stylistic innovations of Clarke and Roach also helped foster a new attitude: the acceptance of drummers as thoughtful, sensitive musical artists. These developments paved the way for the next generation of jazz drummers, one that would further challenge conventional musical roles in the post-Hard Bop era. One of Max Roach’s most faithful disciples was the New Orleans-born drummer Edward Joseph “Boogie” Blackwell (1929-1992). Ed Blackwell’s playing style at the beginning of his career in the late 1940’s was predominantly influenced by Bebop and the drumming vocabulary of Max Roach. -

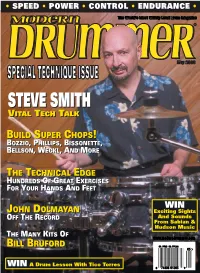

Steve Smith

• SPEED • POWER • CONTROL • ENDURANCE • SPECIAL TECHNIQUE ISSUE STEVE SMITH: VITAL TECH TALK. BUILD SUPER CHOPS! BOZZIO, PHILLIPS, BISSONETTE, BELLSON, WECKL, AND MORE. THE TECHNICAL EDGE: HUNDREDS OF GREAT EXERCISES FOR YOUR HANDS AND FEET. JOHN DOLMAYAN OFF THE RECORD. THE MANY KITS OF BILL BRUFORD. WIN Exciting Sights And Sounds From Sabian & Hudson Music. WIN A Drum Lesson With Tico Torres. $4.99US $6.99CAN Contents Volume 27, Number 5 Cover photo by Alex Solca STEVE SMITH You can’t expect to be a future drum star if you haven’t studied the past. As a self-proclaimed “US ethnic drummer,” Steve Smith has made it his life’s work to explore the uniquely American drumset, and the way it has shaped our music. by Bill Milkowski BUILDING SUPER CHOPS There’s more than one way to look at technique. Just ask Terry Bozzio, Thomas Lang, Kenny Aronoff, Bill Bruford, Dave Weckl, Gregg Bissonette, Tommy Aldridge, Mike Mangini, Louie Bellson, Horacio Hernandez, Simon Phillips, David Garibaldi, Virgil Donati, and Carl Palmer. by Mike Haid UPDATE Paul Doucette of Matchbox Twenty, Gavin Harrison of Porcupine Tree, George Rebelo of Hot Water Music, Duduka Da Fonseca, samba sensation THE TECHNICAL EDGE An unprecedented gathering of serious chops-increasing exercises, MD’s exclusive Technical Edge feature aims to do no less than make you a significantly better drummer. Work out your hands, feet, and around-the-drums chops like you’ve never worked ’em before. A DIFFERENT VIEW TOM SCOTT You’d need a strongman just to lift his complete résumé; that’s how invaluable top musicians have found saxophonist Tom Scott’s playing over the past three decades. -

BAM R&B Festival at MetroTech Returns with 10 Free Concerts, Jun 7

BAM R&B Festival at MetroTech returns with 10 free concerts, Jun 7—Aug 9 The stellar lineup includes Bernard Purdie, Bobbi Humphrey, Savion Glover, Terence Blanchard, Vivian Green, and Marcus Miller Forest City Ratner Companies is the Presenting Sponsor of BAM R&B Festival at MetroTech BAM R&B Festival at MetroTech Thursdays, noon—2pm, Jun 7—Aug 9 Free admission, MetroTech Commons at MetroTech Center Produced by Danny Kapilian Jun 7 Bernard Purdie’s All-Star Shuffle with guests Bobbi Humphrey and Quiana Lynell Jun 14 Savion Glover featuring Marcus Gilmore Jun 21 PJ Morton Jun 28 Vivian Green Jul 5 Delgres Jul 12 Amalgarhythm with Kris Davis and Terri Lyne Carrington Jul 19 Jupiter & Okwess Jul 26 Terence Blanchard featuring the E-Collective Aug 2 Ranky Tanky Aug 9 Marcus Miller May 3, 2018/Brooklyn, NY—BAM R&B Festival at MetroTech, Downtown Brooklyn’s best summer tradition, returns from June 7 to August 9 with 10 afternoons of jazz, soul, R&B, and some tap dancing. Now in its 24th year, the festival continues to feature music legends alongside groundbreaking emerging artists. Producer Danny Kapilian said, “It’s so great to bring dynamic artists every year to perform in Downtown Brooklyn. This is one of those adventurous years where we are stepping into some thrilling new musical territory. Four of our star artists this summer live where R&B and jazz combine to create something totally new. Legendary tap dancing star Savion Glover presents a duo performance with the brilliant young jazz drummer Marcus Gilmore. Terri Lyne Carrington is a three-time Grammy-winning drummer who’s bridged the music of Dianne Reeves, Esperanza Spalding, and Valerie Simpson. -

Recorded Jazz in the 20th Century

Recorded Jazz in the 20th Century: A (Haphazard and Woefully Incomplete) Consumer Guide by Tom Hull Copyright © 2016 Tom Hull Table of Contents: Introduction, Individuals, Groups. Introduction write something here Work and Release Notes write some more here Acknowledgments Some of this is already written above: Robert Christgau, Chuck Eddy, Rob Harvilla, Michael Tatum. Add a blanket thanks to all of the many publicists and musicians who sent me CDs. End with Laura Tillem, of course. Individuals Ahmed Abdul-Malik Ahmed Abdul-Malik: Jazz Sahara (1958, OJC) Originally Sam Gill, an American but with roots in Sudan, he played bass with Monk but mostly plays oud on this date. Middle Eastern rhythm and tone, topped with the irrepressible Johnny Griffin on tenor sax. An interesting piece of hybrid music. [+] John Abercrombie John Abercrombie: Animato (1989, ECM -90) Mild mannered guitar record, with Vince Mendoza writing most of the pieces and playing synthesizer, while Jon Christensen adds some percussion. [+] John Abercrombie/Jarek Smietana: Speak Easy (1999, PAO) Smietana -

December 2002

PORTABLE • BILL STEWART • CONSUMERS POLL RESULTS DENNIS CHAMBERS, KARL PERAZZO, RAUL REKOW: SANTANA’S FINEST RHYTHM TEAM? WEEZER’S PAT WILSON. THROUGH THE YEARS WITH TERRY BOZZIO. NEW FOUND GLORY’S CYRUS BOLOOKI. TRADING FOURS WITH PHILLY JOE. PLUS CARLOS SANTANA TALKS DRUMMERS! $4.99US $6.99CAN Contents Volume 27, Number 12 Cover photo by Paul La Raia SANTANA’S DENNIS CHAMBERS, RAUL REKOW, AND KARL PERAZZO The heaviest rhythm section in rock has just gotten heavier. Even Carlos Santana himself is in awe of his new Dennis Chambers–led ensemble. by Robin Tolleson (Photo by Paul La Raia) UPDATE Portable’s Brian Levy, Wayward Shamans’ Barrett Martin, Fugazi’s Brendan Canty, Mickey Hart, Daryl Stuermer’s John Calarco WEEZER’S PAT WILSON From Neil Peart obsessive to Muppet hostage, Pat Wilson has been through a lot in ten years with pop gods Weezer. Oh, he plays the heck out of the drums, too. by Adam Budofsky MD CONSUMERS POLL RESULTS The best in today’s gear, according to you. A DIFFERENT VIEW CARLOS SANTANA Just a sample of his drum cohorts over the years: Michael Shrieve, Jack DeJohnette, Horacio Hernandez, Tony Williams, Carter Beauford, Chester Thompson, and now, Dennis Chambers. Take your seats, class is about to begin. NEW FOUND GLORY’S CYRUS BOLOOKI Even a disastrous fall from a -

Jazz Brazz Program Fall 2011

Department of Music, Fulton School of Liberal Arts, Salisbury University Jazz Brazz Big Band Jerry Tabor, Director & World Drum Ensemble Ted Nichols, Director www.salisbury.edu PROGRAM World Drum Ensemble Ted Nichols, Director Baga Gine: “Baga Gine” literally means “Baga woman.” The Baga are an ethnic group in northwest Guinea. There is a story behind this music: A Baga woman hears music. At first she doesn’t want to dance, but the music is so good that she can’t stand it any longer and starts to dance. Downfall (John R. Beck): This ensemble is a “rudimental fantasy,” which utilizes themes from three traditional snare drum compositions: Three Camps, The Downfall of Paris and Connecticut Halftime, a nod to Steve Gadd’s performance of Fifty Ways to Leave Your Lover! Instrumentation: three snare drums, bass drum and two optional toms. Balakulania (Sylvia Franke and Ibro Konate): The song is from upper Guinea and the rhythms are played to call the farmers to work in the fields in the early morning. Soko (Mamady Keita): The Malinke people of Guinea have handed down these rhythms. They are played as children arrive in each village after the announcement of a special celebration. Drummer’s Bucket List (Ted Nichols): This list of drummers’ favorites includes: Let There Be Drums by Sandy Nelson, Sing Sing Sing as played by Gene Krupa, Wipe Out by The Surfaris and an opening section from Queen’s We Will Rock You. The performance features a drywall bucket orchestra with two trashcans. Taiko Excel (Koki Suzuki): Taiko is a drumming style of Japanese origin. -

Thesis University of London

THESIS UNIVERSITY OF LONDON COPYRIGHT This is a thesis accepted for a higher degree of the University of London. It has been made available to the Institute of Education library for public reference, photocopying and inter-library loan. The copyright is held by the author. All persons consulting the thesis must read and abide by the Copyright Declaration below. COPYRIGHT DECLARATION I recognise that the copyright of this thesis rests with the author and that no quotation from it or information derived from it may be published without the prior written consent of the author. 'I drum, therefore I am'? A study of kit drummers' identities, practices and learning Gareth Dylan Smith Institute of Education, University of London Submitted for PhD ABSTRACT Drummers have largely been neglected in scholarly literature on music and education, despite being active in large numbers in popular music and having an increasing presence in the music education arena. The study explores the identities, practices and learning of kit drummers in the UK from an emic perspective, using a mixed methodological approach with a focus on qualitative sociological enquiry drawing on interpretative phenomenological analysis and grounded theory. Semi-structured interviews were conducted with 15 teenage drummers and 12 adult drummers; both age groups were interviewed to allow for consideration of whether adults' reflections on their formative years differ greatly from those of teenage drummers today. Secondary data were gathered from a brief questionnaire conducted with 100 more drummers to support and contextualize the richer interview data. Data were also taken from relevant biographies, audio/visual media and journalistic sources. -

Kurt & Courtney

KURT & COURTNEY -- ILLUSTRATED SCREENPLAY & SCREENCAP GALLERY directed by Nick Broomfield © 1997 Strength Ltd. YOU ARE REQUIRED TO READ THE COPYRIGHT NOTICE AT THIS LINK BEFORE YOU READ THE FOLLOWING WORK, THAT IS AVAILABLE SOLELY FOR PRIVATE STUDY, SCHOLARSHIP OR RESEARCH PURSUANT TO 17 U.S.C. SECTION 107 AND 108. IN THE EVENT THAT THE LIBRARY DETERMINES THAT UNLAWFUL COPYING OF THIS WORK HAS OCCURRED, THE LIBRARY HAS THE RIGHT TO BLOCK THE I.P. ADDRESS AT WHICH THE UNLAWFUL COPYING APPEARED TO HAVE OCCURRED. THANK YOU FOR RESPECTING THE RIGHTS OF COPYRIGHT OWNERS. [Transcribed from the movie by Tara Carreon] Kurt & Courtney [Nick Broomfield] At 8:40 a.m. on April 8, 1994, Kurt Cobain's body was found. He had been killed by a shotgun wound to the head. The verdict was suicide. The medical examiner in Kurt's case knew Courtney well before she got married to Kurt. In fact, they were pretty good friends and used to go out barhopping together. This should be reason for someone else to handle the examination. Shortly after this potential conflict of interest was revealed, he left the King County medical examiner's office and took a job in a small town in Florida. Coincidence? I think not. -- http://www.angelfire.com/nv/boddah/facts.html The Seattle medical examiner who examined Cobain's body, Dr. Nicholas Hartshorne, insisted that all of the evidence pointed to a suicide. However, many have questioned his opinion because he once promoted concerts for Nirvana, to which he replied, "It's leap of faith, that someone who once promoted concerts for bands would now risk his job, prison, and public disgrace, in order to cover up a murder. -

Here Is a Multi-Storey Car Park Next to the Venue on Chapel Street

RAW PROMO Gig Guide SEPT - DEC 2016 THE FLOWERPOT, KING STREET, DERBY DE1 3DZ www.rawpromo.co.uk PEATBOG FAERIES CHINA CRISIS DREADZONE STEVE FORBERT SKINNY MOLLY & Many More... For enquiries on tickets, bookings & the bands CONTACT US: RAW PROMO, PO BOX 5718, DERBY DE21 2YU t : 01332 834438 07887 912030 e : [email protected] w : www.rawpromo.co.uk HOW TO FIND THE FLOWERPOT King Street, Derby DE1 3DZ Tel : 01332 204955 The Flowerpot is situated on the edge of the Derby City Centre on the main A6 road, just around the corner from the Cathedral. There is a multi-storey car park next to the venue on Chapel Street. This is open 24 hours. The Flowerpot is famed for its vast selection of real ales and is now recognised as one of the best medium sized venues in the country. The capacity is 250 if standing and 150 if the show is all seated. If you are travelling a long way, please check that tickets are still available for the show. If you are travelling by bus or train, the main stations are approximately 20 - 30 minutes walk from the venue - just head for the Derby Cathedral and the Flowerpot is 100 yards further on up the road. Accommodation is available at the Flowerpot. There are 7 en-suite rooms. To book a room phone 07707 312095 PLEASE BE AWARE THAT THE FLOWERPOT IS AN OVER 18 ONLY VENUE [Map: the Flowerpot, the multi-storey car park and Derby Cathedral, with routes via the A6, A52, A61, A38 and M1] BUYING TICKETS IN ADVANCE -

The Trombone and Bass Trumpet in Modern Jazz

THE TROMBONE AND BASS TRUMPET IN MODERN JAZZ A study into the harmonic, intervallic and melodic devices present in the improvisations of Elliot Mason [Bones] Research Project for the degree of Honours in Bachelor of Music in Jazz Performance, Elder Conservatorium of Music, The University of Adelaide October TABLE OF CONTENTS: ABSTRACT; 1. INTRODUCTION; 2. INTERVALS; 3. HARMONIC LANGUAGE; 4. APPLICATION IN STANDARD HARMONY; 5. MELODIC AND MOTIVIC DEVELOPMENT; 6. SUMMARY; 7. REFERENCES ABSTRACT This study will dissect and analyse the improvisations of bass trumpeter and trombonist Elliot Mason. The first objective -

December 1983

Cover Photo by Ebet Roberts FEATURES CARL PALMER Despite his many years in the business, Carl Palmer has managed to remain enthusiastic and innovative in his approach to music. Here, he discusses such topics as the differences between being a member of Asia and being the drummer with Emerson, Lake & Palmer, and his approach to drum solos. by Rick Mattingly (Photo by Ebet Roberts) SIMON KIRKE First coming to prominence with the group Free, and then moving on to Bad Company, Simon Kirke has established himself as one of those drummers who has been a role model for other drummers. He talks about his background, his drumming, and his current activities. by Simon Goodwin (Photo by Ebet Roberts) ANTHONY J. CIRONE A percussionist with the San Francisco Symphony, a teacher at San Jose State College, and a composer of percussion music, Cirone discusses his classical training at Juilliard, his teaching methods, careers in music today, and his personal philosophy for leading a well-integrated and diverse life-style. by Rick Mattingly DRUM COMPUTERS A Comparative Look by Bob Saydlowski, Jr. J.R. MITCHELL Creative Survival by Scott K. Fish (Photo by Greg Toland) COLUMNS PORTRAITS Kwaku Dadey STRICTLY TECHNIQUE Focus on Bass Drum by Paul R. -

History on My Arms Iggy Pop Llik Your Idols

DAGGER DVD REVIEWS! http://www.daggerzine.com/reviews_dvd.html 11.29.09 History On My Arms WITH DEE DEE RAMONE - (MVD)- Apparently this interview with Dee Dee was initially part of a Johnny Thunders documentary (done by Lech Kowalski, as was this) but they decided it could stand on its own as a separate release. This has 3 segments: one is History on My Arms, one is Vom in Paris (not about the old Richard Meltzer band) and the third is Hey is Dee Dee Home. It also includes a fairly boring (extra) disc called Dee Dee Blues, with him just noodling on the guitar, that I could have done without. But the main interview on this is pretty interesting, basically about his love/hate relationship with Johnny Thunders and when/how he got each of his tattoos. It seems like every other sentence ends with “…and then we scored some dope” or “…and then we got high.” Dee Dee doesn’t romanticize the drug life; in fact, it seems as if he realizes how sad/pathetic it all is, but it seems like every time he hung out with Thunders (who he eventually tried to avoid and then ended up hating after a fateful trip to Paris to meet up with Stiv Bators) he would go back to using. The main interview is occasionally annoying as it keeps switching back to other interviews and him doing more noodling on guitar, but that said, the main interview was pretty fascinating and the one part on here that you need to see.