Outlook on Operating Systems

Total pages: 16; file type: PDF; size: 1020 KB

Recommended publications

Assessment of Open Source GIS Software for Water Resources Management in Developing Countries

Assessment of Open Source GIS Software for Water Resources Management in Developing Countries
Daoyi Chen, Department of Engineering, University of Liverpool; César Carmona-Moreno, EU Joint Research Centre; Andrea Leone, Department of Engineering, University of Liverpool; Shahriar Shams, Department of Engineering, University of Liverpool. EUR 23705 EN, 2008.

The mission of the Institute for Environment and Sustainability is to provide scientific-technical support to the European Union's policies for the protection and sustainable development of the European and global environment.

European Commission, Joint Research Centre, Institute for Environment and Sustainability. Contact information: Cesar Carmona-Moreno. Address: Via Fermi, T440, I-21027 Ispra (VA), Italy. Tel.: +39 0332 78 9654. Fax: +39 0332 78 9073. http://ies.jrc.ec.europa.eu/ http://www.jrc.ec.europa.eu/

Legal Notice: Neither the European Commission nor any person acting on behalf of the Commission is responsible for the use which might be made of this publication.

Europe Direct is a service to help you find answers to your questions about the European Union. Freephone number (*): 00 800 6 7 8 9 10 11. (*) Certain mobile telephone operators do not allow access to 00 800 numbers or these calls may be billed.

A great deal of additional information on the European Union is available on the Internet. It can be accessed through the Europa server http://europa.eu/

JRC 49291, EUR 23705 EN, ISBN 978-92-79-11229-4, ISSN 1018-5593, DOI 10.2788/71249. Luxembourg: Office for Official Publications of the European Communities. © European Communities, 2008. Reproduction is authorised provided the source is acknowledged. Printed in Italy.

Table of Contents: Introduction; 1. …

Oracle Database Administrator's Reference for UNIX-Based Operating Systems

Oracle® Database Administrator's Reference 10g Release 2 (10.2) for UNIX-Based Operating Systems
B15658-06, March 2009
Copyright © 2006, 2009, Oracle and/or its affiliates. All rights reserved.

Primary Author: Brintha Bennet
Contributing Authors: Kevin Flood, Pat Huey, Clara Jaeckel, Emily Murphy, Terri Winters, Ashmita Bose
Contributors: David Austin, Subhranshu Banerjee, Mark Bauer, Robert Chang, Jonathan Creighton, Sudip Datta, Padmanabhan Ganapathy, Thirumaleshwara Hasandka, Joel Kallman, George Kotsovolos, Richard Long, Rolly Lv, Padmanabhan Manavazhi, Matthew Mckerley, Sreejith Minnanghat, Krishna Mohan, Rajendra Pingte, Hanlin Qian, Janelle Simmons, Roy Swonger, Lyju Vadassery, Douglas Williams

This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing.

If this software or related documentation is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, the following notice is applicable:

U.S. GOVERNMENT RIGHTS Programs, software, databases, and related documentation and technical data delivered to U.S. …

COMP 2021 Slides

COMP 2021 Unix and Script Programming

Course Information
Lecture: Tue 11:00-12:50, G009A, CYT Building
Instructor: Dr. Cindy LI, Room 3535 (Lift 25/26)
Course website: http://course.cse.ust.hk/comp2021/
Labs: Lab 1: Thu 09:00-10:50, Room 4214 (Lift 19)
TAs: Mr. Chang Zhang Yu

Course Objectives
Have a general appreciation of the Unix operating system and its environment. Get familiar with shell basics, file structure, and everyday commands. Be able to write simple shell programs for text/data manipulation and process control. Understand regular expressions and use them in Unix utilities and file manipulation.
Script Programming Skills: understand the basics of script programming languages such as PHP and JavaScript, including variables and arrays, control flow, I/O, and functions.
Web Programming Skills: have a working knowledge of the common HTML commands and CSS; understand how to build web programs using CGI programming in languages such as PHP and JavaScript.

Reference & Grading Scheme
Lab attendance (5%), homework assignments (20%), project and presentation (35%), final exam (40%).

Comp2021 Project & Presentation
Propose, implement, and document your own custom application. Choose your own topic that includes Unix, shell scripting, or PHP/JavaScript. Work in groups of normally 2 people. Presentations will be in the last few lectures of the semester. The tentative format for the project is a 10-minute presentation (like a short conference presentation) followed by 5 minutes for Q&A (while the next group sets up). Upload your final submission to CASS by the last day of the Spring term: a softcopy of your PowerPoint slides, a softcopy of a short paper (4 pages) summarizing your project, and your source code.

Introduction to Unix
*nix Systems
What is UNIX? UNIX is an Operating System (OS). …
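The objective of using regular expressions in Unix utilities is easiest to see in `grep`, which prints the lines of its input that match a pattern. Below is a minimal Python sketch of grep-style line filtering with options that behave like `-i` and `-v`. The function and its parameter names are illustrative assumptions, not part of the course material, and Python's `re` syntax is close to, but not identical with, the POSIX regular expressions the real `grep` uses.

```python
import re
from typing import Iterable, Iterator


def grep(pattern: str, lines: Iterable[str], ignore_case: bool = False,
         invert: bool = False) -> Iterator[str]:
    """Yield the lines that match `pattern` (or, with invert=True, that do not)."""
    flags = re.IGNORECASE if ignore_case else 0   # similar to grep -i
    regex = re.compile(pattern, flags)
    for line in lines:
        matched = regex.search(line) is not None  # match anywhere in the line
        if matched != invert:                     # invert=True is similar to grep -v
            yield line
```

For example, `grep("^b", open("words.txt"), ignore_case=True)` yields the lines of a hypothetical `words.txt` that start with b or B, much like `grep -i '^b' words.txt`.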

Summary of UNIX Commands

Summary of UNIX Commands - version 3.2
© 1994, 1995, 1996 Budi Rahardjo

Disclaimer: The author and publisher make no warranty of any kind, expressed or implied, including the warranties of merchantability or fitness for a particular purpose, with regard to the use of commands contained in this reference card. This reference card is provided "as is". The author and publisher shall not be liable for damage in connection with, or arising out of the furnishing, performance, or the use of these commands or the associated descriptions.

This is a summary of UNIX commands available on most UNIX systems. Depending on the configuration, some of the commands may be unavailable on your site. These commands may be a commercial program, freeware or public domain program that must be installed separately, or probably just not in your search path. Check your local documentation or manual pages for more details (e.g. man programname). This reference card, obviously, cannot describe all UNIX commands in detail, but instead I picked commands that are useful and interesting from a user's point of view.

Conventions: bold represents a program name; dirname represents a directory name as an argument; filename represents a file name as an argument.

Sections: 1. Directory and file commands; …; 8. Usenet news; 9. File transfer and remote access; 10. X window; 11. Graph, plot, image processing tools; 12. Information systems; 13. Networking programs; 14. Programming tools; 15. Text processors, typesetters, and previewers; 16. Wordprocessors; 17. Spreadsheets; 18. Databases.

1. Directory and file commands
bdf: display disk space (HP-UX). See also df.
cat filename: display the content of file filename.
cd [dirname] …
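As an illustration of what two entries on the card do, here is a minimal Python sketch of `cat filename` and of the disk-space report that `bdf` (HP-UX) and `df` print. It is not how those utilities are implemented: the `cat` function here only streams each file's bytes to standard output, and the `df` function summarizes just the one filesystem that holds `path` (an assumed default of the current directory), whereas the real command lists every mounted filesystem by default. Both functions are defined here for illustration; only `shutil.copyfileobj` and `shutil.disk_usage` come from the standard library.

```python
import shutil
import sys


def cat(*filenames, out=None):
    """Copy each file's bytes to `out` in order, like `cat file1 file2 ...`."""
    if out is None:
        out = sys.stdout.buffer          # binary stdout, so any file type is copied as-is
    for name in filenames:
        with open(name, "rb") as f:
            shutil.copyfileobj(f, out)   # stream in chunks instead of reading it all


def df(path="."):
    """Summarize disk space for the filesystem containing `path`, in 1K blocks."""
    usage = shutil.disk_usage(path)      # named tuple of bytes: total, used, free
    kb = 1024
    pct = 100 * usage.used / usage.total if usage.total else 0.0
    return (f"{path}: total={usage.total // kb}K "
            f"used={usage.used // kb}K free={usage.free // kb}K ({pct:.0f}% used)")
```

Calling `cat("notes.txt")` on a hypothetical file prints it, and `print(df("/"))` gives a one-line summary comparable to one row of `df -k /`.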

The Unix™ Language Family

Chapter 5 of Using Computers in Linguistics: A Practical Guide, Routledge, 1998. Edited by John Lawler and Helen Aristar Dry. (Terms in bold italics are to be found in the Glossary, references in the Bibliography. Both are available online, along with other resources; see page 24 below for URLs.)

John M. Lawler, Linguistics Department and Residential College, University of Michigan

1. General

The Unix™ operating system is used on a wide variety of computers (including but not limited to most workstation-class machines made by Sun, Hewlett-Packard, MIPS, NeXT, DEC, IBM, and many others), in one or another version. If one is around computers almost anywhere, one is within reach of a computer running Unix, especially these days, when Linux, a free version of Unix, may be found on many otherwise ordinary-looking PCs. Indeed, more often than not Unix is the only choice available for many computing tasks like E-mail, number-crunching, or running file servers and Web sites.

One of the reasons for the ubiquity of Unix is that it is the most influential operating system in history; it has strongly affected, and contributed features and development philosophy to, almost all other operating systems. Understanding any kind of computing without knowing anything about Unix is not unlike trying to understand how English works without knowing anything about the Indo-European family: that is, it's not impossible, but it's far more difficult than it ought to be, because there appears to be too much unexplainable arbitrariness. In this chapter I provide a linguistic sketch of the Unix operating system and its family of "languages".

From DOS/Windows to Linux HOWTO

From DOS/Windows to Linux HOWTO
By Guido Gonzato, ggonza at tin.it

Table of Contents
1. Introduction
2. For the Impatient
3. Meet bash
4. Files and Programs
5. Using Directories
6. Floppies, Hard Disks, and the Like
7. What About Windows?
8. Tailoring the System
9. Networking: …

Unix Account

USING YOUR FAS UNIX ACCOUNT
Copyright © 1999 The President and Fellows of Harvard College. All Rights Reserved.

TABLE OF CONTENTS
COMPUTING AT HARVARD
  An Introduction to Unix
  The FAS Unix Systems
CONNECTING TO YOUR FAS UNIX ACCOUNT
SECURITY & PRIVACY ON FAS UNIX SYSTEMS
  Your FAS Unix Account's Password
USING THE UNIX COMMAND SHELL
  Managing Multiple Programs under Unix
FILES AND DIRECTORIES IN UNIX
  Your Home Directory
  Your Home Directory's Disk Space Quota
  Using ls to List Files in Your Home Directory
  Managing Your Files …

Introduction to Linux

Introduction to Linux: A Hands on Guide
Machtelt Garrels, CoreSequence.com
Version 1.8, last updated 20030916 Edition

Table of Contents
Introduction
  1. Why this guide?
  2. Who should read this book?
  3. New versions of this guide
  4. Revision History
  5. Contributions
  6. Feedback
  7. Copyright information
  8. What do you need?
  9. Conventions used …

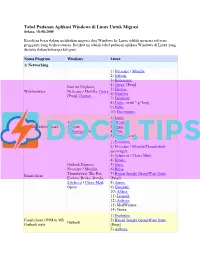

List of Linux Replacements for Windows Software

Table of Windows Application Equivalents in Linux for Migration
Tuesday, 18-08-2009

A major difficulty in migrating from Windows to Linux is finding suitable replacement software. Below is a table of Linux equivalents for Windows applications, arranged in several categories. Each entry gives the program type, the Windows programs, and their Linux equivalents.

1) Networking.

Web browser
Windows: Internet Explorer, Netscape / Mozilla, Opera [Prop], Firefox, ...
Linux: 1) Netscape / Mozilla, 2) Galeon, 3) Konqueror, 4) Opera [Prop], 5) Firefox, 6) Nautilus, 7) Epiphany, 8) Links (with "-g" key), 9) Dillo, 10) Encompass

Console web browser
Windows: 1) Links, 2) Lynx, 3) Xemacs + w3
Linux: 1) Links, 2) ELinks, 3) Lynx, 4) w3m, 5) Xemacs + w3

Email client
Windows: Outlook Express, Netscape / Mozilla, Thunderbird, The Bat, Eudora, Becky, Datula, Sylpheed / Claws Mail, Opera
Linux: 1) Evolution, 2) Netscape / Mozilla / Thunderbird messenger, 3) Sylpheed / Claws Mail, 4) Kmail, 5) Gnus, 6) Balsa, 7) Bynari Insight GroupWare Suite [Prop], 8) Arrow, 9) Gnumail, 10) Althea, 11) Liamail, 12) Aethera, 13) MailWarrior, 14) Opera

Email client / PIM in MS Outlook style
Windows: Outlook
Linux: 1) Evolution, 2) Bynari Insight GroupWare Suite [Prop], 3) Aethera, 4) Sylpheed, 5) Claws Mail

Email client in The Bat style
Windows: The Bat
Linux: 1) Sylpheed, 2) Claws Mail, 3) Kmail, 4) Gnus, 5) Balsa

Console email client
Windows: Mutt [de], Pine, Pegasus, Emacs
Linux: 1) Pine [NF], 2) Mutt, 3) Gnus, 4) Elm, 5) Emacs

News reader
Windows: 1) Agent [Prop], 2) Free Agent, 3) Xnews, 4) Outlook, 5) Netscape / Mozilla, 6) Opera [Prop], 7) Sylpheed / Claws Mail
Linux: 1) Knode, 2) Pan, 3) NewsReader, 4) Netscape / Mozilla Thunderbird, 5) Opera [Prop], 6) Sylpheed / Claws Mail; Console: 7) Pine [NF], 8) Mutt …

Baumann: The Multikernel: A New OS Architecture for Scalable Multicore Systems

The Multikernel: A new OS architecture for scalable multicore systems
Andrew Baumann*, Paul Barham†, Pierre-Evariste Dagand‡, Tim Harris†, Rebecca Isaacs†, Simon Peter*, Timothy Roscoe*, Adrian Schüpbach*, and Akhilesh Singhania*
*Systems Group, ETH Zurich; †Microsoft Research, Cambridge; ‡ENS Cachan Bretagne

Abstract: Commodity computer systems contain more and more processor cores and exhibit increasingly diverse architectural tradeoffs, including memory hierarchies, interconnects, instruction sets and variants, and IO configurations. Previous high-performance computing systems have scaled in specific cases, but the dynamic nature of modern client and server workloads, coupled with the impossibility of statically optimizing an OS for all workloads and hardware variants pose serious challenges for operating system structures.

We argue that the challenge of future multicore hardware is best met by embracing the networked nature of the machine, rethinking OS architecture using ideas from distributed systems. We investigate a new OS structure, the multikernel, that treats the machine as a network of independent cores, assumes no inter-core sharing at the lowest level, and moves traditional OS functionality to a distributed system of processes that communicate via message-passing.

Figure 1: The multikernel model (App; OS node; state replica; agreement algorithms; async messages; arch-specific code; heterogeneous cores: x86, x64, ARM, GPU; interconnect).

Such hardware, while in some regards similar to earlier parallel systems, is new in the general-purpose computing domain. We increasingly find multicore systems in a variety of environments ranging from personal computing platforms to data centers, with workloads that are less predictable, and often more OS-intensive, than traditional …
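To make the message-passing idea concrete, here is a minimal Python sketch; it is not code from the paper and not its protocol. Each hypothetical `OSNode` thread stands in for a per-core OS node with its own state replica, and updates reach a replica only as messages on that node's queue. A single `Sequencer` that stamps and broadcasts every update is a deliberately simple stand-in for the agreement algorithms labelled in Figure 1, and it assumes only one thread proposes updates. Python threads share one address space, so the sketch imitates the no-sharing discipline rather than enforcing it.

```python
import queue
import threading


class OSNode(threading.Thread):
    """Hypothetical per-core OS node: owns a private state replica and an inbox."""

    def __init__(self, node_id: int):
        super().__init__(daemon=True)
        self.node_id = node_id
        self.inbox = queue.Queue()   # asynchronous message channel into this node
        self.replica = {}            # local copy of OS state, never written by others
        self.applied = []            # sequence numbers applied, in order

    def run(self) -> None:
        while True:
            msg = self.inbox.get()
            if msg is None:          # shutdown message
                return
            seq, key, value = msg
            self.replica[key] = value    # update only this node's replica
            self.applied.append(seq)


class Sequencer:
    """Stand-in for an agreement protocol: gives each update a global sequence
    number and sends it to every node. Assumes a single proposing thread."""

    def __init__(self, nodes):
        self.nodes = nodes
        self.next_seq = 0

    def propose(self, key, value) -> None:
        self.next_seq += 1
        for node in self.nodes:
            node.inbox.put((self.next_seq, key, value))  # a message, not a shared write


def run_demo(num_cores: int = 4):
    nodes = [OSNode(i) for i in range(num_cores)]
    for node in nodes:
        node.start()
    sequencer = Sequencer(nodes)
    sequencer.propose("next_pid", 100)
    sequencer.propose("next_pid", 101)
    for node in nodes:
        node.inbox.put(None)         # FIFO queue: arrives after both updates
    for node in nodes:
        node.join()
    return [node.replica for node in nodes]
```

Because every node receives the same messages in the same order, `run_demo(4)` ends with four identical replicas, each holding `next_pid` = 101. A real multikernel would replace the central sequencer with a distributed agreement protocol, since a single sequencer is exactly the kind of shared bottleneck the design tries to avoid.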

USENET History (1)

> The first USENET
  – In 1979
  – Tom Truscott, Jim Ellis, Steve Bellovin
  – Initially script-based software
  – Communicated via UUCP over standard phone lines and modems
> The ANEWS version
  – In 1980
  – Rewritten in C
  – Steve Bellovin, Tom Truscott, Steve Daniel
> The BNEWS version
  – In 1981
  – Mark Horton, Matt Glickman, Rick Adams
  – Rewrote ANEWS to handle more news traffic
  – Complied with the RFC822 message format

History (2)
> UUCP vs. NNTP
  – UUCP
    • UNIX-to-UNIX Copy
    • Via telephone line
    • Store-and-forward batch
    • Duplicate articles
  – NNTP
    • Network News Transfer Protocol
    • Via TCP/IP connections
    • Sends only required articles

History (3)
> The CNEWS version
  – In 1987
  – Geoff Collyer, Henry Spencer
  – Rewrite of BNEWS to speed up news exchange and processing
> INN (InterNetNews)
  – In 1992
  – Rich Salz
  – NNTP and UUCP support
> DNEWS
  – In 1995
  – Commercial news software developed by NetWin Inc.
  – Handles both IHAVE-style and sucking-style news feeds
    • A sucking-style feed pulls only those newsgroups actively being read

News background – News Article
> Two components
  – Body
  – Header
    • RFC1036
> All USENET news messages must be formatted as valid Internet mail messages (RFC822)
> RFC1036 is more restrictive

Path: netnews2.csie.nctu.edu.tw!not-for-mail
From: Ya-Lin Huang <[email protected]>
Newsgroups: csie.help
Subject: 無法登入ccbsd8
Date: Mon, 28 Mar 2005 06:36:19 +0000 (UTC)
Organization: Computer Science & Information Engineering NCTU
Lines: 3
Sender: Ya-Lin Huang <[email protected]>
Message-ID: <[email protected]>
NNTP-Posting-Host: [email protected]
Mime-Version: 1.0
Content-Type: text/plain; charset=Big5
Content-Transfer-Encoding: 8bit

(The Subject line means "Cannot log in to ccbsd8".)

News background – Newsgroups
> Top-level newsgroups
  – comp, humanities, misc, news, rec, sci, soc, talk
  – A new sub-newsgroup is created within the original newsgroup when articles get too specific
    • Creating a new newsgroup may require you to submit a proposal and hold a vote.
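The header rules above can be checked mechanically. The following is a minimal Python sketch, not taken from any news server: it parses an article with the standard library's `email` module (news articles use the RFC 822 header format), reports which headers from an assumed required set are missing, splits the `!`-separated `Path` into its entries, and keeps a hypothetical history of Message-IDs so that a server can refuse an article it already holds, the duplicate problem of UUCP batch feeds. The sample article, host names, and the `History` class are illustrative; the required set (From, Date, Newsgroups, Subject, Message-ID, Path) is assumed from RFC 1036 and worth checking against the RFC itself.

```python
import email
from email.message import Message

# Assumed required header set for news articles (check RFC 1036, section 2.1).
REQUIRED_HEADERS = ("From", "Date", "Newsgroups", "Subject", "Message-ID", "Path")


def parse_article(raw: str) -> Message:
    """Parse an RFC 822-style article: headers, a blank line, then the body."""
    return email.message_from_string(raw)


def missing_headers(article: Message) -> list:
    """Return the required headers absent from the article (names are case-insensitive)."""
    return [name for name in REQUIRED_HEADERS if article[name] is None]


def relay_hops(article: Message) -> list:
    """Split the '!'-separated Path header; the most recent relay comes first."""
    return article["Path"].split("!")


class History:
    """Hypothetical server history of the Message-IDs already stored."""

    def __init__(self):
        self.seen = set()

    def offer(self, article: Message) -> bool:
        """Accept an offered article only if its Message-ID has not been seen."""
        message_id = article["Message-ID"]
        if message_id in self.seen:
            return False             # duplicate: already stored, do not take it again
        self.seen.add(message_id)
        return True


SAMPLE = """Path: news.example.org!not-for-mail
From: A. User <user@example.org>
Newsgroups: example.test
Subject: test post
Date: Mon, 28 Mar 2005 06:36:19 +0000
Message-ID: <1@news.example.org>

Body text.
"""
```

Here `History().offer(parse_article(SAMPLE))` returns True the first time and False for a second copy. In a real transfer the Message-ID would be checked before the article body is sent, which is what lets NNTP send only the articles a server actually needs; this sketch checks after parsing a whole article for simplicity.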