ATLAS Installation Guide

Recommended publications

-

IPS Signature Release Note V9.17.79

SOPHOS IPS Signature Update Release Notes Version : 9.17.79 Release Date : 19th January 2020 IPS Signature Update Release Information Upgrade Applicable on IPS Signature Release Version 9.17.78 CR250i, CR300i, CR500i-4P, CR500i-6P, CR500i-8P, CR500ia, CR500ia-RP, CR500ia1F, CR500ia10F, CR750ia, CR750ia1F, CR750ia10F, CR1000i-11P, CR1000i-12P, CR1000ia, CR1000ia10F, CR1500i-11P, CR1500i-12P, CR1500ia, CR1500ia10F Sophos Appliance Models CR25iNG, CR25iNG-6P, CR35iNG, CR50iNG, CR100iNG, CR200iNG/XP, CR300iNG/XP, CR500iNG- XP, CR750iNG-XP, CR2500iNG, CR25wiNG, CR25wiNG-6P, CR35wiNG, CRiV1C, CRiV2C, CRiV4C, CRiV8C, CRiV12C, XG85 to XG450, SG105 to SG650 Upgrade Information Upgrade type: Automatic Compatibility Annotations: None Introduction The Release Note document for IPS Signature Database Version 9.17.79 includes support for the new signatures. The following sections describe the release in detail. New IPS Signatures The Sophos Intrusion Prevention System shields the network from known attacks by matching the network traffic against the signatures in the IPS Signature Database. These signatures are developed to significantly increase detection performance and reduce the false alarms. Report false positives at [email protected], along with the application details. January 2020 Page 2 of 245 IPS Signature Update This IPS Release includes Two Thousand, Seven Hundred and Sixty Two(2762) signatures to address One Thousand, Nine Hundred and Thirty Eight(1938) vulnerabilities. New signatures are added for the following vulnerabilities: Name CVE–ID -

Ubuntu Kung Fu

Prepared exclusively for Alison Tyler Download at Boykma.Com What readers are saying about Ubuntu Kung Fu Ubuntu Kung Fu is excellent. The tips are fun and the hope of discov- ering hidden gems makes it a worthwhile task. John Southern Former editor of Linux Magazine I enjoyed Ubuntu Kung Fu and learned some new things. I would rec- ommend this book—nice tips and a lot of fun to be had. Carthik Sharma Creator of the Ubuntu Blog (http://ubuntu.wordpress.com) Wow! There are some great tips here! I have used Ubuntu since April 2005, starting with version 5.04. I found much in this book to inspire me and to teach me, and it answered lingering questions I didn’t know I had. The book is a good resource that I will gladly recommend to both newcomers and veteran users. Matthew Helmke Administrator, Ubuntu Forums Ubuntu Kung Fu is a fantastic compendium of useful, uncommon Ubuntu knowledge. Eric Hewitt Consultant, LiveLogic, LLC Prepared exclusively for Alison Tyler Download at Boykma.Com Ubuntu Kung Fu Tips, Tricks, Hints, and Hacks Keir Thomas The Pragmatic Bookshelf Raleigh, North Carolina Dallas, Texas Prepared exclusively for Alison Tyler Download at Boykma.Com Many of the designations used by manufacturers and sellers to distinguish their prod- ucts are claimed as trademarks. Where those designations appear in this book, and The Pragmatic Programmers, LLC was aware of a trademark claim, the designations have been printed in initial capital letters or in all capitals. The Pragmatic Starter Kit, The Pragmatic Programmer, Pragmatic Programming, Pragmatic Bookshelf and the linking g device are trademarks of The Pragmatic Programmers, LLC. -

Linux on the Road

Linux on the Road Linux with Laptops, Notebooks, PDAs, Mobile Phones and Other Portable Devices Werner Heuser <wehe[AT]tuxmobil.org> Linux Mobile Edition Edition Version 3.22 TuxMobil Berlin Copyright © 2000-2011 Werner Heuser 2011-12-12 Revision History Revision 3.22 2011-12-12 Revised by: wh The address of the opensuse-mobile mailing list has been added, a section power management for graphics cards has been added, a short description of Intel's LinuxPowerTop project has been added, all references to Suspend2 have been changed to TuxOnIce, links to OpenSync and Funambol syncronization packages have been added, some notes about SSDs have been added, many URLs have been checked and some minor improvements have been made. Revision 3.21 2005-11-14 Revised by: wh Some more typos have been fixed. Revision 3.20 2005-11-14 Revised by: wh Some typos have been fixed. Revision 3.19 2005-11-14 Revised by: wh A link to keytouch has been added, minor changes have been made. Revision 3.18 2005-10-10 Revised by: wh Some URLs have been updated, spelling has been corrected, minor changes have been made. Revision 3.17.1 2005-09-28 Revised by: sh A technical and a language review have been performed by Sebastian Henschel. Numerous bugs have been fixed and many URLs have been updated. Revision 3.17 2005-08-28 Revised by: wh Some more tools added to external monitor/projector section, link to Zaurus Development with Damn Small Linux added to cross-compile section, some additions about acoustic management for hard disks added, references to X.org added to X11 sections, link to laptop-mode-tools added, some URLs updated, spelling cleaned, minor changes. -

Cygwin User's Guide

Cygwin User’s Guide Cygwin User’s Guide ii Copyright © Cygwin authors Permission is granted to make and distribute verbatim copies of this documentation provided the copyright notice and this per- mission notice are preserved on all copies. Permission is granted to copy and distribute modified versions of this documentation under the conditions for verbatim copying, provided that the entire resulting derived work is distributed under the terms of a permission notice identical to this one. Permission is granted to copy and distribute translations of this documentation into another language, under the above conditions for modified versions, except that this permission notice may be stated in a translation approved by the Free Software Foundation. Cygwin User’s Guide iii Contents 1 Cygwin Overview 1 1.1 What is it? . .1 1.2 Quick Start Guide for those more experienced with Windows . .1 1.3 Quick Start Guide for those more experienced with UNIX . .1 1.4 Are the Cygwin tools free software? . .2 1.5 A brief history of the Cygwin project . .2 1.6 Highlights of Cygwin Functionality . .3 1.6.1 Introduction . .3 1.6.2 Permissions and Security . .3 1.6.3 File Access . .3 1.6.4 Text Mode vs. Binary Mode . .4 1.6.5 ANSI C Library . .4 1.6.6 Process Creation . .5 1.6.6.1 Problems with process creation . .5 1.6.7 Signals . .6 1.6.8 Sockets . .6 1.6.9 Select . .7 1.7 What’s new and what changed in Cygwin . .7 1.7.1 What’s new and what changed in 3.2 . -

Buildsystems and What the Heck for We Actually Use the Autotools

Buildsystems and what the heck for we actually use the autotools Tom´aˇsChv´atal SUSE Packagers team 2013/07/19 Introduction Who the hell is Tom´aˇsChv´atal • SUSE Employee since 2011 - Team lead of packagers team • Packager of Libreoffice and various other stuff for openSUSE • openSUSE promoter and volunteer • Gentoo developer since fall 2008 3 of 37 Autotools process Complete autotools process 5 of 37 Make Why not just a sh script? Always recompiling everything is a waste of time and CPU power 7 of 37 Plain makefile example CC ?= @CC@ CFLAGS ?= @CFLAGS@ PROGRAM = examplebinary OBJ = main.o parser.o output.o $ (PROGRRAM) : $ (OBJ) $ (CC) $ (LDFLAGS) −o $@ $^ main.o: main.c common.h parser.o: parser.c common.h output.o: output.c common.h setup.h i n s t a l l : $ (PROGRAM) # You have to use tabs here $(INSTALL) $(PROGRAM) $(BINDIR) c l e a n : $ (RM) $ (OBJ) 8 of 37 Variables in Makefiles • Variables expanded using $(), ie $(VAR) • Variables are assigned like in sh, ie VAR=value • $@ current target • $<the first dependent file • $^all dependent files 9 of 37 Well nice, but why autotools then • Makefiles can get complex fast (really unreadable) • Lots of details to keep in mind when writing, small mistakes happen fast • Does not make dependencies between targets really easier • Automake gives you automatic tarball creation (make distcheck) 10 of 37 Autotools Simplified autotools process 12 of 37 Autoconf/configure sample AC INIT(example , 0.1, [email protected]) AC CONFIG HEADER([ config .h]) AC PROG C AC PROG CPP AC PROG INSTALL AC HEADER STDC AC CHECK HEADERS([string.h unistd.h limits.h]) AC CONFIG FILES([ Makefile doc/Makefile src/Makefile]) AC OUTPUT 13 of 37 Autoconf syntax • The M4 syntax is quite weird on the first read • It is not interpreted, it is text substitution machine • Lots of quoting is needed, if in doubt add more [] • Everything that does or might contain whitespace or commas has to be quoted • Custom autoconf M4 macros are almost unreadable 14 of 
37 Automake bin PROGRAMS = examplebinary examplebinary SOURCES = n s r c /main . -

Assessmentof Open Source GIS Software for Water Resources

Assessment of Open Source GIS Software for Water Resources Management in Developing Countries Daoyi Chen, Department of Engineering, University of Liverpool César Carmona-Moreno, EU Joint Research Centre Andrea Leone, Department of Engineering, University of Liverpool Shahriar Shams, Department of Engineering, University of Liverpool EUR 23705 EN - 2008 The mission of the Institute for Environment and Sustainability is to provide scientific-technical support to the European Union’s Policies for the protection and sustainable development of the European and global environment. European Commission Joint Research Centre Institute for Environment and Sustainability Contact information Cesar Carmona-Moreno Address: via fermi, T440, I-21027 ISPRA (VA) ITALY E-mail: [email protected] Tel.: +39 0332 78 9654 Fax: +39 0332 78 9073 http://ies.jrc.ec.europa.eu/ http://www.jrc.ec.europa.eu/ Legal Notice Neither the European Commission nor any person acting on behalf of the Commission is responsible for the use which might be made of this publication. Europe Direct is a service to help you find answers to your questions about the European Union Freephone number (*): 00 800 6 7 8 9 10 11 (*) Certain mobile telephone operators do not allow access to 00 800 numbers or these calls may be billed. A great deal of additional information on the European Union is available on the Internet. It can be accessed through the Europa server http://europa.eu/ JRC [49291] EUR 23705 EN ISBN 978-92-79-11229-4 ISSN 1018-5593 DOI 10.2788/71249 Luxembourg: Office for Official Publications of the European Communities © European Communities, 2008 Reproduction is authorised provided the source is acknowledged Printed in Italy Table of Content Introduction............................................................................................................................4 1. -

Bringing Windows to the Linux Desktop

BRINGING WINDOWS TO THE LINUX DESKTOP In a perfect world, the operating system that you use wouldn't matter. We're moving towards that with the growing use and availability of Web applications. But the day when the operating system is irrelevant is still awhile off. Right now, though, Windows is the dominant operating system. And there are no Linux versions of the popular and most widely-used applications for Windows -- programs like Microsoft Office and Adobe Photoshop. If you're a Linux user and you need to play nicely with the Windows world, conventional wisdom dictates that you either need a separate desktop computer or laptop computer that's running Windows or a system that dual boots. If you're adventurous, you can install a virtualization tool like VM Ware or Virtual Box. This software recreates Windows on your Linux box. The only problem with those solutions is that they need a lot of hard drive space and CPU. There is another choice: fake it. Faking it What do I mean by faking it? Using software that fools Windows applications into believing that they're actually running on Windows and not on Linux. It does this by creating what's called anapplication layer between the Windows program and Linux. The application layer simulates the Windows environment -- calls to the operating system, DLLs, drivers, and the like. Unlike the other solutions mentioned earlier, using an application layer only adds a bit of extra load to your computer -- a few megabytes of disk space and a couple of megabytes of RAM. -

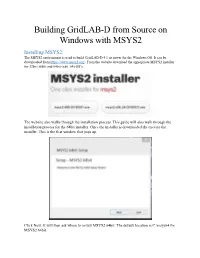

Building Gridlab-D from Source on Windows with MSYS2 Installing MSYS2: the MSYS2 Environment Is Used to Build Gridlab-D 4.1 Or Newer for the Windows OS

Building GridLAB-D from Source on Windows with MSYS2 Installing MSYS2: The MSYS2 environment is used to build GridLAB-D 4.1 or newer for the Windows OS. It can be downloaded from https://www.msys2.org/. From the website download the appropriate MSYS2 installer for 32bit (i686) and 64bit (x86_64) OS’s. The website also walks through the installation process. This guide will also walk through the installation process for the 64bit installer. Once the installer is downloaded the execute the installer. This is the first window that pops up. Click Next. It will then ask where to install MSYS2 64bit. The default location is C:\msys64 for MSYS2 64bit. Once the location has been specified click Next. Then it will ask where to create the program’s shortcuts. Use an existing one or create a new name. Once a destination is chosen click Next. At this point the installer begins to install MSYS2 onto the computer. Once installation is complete click Next. Then click Finish and installation is successful. Setting Up the MSYS2 Environment: To start the MSYS2 environment start msys2.exe. A window like below should pop up. As can be seen MSYS2 provides a Linux-like command terminal and environment for building GNU compliant C/C++ executables for Windows OS’s. When Running the MSYS2 environment for the first time updates will need to be performed. To perform update run $ pacman -Syuu Type “y” and hit enter to continue. If you get a line like the following: Simply close the MSYS2 window and restart the MSYS2 executable. -

Project-Team CONVECS

IN PARTNERSHIP WITH: Institut polytechnique de Grenoble Université Joseph Fourier (Grenoble) Activity Report 2016 Project-Team CONVECS Construction of verified concurrent systems IN COLLABORATION WITH: Laboratoire d’Informatique de Grenoble (LIG) RESEARCH CENTER Grenoble - Rhône-Alpes THEME Embedded and Real-time Systems Table of contents 1. Members :::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::: 1 2. Overall Objectives :::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::: 2 3. Research Program :::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::: 2 3.1. New Formal Languages and their Concurrent Implementations2 3.2. Parallel and Distributed Verification3 3.3. Timed, Probabilistic, and Stochastic Extensions4 3.4. Component-Based Architectures for On-the-Fly Verification4 3.5. Real-Life Applications and Case Studies5 4. Application Domains ::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::5 5. New Software and Platforms :::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::: 6 5.1. The CADP Toolbox6 5.2. The TRAIAN Compiler8 6. New Results :::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::: 8 6.1. New Formal Languages and their Implementations8 6.1.1. Translation from LNT to LOTOS8 6.1.2. Translation from LOTOS NT to C8 6.1.3. Translation from LOTOS to Petri nets and C9 6.1.4. NUPN 9 6.1.5. Translation from BPMN to LNT 10 6.1.6. Translation from GRL to LNT 10 6.1.7. Translation of Term Rewrite Systems 10 6.2. Parallel and Distributed Verification 11 6.2.1. Distributed State Space Manipulation 11 6.2.2. Distributed Code Generation for LNT 11 6.2.3. Distributed Resolution of Boolean Equation Systems 11 6.2.4. Stability of Communicating Systems 12 6.2.5. Debugging of Concurrent Systems 12 6.3. Timed, Probabilistic, and Stochastic Extensions 12 6.4. Component-Based Architectures for On-the-Fly Verification 13 6.4.1. -

Linux Networking Cookbook.Pdf

Linux Networking Cookbook ™ Carla Schroder Beijing • Cambridge • Farnham • Köln • Paris • Sebastopol • Taipei • Tokyo Linux Networking Cookbook™ by Carla Schroder Copyright © 2008 O’Reilly Media, Inc. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (safari.oreilly.com). For more information, contact our corporate/institutional sales department: (800) 998-9938 or [email protected]. Editor: Mike Loukides Indexer: John Bickelhaupt Production Editor: Sumita Mukherji Cover Designer: Karen Montgomery Copyeditor: Derek Di Matteo Interior Designer: David Futato Proofreader: Sumita Mukherji Illustrator: Jessamyn Read Printing History: November 2007: First Edition. Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of O’Reilly Media, Inc. The Cookbook series designations, Linux Networking Cookbook, the image of a female blacksmith, and related trade dress are trademarks of O’Reilly Media, Inc. Java™ is a trademark of Sun Microsystems, Inc. .NET is a registered trademark of Microsoft Corporation. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O’Reilly Media, Inc. was aware of a trademark claim, the designations have been printed in caps or initial caps. While every precaution has been taken in the preparation of this book, the publisher and author assume no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein. -

Outlook on Operating Systems

COVER FEATURE OUTLOOK Outlook on Operating Systems Dejan Milojičić, Hewlett Packard Labs Timothy Roscoe, ETH Zurich Will OSs in 2025 still resemble the Unix-like consensus of today, or will a very different design achieve widespread adoption? eventeen years ago, six renowned technolo- more technical: by following the argument that the OS gists attempted to predict the future of OSs will change, we can identify the most promising paths for an article in the January 1999 issue of IEEE for OS research to follow—toward either a radically dif- Concurrency.1 With the benefit of hindsight, ferent model or the evolution of existing systems. In Sthe results were decidedly mixed. These six experts research, it’s often better to overshoot (and then figure correctly predicted the emergence of scale-out archi- out what worked) than to undershoot. tectures, nonuniform memory access (NUMA) perfor- Current trends in both computer hardware and mance issues, and the increasing importance of OS application software strongly suggest that OSs will security. But they failed to predict the dominance of need to be designed differently in the future. Whether Linux and open source software, the decline of propri- this means that Linux, Windows, and the like will be etary Unix variants, and the success of vertically inte- replaced by something else or simply evolve rapidly grated Mac OS X and iOS. will be determined by a combination of various tech- The reasons to believe that OS design won’t change nical, business, and social factors beyond the con- much going forward are well known and rehearsed: trol of OS technologists and researchers. -

Oracle Database Administrator's Reference for UNIX-Based Operating Systems

Oracle® Database Administrator’s Reference 10g Release 2 (10.2) for UNIX-Based Operating Systems B15658-06 March 2009 Oracle Database Administrator's Reference, 10g Release 2 (10.2) for UNIX-Based Operating Systems B15658-06 Copyright © 2006, 2009, Oracle and/or its affiliates. All rights reserved. Primary Author: Brintha Bennet Contributing Authors: Kevin Flood, Pat Huey, Clara Jaeckel, Emily Murphy, Terri Winters, Ashmita Bose Contributors: David Austin, Subhranshu Banerjee, Mark Bauer, Robert Chang, Jonathan Creighton, Sudip Datta, Padmanabhan Ganapathy, Thirumaleshwara Hasandka, Joel Kallman, George Kotsovolos, Richard Long, Rolly Lv, Padmanabhan Manavazhi, Matthew Mckerley, Sreejith Minnanghat, Krishna Mohan, Rajendra Pingte, Hanlin Qian, Janelle Simmons, Roy Swonger, Lyju Vadassery, Douglas Williams This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this software or related documentation is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, the following notice is applicable: U.S. GOVERNMENT RIGHTS Programs, software, databases, and related documentation and technical data delivered to U.S.