ABSTRACT WU, LEI. Vulnerability Detection and Mitigation in Commodity Android Devices

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Development of the Fun Boom Countdown Game for Android Mobile (Pembuatan Game Fun Boom Countdown Berbasis Mobile Android)

DEVELOPMENT OF THE FUN BOOM COUNTDOWN GAME FOR ANDROID MOBILE. Publication manuscript submitted by Adhitya Novebi Rahmawan (11.11.4617) to Sekolah Tinggi Manajemen Informatika dan Komputer AMIKOM Yogyakarta, Yogyakarta, 2017. Supervisor: Mei P Kurniawan, M.Kom (NIK. 190302187). Dated 10 February 2017. Head of the Informatics Engineering Study Program: Bambang Sudarmawan (NIK. 190302035).

Adhitya Novebi Rahmawan 1), Mei P Kurniawan 2). 1) Informatics Engineering, STMIK AMIKOM Yogyakarta; 2) Information Systems, STMIK AMIKOM Yogyakarta. Jl Ringroad Utara, Condongcatur, Depok, Sleman, Yogyakarta, Indonesia 55283. Email: [email protected] 1), [email protected] 2)

Abstract - Games, gadgets, and smartphones are inseparable from children's world nowadays. Many children around us already carry a smartphone and rarely carry books or read them. School curricula become more difficult each year, but children's interest in reading is declining. This is why the Boom Countdown Game was created, so that children can learn while playing. This game is based on Android because most of the

... contain all general knowledge, from history and culture to important world events. But once again, reading is the main obstacle, and people are reluctant to learn general knowledge from books. It can therefore be said that the general knowledge offered by books holds little appeal for the general public. The last problem is that the material presented in books is not very interactive. To build a person's interest in learning general knowledge, a tool more interactive than books is needed.

PowerPoint Presentation

Models compatible with Imóvil

| No. | Brand | Model | Phone | Operating system version |
|---|---|---|---|---|
| 1 | 360 | 1501_M02 | 1501_M02 | Android 5.1 |
| 2 | 100+ | 100B | 100B | Android 4.1.2 |
| 3 | Acer | Iconia Tab | A500 | Android 4.0.3 |
| 4 | ALPS (Golden Master) | MR6012H1C2W1 | MR6012H1C2W1 | Android 4.2.2 |
| 5 | ALPS (Golden Master) | PMID705GTV | PMID705GTV | Android 4.2.2 |
| 6 | Amazon | Fire HD 6 | Fire HD 6 | Fire OS 4.5.2 / Android 4.4.3 |
| 7 | Amazon | Fire Phone 32GB | Fire Phone 32GB | Fire OS 3.6.8 / Android 4.2.2 |
| 8 | Amoi | A862W | A862W | Android 4.1.2 |
| 9 | amzn | KFFOWI | KFFOWI | Android 5.1.1 |
| 10 | Apple | iPad 2 (2nd generation) | MC979ZP | iOS 7.1 |
| 11 | Apple | iPad 4 | MD513ZP/A | iOS 7.1 |
| 12 | Apple | iPad 4 | MD513ZP/A | iOS 8.0 |
| 13 | Apple | iPad Air | MD785ZP/A | iOS 7.1 |
| 14 | Apple | iPad Air 2 | MGLW2J/A | iOS 8.1 |
| 15 | Apple | iPad Mini | MD531ZP | iOS 7.1 |
| 16 | Apple | iPad Mini 2 | FE276ZP/A | iOS 8.1 |
| 17 | Apple | iPad Mini 3 | MGNV2J/A | iOS 8.1 |
| 18 | Apple | iPhone 3Gs | MC132ZP | iOS 6.1.3 |
| 19 | Apple | iPhone 4 | MC676LL | iOS 7.1.2 |
| 20 | Apple | iPhone 4 | MC603ZP | iOS 7.1.2 |
| 21 | Apple | iPhone 4 | MC604ZP | iOS 5.1.1 |
| 22 | Apple | iPhone 4s | MD245ZP | iOS 8.1 |
| 23 | Apple | iPhone 4s | MD245ZP | iOS 8.4.1 |
| 24 | Apple | iPhone 4s | MD245ZP | iOS 6.1.2 |
| 25 | Apple | iPhone 5 | MD297ZP | iOS 6.0 |
| 26 | Apple | iPhone 5 | MD298ZP/A | iOS 8.1 |
| 27 | Apple | iPhone 5 | MD298ZP/A | iOS 7.1.1 |
| 28 | Apple | iPhone 5c | MF321ZP/A | iOS 7.1.2 |
| 29 | Apple | iPhone 5c | MF321ZP/A | iOS 8.1 |
| 30 | Apple | iPhone 5s | MF353ZP/A | iOS 8.0 |
| 31 | Apple | iPhone 5s | MF353ZP/A | iOS 8.4.1 |
| 32 | Apple | iPhone 5s | MF352ZP/A | iOS 7.1.1 |
| 33 | Apple | iPhone 6 | MG492ZP/A | iOS 8.1 |
| 34 | Apple | iPhone 6 | MG492ZP/A | iOS 9.1 |
| 35 | Apple | iPhone 6 Plus | MGA92ZP/A | iOS 9.0 |
| 36 | Apple | iPhone 6 Plus | MGAK2ZP/A | iOS 8.0.2 |
| 37 | Apple | iPhone 6 Plus | MGAK2ZP/A | iOS 8.1 |

User Instructions (Benutzerhandbuch)

User manual: MicroUSB to HDMI, SlimPort / MHL adapter / adapter cable

1 PLUG TYPES
... compatibility list which attachment is suitable for your device.
Adapter cable: MicroUSB input (power supply), 5-pin, 11-pin, Micro-USB to smartphone / tablet, HDMI output.
Adapter: HDMI output, MicroUSB input (power supply), Micro-USB to smartphone / tablet.

2 USE
• First establish the power connection.

3 COMPATIBILITY
The following models are compatible with the SlimPort adapter (cable):
• Acer Predator 8, Acer Iconia Tab 10, Asus Memo Pad 8, PadFone Infinity, PadfoneX, Acer Iconia Tab10, Predator 8, Amazon Fire HD Kids Edition, Fire HD6, Fire HD7, HDX 8.9, Blackberry Passport, Classic, Fujitsu Arrow, Stylistic QH582, Google LG Nexus 4, LG Nexus 5, Nexus 7, HP Chromebook 11, Pro Slate 8, Pro Slate 12, LG G Flex, G Flex 2, G Pad, G Pro 2, G2, G3, G4, GX, GX2, Optimus G Pro, Optimus GK, V10, VU 3, YotaPhone 2, ZTE Nubia X6, Nubia Z5S

The following models are compatible with the MHL 5-pin adapter (cable):

Compatible with the 11-pin adapter (cable):
• Samsung Galaxy S3 (i9300, i747, i535, T999, R530), Galaxy S4 (i9500), Galaxy S4 Active, Galaxy S4 Zoom, Galaxy S5 (i9600), Galaxy S5 Zoom, Galaxy Note 2 (N7100), Galaxy Note 3 (N9005), Galaxy Note 8, Galaxy Mega 6.3 and 5.8, Galaxy Tab 3 (8", 10.1"), Galaxy Tab S (8.4", 10.5"), Galaxy TabPro (8.4", 10.1"), Galaxy Tab S2 (9.7" T815N), Galaxy Express, Galaxy K Zoom
• PLEASE CHECK ON THE MANUFACTURER'S WEBSITE FOR YOUR TABLET OR SMARTPHONE WHETHER YOUR DEVICE (note the model number!) IS MHL OR SLIMPORT COMPATIBLE.
• WARNING: Samsung Galaxy S6 and S7 as well as all Neo models support ...

... dry cloth. Do not use chemical additives.
• Disposal must take place via the responsible collection points.

Pairing Smartphones in Close Proximity Using Magnetometers

MagPairing: Pairing Smartphones in Close Proximity Using Magnetometers. Rong Jin∗, Liu Shi†, Kai Zeng‡, Amit Pande§, Prasant Mohapatra§. ∗School of Electronic Information and Communications, Huazhong University of Science and Technology, Email: [email protected]; †Department of Computer and Information Science, University of Michigan - Dearborn, MI 48128, Email: [email protected]; ‡Department of Electrical and Computer Engineering, George Mason University, VA 22030, Email: [email protected]; §Department of Computer Science, University of California, Davis, CA, 95616, Email: famit,[email protected]

Abstract: With the prevalence of mobile computing, lots of wireless devices need to establish secure communication on the fly without pre-shared secrets. Device pairing is critical for bootstrapping secure communication between two previously unassociated devices over the wireless channel. Using auxiliary out-of-band channels involving visual, acoustic, tactile or vibrational sensors has been proposed as a feasible option to facilitate device pairing. However, these methods usually require users to perform additional tasks such as copying, comparing, and shaking. It is preferable to have a natural and intuitive pairing method with minimal user tasks.

Using auxiliary out-of-band (OOB) channels to facilitate device pairing has been studied as a feasible option involving visual [1, 2, 3, 4, 5, 6, 7, 8], acoustic [9, 10, 11, 12, 13], tactile [14] or vibrational sensors [15, 16]. However, these methods are not optimized in terms of usability, which is considered of utmost importance in pairing scheme based on OOB channels [2, 17, 18, 19], and require users to perform additional tasks such as copying, comparing and shaking. It

Alcatel One Touch Go Play 7048 Alcatel One Touch

Acer Liquid Jade S Alcatel Idol 3 4,7" Alcatel Idol 3 5,5" Alcatel One Touch Go Play 7048 Alcatel One Touch Pop C3/C2 Alcatel One Touch POP C7 Alcatel Pixi 4 4” Alcatel Pixi 4 5” (5045x) Alcatel Pixi First Alcatel Pop 3 5” (5065x) Alcatel Pop 4 Lte Alcatel Pop 4 plus Alcatel Pop 4S Alcatel Pop C5 Alcatel Pop C9 Allview C6 Quad Apple Iphone 4 / 4s Apple Iphone 5 / 5s / SE Apple Iphone 5c Apple Iphone 6/6s 4,7" Apple Iphone 6 plus / 6s plus Apple Iphone 7 Apple Iphone 7 plus Apple Iphone 8 Apple Iphone 8 plus Apple Iphone X HTC 8S HTC Desire 320 HTC Desire 620 HTC Desire 626 HTC Desire 650 HTC Desire 820 HTC Desire 825 HTC 10 One M10 HTC One A9 HTC One M7 HTC One M8 HTC One M8s HTC One M9 HTC U11 Huawei Ascend G620s Huawei Ascend G730 Huawei Ascend Mate 7 Huawei Ascend P7 Huawei Ascend Y530 Huawei Ascend Y540 Huawei Ascend Y600 Huawei G8 Huawei Honor 5x Huawei Honor 7 Huawei Honor 8 Huawei Honor 9 Huawei Mate S Huawei Nexus 6p Huawei P10 Lite Huawei P8 Huawei P8 Lite Huawei P9 Huawei P9 Lite Huawei P9 Lite Mini Huawei ShotX Huawei Y3 / Y360 Huawei Y3 II Huawei Y5 / Y541 Huawei Y5 / Y560 Huawei Y5 2017 Huawei Y5 II Huawei Y550 Huawei Y6 Huawei Y6 2017 Huawei Y6 II / 5A Huawei Y6 II Compact Huawei Y6 pro Huawei Y635 Huawei Y7 2017 Lenovo Moto G4 Plus Lenovo Moto Z Lenovo Moto Z Play Lenovo Vibe C2 Lenovo Vibe K5 LG F70 LG G Pro Lite LG G2 LG G2 mini D620 LG G3 LG G3 s LG G4 LG G4c H525 / G4 mini LG G5 / H830 LG K10 / K10 Lte LG K10 2017 / K10 dual 2017 LG K3 LG K4 LG K4 2017 LG K7 LG K8 LG K8 2017 / K8 dual 2017 LG L Fino LG L5 II LG L7 LG -

Meizu Mx English Manual

Meizu Mx English Manual Compare, research, and read user reviews on the Meizu MX4 phone. Find and download free cell phone user manual you need online. Meizu MX Quad-Core Phone User Manual Guide from Cellphoneusermanuals.com. This is the official Meizu MX User Guide in English provided from the manufacturer. If you are looking for detailed technical specifications, please see our Specs. Hi Meizu MX fans! An update for your monster device is here. It is a new Chinese and international firmware for the MX4. Those who installed Flyme OS 4.0.2I. Meizu MX2 User Manual Guide. Home » Mobile Phone » Meizu MX Manual Users Guide PDF Download. meizu mx manual,meizu manual,meizu mx user. Founded in 2003, Meizu began as a manufacturer of MP3 players and later The Meizu MX was released on January 1, 2012, exactly one year after the M9. Meizu Mx English Manual Read/Download Does the latest high-end offering from Meizu manage to stand out of the crowd? with quick access to a bunch of different modes, like a full manual mode, that gives to find any stock Android and Material Design elements in this user interface, Meizu has been using this design since style since the original MX, which. You will also only have a choice of English or Chinese languages. download the “update.zip” file needed to manually install Flyme 4.2.1A for the Meizu MX4. Far Superior Images than 2K Screens Using Less Power. We have thought higher than ever and increased the resolution of the MX4 Pro to an astonishing 2,560. -

Version 1.0 - Magic-Device-Tool

MariusQuabeck / magic-device-tool. 463 commits, 4 branches, 0 releases, 14 contributors. License: GPL-3.0.

README.md: Version 1.0 - magic-device-tool. A simple and featureful tool to handle installing/replacing Operating Systems (Ubuntu Phone / Ubuntu Touch, Android, LineageOS, Maru OS, Sailfish OS, and Phoenix OS) on your mobile devices. Contact: Marius Quabeck (Email), Mister_Q on the freenode IRC network. Join us on Telegram! Donate if you like this tool. Buy me devices ;) Standard Disclaimer Text. This tool does not let you Dual Boot between Android and Ubuntu Touch. Not all ROMs are available for all devices.

DeepDroid: Dynamically Enforcing Enterprise Policy on Android Devices

DeepDroid: Dynamically Enforcing Enterprise Policy on Android Devices. Xueqiang Wang∗†, Kun Sun‡, Yuewu Wang∗ and Jiwu Jing∗. ∗Data Assurance and Communication Security Research Center, Institute of Information Engineering, Chinese Academy of Sciences, fwangxueqiang, ywwang, [email protected]; †University of Chinese Academy of Sciences; ‡Department of Computer Science, College of William and Mary, [email protected]

Abstract: It is becoming a global trend for company employees equipped with mobile devices to access company's assets. Besides enterprise apps, lots of personal apps from various untrusted app stores may also be installed on those devices. To secure the business environment, policy enforcement on what, how, and when certain apps can access system resources is required by enterprise IT. However, Android, the largest mobile platform with a market share of 81.9%, provides very restricted interfaces for enterprise policy enforcement. In this paper, we present DeepDroid, a dynamic enterprise security policy enforcement scheme on Android devices. Different from existing approaches, DeepDroid is implemented by dynamic memory instrumentation of a small number of critical system processes without any firmware modification.

... to influence the design and usage of mobile devices in the enterprise environments. While users are blurring the lines between company and personal usage, enterprises demand a secure and robust mobile device management to protect their business assets. For instance, in a building that forbids any audio recording, all mobile devices' microphones should be disabled when the users check in the building and be enabled when the users check out. The permission model on Android, the largest mobile platform with a market share of 81% [2], only grants an "all-or-nothing" installation option for mobile users

Now Your World Just Got Bigger Turn Your Smartphone Into a Tablet

Turn your Smartphone into a Tablet... Introducing the Universal TransPad ... now your world just got bigger. 8/27/2014. The LOGICAL way to create a tablet TM. TransPhone International, Copyright © 2013, ALL RIGHTS RESERVED. Covered by one or more US patents numbers 6,999,792, 7,266,391 & 7,477,919. Other US and international patents pending. August 2014, Version 991.

UniversalTransPad “UTP” Executive Summary: THE LOGICAL WAY TO MAKE TABLETS

KILLER PRODUCTS ARE: PRODUCTS WHICH SOLVE REAL PROBLEMS EVERYBODY HAS

SMARTPHONES: EVERYONE IN THE WORLD - WHO HAS A SMARTPHONE - HAS THE SAME PROBLEM

THE SCREEN IS WAY TOO SMALL

Our Killer Solution: CONVERT THE CUSTOMER’S OWN SMARTPHONE INTO A HIGH-SPEC TABLET (AND BACK AGAIN) IN SECONDS

SKU Item Group Product Name Grade Functionality Boxed Color

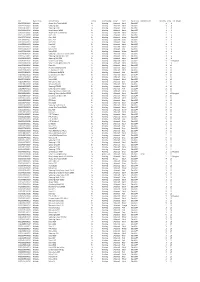

| SKU | Item Group | Product Name | Grade | Functionality | Boxed | Color | Cloud Lock | Additional Info | Quantity | Price | VAT Margin |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 00100272900009 | Mobiles | Alcatel One Touch 1016D | B- | Working | Unboxed | Black | CloudOFF | | 1 | 8 | |
| 00100457400004 | Mobiles | Samsung i600 | B- | Working | Unboxed | Black | CloudOFF | | 1 | 8 | |
| 00100468700001 | Mobiles | Samsung U700 | B- | Working | Unboxed | Silver | CloudOFF | | 1 | 8 | |
| 00100490100010 | Mobiles | Sony Ericsson W302 | B- | Working | Unboxed | Black | CloudOFF | | 1 | 8 | |
| 00100272900006 | Mobiles | Alcatel One Touch 1016D | A- | Working | Unboxed | Black | CloudOFF | | 1 | 9 | |
| 00101771700010 | Mobiles | Doro 1362 | B- | Working | Unboxed | Black | CloudOFF | | 2 | 9 | |
| 00101771700018 | Mobiles | Doro 1362 | B- | Working | Unboxed | White | CloudOFF | | 1 | 9 | |
| 00101845600007 | Mobiles | Doro 2414 | B- | Working | Unboxed | Silver | CloudOFF | | 1 | 9 | |
| 00101845600027 | Mobiles | Doro 2414 | B- | Working | Unboxed | Grey | CloudOFF | | 2 | 9 | |
| 00100556600003 | Mobiles | Doro 515 | B- | Working | Unboxed | Black | CloudOFF | | 1 | 9 | |
| 00100354900001 | Mobiles | LG T565b | B- | Working | Unboxed | Black | CloudOFF | | 1 | 9 | |
| 00100376400012 | Mobiles | Nokia 5230 | B- | Working | Unboxed | Pink | CloudOFF | | 1 | 9 | |
| 00100376400050 | Mobiles | Nokia 5230 | B- | Working | Unboxed | White | CloudOFF | | 1 | 9 | |
| 00100413800010 | Mobiles | Samsung Corby Genio Touch S3650 | B- | Working | Unboxed | Black | CloudOFF | | 1 | 9 | |
| 00100461700001 | Mobiles | Samsung Omnia Lite B7300 | B- | Working | Unboxed | Black | CloudOFF | | 4 | 9 | |
| 00100810800003 | Mobiles | Samsung SGH-i320 | B- | Working | Unboxed | Black | CloudOFF | | 1 | 9 | |
| 00100490100010 | Mobiles | Sony Ericsson W302 | B- | Working | Unboxed | Black | CloudOFF | | 1 | 9 | Marginal |
| 00100493800010 | Mobiles | Sony Ericsson Xperia Mini Pro | B- | Working | Unboxed | Black | CloudOFF | | 1 | 9 | |
| 00100301800010 | Mobiles | Doro 410 | A- | Working | Unboxed | | | | | | |

Models Compatible with i-móvil

Models compatible with i-móvil

| No. | Brand | Model | Phone model | Operating system version |
|---|---|---|---|---|
| 1 | 360 | 1501_M02 | 1501_M02 | Android 5.1 |
| 2 | 360 | N4 | 1503-M02 | Android 6.0 |
| 3 | 100+ | 100B | 100B | Android 4.1.2 |
| 4 | Acer | Iconia Tab | A500 | Android 4.0.3 |
| 5 | ALPS (Golden Master) | MR6012H1C2W1 | MR6012H1C2W1 | Android 4.2.2 |
| 6 | ALPS (Golden Master) | PMID705GTV | PMID705GTV | Android 4.2.2 |
| 7 | Amazon | Fire HD 6 | Fire HD 6 | Fire OS 4.5.2 / Android 4.4.3 |
| 8 | Amazon | Fire Phone 32GB | Fire Phone 32GB | Fire OS 3.6.8 / Android 4.2.2 |
| 9 | Amoi | A862W | A862W | Android 4.1.2 |
| 10 | amzn | KFFOWI | KFFOWI | Android 5.1.1 |
| 11 | Apple | iPad 2 (2nd generation) | MC979ZP | iOS 7.1 |
| 12 | Apple | iPad 4 | MD513ZP/A | iOS 7.1 |
| 13 | Apple | iPad 4 | MD513ZP/A | iOS 8.0 |
| 14 | Apple | iPad Air | MD785ZP/A | iOS 7.1 |
| 15 | Apple | iPad Air 2 | MGLW2J/A | iOS 8.1 |
| 16 | Apple | iPad Mini | MD531ZP | iOS 7.1 |
| 17 | Apple | iPad Mini 2 | FE276ZP/A | iOS 8.1 |
| 18 | Apple | iPad Mini 3 | MGNV2J/A | iOS 8.1 |
| 19 | Apple | iPhone 3Gs | MC132ZP | iOS 6.1.3 |
| 20 | Apple | iPhone 4 | MC603ZP | iOS 7.1.2 |
| 21 | Apple | iPhone 4 | MC604ZP | iOS 5.1.1 |
| 22 | Apple | iPhone 4 | MC676LL | iOS 7.1.2 |
| 23 | Apple | iPhone 4s | MD245ZP | iOS 6.1.2 |
| 24 | Apple | iPhone 4s | MD245ZP | iOS 8.1 |
| 25 | Apple | iPhone 4s | MD245ZP | iOS 8.4.1 |
| 26 | Apple | iPhone 5 | MD297ZP | iOS 6.0 |
| 27 | Apple | iPhone 5 | MD298ZP/A | iOS 7.1.1 |
| 28 | Apple | iPhone 5 | MD298ZP/A | iOS 8.1 |
| 29 | Apple | iPhone 5c | MF321ZP/A | iOS 7.1.2 |
| 30 | Apple | iPhone 5c | MF321ZP/A | iOS 8.1 |
| 31 | Apple | iPhone 5s | MF352ZP/A | iOS 7.1.1 |
| 32 | Apple | iPhone 5s | MF353ZP/A | iOS 8.0 |
| 33 | Apple | iPhone 5s | MF353ZP/A | iOS 8.4.1 |
| 34 | Apple | iPhone 6 | MG492ZP/A | iOS 8.1 |
| 35 | Apple | iPhone 6 | MG492ZP/A | iOS 9.1 |
| 36 | Apple | iPhone 6 Plus | MGA92ZP/A | iOS 9.0 |
| 37 | Apple | iPhone 6 Plus | MGAK2ZP/A | iOS 8.0.2 |
| 38 | | | | |

PowerPoint Presentation

Models compatible with Imóvil

| No. | Brand | Model | Phone | Operating system version or higher |
|---|---|---|---|---|
| 1 | 360 | 1501_M02 | 1501_M02 | Android 5.1 |
| 2 | 360 | N4 | 1503-M02 | Android 6.0 |
| 3 | 100+ | 100B | 100B | Android 4.1.2 |
| 4 | Acer | Iconia Tab | A500 | Android 4.0.3 |
| 5 | ALPS (Golden Master) | MR6012H1C2W1 | MR6012H1C2W1 | Android 4.2.2 |
| 6 | ALPS (Golden Master) | PMID705GTV | PMID705GTV | Android 4.2.2 |
| 7 | Amazon | Fire HD 6 | Fire HD 6 | Android 4.4.3 |
| 8 | Amazon | Fire Phone 32GB | Fire Phone 32GB | Android 4.2.2 |
| 9 | Amoi | A862W | A862W | Android 4.1.2 |
| 10 | amzn | KFFOWI | KFFOWI | Android 5.1.1 |
| 11 | Apple | iPad 2 (2nd generation) | MC979ZP | iOS 7.1 |
| 12 | Apple | iPad 4 | MD513ZP/A | iOS 7.1 |
| 13 | Apple | iPad 4 | MD513ZP/A | iOS 8.0 |
| 14 | Apple | iPad Air | MD785ZP/A | iOS 7.1 |
| 15 | Apple | iPad Air 2 | MGLW2J/A | iOS 8.1 |
| 16 | Apple | iPad Mini | MD531ZP | iOS 7.1 |
| 17 | Apple | iPad Mini 2 | FE276ZP/A | iOS 8.1 |
| 18 | Apple | iPad Mini 3 | MGNV2J/A | iOS 8.1 |
| 19 | Apple | iPad Pro | MM172ZP/A | iOS 10.3.2 |
| 20 | Apple | iPad Pro 10.5-inch Wi-Fi | MQDW2ZP/A | iOS 10.3.2 |
| 21 | Apple | iPad Pro 12.9-inch Wi-Fi | MQDC2ZP/A | iOS 10.3.2 |
| 22 | Apple | iPad Wi-Fi | MP2G2ZP/A | iOS 10.3.1 |
| 23 | Apple | iPhone 3Gs | MC132ZP | iOS 6.1.3 |
| 24 | Apple | iPhone 4 | MC603ZP | iOS 7.1.2 |
| 25 | Apple | iPhone 4 | MC604ZP | iOS 5.1.1 |
| 26 | Apple | iPhone 4 | MC676LL | iOS 7.1.2 |
| 27 | Apple | iPhone 4s | MD245ZP | iOS 6.1.2 |
| 28 | Apple | iPhone 4s | MD245ZP | iOS 8.1 |
| 29 | Apple | iPhone 4s | MD245ZP | iOS 8.4.1 |
| 30 | Apple | iPhone 5 | MD297ZP | iOS 6.0 |
| 31 | Apple | iPhone 5 | MD298ZP/A | iOS 7.1.1 |
| 32 | Apple | iPhone 5 | MD298ZP/A | iOS 8.1 |
| 33 | Apple | iPhone 5c | MF321ZP/A | iOS 7.1.2 |
| 34 | Apple | iPhone 5c | MF321ZP/A | iOS 8.1 |
| 35 | Apple | iPhone 5s | MF352ZP/A | iOS 7.1.1 |
| 36 | Apple | iPhone 5s | MF353ZP/A | iOS 8.0 |
| 37 | Apple | iPhone 5s | MF353ZP/A | iOS |