Timing of the Solar Wind Propagation Delay Between L1 and Earth Based on Machine Learning C

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

University of Iowa Instruments in Space

University of Iowa Instruments in Space (A-D13-089-5). Spaceflight instruments designed and built at the University of Iowa in the Department of Physics & Astronomy (1958-2019).
Diagram labels: Wind, Van Allen Probes, Cluster, Mars Express, HaloSat, MMS, Geotail, Juno, Voyager 1, Voyager 2; Mercury, Venus, Earth, Mars, Jupiter, Saturn, Uranus, Neptune, Pluto.
Spacecraft and launch date (UT): Explorer 1, 1958 Feb. 1; Explorer 2 (launch failure), 1958 Mar. 5; Explorer 3, 1958 Mar. 26; Explorer 4, 1958 July 26; Explorer 5 (launch failure), 1958 Aug. 24; Pioneer 1, 1958 Oct. 11; Pioneer 2, 1958 Nov. 8; Pioneer 3, 1958 Dec. 6; Pioneer 4, 1959 Mar. 3; OGO 4, 1967 July 28; OGO 5, 1968 Mar. 4; Injun 5, 1968 Aug. 8; Explorer 41 (IMP-5), 1969 June 21; Explorer 43 (IMP-6), 1971 Mar. 13; Explorer 45 (SSS), 1971 Nov. 16; UK 4 (Ariel-4), 1971 Dec. 11; Pioneer 10, 1972 Mar. 3; Explorer 47 (IMP-7), 1972 Sept. 23; Juno *, 2011 Aug. 5; Van Allen Probe A *, 2012 Aug. 30; Van Allen Probe B *, 2012 Aug. 30; Magnetospheric Multiscale Mission / 1 *, 2015 Mar. 12; Magnetospheric Multiscale Mission / 2 *, 2015 Mar. 12; Magnetospheric Multiscale Mission / 3 *, 2015 Mar. 12; HERCI CubeSat (Fox-1D satellite), 2018 Jan. 12; HaloSat CubeSat Satellite *, 2018 May 21; Magnetospheric Multiscale Mission / 4 *, 2015 Mar. -

Information Summaries

National Aeronautics and Space Administration, John F. Kennedy Space Center. Information Summaries, PMS 031 (KSC), June 1999. Major NASA Launches.
Columns: Mission Name, Launch Date, Launch Vehicle, Payload Code, Launch Pad, Results.
TIROS 8, 12/21/63, Delta-22, TIROS-H (A-53), 17B, S; TIROS 9, 1/22/65, Delta-28, TIROS-I (A-54), 17A, S.
TIROS Operational: TIROS 10, 7/1/65, Delta-32, OT-1, 17B, S; ESSA 1, 2/3/66, Delta-36, OT-3 (TOS), 17A, S; ESSA 2, 2/28/66, Delta-37, OT-2 (TOS), 17B, S; ESSA 3, 10/2/66, Delta-41, TOS-A, SLC-2E, S; ESSA 4, 1/26/67, Delta-45, TOS-B, SLC-2E, S; ESSA 5, 4/20/67, Delta-48, TOS-C, SLC-2E, S; ESSA 6, 11/10/67, Delta-54, TOS-D, SLC-2E, S; ESSA 7, 8/16/68, Delta-58, TOS-E, SLC-2E, S; ESSA 8, 12/15/68, Delta-62, TOS-F, SLC-2E, S; ESSA 9, 2/26/69, Delta-67, TOS-G, 17B, S.
Improved TIROS Operational: ITOS 1/OSCAR 5, 1/23/70, Delta-76, TIROS-M/OSCAR, SLC-2W, S; NOAA 1, 12/11/70, Delta-81, ITOS-A, SLC-2W, S; ITOS, 10/21/71, Delta-86, ITOS-B, SLC-2E, U.
OSO (Orbiting Solar Observatories): OSO 1, 3/7/62, Delta-8, OSO-A (S-16), 17A, S; OSO 2, 2/3/65, Delta-29, OSO-B2 (S-17), 17B, S; OSO, 8/25/65, Delta-33, OSO-C, 17B, U; OSO 3, 3/8/67, Delta-46, OSO-E1, 17A, S; OSO 4, 10/18/67, Delta-53, OSO-D, 17B, S; OSO 5, 1/22/69, Delta-64, OSO-F, 17B, S; OSO 6/PAC, 8/9/69, Delta-72, OSO-G/PAC, 17A, S; OSO 7/TETR 3, 9/29/71, Delta-85, OSO-H/TETR-D, 17A, S; OSO 8, 6/21/75, Delta-112, OSO-1, 17B, S.
Lunar and Planetary, PIONEER (Lunar): Pioneer 1, 10/11/58, Thor-Able-1, ––, 17A, U; Pioneer 2, 11/8/58, Thor-Able-2, ––, 17A, U; Pioneer 3, 12/6/58, Juno II AM-11, ––, 5, U; Pioneer 4, 3/3/59, Juno II AM-14, ––, 5, S; Pioneer, 11/26/59, Atlas-Able-1, ––, 14, U.
OGO (Orbiting Geophysical -

Ionospheric Cusp Flows Pulsed by Solar Wind Alfvén Waves

Annales Geophysicae (2002) 20: 161–174, © European Geophysical Society 2002. Ionospheric cusp flows pulsed by solar wind Alfvén waves. P. Prikryl1, G. Provan2, K. A. McWilliams2, and T. K. Yeoman2. 1Communications Research Centre, Ottawa, Ontario K2H 8S2, Canada; 2Department of Physics and Astronomy, University of Leicester, UK. Received: 7 February 2001 – Revised: 24 August 2001 – Accepted: 10 September 2001. Abstract. Pulsed ionospheric flows (PIFs) in the cusp footprint have been observed by the SuperDARN radars with periods between a few minutes and several tens of minutes. PIFs are believed to be a consequence of the interplanetary magnetic field (IMF) reconnection with the magnetospheric magnetic field on the dayside magnetopause, ionospheric signatures of flux transfer events (FTEs). The quasiperiodic PIFs are correlated with Alfvénic fluctuations observed in the upstream solar wind. It is concluded that on these occasions, the FTEs were driven by Alfvén waves coupling to the dayside magnetosphere. Case studies are presented in which the dawn-dusk component of the Alfvén wave electric field modulates the reconnection rate as evidenced by the radar observations of the ionospheric cusp flows. The arrival of the IMF southward turning at the magnetopause is determined from ... bow shock were the source of magnetopause surface waves inducing reconnection. Key words. Interplanetary physics (MHD waves and turbulence) – Magnetospheric physics (magnetosphere-ionosphere interactions; solar wind-magnetosphere interactions). 1 Introduction. Ionospheric dynamics near the cusp footprint attest to processes at the dayside magnetopause and in particular, to pulsed magnetic reconnection (Cowley et al., 1991; Lockwood et al., 1993). -

Photographs Written Historical and Descriptive

CAPE CANAVERAL AIR FORCE STATION, MISSILE ASSEMBLY BUILDING AE, HAER FL-8-B (John F. Kennedy Space Center, Hangar AE) Cape Canaveral Brevard County Florida PHOTOGRAPHS WRITTEN HISTORICAL AND DESCRIPTIVE DATA HISTORIC AMERICAN ENGINEERING RECORD SOUTHEAST REGIONAL OFFICE National Park Service U.S. Department of the Interior 100 Alabama St. NW Atlanta, GA 30303 HISTORIC AMERICAN ENGINEERING RECORD CAPE CANAVERAL AIR FORCE STATION, MISSILE ASSEMBLY BUILDING AE (Hangar AE) HAER NO. FL-8-B Location: Hangar Road, Cape Canaveral Air Force Station (CCAFS), Industrial Area, Brevard County, Florida. USGS Cape Canaveral, Florida, Quadrangle. Universal Transverse Mercator Coordinates: E 540610 N 3151547, Zone 17, NAD 1983. Date of Construction: 1959 Present Owner: National Aeronautics and Space Administration (NASA) Present Use: Home to NASA’s Launch Services Program (LSP) and the Launch Vehicle Data Center (LVDC). The LVDC allows engineers to monitor telemetry data during unmanned rocket launches. Significance: Missile Assembly Building AE, commonly called Hangar AE, is nationally significant as the telemetry station for NASA KSC’s unmanned Expendable Launch Vehicle (ELV) program. Since 1961, the building has been the principal facility for monitoring telemetry communications data during ELV launches and until 1995 it processed scientifically significant ELV satellite payloads. Still in operation, Hangar AE is essential to the continuing mission and success of NASA’s unmanned rocket launch program at KSC. It is eligible for listing on the National Register of Historic Places (NRHP) under Criterion A in the area of Space Exploration as Kennedy Space Center’s (KSC) original Mission Control Center for its program of unmanned launch missions and under Criterion C as a contributing resource in the CCAFS Industrial Area Historic District. -

The Effect of Ionospheric Conductivity on Magnetospheric Dynamics

University of New Hampshire Scholars' Repository. Doctoral Dissertations, Student Scholarship, Fall 2018. THE EFFECT OF IONOSPHERIC CONDUCTIVITY ON MAGNETOSPHERIC DYNAMICS. Benjamin Joseph Jensen, University of New Hampshire, Durham. Recommended Citation: Jensen, Benjamin Joseph, "THE EFFECT OF IONOSPHERIC CONDUCTIVITY ON MAGNETOSPHERIC DYNAMICS" (2018). Doctoral Dissertations. 2414. https://scholars.unh.edu/dissertation/2414 THE EFFECT OF IONOSPHERIC CONDUCTIVITY ON MAGNETOSPHERIC DYNAMICS BY JOSEPH B. JENSEN BS, Physics, Utah State University, Logan UT, 2013 DISSERTATION Submitted to the University of New Hampshire in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy in Physics September 2018 ALL RIGHTS RESERVED ©2018 Joseph B. Jensen This dissertation has been examined and approved in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Physics by: Dissertation Advisor, Joachim Raeder, Professor, Department of Physics, University of New Hampshire; Lynn Kistler, Professor, Department of Physics, University of New Hampshire; Kai Germaschewski, Associate Professor, Department of Physics, University of New Hampshire; Marc Lessard, Associate Professor, Department of Physics, University of New Hampshire; Simon G. Shepherd, Associate Professor of Engineering, Thayer School of Engineering at Dartmouth College, on 6 July 2018. Original approval signatures are on file with the University of New Hampshire Graduate School. -

Plasma Wave Turbulence at the Magnetopause:

VOL. 84, NO. A12 JOURNAL OF GEOPHYSICAL RESEARCH DECEMBER 1, 1979 Plasma Wave Turbulence at the Magnetopause: Observations From ISEE 1 and 2 D. A. GURNETT,1 R. R. ANDERSON,1 B. T. TSURUTANI,2 E. J. SMITH,2 G. PASCHMANN,3 G. HAERENDEL,3 S. J. BAME,4 AND C. T. RUSSELL5 In this paper we investigate plasma wave electric and magnetic fields in the vicinity of the magnetopause by using recent measurements from the ISEE 1 and 2 spacecraft. Strong electric and magnetic field turbulence is often observed at the magnetopause. The electric field spectrum of this turbulence typically extends over an extremely large frequency range, from less than a few hertz to above 100 kHz, and the magnetic field turbulence typically extends from a few hertz to about 1 kHz. The maximum intensities usually occur in the magnetopause current layer and plasma boundary layer. Somewhat similar turbulence spectra are also sometimes observed in association with flux transfer events and possible 'inclusions' of boundary layer plasma in the magnetosphere. In addition to the broad-band electric and magnetic field turbulence, narrow-band electrostatic emissions are occasionally observed near the electron plasma frequency in the vicinity of the magnetopause. Two possible plasma instabilities, the electrostatic ion-cyclotron instability and the lower-hybrid-drift instability, are considered the primary candidates for explaining the broad-band electric field turbulence. The narrow-band electrostatic emissions near the local electron plasma frequency are believed to be either plasma oscillations or electrostatic waves near the upper-hybrid-resonance frequency. 1. INTRODUCTION Because of the many important questions which have been raised recently concerning the physical processes which occur at the earth's magnetopause boundary [Heikkila, 1975; Hae- ... waves. Although the magnetopause is known to be very turbulent in the low-frequency MHD portion of the spectrum, relatively little is known about the plasma wave intensities at higher frequencies in the region of primary importance for microscopic plasma processes. Neugebauer et al. -

The Outermost Belt of Charged Particles

https://ntrs.nasa.gov/search.jsp?R=19670007176 National Aeronautics and Space Administration, Goddard Space Flight Center, Contract No. NAS-5-[illegible]. THE OUTERMOST BELT OF CHARGED PARTICLES, by K. I. Gringauz and M. Z. Khokhlov. 17 November 1965. Issl. kosmicheskogo prostranstva, Trudy Vsesoyuznoy konferentsii po kosmicheskim lucham, 467 - 482, Moscow, June 1965. This report deals with the result of the study of a zone of charged particles with comparatively low energies (from ~100 eV to 10 - 40 keV), situated beyond the outer radiation belt (including the new data obtained on Electron-2 and Zond-2). The authors review, first of all, and in chronological order, the space probes on which data on soft electrons and protons were obtained beyond the radiation belts. A brief review is given of some examples of registration of soft electrons at high geomagnetic latitudes by Mars-1 and Electron-2. It is shown that here, as in other space probes, the zones of soft electron fluxes are partly overlapping with the zones of trapped radiation. The spatial distribution of fluxes of soft electrons is studied in light of data obtained from various space probes, such as Lunik-1, Explorer-12, Explorer-18, for the daytime region along the magnetosphere boundary from the sunny side. -

Chronology of the Artificial Satellites of the

ARTIFICIAL SATELLITES. Chapter 5, Subchapter 10. CHRONOLOGY OF THE ARTIFICIAL SATELLITES OF THE EARTH. This is a chronological list of all launches of artificial satellites of our planet, regardless of their success or failure, whether at launch or in orbit. This means that many of them never reached space and were destroyed. Each entry gives first (on the left) the satellite's name, followed by the launch date, the country to which the satellite belongs (which may differ from the country that launched it), and the type of satellite; this last item may not be exact, since some satellites serve multiple purposes. In multiple launches, each satellite is listed separately (except in cases of failure, where the satellites never separated), but naturally under the same date and together. Satellites carried on crewed flights are NOT included, although they are mentioned under the corresponding satellite program and in the chapter "General chronology of launches". SATELLITE Date Country Type SPUTNIK F1 15.05.1957 USSR Experimental or technological SPUTNIK F2 21.08.1957 USSR Experimental or technological SPUTNIK 01 04.10.1957 USSR Experimental or technological SPUTNIK 02 03.11.1957 USSR Scientific VANGUARD-1A 06.12.1957 USA Experimental or technological EXPLORER 01 31.01.1958 USA Scientific VANGUARD-1B 05.02.1958 USA Experimental or technological EXPLORER 02 05.03.1958 USA Scientific VANGUARD-1 17.03.1958 USA Experimental or technological EXPLORER 03 26.03.1958 USA Scientific SPUTNIK D1 27.04.1958 USSR Geodetic VANGUARD-2A -

Two-Stage Oscillatory Response of the Magnetopause to a Tangential Discontinuity/Vortex Sheet Followed by Northward IMF: Cluster Observations C

JOURNAL OF GEOPHYSICAL RESEARCH, VOL. 113, A03208, doi:10.1029/2007JA012800, 2008 Two-stage oscillatory response of the magnetopause to a tangential discontinuity/vortex sheet followed by northward IMF: Cluster observations C. J. Farrugia,1,2 F. T. Gratton,3,4 E. J. Lund,1 P. E. Sandholt,5 S. W. H. Cowley,6 R. B. Torbert,1,2 G. Gnavi,3 I. R. Mann,7 L. Bilbao,3 C. Mouikis,1 L. Kistler,1,2 C. W. Smith,1 H. J. Singer,8 and J. F. Watermann9 Received 6 September 2007; revised 23 November 2007; accepted 10 December 2007; published 19 March 2008. [1] We discuss the motion and structure of the magnetopause/boundary layer observed by Cluster in response to a joint tangential discontinuity/vortex sheet (TD/VS) observed by the Advanced Composition Explorer spacecraft on 7 December 2000. The observations are then supplemented by theory. Sharp polarity reversals in the east-west components of the field and flow, By and Vy, occurred at the discontinuity. These rotations were followed by a period of strongly northward interplanetary magnetic field (IMF). These two factors elicited a two-stage response at the magnetopause, as observed by Cluster situated in the boundary layer at the duskside terminator. First, the magnetopause suffered a large deformation from its equilibrium position, with large-amplitude oscillations of 3-min period being set up. These are argued to be mainly the result of tangential stresses associated with ΔVy, the contribution of dynamic pressure changes being small in comparison. This strengthens recent evidence of the importance to magnetospheric dynamics of changes in azimuthal solar wind flow. -

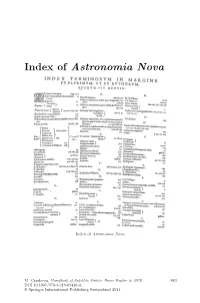

Index of Astronomia Nova

Index of Astronomia Nova. M. Capderou, Handbook of Satellite Orbits: From Kepler to GPS, p. 883, DOI 10.1007/978-3-319-03416-4, © Springer International Publishing Switzerland 2014. Bibliography. Books are classified in sections according to the main themes covered in this work, and arranged chronologically within each section. General Mechanics and Geodesy 1. H. Goldstein. Classical Mechanics, Addison-Wesley, Cambridge, Mass., 1956 2. L. Landau & E. Lifchitz. Mechanics (Course of Theoretical Physics), Vol. 1, Mir, Moscow, 1966, Butterworth–Heinemann 3rd edn., 1976 3. W.M. Kaula. Theory of Satellite Geodesy, Blaisdell Publ., Waltham, Mass., 1966 4. J.-J. Levallois. Géodésie générale, Vols. 1, 2, 3, Eyrolles, Paris, 1969, 1970 5. J.-J. Levallois & J. Kovalevsky. Géodésie générale, Vol. 4: Géodésie spatiale, Eyrolles, Paris, 1970 6. G. Bomford. Geodesy, 4th edn., Clarendon Press, Oxford, 1980 7. J.-C. Husson, A. Cazenave, J.-F. Minster (Eds.). Internal Geophysics and Space, CNES/Cepadues-Editions, Toulouse, 1985 8. V.I. Arnold. Mathematical Methods of Classical Mechanics, Graduate Texts in Mathematics (60), Springer-Verlag, Berlin, 1989 9. W. Torge. Geodesy, Walter de Gruyter, Berlin, 1991 10. G. Seeber. Satellite Geodesy, Walter de Gruyter, Berlin, 1993 11. E.W. Grafarend, F.W. Krumm, V.S. Schwarze (Eds.). Geodesy: The Challenge of the 3rd Millennium, Springer, Berlin, 2003 12. H. Stephani. Relativity: An Introduction to Special and General Relativity, Cambridge University Press, Cambridge, 2004 13. G. Schubert (Ed.). Treatise on Geophysics, Vol. 3: Geodesy, Elsevier, Oxford, 2007 14. D.D. McCarthy, P.K. -

Temperature Variations in the Dayside Magnetosheath and Their

Journal of Geophysical Research: Space Physics. RESEARCH ARTICLE 10.1002/2016JA023729. Temperature variations in the dayside magnetosheath and their dependence on ion-scale magnetic structures: THEMIS statistics and measurements by MMS. A. P. Dimmock1, A. Osmane1, T. I. Pulkkinen1, K. Nykyri2, and E. Kilpua3. 1Department of Electronics and Nanoengineering, School of Electrical Engineering, Aalto University, Espoo, Finland, 2Centre for Space and Atmospheric Research, Embry-Riddle Aeronautical University, Daytona Beach, Florida, USA, 3Department of Physics, University of Helsinki, Helsinki, Finland. Key Points: • A positive correlation exists between the amplitude of magnetic fluctuations and temperature variation • Temperature fluctuations are strongly associated with larger local in ion-scale magnetic structures than the fluid input at the shock • The amplitude of ion gyroscale fluctuations and in situ temperature variations are favored by the dawn flank. Abstract: The magnetosheath contains an array of waves, instabilities, and nonlinear magnetic structures which modify global plasma properties by means of various wave-particle interactions. The present work demonstrates that ion-scale magnetic field structures (∼0.2–0.5 Hz) observed in the dayside magnetosheath are statistically correlated to ion temperature changes on orders 10–20% of the background value. In addition, our statistical analysis implies that larger temperature changes are in equipartition to larger amplitude magnetic structures. This effect was more pronounced behind the quasi-parallel bow shock and during faster solar wind speeds. The study of two separate intervals suggests that this effect can result from both local and external drivers. Correspondence to: A. P. Dimmock, andrew.dimmock@aalto.fi. Citation: Dimmock, A. P., A. Osmane, -

United States Space Program Firsts

KSC Historical Report 18, KHR-18, Rev. December 2003. UNITED STATES SPACE PROGRAM FIRSTS: Robotic & Human Mission Firsts. Kennedy Space Center Library Archives, Kennedy Space Center, Florida.
Foreword: This summary of the United States space program firsts was compiled from various reference publications available in the Kennedy Space Center Library Archives. The list is divided into four sections: Robotic mission firsts, Human mission firsts, Space Shuttle mission firsts and Space Station mission firsts. Researched and prepared by: Barbara E. Green, Kennedy Space Center Library Archives, Kennedy Space Center, Florida 32899, phone: [321] 867-2407.
Contents: Robotic Mission Firsts (Satellites, missiles and rockets, 1950 - 1986); Early Human Spaceflight Firsts (Projects Mercury, Gemini, Apollo, Skylab and Apollo Soyuz Test Project, 1961 - 1975); Space Shuttle Firsts (Space Transportation System, 1977 - 2003); Space Station Firsts (International Space Station, 1998-2___); Bibliography.
Columns: DATE, ROBOTIC EVENTS, MISSION.
07/24/1950. First missile launched at Cape Canaveral. Mission: Bumper V-2.
08/20/1953. First Redstone missile was fired. Mission: Redstone 1.
12/17/1957. First long range weapon launched. Mission: Atlas ICBM.
01/31/1958. First satellite launched by U.S. Mission: Explorer 1.
10/11/1958. First observations of Earth’s and interplanetary magnetic field. Mission: Pioneer 1.
12/13/1958. First capsule containing living cargo, squirrel monkey, Gordo. Although not a NASA mission, data was utilized in Project Mercury planning. Mission: Bioflight 1.
12/18/1958. First communications satellite placed in space. Once in place, Brigadier General Goodpaster passed a message to President Eisenhower. Mission: Project Score.
02/17/1959. First fully instrumented Vanguard payload.