A History of Twentieth Century Translation Theory and Its Application for Bible Translation

Recommended publications

Curriculum Vitae: Prof. Dr. Işın Öner, 29 Mayıs Üniversitesi Edebiyat

CURRICULUM VITAE Prof. Dr. Işın Öner, 29 Mayıs Üniversitesi, Faculty of Letters, Department of Translation Studies. EDUCATION 1990 PhD, English Linguistics, Hacettepe Üniversitesi, Western Languages and Literatures. 1983-1986 Master's and doctoral studies, Turkish Language and Literature, Boğaziçi Üniversitesi (completed the coursework; did not write a thesis). 1983 MA, Linguistics, Boğaziçi Üniversitesi. 1981 BA, English Language and Literature, Boğaziçi Üniversitesi. ACADEMIC TITLES Associate professorship: 1991, Department of Translation and Interpreting, Boğaziçi Üniversitesi. Professorship: 1996, Department of Translation and Interpreting, Boğaziçi Üniversitesi. MASTER'S AND DOCTORAL THESES SUPERVISED Master's theses: 1998 M.A. Banu TELLİOĞLU, “Reflections of Gideon Toury’s Target-oriented Theory and Hans J. Vermeer’s Skopos Theory on Translation Criticism: A Meta-Critique”. İstanbul: B.Ü. Institute of Social Sciences, Department of Translation Studies. 1998 M.A. Ayşe Fitnat ECE, “From “Theory versus Practice” to “Theory in Practice””. İstanbul: B.Ü. Institute of Social Sciences, Department of Translation Studies. 1997 M.A. Ayşe Banu KARADAĞ, “From Impossibility to Possibility in Poetry Translation: A New Insight into Translation Criticism”. İstanbul: B.Ü. Institute of Social Sciences, Department of Translation Studies. 1996 M.A. Taner KARAKOÇ, “A Pathway Between Descriptive Translation Studies and Translation Didaction: with Metaphor Translation in Shakespeare’s Romeo and Juliet under Focus”. İstanbul: B.Ü. Institute of Social Sciences, Department of Translation Studies. Doctoral theses: 2001 PhD. Ebru DİRİKER, “(De.) (Re.) Contextualising Simultaneous Interpreting: Interpreters in the Ivory Tower”. İstanbul: B.Ü. Institute of Social Sciences, Department of Translation Studies. (Boğaziçi Üniversitesi 2001 Thesis Award in the Social Sciences) 1994 PhD. Ayşe Nihal AKBULUT, “Türk Yazın Dizgesinde Shakespeare’in Bir Yaz Gecesi Rüyası Çevirileri: Çeviri Kuramında Norm Kavramının Değerlendirilmesi” [Translations of Shakespeare’s A Midsummer Night’s Dream in the Turkish Literary System: An Evaluation of the Concept of Norm in Translation Theory]. İstanbul: İ.Ü.

Translation: an Advanced Resource Book

TRANSLATION Routledge Applied Linguistics is a series of comprehensive resource books, providing students and researchers with the support they need for advanced study in the core areas of English language and Applied Linguistics. Each book in the series guides readers through three main sections, enabling them to explore and develop major themes within the discipline: • Section A, Introduction, establishes the key terms and concepts and extends readers’ techniques of analysis through practical application. • Section B, Extension, brings together influential articles, sets them in context, and discusses their contribution to the field. • Section C, Exploration, builds on knowledge gained in the first two sections, setting thoughtful tasks around further illustrative material. This enables readers to engage more actively with the subject matter and encourages them to develop their own research responses. Throughout the book, topics are revisited, extended, interwoven and deconstructed, with the reader’s understanding strengthened by tasks and follow-up questions. Translation: • examines the theory and practice of translation from a variety of linguistic and cultural angles, including semantics, equivalence, functional linguistics, corpus and cognitive linguistics, text and discourse analysis, gender studies and post-colonialism • draws on a wide range of languages, including French, Spanish, German, Russian and Arabic • explores material from a variety of sources, such as the Internet, advertisements, religious texts, literary and technical texts • gathers together influential readings from the key names in the discipline, including James S. Holmes, George Steiner, Jean-Paul Vinay and Jean Darbelnet, Eugene Nida, Werner Koller and Ernst-August Gutt. Written by experienced teachers and researchers in the field, Translation is an essential resource for students and researchers of English language and Applied Linguistics as well as Translation Studies.

Valediction for Hans J. Vermeer

Valediction for Hans J. Vermeer delivered by Andreas F. Kelletat on the occasion of the urn interment on 20 February 2010 at the Bergfriedhof cemetery, Heidelberg Translated by Marina Dudenhöfer M.A. Dear members of the family, Dear friends and colleagues of Professor Hans Vermeer, It was only five weeks ago, on 17 January 2010, that Michael Schreiber, the Dean of our School of Translation and Interpreting Studies, Linguistics and Cultural Studies (FTSK) in Germersheim, handed over an honorary doctorate from our university to Hans Vermeer for his dedication to the establishment of a discipline in Translation Studies in a small ceremony at his house. The first steps for this award were taken in early 2009 before Hans Vermeer’s illness, and before he even knew he was ill. There was an official ceremony planned for autumn 2010 to coincide with Hans Vermeer’s 80th birthday on 24 September 2010, but it did not take place. Instead of celebrating a joyful birthday and award ceremony amongst students, friends and colleagues, we are gathered in this cemetery today to inter the ashes of the dearly departed. The family of my dear colleague Vermeer asked me to speak to you on this solemn occasion and it is my honour to remember with you today an extraordinary researcher, academic and teacher. Many of you gathered here do not need my help to remember him, as you were part of his life as a researcher and teacher, colleague and friend. Several of you knew him for much longer and were closer to him than I myself.

Book of Abstracts Translata 2017 Scientific Committee

Translata III Book of Abstracts Innsbruck, 7 – 9 December, 2017 TRANSLATA III Redefining and Refocusing Translation and Interpreting Studies Book of Abstracts of the 3rd International Conference on Translation and Interpreting Studies December 7th – 9th, 2017 University of Innsbruck Department of Translation Studies Edited by: Peter Sandrini, Department of Translation Studies, University of Innsbruck Revised by: Sandra Reiter, Department of Translation Studies, University of Innsbruck ISBN: 978-3-903030-54-1 Publication date: December 2017 Published by: STUDIA Universitätsverlag, Herzog-Siegmund-Ufer 15, A-6020 Innsbruck Printing and binding: STUDIA Universitätsbuchhandlung und –verlag License: The Book of Abstracts of the 3rd Translata Conference is published under the Creative Commons Attribution-ShareAlike 4.0 International License (https://creativecommons.org/licenses) Disclaimer: This publication has been reproduced directly from author-prepared submissions. The authors are responsible for the choice, presentation and wording of views contained in this publication and for opinions expressed therein, which are not necessarily those of the University of Innsbruck, the organisers or the editor. Edited with: LibreOffice (libreoffice.org) and tuxtrans (tuxtrans.org) Scientific committee Local (in alphabetical order): Erica Autelli, Mascha Dabić, Maria Koliopoulou, Martina Mayer, Alena Petrova, Peter Sandrini, Astrid Schmidhofer, Andy Stauder, Pius ten Hacken, Michael Ustaszewski

Interview with Mary Snell-Hornby Autor(Es): Hornby, Mary Snell

Interview with Mary Snell-Hornby Author(s): Hornby, Mary Snell; Althoff, Gustavo; Leal, Alice Published by: Universidade Federal de Santa Catarina Persistent URL: URI:http://hdl.handle.net/10316.2/33059 Accessed: 24-Sep-2021 05:11:42 Browsing, consulting and downloading the titles held in the digital libraries UC Digitalis, UC Pombalina and UC Impactum presupposes full and unreserved acceptance of the Terms and Conditions of Use of these digital libraries, available at https://digitalis.uc.pt/pt-pt/termos. As set out in those Terms and Conditions of Use, downloading restricted-access titles requires a valid authorization license, and the user must access the document(s) from an IP address of the institution holding that license. Users may download for personal use only; use of the downloaded title(s) for any other purpose, in particular a commercial one, requires authorization from the author or publisher of the work. Since all works in UC Digitalis are protected by the Code of Copyright and Related Rights and other applicable legislation, any copy of this document, partial or total, where legally permitted, must contain or be accompanied by this notice. impactum.uc.pt digitalis.uc.pt INTERVIEW WITH MARY SNELL-HORNBY MARY SNELL-HORNBY / GUSTAVO ALTHOFF & ALICE LEAL Since 1989 Mary Snell-Hornby has been Professor of Translation Studies at the University of Vienna. She was a founding member of the European Society for Translation Studies (EST) and its first President (from 1992 to 1998); she was on the Executive Board of the European Society for Lexicography (EURALEX) from 1986 to 1992 and was President of the Vienna Language Society from 1992 to 1994.

Dynamic Equivalence: Nida's Perspective and Beyond

Dynamic Equivalence: Nida’s Perspective and Beyond Dohun Kim Translation is an interlingual and intercultural communication, in which correspondence at the level of formal and meaningful structures does not necessarily lead to a successful communication: a secondary communication or even a communication breakdown may occur due to distinct historical-cultural contexts. Such recognition led Nida to put forward Dynamic Equivalence, which brings the receptor to the centre of the communication. The concept triggered the focus shift from the form of the message to the response of the receptor. Further, its theoretical basis went beyond applying the research results of linguistics to the practice of translation; it founded a linguistic theory of translation for researchers and provided a practical manual of translation for translators. While Nida’s claims on the priority of Dynamic Equivalence over Formal Correspondence have been widely accepted and cited by translation researchers and practitioners, the mechanism and validity of Nida’s theoretical construct of the translation process have been neither addressed nor updated sufficiently. Hence, this paper sets out to revisit Nida and explain in detail what his theoretical background was, and how it led him to Formal Correspondence and Dynamic Equivalence. Keywords: deep structure, dynamic equivalence, kernel, receptor response, translation model We must analyze the transmission of a message in terms of a dynamic dimension. This analysis is especially important for translating, since the production

Interview with José Lambert

INTERVIEW WITH JOSÉ LAMBERT JOSÉ LAMBERT José Lambert is a professor and scholar in Comparative Literature and Translation Studies, and one of the founders of CETRA – Centre for Translation Studies at the University of Leuven, Belgium. Initially, when it was created in 1989, what later became CETRA consisted of a special research program whose purpose was the promotion of high-level research in Translation Studies, a perennial goal still pursued to this day. Nowadays, José Lambert serves as its honorary chairman. He is also a co-editor of Target – International Journal of Translation Studies and the author of more than 100 articles. Additionally, he co-edited such volumes as Literature and Translation: New Perspectives in Literary Studies (1978), Translation in the Development of Literatures – Les Traductions dans le développement des Littératures (1993), Translation and Modernization (1995), Translation Studies in Hungary (1996) and Crosscultural and Linguistic Perspectives on European Open and Distance Learning (1998). He has been a guest professor at many universities around the world such as Penn University, New York University, University of Alberta, University of Amsterdam and the Sorbonne, having lectured in many others as well. Starting in 2010/2 he will serve a 2-year tenure as a guest professor at Universidade Federal de Santa Catarina. The interview here presented is an excerpt of a larger project still in the making and with a broader agenda. In its broader version the project aims at collecting José Lambert’s opinions and views on a variety of subjects related to Translation Studies, from its history to its many conceptual and theoretical debates.

Interview with Mary Snell-Hornby

INTERVIEW WITH MARY SNELL-HORNBY MARY SNELL-HORNBY / GUSTAVO ALTHOFF & ALICE LEAL Since 1989 Mary Snell-Hornby has been Professor of Translation Studies at the University of Vienna. She was a founding member of the European Society for Translation Studies (EST) and its first President (from 1992 to 1998); she was on the Executive Board of the European Society for Lexicography (EURALEX) from 1986 to 1992 and was President of the Vienna Language Society from 1992 to 1994. From 1997 to 2010 she was an Honorary Professor of the University of Warwick (UK). In May 2010 she was awarded an Honorary Doctorate of the University of Tampere (Finland) for her contribution to the discipline of Translation Studies. Before her appointment in Vienna she worked in German and English Language and Literature, specializing in translation, contrastive semantics and lexicography. She has published widely in fields ranging from Language Teaching and Literary Studies to Linguistics, but particularly in Translation Studies, and is a member of a number of advisory boards and the General Editor of the Series Studien zur Translation (Stauffenburg Verlag, Tübingen). She has worked as a visiting professor at many universities around Europe and other parts of the world, including Hong Kong, the Philippines, Thailand and Brazil (Fortaleza, UFSC Florianópolis). In the present interview, the vast majority of the questions are focused on the interviewee's 2006 book The Turns of Translation Studies: New Paradigms or Shifting Viewpoints?, as well as on some of the remarks made by José Lambert in his interview to Scientia Traductionis in 2010 (issue number 7).

The Impact of Translation Strategies on Second

THE IMPACT OF TRANSLATION STRATEGIES ON SECOND LANGUAGE WRITING A dissertation submitted to Kent State University in partial fulfillment of the requirements for the degree of Doctor of Philosophy by Carine Graff, August 2018. © Copyright. All rights reserved, except for previously published materials. Dissertation written by Carine Graff: Licence, University of Mulhouse, 1999; Maîtrise, University of Mulhouse, France, 2001; M.A., University of Wisconsin-Milwaukee, 2011; Ph.D., Kent State University, 2018. Approved by Richard Washbourne, Chair, Doctoral Dissertation Committee; Erik Angelone, Brian Baer, Sarah Rilling and Sara Newman, Members, Doctoral Dissertation Committee. Accepted by Keiran Dunne, Chair, Department of Modern and Classical Language Studies; James L. Blank, Dean, College of Arts and Sciences. TABLE OF CONTENTS: Table of Contents; List of Figures; List of Tables; Acknowledgements; Chapter 1: Introduction; Research Problem

“The Dynamic Equivalence Caper”—A Response

Wendland and Pattemore, “Dynamic,” OTE 26 (2013): 471-490 “The Dynamic Equivalence Caper”—A Response ERNST WENDLAND (STELLENBOSCH UNIVERSITY) AND STEPHEN PATTEMORE (UNITED BIBLE SOCIETIES) ABSTRACT This article overviews and responds to Roland Boer’s recent wide-ranging critique of Eugene A. Nida’s theory and practice of “dynamic equivalence” in Bible translating. [1] Boer’s narrowly focused, rather insufficiently researched evaluation of Nida’s work suffers from a lack of both historical perspective and current awareness of what many more recent translation scholars and practitioners have been writing for the past several decades. Our rejoinder discusses some of the major misperceptions and misleading assertions that appear sequentially in the various sections of Boer’s article with the aim of setting the record straight, or at least of framing the assessment of modern Bible translation endeavors and goals in a more positive and accurate light. A INTRODUCTION: A “CAPER”? Why does Boer classify the dynamic equivalence (DE) approach as a “caper”? According to the Oxford Dictionary, a “caper” refers to (1) “a playful skipping [1] Roland Boer, “The Dynamic Equivalence Caper,” in Ideology, Culture, and Translation (ed. Scott S. Elliott and Roland Boer; Atlanta: Society of Biblical Literature, 2012), 13-23. Boer’s article introduces and potentially negatively colors the collection of essays in which it appears, most of which derive from several meetings of the “Ideology, Culture, and Translation” group of the SBL, from 2005 to the present (Boer, “Dynamic Equivalence,” 1, 4). After a short introduction by the editors, two larger sections follow: the first more theoretical (“Exploring the Intersection of Translation Studies and Critical Theory in Biblical Studies”), the second dealing with a number of case studies (“Sites in Translation”).

Bible Translation in the UBS

성경원문연구 제14호 (Journal of Biblical Text Research, No. 14) Bible Translation in the UBS By Aloo Osotsi Mojola* I. Introducing the Nida & Post Nida Perspectives: Third Presentation Introduction: Bible Translation in the UBS in the 20th Century was characterized by the Nida perspective. Eric M. North's brilliant appreciation of Eugene Nida's life and contributions, written to mark his 60th birthday in 1974, is a good place to begin. (See Matthew Black and William Smalley, eds. On Language, Culture and Religion: In Honor of Eugene A. Nida, The Hague: Mouton, 1974: vii-xxvii). Nida's interest, labours and contribution to Bible translation began in the late 1930's and continue to this day, albeit in a limited way. Nonetheless his writings and ideas dominated the field for the rest of the century. We are all to various extents indebted to him. 1. Just to name a few, Eugene Nida's key contributions to our field: a) He was a trailblazer and pioneer through the medium of his groundbreaking books, e.g. Bible Translating, ABS, 1947; Toward a Science of Translating, E. J. Brill, 1964; The Theory and Practice of Translating (with Charles Taber), E. J. Brill, 1969 (Translation Studies); Customs and Cultures, Harper & Row, 1954 (Cross Cultural Studies); Message and Mission, Harper & Row, 1960 (Communication Studies); Componential Analysis of Meaning, Mouton, 1975, and Greek Dictionary based on Semantic Domains (with Johannes Louw) (Semantics and Lexicography), etc. b) He pioneered, through his global travels and field visits to translation teams in remote locations worldwide, much of what UBS translation consultants are still doing today. * United Bible Societies, Nairobi, Kenya. Presentation to be given at the Korean Translation Workshop in Seoul, Korea, February 2003.

Dynamic Equivalence and Formal Equivalence

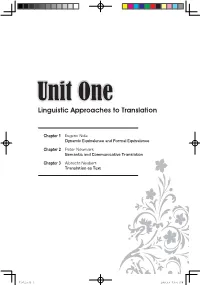

Unit One: Linguistic Approaches to Translation. Chapter 1 Eugene Nida: Dynamic Equivalence and Formal Equivalence. Chapter 2 Peter Newmark: Semantic and Communicative Translation. Chapter 3 Albrecht Neubert: Translation as Text. Selected Readings of Contemporary Western Translation Theories. Introduction Translation was not investigated scientifically until the 1960s in the Western history of translation, as a great number of scholars and translators believed that translation was an art or a skill. When the American linguist and translation theorist E. A. Nida was working on his Toward a Science of Translating (1964), he argued that the process of translation could be described in an objective and scientific manner, “for just as linguistics may be classified as a descriptive science, so the transference of a message from one language to another is likewise a valid subject for scientific description” (1964:3). Hence Nida in his work makes full use of new developments in linguistics, semantics, information theory, communication theory and sociosemiotics in an attempt to explore the various linguistic and cultural factors involved in the process of translating. For instance, when discussing the process of translation, Nida adopts the useful elements of the transformational generative grammar put forward by the American linguist Noam Chomsky, suggesting that it is more effective to transfer the meaning from the source language to the receptor language on the kernel level, a key concept in Chomsky’s theory. Nida’s theory of dynamic equivalence, which is introduced in this unit, also approaches translation from a sociolinguistic perspective.