Experimental Kernel-Based Quantum Machine Learning in Finite Feature

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Theory and Application of Fermi Pseudo-Potential in One Dimension

Theory and application of Fermi pseudo-potential in one dimension. Citation: Wu, Tai Tsun, and Ming Lun Yu. 2002. “Theory and Application of Fermi Pseudo-Potential in One Dimension.” Journal of Mathematical Physics 43 (12): 5949–76. https://doi.org/10.1063/1.1519940. Citable link: http://nrs.harvard.edu/urn-3:HUL.InstRepos:41555821. Terms of Use: This article was downloaded from Harvard University’s DASH repository, and is made available under the terms and conditions applicable to Other Posted Material, as set forth at http://nrs.harvard.edu/urn-3:HUL.InstRepos:dash.current.terms-of-use#LAA. CERN-TH/2002-097; arXiv:math-ph/0208030v1, 21 Aug 2002. Tai Tsun Wu, Gordon McKay Laboratory, Harvard University, Cambridge, Massachusetts, U.S.A., and Theoretical Physics Division, CERN, Geneva, Switzerland, and Ming Lun Yu, 41019 Pajaro Drive, Fremont, California, U.S.A. Abstract: The theory of interaction at one point is developed for the one-dimensional Schrödinger equation. In analogy with the three-dimensional case, the resulting interaction is referred to as the Fermi pseudo-potential. The dominant feature of this one-dimensional problem comes from the fact that the real line becomes disconnected when one point is removed. The general interaction at one point is found to be the sum of three terms, the well-known delta-function potential and two Fermi pseudo-potentials, one odd under space reflection and the other even.

Simulating Quantum Field Theory with a Quantum Computer

Simulating quantum field theory with a quantum computer. John Preskill, Lattice 2018, 28 July 2018. This talk has two parts: (1) Near-term prospects for quantum computing. (2) Opportunities in quantum simulation of quantum field theory. Exascale digital computers will advance our knowledge of QCD, but some challenges will remain, especially concerning real-time evolution and properties of nuclear matter and quark-gluon plasma at nonzero temperature and chemical potential. Digital computers may never be able to address these (and other) problems; quantum computers will solve them eventually, though I’m not sure when. The physics payoff may still be far away, but today’s research can hasten the arrival of a new era in which quantum simulation fuels progress in fundamental physics. Frontiers of Physics: short distance (Higgs boson, neutrino masses, supersymmetry, quantum gravity, string theory); long distance (large scale structure, cosmic microwave background, dark matter, dark energy, gravitational waves); complexity (“More is different”, many-body entanglement, phases of quantum matter, quantum computing, quantum spacetime). A quantum computer can simulate efficiently any physical process that occurs in Nature. (Maybe. We don’t actually know for sure.) [Image labels: particle collision, molecular chemistry, entangled electrons, superconductor, black hole, early universe.] Two fundamental ideas: (1) Quantum complexity: why we think quantum computing is powerful. (2) Quantum error correction: why we think quantum computing is scalable. A complete description of a typical quantum state of just 300 qubits requires more bits than the number of atoms in the visible universe. Why we think quantum computing is powerful: We know examples of problems that can be solved efficiently by a quantum computer, where we believe the problems are hard for classical computers.

Quantum Machine Learning: Benefits and Practical Examples

Quantum Machine Learning: Benefits and Practical Examples. Frank Phillipson1[0000-0003-4580-7521], 1 TNO, Anna van Buerenplein 1, 2595 DA Den Haag, The Netherlands, [email protected]. Abstract. A quantum computer that is useful in practice is expected to be developed in the next few years. An important application is expected to be machine learning, where benefits are expected on run time, capacity and learning efficiency. In this paper, these benefits are presented and for each benefit an example application is presented. A quantum hybrid Helmholtz machine uses quantum sampling to improve run time, a quantum Hopfield neural network shows an improved capacity and a variational quantum circuit based neural network is expected to deliver a higher learning efficiency. Keywords: Quantum Machine Learning, Quantum Computing, Near Future Quantum Applications. 1 Introduction. Quantum computers make use of quantum-mechanical phenomena, such as superposition and entanglement, to perform operations on data [1]. Where classical computers require the data to be encoded into binary digits (bits), each of which is always in one of two definite states (0 or 1), quantum computation uses quantum bits, which can be in superpositions of states. These computers would theoretically be able to solve certain problems much more quickly than any classical computer that uses even the best currently known algorithms. Examples are integer factorization using Shor's algorithm or the simulation of quantum many-body systems. This benefit is also called ‘quantum supremacy’ [2], which only recently has been claimed for the first time [3]. There are two different quantum computing paradigms.

COVID-19 Detection on IBM Quantum Computer with Classical-Quantum Transfer Learning

medRxiv preprint doi: https://doi.org/10.1101/2020.11.07.20227306; this version posted November 10, 2020. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted medRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY-NC-ND 4.0 International license. Turk J Elec Eng & Comp Sci, © TÜBİTAK, doi:10.3906/elk- COVID-19 detection on IBM quantum computer with classical-quantum transfer learning. Erdi ACAR1*, İhsan YILMAZ2. 1Department of Computer Engineering, Institute of Science, Çanakkale Onsekiz Mart University, Çanakkale, Turkey; 2Department of Computer Engineering, Faculty of Engineering, Çanakkale Onsekiz Mart University, Çanakkale, Turkey. Received: .201 Accepted/Published Online: .201 Final Version: ..201 Abstract: Diagnosing infected patients as soon as possible during the coronavirus 2019 (COVID-19) outbreak, which has been declared a pandemic by the World Health Organization (WHO), is extremely important. Experts recommend CT imaging as a diagnostic tool because of the weak points of the nucleic acid amplification test (NAAT). In this study, the detection of COVID-19 from CT images, which give the most accurate response in a short time, was investigated on classical computers and, for the first time, on quantum computers. Using the quantum transfer learning method, we experimentally perform COVID-19 detection on different real quantum processors of IBM (IBMQx2, IBMQ-London and IBMQ-Rome), as well as on different simulators (Pennylane, Qiskit-Aer and Cirq). Using a small data set of 126 COVID-19 and 100 Normal CT images, we obtained a positive or negative classification of COVID-19 with 90% success on classical computers, while we achieved a high success rate of 94-100% on quantum computers.

A Theoretical Study of Quantum Memories in Ensemble-Based Media

A theoretical study of quantum memories in ensemble-based media. Karl Bruno Surmacz, St. Hugh's College, Oxford. A thesis submitted to the Mathematical and Physical Sciences Division for the degree of Doctor of Philosophy in the University of Oxford, Michaelmas Term, 2007. Atomic and Laser Physics, University of Oxford. Abstract: The transfer of information from flying qubits to stationary qubits is a fundamental component of many quantum information processing and quantum communication schemes. The use of photons, which provide a fast and robust platform for encoding qubits, in such schemes relies on a quantum memory in which to store the photons, and retrieve them on-demand. Such a memory can consist of either a single absorber, or an ensemble of absorbers, with a Λ-type level structure, as well as other control fields that affect the transfer of the quantum signal field to a material storage state. Ensembles have the advantage that the coupling of the signal field to the medium scales with the square root of the number of absorbers. In this thesis we theoretically study the use of ensembles of absorbers for a quantum memory. We characterize a general quantum memory in terms of its interaction with the signal and control fields, and propose a figure of merit that measures how well such a memory preserves entanglement. We derive an analytical expression for the entanglement fidelity in terms of fluctuations in the stochastic Hamiltonian parameters, and show how this figure could be measured experimentally.

Operation-Induced Decoherence by Nonrelativistic Scattering from a Quantum Memory

INSTITUTE OF PHYSICS PUBLISHING, JOURNAL OF PHYSICS A: MATHEMATICAL AND GENERAL. J. Phys. A: Math. Gen. 39 (2006) 11567–11581, doi:10.1088/0305-4470/39/37/015. Operation-induced decoherence by nonrelativistic scattering from a quantum memory. D Margetis1 and J M Myers2. 1 Department of Mathematics, and Institute for Physical Science and Technology, University of Maryland, College Park, MD 20742, USA; 2 Division of Engineering and Applied Sciences, Harvard University, Cambridge, MA 02138, USA. E-mail: [email protected] and [email protected]. Received 16 June 2006, in final form 7 August 2006. Published 29 August 2006. Online at stacks.iop.org/JPhysA/39/11567. Abstract: Quantum computing involves transforming the state of a quantum memory. We view this operation as performed by transmitting nonrelativistic (massive) particles that scatter from the memory. By using a system of (1+1)-dimensional, coupled Schrödinger equations with point interaction and narrow-band incoming pulse wavefunctions, we show how the outgoing pulse becomes entangled with a two-state memory. This effect necessarily induces decoherence, i.e., deviations of the memory content from a pure state. We describe incoming pulses that minimize this decoherence effect under a constraint on the duration of their interaction with the memory. PACS numbers: 03.65.−w, 03.67.−a, 03.65.Nk, 03.65.Yz, 03.67.Lx. 1. Introduction. Quantum computing [1–4] relies on a sequence of operations, each of which transforms a pure quantum state to, ideally, another pure state. These states describe a physical system, usually called memory. A central problem in quantum computing is decoherence, in which the pure state degrades to a mixed state, with deleterious effects for the computation.

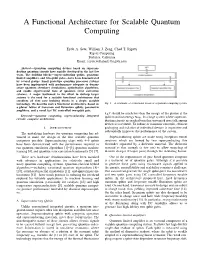

A Functional Architecture for Scalable Quantum Computing

A Functional Architecture for Scalable Quantum Computing. Eyob A. Sete, William J. Zeng, Chad T. Rigetti, Rigetti Computing, Berkeley, California. Email: feyob,will,[email protected]. Abstract: Quantum computing devices based on superconducting quantum circuits have rapidly developed in the last few years. The building blocks (superconducting qubits, quantum-limited amplifiers, and two-qubit gates) have been demonstrated by several groups. Small prototype quantum processor systems have been implemented with performance adequate to demonstrate quantum chemistry simulations, optimization algorithms, and enable experimental tests of quantum error correction schemes. A major bottleneck in the effort to develop larger systems is the need for a scalable functional architecture that combines all the core building blocks in a single, scalable technology. We describe such a functional architecture, based on a planar lattice of transmon and fluxonium qubits, parametric amplifiers, and a novel fast DC controlled two-qubit gate. Keywords: quantum computing, superconducting integrated circuits, computer architecture. I. INTRODUCTION. The underlying hardware for quantum computing has advanced to make the design of the first scalable quantum computers possible. Superconducting chips with 4–9 qubits have been demonstrated with the performance required to … Fig. 1. A schematic of a functional layout of a quantum computing system. … kBT should be much less than the energy of the photon at the qubit transition energy ħω01. In a large system where superconducting circuits are packed together, unwanted crosstalk among devices is inevitable. To reduce or minimize crosstalk, efficient packaging and isolation of individual devices is imperative and substantially improves the performance of the system. Superconducting qubits are made using Josephson tunnel junctions which are formed by two superconducting thin electrodes separated by a dielectric material.

High Energy Physics Quantum Computing

High Energy Physics Quantum Computing

- Quantum Information Science in High Energy Physics at the Large Hadron Collider. PI: O.K. Baker, Yale University
- Unraveling the quantum structure of QCD in parton shower Monte Carlo generators. PI: Christian Bauer, Lawrence Berkeley National Laboratory. Co-PIs: Wibe de Jong and Ben Nachman (LBNL)
- The HEP.QPR Project: Quantum Pattern Recognition for Charged Particle Tracking. PI: Heather Gray, Lawrence Berkeley National Laboratory. Co-PIs: Wahid Bhimji, Paolo Calafiura, Steve Farrell, Wim Lavrijsen, Lucy Linder, Illya Shapoval (LBNL)
- Neutrino-Nucleus Scattering on a Quantum Computer. PI: Rajan Gupta, Los Alamos National Laboratory. Co-PIs: Joseph Carlson (LANL); Alessandro Roggero (UW), Gabriel Purdue (FNAL)
- Particle Track Pattern Recognition via Content-Addressable Memory and Adiabatic Quantum Optimization. PI: Lauren Ice, Johns Hopkins University. Co-PIs: Gregory Quiroz (Johns Hopkins); Travis Humble (Oak Ridge National Laboratory)
- Towards practical quantum simulation for High Energy Physics. PI: Peter Love, Tufts University. Co-PIs: Gary Goldstein, Hugo Beauchemin (Tufts)
- High Energy Physics (HEP) ML and Optimization Go Quantum. PI: Gabriel Perdue, Fermilab. Co-PIs: Jim Kowalkowski, Stephen Mrenna, Brian Nord, Aris Tsaris (Fermilab); Travis Humble, Alex McCaskey (Oak Ridge National Lab)
- Quantum Machine Learning and Quantum Computation Frameworks for HEP (QMLQCF). PI: M. Spiropulu, California Institute of Technology. Co-PIs: Panagiotis Spentzouris (Fermilab), Daniel Lidar (USC), Seth Lloyd (MIT)
- Quantum Algorithms for Collider Physics. PI: Jesse Thaler, Massachusetts Institute of Technology. Co-PI: Aram Harrow, Massachusetts Institute of Technology
- Quantum Machine Learning for Lattice QCD. PI: Boram Yoon, Los Alamos National Laboratory. Co-PIs: Nga T. T. Nguyen, Garrett Kenyon, Tanmoy Bhattacharya and Rajan Gupta (LANL)

Quantum Information Science in High Energy Physics at the Large Hadron Collider O.K.

Valleytronics: Opportunities, Challenges, and Paths Forward

REVIEW, Valleytronics, www.small-journal.com. Valleytronics: Opportunities, Challenges, and Paths Forward. Steven A. Vitale,* Daniel Nezich, Joseph O. Varghese, Philip Kim, Nuh Gedik, Pablo Jarillo-Herrero, Di Xiao, and Mordechai Rothschild. A lack of inversion symmetry coupled with the presence of time-reversal symmetry endows 2D transition metal dichalcogenides with individually addressable valleys in momentum space at the K and K′ points in the first Brillouin zone. This valley addressability opens up the possibility of using the momentum state of electrons, holes, or excitons as a completely new paradigm in information processing. The opportunities and challenges associated with manipulation of the valley degree of freedom for practical quantum and classical information processing applications were analyzed during the 2017 Workshop on Valleytronic Materials, Architectures, and Devices; this Review presents the major findings of the workshop. 1. Background. The Valleytronics Materials, Architectures, and Devices Workshop, sponsored by the MIT Lincoln Laboratory Technology … The workshop gathered the leading researchers in the field to present their latest work and to participate in honest and open discussion about the opportunities and challenges of developing applications of valleytronic technology. Three interactive working sessions were held, which tackled difficult topics ranging from potential applications in information processing and optoelectronic devices to identifying the most important unresolved physics questions. The primary product of the workshop is this article that aims to inform the reader on potential benefits of valleytronic devices, on the state-of-the-art in valleytronics research, and on the challenges to be overcome. We are hopeful this document will also serve to focus future government-sponsored research programs in fruitful directions.

Fundamental Aspects of Solving Quantum Problems with Machine Learning

Fundamental aspects of solving quantum problems with machine learning. Hsin-Yuan (Robert) Huang, Richard Kueng, Michael Broughton, Masoud Mohseni, Ryan Babbush, Sergio Boixo, Hartmut Neven, Jarrod McClean and John Preskill. Google AI Quantum; Institute of Quantum Information and Matter (IQIM), Caltech; Johannes Kepler University Linz. [1] Information-theoretic bounds on quantum advantage in machine learning, arXiv:2101.02464. [2] Power of data in quantum machine learning, arXiv:2011.01938. [3] Provable machine learning algorithms for quantum many-body problems, in preparation. Motivation: Machine learning (ML) has received great attention in the quantum community these days. Classical ML for quantum physics/chemistry. The goal: solve challenging quantum many-body problems better than traditional classical algorithms. Enhancing ML with quantum computers. The goal: design quantum ML algorithms that yield significant advantage over any classical algorithm. “Solving the quantum many-body problem with artificial neural networks.” Science 355.6325 (2017): 602-606. “Learning phase transitions by confusion.” Nature Physics 13.5 (2017): 435-439. “Supervised learning with quantum-enhanced feature spaces.” Nature 567.7747 (2019): 209-212. Motivation: Yet, many fundamental questions remain to be answered. Classical ML for quantum physics/chemistry. The question: how can ML be more useful than non-ML algorithms? Enhancing ML with quantum computers. The question: what are the advantages of quantum ML in general? General Setting: In this work, we focus on training an ML model to predict x ↦ f_ℰ(x) = Tr(O ℰ(|x⟩⟨x|)), where x is a classical input, ℰ is an unknown CPTP map, and O is an observable.

Future Directions of Quantum Information Processing: A Workshop on the Emerging Science and Technology of Quantum Computation, Communication, and Measurement

Future Directions of Quantum Information Processing: A Workshop on the Emerging Science and Technology of Quantum Computation, Communication, and Measurement. Seth Lloyd, Massachusetts Institute of Technology; Dirk Englund, Massachusetts Institute of Technology. Workshop funded by the Basic Research Office, Office of the Assistant Secretary of Defense for Research & Engineering. This report does not necessarily reflect the policies or positions of the US Department of Defense. Prepared by Kate Klemic Ph.D. and Jeremy Zeigler, Virginia Tech Applied Research Corporation. Preface: Over the past century, science and technology have brought remarkable new capabilities to all sectors of the economy; from telecommunications, energy, and electronics to medicine, transportation and defense. Technologies that were fantasy decades ago, such as the internet and mobile devices, now inform the way we live, work, and interact with our environment. Key to this technological progress is the capacity of the global basic research community to create new knowledge and to develop new insights in science, technology, and engineering. Understanding the trajectories of this fundamental research, within the context of global challenges, empowers stakeholders to identify and seize potential opportunities. The Future Directions Workshop series, sponsored by the Basic Research Office of the Office of the Assistant Secretary of Defense for Research and Engineering, seeks to examine emerging research and engineering areas that are most likely to transform future technology capabilities. These workshops gather distinguished academic and industry researchers from the world’s top research institutions to engage in an interactive dialogue about the promises and challenges of these emerging basic research areas and how they could impact future capabilities.

Quantum Secure Direct Communication with Quantum Memory

Quantum secure direct communication with quantum memory Wei Zhang1,3, Dong-Sheng Ding1,3*, Yu-Bo Sheng2, Lan Zhou2, Bao-Sen Shi1,3† and Guang-Can Guo1,3 1Key Laboratory of Quantum Information, Chinese Academy of Sciences, University of Science and Technology of China, Hefei, Anhui 230026, China 2Key Laboratory of Broadband Wireless Communication and Sensor Network Technology, Nanjing University of Posts and Telecommunications, Ministry of Education, Nanjing 210003, China 3Synergetic Innovation Center of Quantum Information and Quantum Physics, University of Science and Technology of China, Hefei, Anhui 230026, China Corresponding authors:* [email protected] [email protected] †[email protected] Quantum communication provides an absolute security advantage, and it has been widely developed over the past 30 years. As an important branch of quantum communication, quantum secure direct communication (QSDC) promotes high security and instantaneousness in communication through directly transmitting messages over a quantum channel. The full implementation of a quantum protocol always requires the ability to control the transfer of a message effectively in the time domain; thus, it is essential to combine QSDC with quantum memory to accomplish the communication task. In this paper, we report the experimental demonstration of QSDC with state-of-the-art atomic quantum memory for the first time in principle. We used the polarization degrees of freedom of photons as the information carrier, and the fidelity of entanglement decoding was verified as approximately 90%. Our work completes a fundamental step toward practical QSDC and demonstrates a potential application for long-distance quantum communication in a quantum network. The importance of information and communication security is increasing rapidly as the Internet becomes indispensable in modern society.