High Energy Physics Quantum Computing

Recommended publications

Simulating Quantum Field Theory with a Quantum Computer

Simulating quantum field theory with a quantum computer. John Preskill, Lattice 2018, 28 July 2018. This talk has two parts: (1) near-term prospects for quantum computing; (2) opportunities in quantum simulation of quantum field theory. Exascale digital computers will advance our knowledge of QCD, but some challenges will remain, especially concerning real-time evolution and properties of nuclear matter and quark-gluon plasma at nonzero temperature and chemical potential. Digital computers may never be able to address these (and other) problems; quantum computers will solve them eventually, though I’m not sure when. The physics payoff may still be far away, but today’s research can hasten the arrival of a new era in which quantum simulation fuels progress in fundamental physics. Frontiers of Physics: short distance (Higgs boson, neutrino masses, supersymmetry, quantum gravity, string theory); long distance (large scale structure, cosmic microwave background, dark matter, dark energy, gravitational waves); complexity (“More is different”, many-body entanglement, phases of quantum matter, quantum computing, quantum spacetime). [Slide images: particle collision, molecular chemistry, entangled electrons, superconductor, black hole, early universe.] A quantum computer can simulate efficiently any physical process that occurs in Nature. (Maybe. We don’t actually know for sure.) Two fundamental ideas: (1) quantum complexity, why we think quantum computing is powerful; (2) quantum error correction, why we think quantum computing is scalable. A complete description of a typical quantum state of just 300 qubits requires more bits than the number of atoms in the visible universe. Why we think quantum computing is powerful: we know examples of problems that can be solved efficiently by a quantum computer, where we believe the problems are hard for classical computers.
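
The 300-qubit statement in the snippet above can be checked with a few lines of arithmetic. The sketch below is illustrative only: the figure of roughly 10^80 atoms in the visible universe is a commonly quoted order-of-magnitude estimate assumed here, not a number taken from the talk, and `amplitude_count` is a hypothetical helper name.

```python
# Assumption: ~10^80 atoms in the visible universe (order-of-magnitude estimate,
# not a value given in the talk).
ATOMS_IN_VISIBLE_UNIVERSE = 10**80


def amplitude_count(n_qubits: int) -> int:
    """Number of complex amplitudes in a general n-qubit pure state (2^n)."""
    return 2**n_qubits


n = 300
amplitudes = amplitude_count(n)

# Python integers are arbitrary precision, so the comparison is exact.
print(f"2^{n} has {len(str(amplitudes))} decimal digits")
print("Exceeds assumed atom count:", amplitudes > ATOMS_IN_VISIBLE_UNIVERSE)
```

Even before counting the bits needed to store each amplitude, the number of amplitudes alone exceeds the assumed atom count by about ten orders of magnitude.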

Quantum Machine Learning: Benefits and Practical Examples

Quantum Machine Learning: Benefits and Practical Examples. Frank Phillipson, TNO, Anna van Buerenplein 1, 2595 DA Den Haag, The Netherlands, [email protected]. Abstract. A quantum computer that is useful in practice is expected to be developed in the next few years. An important application is expected to be machine learning, where benefits are expected on run time, capacity and learning efficiency. In this paper, these benefits are presented and for each benefit an example application is presented. A quantum hybrid Helmholtz machine uses quantum sampling to improve run time, a quantum Hopfield neural network shows an improved capacity, and a variational quantum circuit based neural network is expected to deliver a higher learning efficiency. Keywords: Quantum Machine Learning, Quantum Computing, Near Future Quantum Applications. 1 Introduction. Quantum computers make use of quantum-mechanical phenomena, such as superposition and entanglement, to perform operations on data [1]. Where classical computers require the data to be encoded into binary digits (bits), each of which is always in one of two definite states (0 or 1), quantum computation uses quantum bits, which can be in superpositions of states. These computers would theoretically be able to solve certain problems much more quickly than any classical computer that uses even the best currently known algorithms. Examples are integer factorization using Shor's algorithm or the simulation of quantum many-body systems. This benefit is also called ‘quantum supremacy’ [2], which only recently has been claimed for the first time [3]. There are two different quantum computing paradigms.

arXiv:1912.08821v2 [hep-ph] 27 Jul 2020

MIT-CTP/5157 FERMILAB-PUB-19-628-A. A Systematic Study of Hidden Sector Dark Matter: Application to the Gamma-Ray and Antiproton Excesses. Dan Hooper,1, 2, 3 Rebecca K. Leane,4 Yu-Dai Tsai,1 Shalma Wegsman,5 and Samuel J. Witte6. 1Fermilab, Fermi National Accelerator Laboratory, Batavia, IL 60510, USA; 2University of Chicago, Kavli Institute for Cosmological Physics, Chicago, IL 60637, USA; 3University of Chicago, Department of Astronomy and Astrophysics, Chicago, IL 60637, USA; 4Center for Theoretical Physics, Massachusetts Institute of Technology, Cambridge, MA 02139, USA; 5University of Chicago, Department of Physics, Chicago, IL 60637, USA; 6Instituto de Fisica Corpuscular (IFIC), CSIC-Universitat de Valencia, Spain. (Dated: July 29, 2020) Abstract. In hidden sector models, dark matter does not directly couple to the particle content of the Standard Model, strongly suppressing rates at direct detection experiments, while still allowing for large signals from annihilation. In this paper, we conduct an extensive study of hidden sector dark matter, covering a wide range of dark matter spins, mediator spins, interaction diagrams, and annihilation final states, in each case determining whether the annihilations are s-wave (thus enabling efficient annihilation in the universe today). We then go on to consider a variety of portal interactions that allow the hidden sector annihilation products to decay into the Standard Model. We broadly classify constraints from relic density requirements and dwarf spheroidal galaxy observations. In the scenario that the hidden sector was in equilibrium with the Standard Model in the early universe, we place a lower bound on the portal coupling, as well as on the dark matter’s elastic scattering cross section with nuclei.

COVID-19 Detection on IBM Quantum Computer with Classical-Quantum Transfer Learning

medRxiv preprint doi: https://doi.org/10.1101/2020.11.07.20227306; this version posted November 10, 2020. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted medRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY-NC-ND 4.0 International license. Turk J Elec Eng & Comp Sci, © TÜBİTAK, doi:10.3906/elk- COVID-19 detection on IBM quantum computer with classical-quantum transfer learning. Erdi ACAR1*, İhsan YILMAZ2. 1Department of Computer Engineering, Institute of Science, Çanakkale Onsekiz Mart University, Çanakkale, Turkey; 2Department of Computer Engineering, Faculty of Engineering, Çanakkale Onsekiz Mart University, Çanakkale, Turkey. Abstract: Diagnosing infected patients as soon as possible during the coronavirus disease 2019 (COVID-19) outbreak, which the World Health Organization (WHO) has declared a pandemic, is extremely important. Experts recommend CT imaging as a diagnostic tool because of the weak points of the nucleic acid amplification test (NAAT). In this study, the detection of COVID-19 from CT images, which give the most accurate response in a short time, was investigated on classical computers and, for the first time, on quantum computers. Using the quantum transfer learning method, we experimentally perform COVID-19 detection on different real quantum processors of IBM (IBMQx2, IBMQ-London and IBMQ-Rome), as well as in different simulators (Pennylane, Qiskit-Aer and Cirq). Using a small data set (126 COVID-19 and 100 normal CT images), we obtained a positive or negative classification of COVID-19 with 90% success on classical computers, while we achieved a high success rate of 94-100% on quantum computers.

Achievements of the IARPA-QEO and DARPA-QAFS Programs & The

Achievements of the IARPA-QEO and DARPA-QAFS programs & The prospects for quantum enhancement with QA. Elizabeth Crosson & DL, Nature Reviews Physics (2021). AQC 20201, June 22, 2021. Daniel Lidar, USC. A decade+ since the D-Wave 1: where does QA stand? Numerous studies have attempted to demonstrate speedups using the D-Wave processors. Some studies have succeeded in demonstrating constant-factor speedups in optimization against all classical algorithms they tried, e.g., frustrated cluster-loop problems [1]. A scaling speedup against path-integral Monte Carlo was claimed for quantum simulation of geometrically frustrated magnets (scaling of relaxation time in escape from topological obstructions) [2]. Simulation is a natural and promising QA application. But QA was originally proposed as an optimization heuristic. No study so far has demonstrated an unequivocal scaling speedup in optimization. [1] S. Mandrà, H. Katzgraber, Q. Sci. & Tech. 3, 04LT01 (2018) [2] A. King et al., arXiv:1911.03446. What could be the reasons for the lack of scaling speedup? • Perhaps QA is inherently a poor algorithm? More on this later. • Control errors? Random errors in the final Hamiltonian (J-chaos) have a strong detrimental effect. • Such errors limit the size of QA devices that can be expected to accurately solve optimization instances without error correction/suppression [1,2]. • Decoherence? Single-qubit and ≪ anneal time. Often cited as a major problem of QA relative to the gate-model. • How important is this? Depends on weak vs strong coupling to the environment [3]. More on this later as well. [1] T. Albash, V. Martin-Mayor, I. Hen, Q.

Why and When Is Pausing Beneficial in Quantum Annealing?

Why and when pausing is beneficial in quantum annealing. Huo Chen1, 2 and Daniel A. Lidar1, 2, 3, 4. 1Department of Electrical Engineering, University of Southern California, Los Angeles, California 90089, USA; 2Center for Quantum Information Science & Technology, University of Southern California, Los Angeles, California 90089, USA; 3Department of Chemistry, University of Southern California, Los Angeles, California 90089, USA; 4Department of Physics and Astronomy, University of Southern California, Los Angeles, California 90089, USA. Recent empirical results using quantum annealing hardware have shown that mid-anneal pausing has a surprisingly beneficial impact on the probability of finding the ground state for a variety of problems. A theoretical explanation of this phenomenon has thus far been lacking. Here we provide an analysis of pausing using a master equation framework, and derive conditions for the strategy to result in a success probability enhancement. The conditions, which we identify through numerical simulations and then prove to be sufficient, require that relative to the pause duration the relaxation rate is large and decreasing right after crossing the minimum gap, small and decreasing at the end of the anneal, and is also cumulatively small over this interval, in the sense that the system does not thermally equilibrate. This establishes that the observed success probability enhancement can be attributed to incomplete quantum relaxation, i.e., is a form of beneficial non-equilibrium coupling to the environment. I. INTRODUCTION. Quantum annealing [1–4] stands out among the multi- [...] lems [23] and training deep generative machine learning models [24]. Numerical studies [25, 26] of the p-spin model also agree with these empirical results.

arXiv:1908.04480v2 [quant-ph] 23 Oct 2020

Quantum adiabatic machine learning with zooming. Alexander Zlokapa,1 Alex Mott,2 Joshua Job,3 Jean-Roch Vlimant,1 Daniel Lidar,4 and Maria Spiropulu1. 1Division of Physics, Mathematics & Astronomy, Alliance for Quantum Technologies, California Institute of Technology, Pasadena, CA 91125, USA; 2DeepMind Technologies, London, UK; 3Lockheed Martin Advanced Technology Center, Sunnyvale, CA 94089, USA; 4Departments of Electrical and Computer Engineering, Chemistry, and Physics & Astronomy, and Center for Quantum Information Science & Technology, University of Southern California, Los Angeles, CA 90089, USA. Recent work has shown that quantum annealing for machine learning, referred to as QAML, can perform comparably to state-of-the-art machine learning methods with a specific application to Higgs boson classification. We propose QAML-Z, a novel algorithm that iteratively zooms in on a region of the energy surface by mapping the problem to a continuous space and sequentially applying quantum annealing to an augmented set of weak classifiers. Results on a programmable quantum annealer show that QAML-Z matches classical deep neural network performance at small training set sizes and reduces the performance margin between QAML and classical deep neural networks by almost 50% at large training set sizes, as measured by area under the ROC curve. The significant improvement of quantum annealing algorithms for machine learning and the use of a discrete quantum algorithm on a continuous optimization problem both open a new class of problems that can be solved by quantum annealers and suggest the approach in performance of near-term quantum machine learning towards classical benchmarks. I. INTRODUCTION. Machine learning has gained an increasingly impor- [...] lem Hamiltonian, ensuring that the system remains in the ground state if the system is perturbed slowly enough, as given by the energy gap between the ground state and the first excited state [36–38].

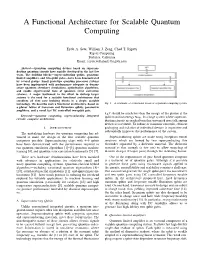

A Functional Architecture for Scalable Quantum Computing

A Functional Architecture for Scalable Quantum Computing. Eyob A. Sete, William J. Zeng, Chad T. Rigetti, Rigetti Computing, Berkeley, California. Email: feyob,will,[email protected]. Abstract: Quantum computing devices based on superconducting quantum circuits have rapidly developed in the last few years. The building blocks (superconducting qubits, quantum-limited amplifiers, and two-qubit gates) have been demonstrated by several groups. Small prototype quantum processor systems have been implemented with performance adequate to demonstrate quantum chemistry simulations, optimization algorithms, and enable experimental tests of quantum error correction schemes. A major bottleneck in the effort to develop larger systems is the need for a scalable functional architecture that combines all the core building blocks in a single, scalable technology. We describe such a functional architecture, based on a planar lattice of transmon and fluxonium qubits, parametric amplifiers, and a novel fast DC controlled two-qubit gate. Keywords: quantum computing, superconducting integrated circuits, computer architecture. I. INTRODUCTION. The underlying hardware for quantum computing has advanced to make the design of the first scalable quantum computers possible. Superconducting chips with 4–9 qubits have been demonstrated with the performance required to [...] Fig. 1. A schematic of a functional layout of a quantum computing system. [...] $k_B T$ should be much less than the energy of the photon at the qubit transition energy $\hbar\omega_{01}$. In a large system where superconducting circuits are packed together, unwanted crosstalk among devices is inevitable. To reduce or minimize crosstalk, efficient packaging and isolation of individual devices is imperative and substantially improves the performance of the system. Superconducting qubits are made using Josephson tunnel junctions which are formed by two superconducting thin electrodes separated by a dielectric material.

Error Suppression and Error Correction in Adiabatic Quantum Computation: Techniques and Challenges

PHYSICAL REVIEW X 3, 041013 (2013). Error Suppression and Error Correction in Adiabatic Quantum Computation: Techniques and Challenges. Kevin C. Young and Mohan Sarovar, Scalable and Secure Systems Research (08961), Sandia National Laboratories, Livermore, California 94550, USA. Robin Blume-Kohout, Advanced Device Technologies (01425), Sandia National Laboratories, Albuquerque, New Mexico 87185, USA. (Received 10 September 2012; revised manuscript received 23 July 2013; published 13 November 2013) Adiabatic quantum computation (AQC) has been lauded for its inherent robustness to control imperfections and relaxation effects. A considerable body of previous work, however, has shown AQC to be acutely sensitive to noise that causes excitations from the adiabatically evolving ground state. In this paper, we develop techniques to mitigate such noise, and then we point out and analyze some obstacles to further progress. First, we examine two known techniques that leverage quantum error-detecting codes to suppress noise and show that they are intimately related and may be analyzed within the same formalism. Next, we analyze the effectiveness of such error-suppression techniques in AQC, identify critical constraints on their performance, and conclude that large-scale, fault-tolerant AQC will require error correction, not merely suppression. Finally, we study the consequences of encoding AQC in quantum stabilizer codes and discover that generic AQC problem Hamiltonians rapidly convert physical errors into uncorrectable logical errors. We present several techniques to remedy this problem, but all of them require unphysical resources, suggesting that the adiabatic model of quantum computation may be fundamentally incompatible with stabilizer quantum error correction. DOI: 10.1103/PhysRevX.3.041013. Subject Areas: Quantum Physics, Quantum Information.

Experimental Kernel-Based Quantum Machine Learning in Finite Feature

Experimental kernel-based quantum machine learning in finite feature space. Karol Bartkiewicz1,2*, Clemens Gneiting3, Antonín Černoch2*, Kateřina Jiráková2, Karel Lemr2* & Franco Nori3,4. We implement an all-optical setup demonstrating kernel-based quantum machine learning for two-dimensional classification problems. In this hybrid approach, kernel evaluations are outsourced to projective measurements on suitably designed quantum states encoding the training data, while the model training is processed on a classical computer. Our two-photon proposal encodes data points in a discrete, eight-dimensional feature Hilbert space. In order to maximize the application range of the deployable kernels, we optimize feature maps towards the resulting kernels’ ability to separate points, i.e., their “resolution,” under the constraint of finite, fixed Hilbert space dimension. Implementing these kernels, our setup delivers viable decision boundaries for standard nonlinear supervised classification tasks in feature space. We demonstrate such kernel-based quantum machine learning using specialized multiphoton quantum optical circuits. The deployed kernel exhibits exponentially better scaling in the required number of qubits than a direct generalization of kernels described in the literature. Many contemporary computational problems (like drug design, traffic control, logistics, automatic driving, stock market analysis, automatic medical examination, material engineering, and others) routinely require optimization over huge amounts of data [1]. While these highly demanding problems can often be approached by suitable machine learning (ML) algorithms, in many relevant cases the underlying calculations would last prohibitively long. Quantum ML (QML) comes with the promise to run these computations more efficiently (in some cases exponentially faster) by complementing ML algorithms with quantum resources.

Yonatan F. Kahn, Ph.D

Yonatan F. Kahn, Ph.D. Contact Information: Loomis Laboratory 415, Urbana, IL 61801 USA. E-mail: [email protected]. Website: yfkahn.physics.illinois.edu. Research Interests: High-energy theoretical physics (phenomenology): direct, indirect, and collider searches for sub-GeV dark matter, laboratory and astrophysical probes of ultralight particles. Positions: Assistant professor, August 2019 – present, University of Illinois Urbana-Champaign, Urbana, IL USA. Current research: • Sub-GeV dark matter: new experimental proposals for direct detection • Axion-like particles: direct detection, indirect detection, laboratory searches • Phenomenology of new light weakly-coupled gauge forces: collider searches and effects on low-energy observables • Neural networks: physics-inspired theoretical descriptions of autoencoders and feed-forward networks. Postdoctoral fellow, August 2018 – July 2019, Kavli Institute for Cosmological Physics (KICP), University of Chicago, Chicago, IL USA. Postdoctoral research associate, September 2015 – August 2018, Princeton University, Princeton, NJ USA. Education: Massachusetts Institute of Technology, September 2010 – June 2015, Cambridge, MA USA. Ph.D, physics, June 2015 • Thesis title: Forces and Gauge Groups Beyond the Standard Model • Advisor: Jesse Thaler • Thesis committee: Jesse Thaler, Allan Adams, Christoph Paus. University of Cambridge, October 2009 – June 2010, Cambridge, UK. Certificate of Advanced Study in Mathematics, June 2010 • Completed Part III of the Mathematical Tripos in Applied Mathematics and Theoretical Physics • Essay topic: From Topological Strings to Matrix Models. Northwestern University, September 2004 – June 2009, Evanston, IL USA. B.A., physics and mathematics, June 2009 • Senior thesis: Models of Dark Matter and the INTEGRAL 511 keV line • Senior thesis advisor: Tim Tait. B.Mus., horn performance, June 2009. Mentoring: Graduate students • Siddharth Mishra-Sharma, Princeton (Ph.D.

Durham E-Theses

Quantum search at low temperature in the single avoided crossing model. PATEL, PARTH,ASHVINKUMAR. How to cite: PATEL, PARTH,ASHVINKUMAR (2019) Quantum search at low temperature in the single avoided crossing model, Durham theses, Durham University. Available at Durham E-Theses Online: http://etheses.dur.ac.uk/13285/ Use policy: The full-text may be used and/or reproduced, and given to third parties in any format or medium, without prior permission or charge, for personal research or study, educational, or not-for-profit purposes provided that: • a full bibliographic reference is made to the original source • a link is made to the metadata record in Durham E-Theses • the full-text is not changed in any way. The full-text must not be sold in any format or medium without the formal permission of the copyright holders. Please consult the full Durham E-Theses policy for further details. Academic Support Office, Durham University, University Office, Old Elvet, Durham DH1 3HP. e-mail: [email protected] Tel: +44 0191 334 6107 http://etheses.dur.ac.uk Quantum search at low temperature in the single avoided crossing model. Parth Ashvinkumar Patel. A Thesis presented for the degree of Master of Science by Research. Dr. Vivien Kendon, Department of Physics, University of Durham, England, August 2019. Dedicated to my parents for supporting me throughout my studies. Abstract: We begin with an n-qubit quantum search algorithm and formulate it in terms of quantum walk and adiabatic quantum computation.