Quantum Machine Learning: Benefits and Practical Examples

Recommended publications

Simulating Quantum Field Theory with a Quantum Computer

John Preskill, Lattice 2018, 28 July 2018

This talk has two parts: (1) Near-term prospects for quantum computing. (2) Opportunities in quantum simulation of quantum field theory.

Exascale digital computers will advance our knowledge of QCD, but some challenges will remain, especially concerning real-time evolution and properties of nuclear matter and quark-gluon plasma at nonzero temperature and chemical potential. Digital computers may never be able to address these (and other) problems; quantum computers will solve them eventually, though I’m not sure when. The physics payoff may still be far away, but today’s research can hasten the arrival of a new era in which quantum simulation fuels progress in fundamental physics.

Frontiers of Physics
Short distance: Higgs boson, neutrino masses, supersymmetry, quantum gravity, string theory
Long distance: large scale structure, cosmic microwave background, dark matter, dark energy, gravitational waves
Complexity: “More is different”, many-body entanglement, phases of quantum matter, quantum computing, quantum spacetime

[Figure labels: particle collision, molecular chemistry, entangled electrons, superconductor, black hole, early universe]

A quantum computer can simulate efficiently any physical process that occurs in Nature. (Maybe. We don’t actually know for sure.)

Two fundamental ideas: (1) Quantum complexity: why we think quantum computing is powerful. (2) Quantum error correction: why we think quantum computing is scalable.

A complete description of a typical quantum state of just 300 qubits requires more bits than the number of atoms in the visible universe.

Why we think quantum computing is powerful: We know examples of problems that can be solved efficiently by a quantum computer, where we believe the problems are hard for classical computers.
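The 300-qubit claim in this excerpt can be checked with plain integer arithmetic. The sketch below is illustrative only: the figure of roughly 10^80 atoms is a commonly quoted order-of-magnitude estimate and is an assumption here, not a number taken from the talk.

```python
# Rough check of the 300-qubit claim using exact integer arithmetic.
N_QUBITS = 300

# Assumption: ~10^80 atoms, a commonly quoted order-of-magnitude estimate.
ATOMS_IN_VISIBLE_UNIVERSE = 10**80

# A general n-qubit state has one complex amplitude per basis state.
amplitudes = 2**N_QUBITS

print("decimal digits in 2^300:", len(str(amplitudes)))
print("more amplitudes than atoms:", amplitudes > ATOMS_IN_VISIBLE_UNIVERSE)
```

Even before counting the bits needed to store each amplitude, the number of amplitudes alone already exceeds the assumed atom count.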

COVID-19 Detection on IBM Quantum Computer with Classical-Quantum Transfer Learning

medRxiv preprint doi: https://doi.org/10.1101/2020.11.07.20227306; this version posted November 10, 2020. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted medRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY-NC-ND 4.0 International license.

Turk J Elec Eng & Comp Sci, © TÜBİTAK, doi:10.3906/elk-

COVID-19 detection on IBM quantum computer with classical-quantum transfer learning
Erdi ACAR1*, İhsan YILMAZ2
1 Department of Computer Engineering, Institute of Science, Çanakkale Onsekiz Mart University, Çanakkale, Turkey
2 Department of Computer Engineering, Faculty of Engineering, Çanakkale Onsekiz Mart University, Çanakkale, Turkey

Abstract: Diagnosing infected patients as soon as possible during the coronavirus 2019 (COVID-19) outbreak, which has been declared a pandemic by the World Health Organization (WHO), is extremely important. Experts recommend CT imaging as a diagnostic tool because of the weak points of the nucleic acid amplification test (NAAT). In this study, the detection of COVID-19 from CT images, which give the most accurate response in a short time, was investigated on classical computers and, for the first time, on quantum computers. Using the quantum transfer learning method, we experimentally perform COVID-19 detection on different real quantum processors of IBM (IBMQx2, IBMQ-London and IBMQ-Rome), as well as on different simulators (Pennylane, Qiskit-Aer and Cirq). Using a small data set of 126 COVID-19 and 100 Normal CT images, we obtained a positive or negative classification of COVID-19 with 90% success on classical computers, while we achieved a high success rate of 94-100% on quantum computers.

Clustering by Quantum Annealing on the Three-Level Quantum Elements Qutrits

V. E. Zobov and I. S. Pichkovskiy

Abstract: Clustering is grouping of data by the proximity of some properties. We report on the possibility of increasing the efficiency of clustering of points in a plane using artificial quantum neural networks after the replacement of the two-level neurons called qubits represented by the spins S = 1/2 by the three-level neurons called qutrits represented by the spins S = 1. The problem has been solved by the slow adiabatic change of the Hamiltonian in time. The methods for controlling a qutrit system using projection operators have been developed and the numerical simulation has been performed. The Hamiltonians for two well-known clustering methods, one-hot encoding and k-means++, have been built. The first method has been used to partition a set of six points into three or two clusters and the second method, to partition a set of nine points into three clusters and seven points into four clusters. The simulation has shown that the clustering problem can be effectively solved on qutrits represented by the spins S = 1. The advantages of clustering on qutrits over that on qubits have been demonstrated. In particular, the number of qutrits required to represent N data points is smaller than the number of qubits by a factor of $\log_2 N / \log_3 N$. Since, for qutrits, it is easier to partition the data points into three clusters rather than two ones, the approximate hierarchical procedure of data partitioning into a larger number of clusters is accelerated.
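The factor $\log_2 N / \log_3 N$ quoted in the abstract equals $\log_2 3 \approx 1.585$ for any N. The sketch below illustrates it under one assumption that is mine rather than the paper's: that "representing N data points" means having enough d-level units to index N distinct labels. The helper name `units_needed` is hypothetical.

```python
import math

def units_needed(n_labels: int, levels: int) -> int:
    """Smallest k such that levels**k >= n_labels (integer arithmetic only)."""
    k = 0
    while levels**k < n_labels:
        k += 1
    return k

for n in (9, 27, 81, 729):
    qubits = units_needed(n, 2)   # two-level units
    qutrits = units_needed(n, 3)  # three-level units
    print(n, qubits, qutrits)

# The continuous ratio log2(N) / log3(N) does not depend on N.
print(math.log2(3))
```

Because of rounding up to whole units, the ratio for a particular N only approximates the asymptotic factor.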

Quantum Inductive Learning and Quantum Logic Synthesis

Portland State University, PDXScholar, Dissertations and Theses, 2009

Quantum Inductive Learning and Quantum Logic Synthesis
Martin Lukac, Portland State University

Recommended Citation: Lukac, Martin, "Quantum Inductive Learning and Quantum Logic Synthesis" (2009). Dissertations and Theses. Paper 2319. https://doi.org/10.15760/etd.2316

QUANTUM INDUCTIVE LEARNING AND QUANTUM LOGIC SYNTHESIS by MARTIN LUKAC. A dissertation submitted in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY in ELECTRICAL AND COMPUTER ENGINEERING. Portland State University, 2009.

DISSERTATION APPROVAL: The abstract and dissertation of Martin Lukac for the Doctor of Philosophy in Electrical and Computer Engineering were presented January 9, 2009, and accepted by the dissertation committee and the doctoral program.

COMMITTEE APPROVALS: Marek Perkowski, Chair; Garrison Greenwood; George Lendaris; Steven Bleiler, Representative of the Office of Graduate Studies.

DOCTORAL PROGRAM APPROVAL: Malgorzata Chrzanowska-Jeske, Director, Electrical and Computer Engineering Ph.D. Program.

ABSTRACT: An abstract of the dissertation of Martin Lukac for the Doctor of Philosophy in Electrical and Computer Engineering presented January 9, 2009. Title: Quantum Inductive Learning and Quantum Logic Synthesis.

Since Quantum Computer is almost realizable on large scale and Quantum Technology is one of the main solutions to the Moore Limit, Quantum Logic Synthesis (QLS) has become a required theory and tool for designing Quantum Logic Circuits.

Chapter 1 Chapter 2 Preliminaries Measurements

To Vera Rae

Contents

1 Preliminaries
1.1 Elements of Linear Algebra
1.2 Hilbert Spaces and Dirac Notations
1.3 Hermitian and Unitary Operators; Projectors
1.4 Postulates of Quantum Mechanics
1.5 Quantum State Postulate
1.6 Dynamics Postulate
1.7 Measurement Postulate
1.8 Linear Algebra and Systems Dynamics
1.9 Symmetry and Dynamic Evolution
1.10 Uncertainty Principle; Minimum Uncertainty States
1.11 Pure and Mixed Quantum States
1.12 Entanglement; Bell States
1.13 Quantum Information
1.14 Physical Realization of Quantum Information Processing Systems
1.15 Universal Computers; The Circuit Model of Computation
1.16 Quantum Gates, Circuits, and Quantum Computers
1.17 Universality of Quantum Gates; Solovay-Kitaev Theorem
1.18 Quantum Computational Models and Quantum Algorithms
1.19 Deutsch, Deutsch-Jozsa, Bernstein-Vazirani, and Simon Oracles
1.20 Quantum Phase Estimation
1.21 Walsh-Hadamard and Quantum Fourier Transforms
1.22 Quantum Parallelism and Reversible Computing
1.23 Grover Search Algorithm

An Introduction to Quantum Computing

CERN. Bo Ewald, October 17, 2017. Bo Ewald, November 5, 2018.

TOPICS
• Introduction to Quantum Computing
  • Introduction and Background
  • Quantum Annealing
• Early Applications
  • Optimization
  • Machine Learning
  • Material Science
  • Cybersecurity
  • Fiction
• Final Thoughts

Copyright © D-Wave Systems Inc.

Richard Feynman – Proposed Quantum Computer in 1981
[Timeline figure: 1960 to 2020]

April 1983 – Richard Feynman’s Talk at Los Alamos
Title: Los Alamos Experience. Author: Phyllis K Fisher. Page 247.

The “Marriage” Between Technology and Architecture
• To design and build any computer, one must select a compatible technology and a system architecture
• Technology – the physical devices (IC’s, PCB’s, interconnects, etc) used to implement the hardware
• Architecture – the organization and rules that govern how the computer will operate
  • Digital – CISC (Intel x86), RISC (MIPS, SPARC), Vector (Cray), SIMD (CM-1), Volta (NVIDIA)
  • Quantum – Gate or Circuit (IBM, Rigetti), Annealing (D-Wave, ARPA QEO)

Quantum Technology – “qubit” Building Blocks

Simulation on IBM Quantum Experience (IBM QX)
IBM QX, Yorktown Heights, USA
[Figure labels: preparation of singlet state; rotation by θ1 and θ2; readout measurement]

X-gate: $X|0\rangle = |1\rangle$, $X|1\rangle = |0\rangle$
U1 phase-gate: $U_1|0\rangle = |0\rangle$, $U_1|1\rangle = e^{i\theta}|1\rangle$
Hadamard gate: $H|0\rangle = (|0\rangle + |1\rangle)/\sqrt{2}$, $H|1\rangle = (|0\rangle - |1\rangle)/\sqrt{2}$
CNOT gate: $C_{01}|0_1 0_0\rangle = |0_1 0_0\rangle$, $C_{01}|0_1 1_0\rangle = |1_1 1_0\rangle$, $C_{01}|1_1 0_0\rangle = |1_1 0_0\rangle$, $C_{01}|1_1 1_0\rangle = |0_1 1_0\rangle$

Simulated Annealing on Digital Computers
[Timeline figure: 1950 to 2010]
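The gate actions listed on the IBM QX slide can be reproduced with small matrices. The following is a minimal sketch in plain Python, not IBM's or D-Wave's code; the helper names (`apply`, `u1`, `ket2`) are hypothetical, and the CNOT is built with qubit 0 as control and qubit 1 as target, which is how the slide's subscripts read.

```python
import cmath
import math

def apply(gate, state):
    """Multiply a square matrix (list of rows) by a state vector."""
    return [sum(g * s for g, s in zip(row, state)) for row in gate]

KET0 = [1, 0]
KET1 = [0, 1]

S = 1 / math.sqrt(2)
X = [[0, 1], [1, 0]]    # bit flip
H = [[S, S], [S, -S]]   # Hadamard

def u1(theta):
    """Phase gate: leaves |0> alone, multiplies |1> by exp(i*theta)."""
    return [[1, 0], [0, cmath.exp(1j * theta)]]

def ket2(q1, q0):
    """Two-qubit basis state |q1 q0>, with qubit 1 written first."""
    state = [0, 0, 0, 0]
    state[2 * q1 + q0] = 1
    return state

# CNOT with control qubit 0 and target qubit 1: |q1 q0> -> |q1 XOR q0, q0>.
CNOT_01 = [[0] * 4 for _ in range(4)]
for q1 in (0, 1):
    for q0 in (0, 1):
        CNOT_01[2 * (q1 ^ q0) + q0][2 * q1 + q0] = 1

print(apply(X, KET0))
print(apply(H, KET0))
print(apply(u1(math.pi / 2), KET1))
print(apply(CNOT_01, ket2(0, 1)))
```

Representing states as plain lists keeps the sketch dependency-free; a numerical library would be the usual choice for anything larger than two qubits.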

Nearest Centroid Classification on a Trapped Ion Quantum Computer

www.nature.com/npjqi. ARTICLE, OPEN.

Sonika Johri1, Shantanu Debnath1, Avinash Mocherla2,3,4, Alexandros Singk2,3,5, Anupam Prakash2,3, Jungsang Kim1 and Iordanis Kerenidis2,3,6

Quantum machine learning has seen considerable theoretical and practical developments in recent years and has become a promising area for finding real world applications of quantum computers. In pursuit of this goal, here we combine state-of-the-art algorithms and quantum hardware to provide an experimental demonstration of a quantum machine learning application with provable guarantees for its performance and efficiency. In particular, we design a quantum Nearest Centroid classifier, using techniques for efficiently loading classical data into quantum states and performing distance estimations, and experimentally demonstrate it on a 11-qubit trapped-ion quantum machine, matching the accuracy of classical nearest centroid classifiers for the MNIST handwritten digits dataset and achieving up to 100% accuracy for 8-dimensional synthetic data.

npj Quantum Information (2021) 7:122; https://doi.org/10.1038/s41534-021-00456-5

INTRODUCTION
Quantum technologies promise to revolutionize the future of information and communication, in the form of quantum computing devices able to communicate and process massive amounts of data both efficiently and securely using quantum resources. Tremendous progress is continuously being made both

Thus, one might hope that noisy quantum computers are inherently better suited for machine learning computations than for other types of problems that need precise computations like factoring or search problems. However, there are significant challenges to be overcome to make QML practical.
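For orientation, the classical nearest centroid classifier that the abstract uses as its baseline can be sketched in a few lines. This is a generic classical version with squared Euclidean distance, not the authors' quantum procedure (which estimates distances on hardware); the function names and the toy data are hypothetical.

```python
def fit_centroids(points, labels):
    """Average the points of each class to get one centroid per label."""
    sums, counts = {}, {}
    for point, label in zip(points, labels):
        acc = sums.setdefault(label, [0.0] * len(point))
        for i, value in enumerate(point):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def predict(centroids, point):
    """Return the label whose centroid is closest to the point."""
    return min(centroids, key=lambda label: squared_distance(centroids[label], point))

# Toy two-class data set (made up for illustration).
train_points = [(0.0, 0.0), (0.2, 0.1), (1.0, 1.0), (0.9, 1.1)]
train_labels = ["a", "a", "b", "b"]

centroids = fit_centroids(train_points, train_labels)
print(predict(centroids, (0.1, 0.0)))
print(predict(centroids, (1.0, 0.8)))
```

The distance computation inside `predict` is the step a quantum version would replace with an estimate obtained from measurements.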

Using Machine Learning for Quantum Annealing Accuracy Prediction

Algorithms, Article

Aaron Barbosa 1, Elijah Pelofske 1, Georg Hahn 2,* and Hristo N. Djidjev 1,3
1 CCS-3 Information Sciences, Los Alamos National Laboratory, Los Alamos, NM 87545, USA
2 T.H. Chan School of Public Health, Harvard University, Boston, MA 02115, USA
3 Institute of Information and Communication Technologies, Bulgarian Academy of Sciences, 1113 Sofia, Bulgaria

Abstract: Quantum annealers, such as the device built by D-Wave Systems, Inc., offer a way to compute solutions of NP-hard problems that can be expressed in Ising or quadratic unconstrained binary optimization (QUBO) form. Although such solutions are typically of very high quality, problem instances are usually not solved to optimality due to imperfections of the current generation of quantum annealers. In this contribution, we aim to understand some of the factors contributing to the hardness of a problem instance, and to use machine learning models to predict the accuracy of the D-Wave 2000Q annealer for solving specific problems. We focus on the maximum clique problem, a classic NP-hard problem with important applications in network analysis, bioinformatics, and computational chemistry. By training a machine learning classification model on basic problem characteristics, such as the number of edges in the graph, or annealing parameters, such as the D-Wave’s chain strength, we are able to rank certain features in the order of their contribution to the solution hardness, and present a simple decision tree which allows us to predict whether a problem will be solvable to optimality with the D-Wave 2000Q.
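To make the phrase "expressed in QUBO form" concrete, here is a small sketch of one standard QUBO encoding of maximum clique: reward each selected vertex with -1 and penalize each selected pair of non-adjacent vertices. The penalty value 2, the toy graph, and the brute-force solver standing in for an annealer are all assumptions for illustration; this is not the paper's code or the D-Wave API.

```python
from itertools import product

def max_clique_qubo(n_vertices, edges, penalty=2.0):
    """QUBO coefficients {(i, j): weight} whose minimum encodes a maximum clique."""
    edge_set = {frozenset(e) for e in edges}
    qubo = {(i, i): -1.0 for i in range(n_vertices)}  # reward per chosen vertex
    for i in range(n_vertices):
        for j in range(i + 1, n_vertices):
            if frozenset((i, j)) not in edge_set:
                qubo[(i, j)] = penalty  # penalize choosing a non-adjacent pair
    return qubo

def energy(qubo, x):
    return sum(w * x[i] * x[j] for (i, j), w in qubo.items())

def brute_force_minimum(qubo, n_vertices):
    """Exhaustive search; only feasible for tiny graphs."""
    return min(product((0, 1), repeat=n_vertices), key=lambda x: energy(qubo, x))

# Toy graph: a triangle 0-1-2 with an extra vertex 3 attached to vertex 2.
EDGES = [(0, 1), (0, 2), (1, 2), (2, 3)]
qubo = max_clique_qubo(4, EDGES)
best = brute_force_minimum(qubo, 4)
print(best, energy(qubo, best))
```

With a penalty larger than 1, dropping a vertex from any non-clique selection always lowers the energy, so the minimum corresponds to a largest clique.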

Thermally Assisted Quantum Annealing of a 16-Qubit Problem

ARTICLE. Received 29 Jan 2013 | Accepted 23 Apr 2013 | Published 21 May 2013. DOI: 10.1038/ncomms2920

N. G. Dickson, M. W. Johnson, M. H. Amin, R. Harris, F. Altomare, A. J. Berkley, P. Bunyk, J. Cai, E. M. Chapple, P. Chavez, F. Cioata, T. Cirip, P. deBuen, M. Drew-Brook, C. Enderud, S. Gildert, F. Hamze, J. P. Hilton, E. Hoskinson, K. Karimi, E. Ladizinsky, N. Ladizinsky, T. Lanting, T. Mahon, R. Neufeld, T. Oh, I. Perminov, C. Petroff, A. Przybysz, C. Rich, P. Spear, A. Tcaciuc, M. C. Thom, E. Tolkacheva, S. Uchaikin, J. Wang, A. B. Wilson, Z. Merali & G. Rose

Efforts to develop useful quantum computers have been blocked primarily by environmental noise. Quantum annealing is a scheme of quantum computation that is predicted to be more robust against noise, because despite the thermal environment mixing the system’s state in the energy basis, the system partially retains coherence in the computational basis, and hence is able to establish well-defined eigenstates. Here we examine the environment’s effect on quantum annealing using 16 qubits of a superconducting quantum processor. For a problem instance with an isolated small-gap anticrossing between the lowest two energy levels, we experimentally demonstrate that, even with annealing times eight orders of magnitude longer than the predicted single-qubit decoherence time, the probabilities of performing a successful computation are similar to those expected for a fully coherent system. Moreover, for the problem studied, we show that quantum annealing can take advantage of a thermal environment to achieve a speedup factor of up to 1,000 over a closed system.

A Functional Architecture for Scalable Quantum Computing

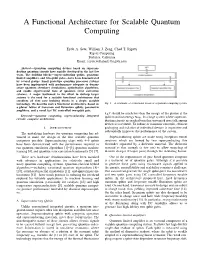

Eyob A. Sete, William J. Zeng, Chad T. Rigetti
Rigetti Computing, Berkeley, California

Abstract: Quantum computing devices based on superconducting quantum circuits have rapidly developed in the last few years. The building blocks (superconducting qubits, quantum-limited amplifiers, and two-qubit gates) have been demonstrated by several groups. Small prototype quantum processor systems have been implemented with performance adequate to demonstrate quantum chemistry simulations, optimization algorithms, and enable experimental tests of quantum error correction schemes. A major bottleneck in the effort to develop larger systems is the need for a scalable functional architecture that combines all the core building blocks in a single, scalable technology. We describe such a functional architecture, based on a planar lattice of transmon and fluxonium qubits, parametric amplifiers, and a novel fast DC controlled two-qubit gate.

Keywords: quantum computing, superconducting integrated circuits, computer architecture.

I. INTRODUCTION

The underlying hardware for quantum computing has advanced to make the design of the first scalable quantum computers possible. Superconducting chips with 4–9 qubits have been demonstrated with the performance required to

Fig. 1. A schematic of a functional layout of a quantum computing system.

$k_B T$ should be much less than the energy of the photon at the qubit transition energy $\hbar\omega_{01}$. In a large system where superconducting circuits are packed together, unwanted crosstalk among devices is inevitable. To reduce or minimize crosstalk, efficient packaging and isolation of individual devices is imperative and substantially improves the performance of the system.

Superconducting qubits are made using Josephson tunnel junctions which are formed by two superconducting thin electrodes separated by a dielectric material.
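The requirement that the thermal energy stay far below the qubit transition energy can be put into numbers. The sketch below uses the exact SI values of the Boltzmann and Planck constants; the operating point (10 mK, 5 GHz) is an assumed, illustrative choice and does not come from the paper, and the function names are hypothetical.

```python
import math

K_B = 1.380649e-23         # Boltzmann constant, J/K (exact SI value)
H_PLANCK = 6.62607015e-34  # Planck constant, J*s (exact SI value)

def thermal_ratio(temperature_k, qubit_freq_hz):
    """k_B*T divided by the transition energy h*f (equal to hbar*omega_01)."""
    return (K_B * temperature_k) / (H_PLANCK * qubit_freq_hz)

def thermal_occupation(temperature_k, qubit_freq_hz):
    """Bose-Einstein mean photon number at the qubit frequency."""
    return 1.0 / math.expm1((H_PLANCK * qubit_freq_hz) / (K_B * temperature_k))

# Assumed operating point for illustration only: 10 mK and a 5 GHz qubit.
T_ASSUMED = 0.010
F_ASSUMED = 5.0e9

print(thermal_ratio(T_ASSUMED, F_ASSUMED))
print(thermal_occupation(T_ASSUMED, F_ASSUMED))
```

At the assumed values the ratio is a few percent, so thermal photons at the qubit frequency are exponentially rare; raising the temperature toward 1 K would bring the ratio above 1.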

Explorations in Quantum Neural Networks with Intermediate Measurements

ESANN 2020 proceedings, European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning. Online event, 2-4 October 2020, i6doc.com publ., ISBN 978-2-87587-074-2. Available from http://www.i6doc.com/en/.

Lukas Franken and Bogdan Georgiev
Fraunhofer IAIS - Research Center for ML and ML2R, Schloss Birlinghoven - 53757 Sankt Augustin

Abstract. In this short note we explore a few quantum circuits with the particular goal of basic image recognition. The models we study are inspired by recent progress in Quantum Convolution Neural Networks (QCNN) [12]. We present a few experimental results, where we attempt to learn basic image patterns motivated by scaling down the MNIST dataset.

1 Introduction

The recent demonstration of Quantum Supremacy [1] heralds the advent of the Noisy Intermediate-Scale Quantum (NISQ) [2] technology, where signs of superiority of quantum over classical machines in particular tasks may be expected. However, one should keep in mind the limitations of NISQ-devices when studying and developing quantum-algorithmic solutions - among other things, these include limits on the number of gates and qubits.

At the same time the interaction of quantum computing and machine learning is growing, with a vast amount of literature and new results. To name a few applications, the well-known HHL algorithm [3], quantum phase estimation [5] and inner products speed-up techniques lead to further advances in Support Vector Machines [4] and Principal Component Analysis [6, 7]. Intensive progress and ongoing research has also been made towards quantum analogues of Neural Networks (QNN) [8, 9, 10].

Quantum Algorithms

An Introduction to Quantum Algorithms
Emma Strubell, COS498 – Chawathe, Spring 2011

Contents

1 What are quantum algorithms?
1.1 Background
1.2 Caveats
2 Mathematical representation
2.1 Fundamental differences
2.2 Hilbert spaces and Dirac notation
2.3 The qubit
2.4 Quantum registers
2.5 Quantum logic gates
2.6 Computational complexity
3 Grover's Algorithm
3.1 Quantum search
3.2 Grover's algorithm: How it works
3.3 Grover's algorithm: Worked example
4 Simon's Algorithm
4.1 Black-box period finding
4.2 Simon's algorithm: How it works
4.3 Simon's Algorithm: Worked example
5 Conclusion
References

1 What are quantum algorithms?

1.1 Background

The idea of a quantum computer was first proposed in 1981 by Nobel laureate Richard Feynman, who pointed out that accurately and efficiently simulating quantum mechanical systems would be impossible on a classical computer, but that a new kind of machine, a computer itself “built of quantum mechanical elements which obey quantum mechanical laws” [1], might one day perform efficient simulations of quantum systems. Classical computers are inherently unable to simulate such a system using sub-exponential time and space complexity due to the exponential growth of the amount of data required to completely represent a quantum system. Quantum computers, on the other hand, exploit the unique, non-classical properties of the quantum systems from which they are built, allowing them to process exponentially large quantities of information in only polynomial time.
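The exponential growth mentioned in this excerpt is easy to tabulate for a brute-force classical simulation that stores every amplitude. The sketch assumes 16 bytes per amplitude (one complex number as two 64-bit floats); that storage format and the function name are assumptions for illustration.

```python
# Assumption: each complex amplitude is stored as two 64-bit floats.
BYTES_PER_AMPLITUDE = 16

def statevector_bytes(n_qubits: int) -> int:
    """Memory needed to hold all 2**n amplitudes of an n-qubit state."""
    return BYTES_PER_AMPLITUDE * 2**n_qubits

for n in (10, 20, 30, 40, 50):
    gib = statevector_bytes(n) / 2**30
    print(f"{n:2d} qubits: {gib:,.6f} GiB")
```

Each added qubit doubles the requirement, which is why full state-vector simulation becomes impractical within a few dozen qubits.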