C 2016 Anupam Das UNDERSTANDING and MITIGATING the PRIVACY RISKS of SMARTPHONE SENSOR FINGERPRINTING

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Mobile Audio IC Industry Report, 2007-2008

Mobile Audio IC Industry Report, 2007-2008 MobileaudioICismainlyappliedtomobilephoneandMP3 playersandnowitisextendedtogameconsole,hand-held navigation,digitalcameraandotherfields.However, applicationinthesefieldsisstillsmall,Comparedtothatin mobilephoneandMP3players.MobilephoneaudioICis dividedintothreecategories,namelymelodyIC,CODEC,and audioamplifierIC.TheaudioICofMP3playersmainlyadopts MP3decoderICandCODEC. MelodyICmarketprospectsarebleak.Keyglobalmobile phonemanufacturers,likeNokia,Motorola,Sony-Ericssonand Siemens,seldomadoptmelodyIC.Especiallysince2005,the fourbigproducershavegenerallyusedapplicationprocessor andcustomizedanalogbaseband toreplacemelodyIC.In earlystage,mainlyJapanesemobilephoneproducers, Samsung,LGandChinesehandsetproducersadoptedmelody ICchips.Nowadays,onlyJapanesemanufacturersstillinsist onadoptingmelodyIC,andtherestallhavegivenitup. ThereareonlyasmallnumberofaudioCODECmanufacturers,mainlyTI,AKMandWolfson Microelectronics.Audio CODECmarketentrythresholdisveryhigh,andonlythemanufacturersmasteringDELTA-SIGMAconversioncan winthemselvesaplaceinthefield.What'smore,audioCODECis mostlyappliedtocompressedmusicplayers,and especiallyahugeamountisusedinMP3anddigitalcamera.ToCODECproducers,mobilephoneisonlytheir sidelinebusiness,aboutwhichtheydon'tcaremuch,iftheyloseit.AsforMP3player,Wolfson isabsolutelythe marketdominator,monopolizingthemarket.Asfornon-portablemusicplayerslikeCDplayerandprofessional acousticsequipment,ADI,AKMandCirrussharethemarket.Wolfson isseldominvolvedinthehigh-quality audiomarket.Thesemanufacturersarecomparativelyconservative,andtheydon'tpaymuchattentiontoportable -

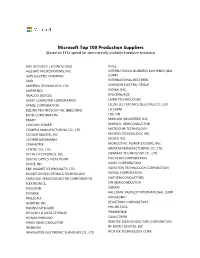

Microsoft Top 100 Production Suppliers (Based on FY14 Spend for Commercially Available Hardware Products)

Microsoft Top 100 Production Suppliers (Based on FY14 spend for commercially available hardware products) AAC ACOUSTIC TECHNOLOGIES INTEL ALLEGRO MICROSYSTEMS, INC. INTERNATIONAL BUSINESS MACHINES (IBM ALPS ELECTRIC COMPANY CORP.) AMD INTERNATIONAL RECTIFIER AMPEREX TECHNOLOGY, LTD. JOHNSON ELECTRIC GROUP AMPHENOL KIONIX, INC. ANALOG DEVICES KYOCERA/AVX ASKEY COMPUTER CORPORATION LAIRD TECHNOLOGIES ATMEL CORPORATION LELON ELECTRONICS (SUZHOU) CO., LTD BIZLINK TECHNOLOGY INC (BIZCONN) LG CHEM BOYD CORPORATION LITE-ON BRADY MARLOW INDUSTRIES, INC. CHICONY POWER MARVELL SEMICONDUCTOR COMPEQ MANUFACTURING CO., LTD. MICROCHIP TECHNOLOGY COOLER MASTER, INC. MICRON TECHNOLOGY, INC. COOPER BUSSMANN MOLEX, INC. CYMMETRIK MONOLITHIC POWER SYSTEMS, INC. CYNTEC CO., LTD. MURATA MANUFACTURING CO., LTD. DELTA ELECTRONICS, INC. NEWMAX TECHNOLOGY CO., LTD. DIGITAL OPTICS VISTA POINT NICHICON CORPORATION DIODE, INC. NIDEC CORPORATION E&E MAGNETICS PRODUCTS, LTD. NUVOTON TECHNOLOGY CORPORATION ELLINGTON ELECTRONICS TECHNOLOGY NVIDIA CORPORATION FAIRCHILD SEMICONDUCTOR CORPORATION NXP SEMICONDUCTORS FLEXTRONICS ON SEMICONDUCTOR FOXCONN OSRAM FOXLINK PALCONN, PALPILOT INTERNATIONAL CORP. FREESCALE PANASONIC GOERTEK, INC. PEGATRON CORPORATION HANNSTAR BOARD PHILIPS PLDS HITACHI-LG DATA STORAGE PRIMESENSE HONDA PRINTING QUALCOMM HYNIX SEMICONDUCTOR REALTEK SEMICONDUCTOR CORPORATION INFINEON RF MICRO DEVICES, INC. INNOVATOR ELECTRONIC SHENZHEN CO., LTD RICHTEK TECHNOLOGY CORP. ROHM CORPORATION SAMSUNG DISPLAY SAMSUNG ELECTRONICS SAMSUNG SDI SAMSUNG SEMICONDUCTOR SEAGATE SHEN ZHEN JIA AI MOTOR CO., LTD. SHENZHEN HORN AUDIO CO., LTD. SHINKO ELECTRIC INDUSTRIES CO., LTD. STARLITE PRINTER, LTD. STMICROELECTRONICS SUNG WEI SUNUNION ENVIRONMENTAL PACKAGING CO., LTD TDK TE CONNECTIVITY TEXAS INSTRUMENTS TOSHIBA TPK TOUCH SOLUTIONS, INC. UNIMICRON TECHNOLOGY CORP. UNIPLAS (SHANGHAI) CO., LTD. UNISTEEL UNIVERSAL ELECTRONICS INCORPORATED VOLEX WACOM CO., LTD. WELL SHIN TECHNOLOGY WINBOND WOLFSON MICROELECTRONICS, LTD. X-CON ELECTRONICS, LTD. YUE WAH CIRCUITS CO., LTD. -

European Technology Media & Telecommunications Monitor

European Technology Media & Telecommunications Monitor Market and Industry Update H1 2013 Piper Jaffray European TMT Team: Eric Sanschagrin Managing Director Head of European TMT [email protected] +44 (0) 207 796 8420 Jessica Harneyford Associate [email protected] +44 (0) 207 796 8416 Peter Shin Analyst [email protected] +44 (0) 207 796 8444 Julie Wright Executive Assistant [email protected] +44 (0) 207 796 8427 TECHNOLOGY, MEDIA & TELECOMMUNICATIONS MONITOR Market and Industry Update Selected Piper Jaffray H1 2013 TMT Transactions TMT Investment Banking Transactions Date: June 2013 $47,500,000 Client: IPtronics A/S Transaction: Mellanox Technologies, Ltd. signed a definitive agreement to acquire IPtronics A/S from Creandum AB, Sunstone Capital A/S and others for $47.5 million in cash. Pursuant to the Has Been Acquired By transaction, IPtronics’ current location in Roskilde, Denmark will serve as Mellanox’s first research and development centre in Europe and IPtronics A/S will operate as a wholly-owned indirect subsidiary of Mellanox Technologies, Ltd. Client Description: Mellanox Technologies Ltd. is a leading supplier of end-to-end InfiniBand and June 2013 Ethernet interconnect solutions and services for servers and storage. PJC Role: Piper Jaffray acted as exclusive financial advisor to IPtronics A/S. Date: May 2013 $46,000,000 Client: inContact, Inc. (NasdaqCM: SAAS) Transaction: inContact closed a $46.0 million follow-on offering of 6,396,389 shares of common stock, priced at $7.15 per share. Client Description: inContact, Inc. provides cloud contact center software solutions. PJC Role: Piper Jaffray acted as bookrunner for the offering. -

Conexant Systems, Inc

Conexant Systems, Inc. Notice of Annual Meeting, Proxy Statement and 2009 Annual Report on Form 10-K Corporate Overview Conexant develops innovative semiconductor solutions for imaging, audio, embedded-modem, and video applications, which are all areas where the company has established leadership positions. Conexant is a fabless company headquartered in Newport Beach, Calif., and has key design centers in the U.S., China, and India, and sales offi ces worldwide. CONEXANT SYSTEMS, INC. D. SCOTT MERCER CHAIRMAN AND CHIEF EXECUTIVE OFFICER To Our Shareholders: Conexant today is a company transformed. We are now a company to Wall Street in a series of investor meetings and smaller, leaner, and more profi table enterprise focused on successfully accessed the capital markets by executing a public delivering operational excellence and innovative semiconductor offering of new shares, raising a net amount of $21.2 million. solutions for imaging, audio, embedded-modem, and video surveillance applications. In each of these areas, we’re We are now focused on retiring, refi nancing, or restructuring committed to building on the leading positions we’ve established. our convertible notes, which are “puttable” in March 2011. In the past few months, we have been responding to requests by During fi scal 2009 we made outstanding progress across multiple note holders to exchange new shares of common stock for fronts. In the face of extraordinarily challenging economic convertible notes, which improves our balance sheet without conditions, we improved our fi nancial performance, strengthened negatively affecting our enterprise value. Satisfying our our capital structure, and introduced a full slate of compelling convertible debt remains one of our highest company priorities, new products. -

Press Release

Press Release Wolfson licenses Oxford Digital’s TinyCore DSP Edinburgh, UK, 5 January 2011 – Wolfson Microelectronics plc, a global leader in the design and development of mixed-signal semiconductors and Audio Hubs that combine to deliver High Definition Audio solutions, has today announced a licence agreement with audio processing specialist Oxford Digital Limited to use its digital signal processor (DSP) core in a selection of Wolfson’s industry-leading Audio Hub products. Oxford Digital’s TinyCore DSP core delivers a low-power, low gate count, highly configurable solution which simplifies the software development process, allowing easy porting of audio function algorithms thereby reducing the time-to-market for customers. Wolfson’s architecture-defining Audio Hubs blend together many of Wolfson’s successful audio components to deliver world-class audio performance, significantly enhanced battery life, longer music playback time and more end user features at a lower total cost. Crucially, Wolfson’s Audio Hubs enable system designers to optimally manage increasingly complex multiple use cases in portable multimedia applications including smartphones, tablet computers, e-book readers, navigation devices and media players. Eddie Sinnott, Portfolio Director at Wolfson Microelectronics, said, “We selected Oxford Digital’s TinyCore DSP due to its efficiency at executing certain key High Definition Audio algorithms, which our customers tell us provide them with a differentiator in their market place. Our choice was helped by the ease of its software porting process, excellent power efficiency, scalability and rapid time-to-market, all of which complement our architecture.” John Richards, CEO of Oxford Digital, said, “Wolfson is a leader in high-quality and low-power mixed-signal audio and we are excited to be working with them on integrating our IP into their products. -

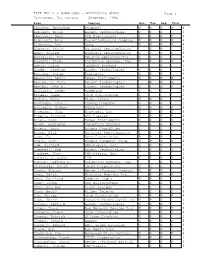

IEEE 802.3 & Subgroups

IEEE 802.3 & Subgroups - Attendance Sheet Page 1 Vancouver, BC, Canada November, 1996 Name Company Mon Tue Wed Thur Abraham, Menachem Prominet P P P P 4 Ackland, Bryan Lucent Technologies P P P 3 Aggarwal, Nand PCA Electronics P P P P 4 Albrecht, Alan Hewlett-Packard Company P P P P 4 Alderrou, Don Seeq P P P P 4 Almagor, David National Semiconductor P P 2 Amer, Khaled Rockwell Semiconductor P P P P 4 Annamalai, Kay Pericom Semiconductor P P P 3 Augusta, Steve Cabletron systems, Inc. P P P P 4 Aytac, Haluk Hewlett Packard P 1 Azadet, Kameran Lucent Technologies P P P 3 Batscha, Yoram Fibronics P P P 3 Beaudoin, Denis Texas Instruments P P P P 4 Benson, J. Paul Lucent Technologies P P P 3 Bestel, John L. Lucent Technologies P P P P 4 Billings, Roger Wideband P P P 3 Binder, James 3Com Corporation P 1 Bohrer, Mark Micro Linear P P P 3 Bontemps, Evan J. COMPAQ Computer P P P 3 Bouzaglo, Sidney NORDX/CDT P P P P 4 Bowerman, John Honeywell Inc. P P P P 4 Bowers, Richard GEC Plessey P P P P 4 Brier, Dave Texas Instruments P P P P 4 Brown, Benjamin Cabletron Systems P P P P 4 Bryers, Mark Bryers Consulting P P P 3 Bunch, Bill National Semiconductor P P P P 4 Cady, Ed Berg Electronics P P P 3 Cagle, John Compaq Computer Corp. P P P P 4 Cam, Richard PMC-Sierra, Inc. -

A Decade of Semiconductor Companies : 1988 Edition

1988 y DataQuest Do Not Remove A. Decade of Semiconductor Companies 1988 Edition Components Division TABLE OF CONTENTS Page I. Introduction 1 II. Venture Capital 11 III. Strategic Alliances 15 IV. Product Analysis 56 Emerging Technology Companies 56 Analog ICs 56 ASICs 58 Digital Signal Processing 59 Discrete Semiconductors 60 Gallium Arsenide 60 Memory 62 Microcomponents 64 Optoelectronics 65 Telecommunication ICs 65 Other Products 66 Bubble Memory 67 V. Company Profiles (139) 69 A&D Co., Ltd. 69 Acrian Inc. 71 ACTEL Corporation 74 Acumos, Inc. 77 Adaptec, Inc. 79 Advanced Linear Devices, Inc. 84 Advanced Microelectronic Products, Inc. 87 Advanced Power Technology, Inc. 89 Alliance Semiconductor 92 Altera Corporation 94 ANADIGICS, Inc. 100 Applied Micro Circuits Corporation 103 Asahi Kasei Microsystems Co., Ltd. 108 Aspen Semiconductor Corporation 111 ATMEL Corporation 113 Austek Microsystems Pty. Ltd. 116 Barvon Research, Inc. 119 Bipolar Integrated Technology 122 Brooktree Corporation 126 California Devices, Ihc. 131 California Micro Devices Corporation 135 Calmos Systems, Inc. 140 © 1988 Dataquest Incorporated June TABLE OF CONTENTS (Continued) Pagg Company Profiles (Continued) Calogic Corporation 144 Catalyst Semiconductor, Inc. 146 Celeritek, Inc. ISO Chartered Semiconductor Pte Ltd. 153 Chips and Technologies, Inc. 155 Cirrus Logic, Inc. 162 Conductus Inc. 166 Cree Research Inc. 167 Crystal Semiconductor Corporation 169 Custom Arrays Corporation 174 Custom Silicon Inc. 177 Cypress Semiconductor Corporation 181 Dallas Semiconductor Corporation 188 Dolphin Integration SA 194 Elantec, Inc. 196 Electronic Technology Corporation 200 Epitaxx Inc. 202 European Silicon Structures 205 Exel Microelectronics Inc. 209 G-2 Incorporated 212 GAIN Electronics 215 Gazelle Microcircuits, Inc. 218 Genesis Microchip Inc. -

Company Vendor ID (Decimal Format) (AVL) Ditest Fahrzeugdiagnose Gmbh 4621 @Pos.Com 3765 0XF8 Limited 10737 1MORE INC

Vendor ID Company (Decimal Format) (AVL) DiTEST Fahrzeugdiagnose GmbH 4621 @pos.com 3765 0XF8 Limited 10737 1MORE INC. 12048 360fly, Inc. 11161 3C TEK CORP. 9397 3D Imaging & Simulations Corp. (3DISC) 11190 3D Systems Corporation 10632 3DRUDDER 11770 3eYamaichi Electronics Co., Ltd. 8709 3M Cogent, Inc. 7717 3M Scott 8463 3T B.V. 11721 4iiii Innovations Inc. 10009 4Links Limited 10728 4MOD Technology 10244 64seconds, Inc. 12215 77 Elektronika Kft. 11175 89 North, Inc. 12070 Shenzhen 8Bitdo Tech Co., Ltd. 11720 90meter Solutions, Inc. 12086 A‐FOUR TECH CO., LTD. 2522 A‐One Co., Ltd. 10116 A‐Tec Subsystem, Inc. 2164 A‐VEKT K.K. 11459 A. Eberle GmbH & Co. KG 6910 a.tron3d GmbH 9965 A&T Corporation 11849 Aaronia AG 12146 abatec group AG 10371 ABB India Limited 11250 ABILITY ENTERPRISE CO., LTD. 5145 Abionic SA 12412 AbleNet Inc. 8262 Ableton AG 10626 ABOV Semiconductor Co., Ltd. 6697 Absolute USA 10972 AcBel Polytech Inc. 12335 Access Network Technology Limited 10568 ACCUCOMM, INC. 10219 Accumetrics Associates, Inc. 10392 Accusys, Inc. 5055 Ace Karaoke Corp. 8799 ACELLA 8758 Acer, Inc. 1282 Aces Electronics Co., Ltd. 7347 Aclima Inc. 10273 ACON, Advanced‐Connectek, Inc. 1314 Acoustic Arc Technology Holding Limited 12353 ACR Braendli & Voegeli AG 11152 Acromag Inc. 9855 Acroname Inc. 9471 Action Industries (M) SDN BHD 11715 Action Star Technology Co., Ltd. 2101 Actions Microelectronics Co., Ltd. 7649 Actions Semiconductor Co., Ltd. 4310 Active Mind Technology 10505 Qorvo, Inc 11744 Activision 5168 Acute Technology Inc. 10876 Adam Tech 5437 Adapt‐IP Company 10990 Adaptertek Technology Co., Ltd. 11329 ADATA Technology Co., Ltd. -

European Company Profiles Acapella Ltd

European Company Profiles Acapella Ltd. ACAPELLA LTD. Acapella Ltd. Delta House Chilworth Research Centre Southampton, Hants SO16 7NS United Kingdom Telephone: (44) 1703-769008 Fax: (44) 1703-768612 Web Site: www.acapella.co.uk Fabless IC Supplier Company Overview and Strategy Founded in 1990, Acapella has three primary activities. Acapella is a designer and fabless manufacturer of ICs for fiber optic communication applications. Additionally, Acapella provides IC design consultancy services, (resulting from 1992 acquisition of Analogue Design Consultants - ADC), and serves as Tanner Research, Inc.’s (Pasadena, CA) European distributor for IC CAD tools. Acapella’s strategy is to target the fiber optic communications market because it fits their position as a small start-up company with limited resources. As the company grows in size and reputation, they plan to move more toward mainstream optical communications products. Acapella markets its products worldwide and has a single regional distributor in Denmark, Germany, Israel, Italy, Japan, Portugal, Spain and Taiwan, as well as two distributors in both Switzerland and the U.S. Management Phil Tolcher Sales Manager Products and Processes Acapella’s IC division focuses on the design and manufacture of ICs for Fiber Optic Communications. Their particular expertise is the merging of high performance analogue circuitry with intelligent digital interface and control technologies. Acapella claims this has made them the leading supplier of Ping Pong single fibre communications ICs. Acapella positions their Ping Pong ICs as providing full duplex links without the need for expensive WDM optics. Acapella also offers a single LED IC for both transmission and reception (low cost, normal link budgets), and single wavelength Laser Duplex Devices (for higher link budgets). -

United States Bankruptcy Court District of Delaware

B6 Summary (Offcial Form 6 - Summary) (12107) United States Bankruptcy Court District of Delaware In re MagnaChip Semiconductor LLC Case No. 09-12009 (PJW) Debtor Chapter 11 SUMMARY OF SCHEDULES Indicate as to each schedule whether that schedule is attached and state the number of pages in each. Report the totals from Schedules A, B, D, E, F, I, and J in the boxes provided. Add the amounts from Schedules A and B to determine the total amount of the debtor's assets. Add the amounts of all claims from Schedules D, E, and F to determine the total amount of the debtor's liabilities. Individual debtors must also complete the "Statistical Summar of Certain Liabilities and Related Data" if they fie a case under chapter 7,11, or 13. NAME OF SCHEDULE ATTACHED NO. OF ASSETS LIABILITIES OTHER (YES/NO) SHEETS A - Real Propert Yes 1 B - Personal Propert Yes 4 C - Propert Claimed as Exempt No 0 D - Creditors Holding Secured Claims Yes 1 E - Creditors Holding Unsecured Yes 2 Priority Claims (Total of Claims on Schedule E) F - Creditors Holding Unsecured Yes 2 Nonpriority Claims G - Executory Contracts and Yes 23 Unexpired Leases H - Codebtors Yes 5 1 - Current Income ofIndividual No 0 Debtor(s) J - Current Expenditures ofIndividual No 0 Debtor(s) 38 Total Number of Sheets of ALL Schedules Total Assets Total Liabilities 851,879,383.52 Copyright (e) 1996-2009. Best Case Solutions. Evanston, IL. (BOO) 492.B037 Best Case Bankruptcy UNITED STATES BANKRUPTCY COURT FOR THE DISTRICT OF DELAWARE Global Notes and Statement of Limitations, Methodology and Disclaimer Regarding the Debtors' Statement and Schedules MagnaChip Semiconductor Finance Company, MagnaChip Semiconductor LLC, MagnaChip Semiconductor SA Holdings LLC, MagnaChip Semiconductor, Ii1c., MagnaChip Semiconductor S.A., and MagnaChip Semiconductor B.V. -

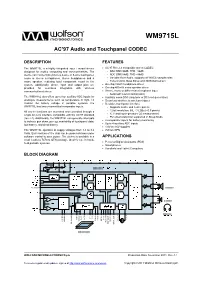

Wolfson WM9715L Audio Codec and Touch Screen Controller

w WM9715L AC’97 Audio and Touchpanel CODEC DESCRIPTION FEATURES The WM9715L is a highly integrated input / output device AC’97 Rev 2.2 compatible stereo CODEC designed for mobile computing and communications. The - DAC SNR 90dB, THD –86dB device can connect directly to a 4-wire or 5-wire touchpanel, - ADC SNR 88dB, THD –88dB mono or stereo microphones, stereo headphones and a - Variable Rate Audio, supports all WinCE sample rates mono speaker, reducing total component count in the - Tone Control, Bass Boost and 3D Enhancement system. Additionally, phone input and output pins are On-chip 45mW headphone driver provided for seamless integration with wireless On-chip 400mW mono speaker driver communication devices. Stereo, mono or differential microphone input - Automatic Level Control (ALC) The WM9715L also offers up to four auxiliary ADC inputs for Auxiliary mono DAC (ring tone or DC level generation) analogue measurements such as temperature or light. To Seamless interface to wireless chipset monitor the battery voltage in portable systems, the Resistive touchpanel interface WM9715L has two uncommitted comparator inputs. - Supports 4-wire and 5-wire panels All device functions are accessed and controlled through a - 12-bit resolution, INL 3 LSBs (<0.5 pixels) single AC-Link interface compatible with the AC’97 standard - X, Y and touch-pressure (Z) measurement (rev 2.2). Additionally, the WM9715L can generate interrupts - Pen-down detection supported in Sleep Mode to indicate pen down, pen up, availability of touchpanel data, 2 comparator inputs for battery monitoring Up to 4 auxiliary ADC inputs low battery, and dead battery. 1.8V to 3.6V supplies The WM9715L operates at supply voltages from 1.8 to 3.6 7x7mm QFN Volts. -

CLASS D AUDIO DESIGN TIPS About Us

CLASS D AUDIO DESIGN TIPS About us Wolfson Microelectronics plc (LSE: WLF.L) is a leading High performance audio global provider of high performance, mixed-signal Wolfson engineers have a passion for audio. We don’t just semiconductors to the consumer electronics market. design our circuits, we listen to them. This ensures that our mixed-signal semiconductors can really differentiate your Renowned internationally for our high performance product in the eyes and ears of your end customer. audio and ultra low power consumption, Wolfson delivers the audio and imaging technology at the Reliable high volume supply heart of some of the world’s most successful digital Wolfson’s proven ability to supply in high volume has consumer goods. Applications which include mobile helped us fi nd a place at the heart of some of the world’s phones, MP3 players, digital cameras, fl at panel most sought-after digital electronic products. televisions, gaming consoles, Hi-Fis, all-in-one printers and scanners, and DVD players and recorders. Advanced mixed-signal design Experience really counts in mixed-signal design and, If a digital product can capture an image or play a with over 20 years experience, our design team is able to sound Wolfson aims to have a product that will allow it produce some of the world’s most advanced to perform these tasks better. mixed-signal devices. Wolfson’s headquarters are in Edinburgh, UK, where Engineer to engineer we employ some of the most experienced and With our background as a design house, Wolfson innovative designers and engineers in the industry.