Campus Bridging Workshop Reports

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Multiprocessing Contents

Multiprocessing Contents
1 Multiprocessing
  1.1 Pre-history
  1.2 Key topics
    1.2.1 Processor symmetry
    1.2.2 Instruction and data streams
    1.2.3 Processor coupling
    1.2.4 Multiprocessor Communication Architecture
  1.3 Flynn’s taxonomy
    1.3.1 SISD multiprocessing
    1.3.2 SIMD multiprocessing
    1.3.3 MISD multiprocessing
    1.3.4 MIMD multiprocessing
  1.4 See also
  1.5 References
2 Computer multitasking
  2.1 Multiprogramming
  2.2 Cooperative multitasking
  2.3 Preemptive multitasking
  2.4 Real time
  2.5 Multithreading
  2.6 Memory protection
  2.7 Memory swapping
  2.8 Programming
  2.9 See also
  2.10 References -

Announcing Today

Mobile Internet Devices: The Innovation Platform. Anand Chandrasekher, Intel Corporation.

PC Internet Growth. [Chart: Internet traffic in TB/month (logarithmic axis) over time, through 2008. Source: Cisco, Monthly Internet Traffic Worldwide.]

But Internet Usage is Changing: Social Networking. EBSCO, Advertising Age.

Alexa Global Internet Traffic Rankings

| Rank | 1999 | 2005 (1) | 2008 (2) |
|------|------|----------|----------|
| 1 | AOL | yahoo.com | yahoo.com |
| 2 | yahoo.com | msn.com | youtube.com |
| 3 | Microsoft/msn.com | google.com | live.com |
| 4 | lycos.com | ebay.com | google.com |
| 5 | go.com | amazon.com | myspace.com |
| 6 | realnetworks.com | microsoft.com | facebook.com |
| 7 | excite@home | myspace.com | msn.com |
| 8 | ebay.com | google.co.uk | hi5.com |
| 9 | altavista.com | aol.com | wikipedia.org |
| 10 | timewarner.com | go.com | orkut.com |

Traffic rank is based on three months of aggregated historical traffic data from Alexa Toolbar users and is a combined measure of page views / users (geometric mean of the two quantities averaged over time). (1) Rankings as of 12/31/05, excludes Microsoft Passport; (2) Rankings as of 11/06/07. Source: Alexa Global Traffic Rankings, Morgan Stanley Research.

Internet Unabated: Social Networking, User Generated Content, Location. [Chart: users (millions) and revenues, 2008 to 2013.] >300M Unique Global Visitors. Facebook: 84% Y-on-Y Growth; June ’07: 52M unique visitors, 28B Minutes; June ’08: 132M unique visitors. Source: ComScore World Metrics.

Users Want to Take These Experience with Them. 2 to 1 Preference for Full Internet vs. -

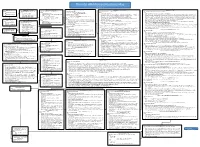

The Intel X86 Microarchitectures Map Version 2.0

The Intel x86 Microarchitectures Map Version 2.0 P6 (1995, 0.50 to 0.35 μm) 8086 (1978, 3 µm) 80386 (1985, 1.5 to 1 µm) P5 (1993, 0.80 to 0.35 μm) NetBurst (2000 , 180 to 130 nm) Skylake (2015, 14 nm) Alternative Names: i686 Series: Alternative Names: iAPX 386, 386, i386 Alternative Names: Pentium, 80586, 586, i586 Alternative Names: Pentium 4, Pentium IV, P4 Alternative Names: SKL (Desktop and Mobile), SKX (Server) Series: Pentium Pro (used in desktops and servers) • 16-bit data bus: 8086 (iAPX Series: Series: Series: Series: • Variant: Klamath (1997, 0.35 μm) 86) • Desktop/Server: i386DX Desktop/Server: P5, P54C • Desktop: Willamette (180 nm) • Desktop: Desktop 6th Generation Core i5 (Skylake-S and Skylake-H) • Alternative Names: Pentium II, PII • 8-bit data bus: 8088 (iAPX • Desktop lower-performance: i386SX Desktop/Server higher-performance: P54CQS, P54CS • Desktop higher-performance: Northwood Pentium 4 (130 nm), Northwood B Pentium 4 HT (130 nm), • Desktop higher-performance: Desktop 6th Generation Core i7 (Skylake-S and Skylake-H), Desktop 7th Generation Core i7 X (Skylake-X), • Series: Klamath (used in desktops) 88) • Mobile: i386SL, 80376, i386EX, Mobile: P54C, P54LM Northwood C Pentium 4 HT (130 nm), Gallatin (Pentium 4 Extreme Edition 130 nm) Desktop 7th Generation Core i9 X (Skylake-X), Desktop 9th Generation Core i7 X (Skylake-X), Desktop 9th Generation Core i9 X (Skylake-X) • Variant: Deschutes (1998, 0.25 to 0.18 μm) i386CXSA, i386SXSA, i386CXSB Compatibility: Pentium OverDrive • Desktop lower-performance: Willamette-128 -

EU Energy Star Database | Laptop PC Archive 2006-2007 | 2007.07.20

LAPTOP PC - archive 2006-2007 ) BRAND MODEL Watts in Sleep in On / Idle Watts CPU (MHz) speed Rated RAM (MB) Hard disk (GB) Cache (kB) Video RAM (MB) Power supply (W) System Operating storage Optical Year EU (2006/2007 Current PD_ID Acer Aspire 1200 Series 2.66 Celeron 1300 256 20 256 60 CDr 2002 10120 Acer Aspire 1200X 2.72 Celeron 1000 128 10 60 XP Pro CDr 2002 10108 Acer Aspire 1200XV (10GB) 2.72 Celeron 1000 128 10 60 XP Pro CDr 2002 10106 Acer Aspire 1200XV (20 GB) 2.72 Celeron 1000 256 20 60 XP Pro CDr 2002 10107 Acer Aspire 1202XC (10 GB) 2.76 Celeron 1200 256 10 256 60 XP Pro CDr 2002 10104 Acer Aspire 1202XC (20 GB) 2.74 Celeron 1200 256 20 256 60 XP Pro CDr 2002 10105 Acer Aspire 1310 (also in 14.1", 2.9 kg version) 0.80 AMD 2000 60 512 32 75 XP DVDr/CDrw 2003 1003531 Acer Aspire 1400 Series 1.63 P4 2000 256 30 512 90 DVDr/CDrw 2002 10118 Acer Aspire 1410 series 0.97 Celeron 1500 512 80 1024 64 65 XP 2004 x 1022450 Acer Aspire 1450 series 1.20 Athlon 1860 512 60 512 64 90 XP DVDrw 2003 1013067 Acer Aspire 1640Z series 0.95 12.00 PM 2000 512 80 2048 128 65 XP DVDrw 2006 x 1035428 Acer Aspire 1650Z series 0.95 12.00 PM 2000 512 80 2048 128 65 XP DVDrw 2006 x 1035429 Acer Aspire 1680 series 0.97 PM 2000 512 80 2048 64 65 XP 2004 x 1022449 Acer Aspire 1690 series 0.95 15.30 PM 2130 2048 80 2048 128 65 XP DVDrw 2005 x 1025226 Acer Aspire 1800 2.10 P4 3800 2048 80 1024 128 150 XP DVDrw 2004 x 1022660 Acer Aspire 2000 series 2.30 PM 1700 512 80 100 65 XP DVDr 2003 1012901 Acer Aspire 2010 series 2.61 P4 1800 1024 80 1000 65 65 XP CDr -

The Intel X86 Microarchitectures Map Version 2.2

The Intel x86 Microarchitectures Map Version 2.2 P6 (1995, 0.50 to 0.35 μm) 8086 (1978, 3 µm) 80386 (1985, 1.5 to 1 µm) P5 (1993, 0.80 to 0.35 μm) NetBurst (2000 , 180 to 130 nm) Skylake (2015, 14 nm) Alternative Names: i686 Series: Alternative Names: iAPX 386, 386, i386 Alternative Names: Pentium, 80586, 586, i586 Alternative Names: Pentium 4, Pentium IV, P4 Alternative Names: SKL (Desktop and Mobile), SKX (Server) Series: Pentium Pro (used in desktops and servers) • 16-bit data bus: 8086 (iAPX Series: Series: Series: Series: • Variant: Klamath (1997, 0.35 μm) 86) • Desktop/Server: i386DX Desktop/Server: P5, P54C • Desktop: Willamette (180 nm) • Desktop: Desktop 6th Generation Core i5 (Skylake-S and Skylake-H) • Alternative Names: Pentium II, PII • 8-bit data bus: 8088 (iAPX • Desktop lower-performance: i386SX Desktop/Server higher-performance: P54CQS, P54CS • Desktop higher-performance: Northwood Pentium 4 (130 nm), Northwood B Pentium 4 HT (130 nm), • Desktop higher-performance: Desktop 6th Generation Core i7 (Skylake-S and Skylake-H), Desktop 7th Generation Core i7 X (Skylake-X), • Series: Klamath (used in desktops) 88) • Mobile: i386SL, 80376, i386EX, Mobile: P54C, P54LM Northwood C Pentium 4 HT (130 nm), Gallatin (Pentium 4 Extreme Edition 130 nm) Desktop 7th Generation Core i9 X (Skylake-X), Desktop 9th Generation Core i7 X (Skylake-X), Desktop 9th Generation Core i9 X (Skylake-X) • New instructions: Deschutes (1998, 0.25 to 0.18 μm) i386CXSA, i386SXSA, i386CXSB Compatibility: Pentium OverDrive • Desktop lower-performance: Willamette-128 -

[Procesadores]

FRIDAY, 29 November 2013 SENA ERICK DE LA HOZ ANDERSSON PALMA ALEXIS GUERRERO FREDER TORRES MARCOS MONTENEGRO [PROCESADORES] The brain of a microcomputer is the microprocessor: it handles the computer's arithmetic, logic, and control needs, and all work carried out on a computer is performed directly or indirectly by the microprocessor. INTRODUCTION It is known by its English acronym CPU (Central Processing Unit). The microprocessor has its origins in the 1960s, when the integrated circuit (IC) was designed by combining several electronic components into a single component on a silicon "chip". The microprocessor is a type of electronic component containing thousands (or millions) of elements called transistors, whose combination allows the chip to carry out the work assigned to it. The microprocessor made the personal computer (PC) possible. Today, one or more of these modern marvels serve as the brain not only of personal computers but also of many other devices, such as toys, household appliances, automobiles, etc. After the emergence of the PC, research and development of microprocessors became big business. The most successful producer of microprocessors, Intel Corporation, made the microprocessor its most lucrative product in the PC market. Despite this strong relationship between PC and microprocessor, PCs are only one of the most visible applications of microprocessor technology, and they represent a fraction of all microprocessors produced and sold. Microprocessors are so common that we probably do not realize their value; we never think about them, perhaps because the great majority of them are always hidden inside devices. -

Productivity and Software Development Effort Estimation in High-Performance Computing

ERGEBNISSE AUS DER INFORMATIK Sandra Wienke Productivity and Software Development Effort Estimation in High-Performance Computing Productivity and Software Development Effort Estimation in High-Performance Computing Dissertation approved by the Faculty of Mathematics, Computer Science and Natural Sciences of RWTH Aachen University for the award of the academic degree of Doctor of Natural Sciences, submitted by Sandra Juliane Wienke, Master of Science, from Berlin-Wedding. Reviewers: University Professor Dr. Matthias S. Müller, University Professor Dr. Thomas Ludwig. Date of the oral examination: 18 September 2017. This dissertation is available online on the website of the university library. Bibliographic information of the Deutsche Nationalbibliothek: The Deutsche Nationalbibliothek lists this publication in the Deutsche Nationalbibliografie; detailed bibliographic data are available on the Internet at http://dnb.ddb.de. Sandra Wienke: Productivity and Software Development Effort Estimation in High-Performance Computing, 1st edition, 2017. Printed on wood-free and acid-free paper, 100% chlorine-free bleached. Apprimus Verlag, Aachen, 2017. Scientific publisher of the Institute for Industrial Communication and Trade Media at RWTH Aachen, Steinbachstr. 25, 52074 Aachen. Internet: www.apprimus-verlag.de, E-Mail: [email protected] Printed in Germany ISBN 978-3-86359-572-2 D 82 (Diss. RWTH Aachen University, 2017) Abstract Ever increasing demands for computational power are concomitant with rising electrical power needs and complexity in hardware and software designs. Accordingly, increasing expenses for hardware, electrical power and programming tighten the rein on available budgets. Hence, informed decision making on how to invest available budgets is more important than ever. Especially for procurements, a quantitative metric is needed to predict the cost effectiveness of an HPC center.
In this work, I set up models and methodologies to support the HPC procurement process of German HPC centers. -

The Intel X86 Microarchitectures Map Version 2.1

The Intel x86 Microarchitectures Map Version 2.1 P6 (1995, 0.50 to 0.35 μm) 8086 (1978, 3 µm) 80386 (1985, 1.5 to 1 µm) P5 (1993, 0.80 to 0.35 μm) NetBurst (2000 , 180 to 130 nm) Alternative Names: i686 Series: Alternative Names: iAPX 386, 386, i386 Alternative Names: Pentium, 80586, 586, i586 Alternative Names: Pentium 4, Pentium IV, P4 • 16-bit data bus: 8086 (iAPX Series: Series: Pentium Pro (used in desktops and servers) Series: Series: Skylake (2015, 14 nm) 86) • Desktop/Server: P5, P54C Variant: Klamath (1997, 0.35 μm) • Desktop/Server: i386DX • Desktop: Willamette (180 nm) Alternative Names: SKL (Desktop and Mobile), SKX (Server) • 8-bit data bus: 8088 (iAPX • Desktop/Server higher-performance: P54CQS, P54CS Alternative Names: Pentium II, PII • Desktop lower-performance: i386SX • Desktop higher-performance: Northwood Pentium 4 (130 nm), Northwood B Pentium 4 HT (130 nm), Series: 88) • Mobile: P54C, P54LM Series: Klamath (used in desktops) • Mobile: i386SL, 80376, i386EX, Northwood C Pentium 4 HT (130 nm), Gallatin (Pentium 4 Extreme Edition 130 nm) • • Compatibility: Pentium OverDrive New instructions: Deschutes (1998, 0.25 to 0.18 μm) Desktop: Desktop 6th Generation Core i5 (Skylake-S and Skylake-H) i386CXSA, i386SXSA, i386CXSB • Desktop lower-performance: Willamette-128 (Celeron), Northwood-128 (Celeron) • Desktop higher-performance: Desktop 6th Generation Core i7 (Skylake-S and Skylake-H), Desktop 7th Generation Core i7 X (Skylake-X), Variant: P55C (1997, 0.28 to 0.25 μm) Alternative Names: Pentium II, PII • Mobile: Northwood -

The Intel X86 Microarchitectures Map Version 3.0

The Intel x86 Microarchitectures Map Version 3.0 8086 (1978, 3 µm) 80386 (1985, 1.5 to 1 µm) P5 (1993, 0.80 to 0.35 μm) P6 (1995, 0.50 to 0.35 μm) Skylake (2015, 14 nm) NetBurst (2000 , 180 to 130 nm) Series: Alternative Names: iAPX 386, 386, i386 Alternative Names: Pentium, 80586, 586, i586 Alternative Names: i686 Alternative Names: SKL (Desktop and Mobile), SKX (Server) Alternative Names: Pentium 4, Pentium IV, P4 • 16-bit data bus: 8086 (iAPX Series: Series: Series: Pentium Pro (used in desktops and servers) Series: • Series: 86) • Desktop/Server: i386DX Desktop/Server: P5, P54C Variant: Klamath (1997, 0.35 μm) • Desktop: Desktop 6th Generation Core i5 (i5-6xxx, S-H) • • Desktop: Pentium 4 1.x (except those with a suffix, Willamette, 180 nm), Pentium 4 2.0 (Willamette, 180 • 8-bit data bus: 8088 (iAPX • Desktop lower-performance: i386SX Desktop/Server higher-performance: P54CQS, P54CS Alternative Names: Pentium II, PII • Desktop higher-performance: Desktop 6th Generation Core i7 (i7-6xxx, S-H), Desktop 7th Generation Core i7 X (i7-7xxxX, X), Desktop 7th • nm) 88) • Mobile: i386SL, 80376, i386EX, Mobile: P54C, P54LM Series: Klamath (used in desktops) Generation Core i9 X (i7-7xxxXE, i7-7xxxX, X), Desktop 9th Generation Core i7 X (i7-9xxxX, X), Desktop 9th Generation Core i9 X (i7- • • Desktop higher-performance: Pentium 4 1.xA (Northwood, 130 nm), Pentium 4 2.x (except 2.0, 2.40A, i386CXSA, i386SXSA, i386CXSB Compatibility: Pentium OverDrive New instructions: Deschutes (1998, 0.25 to 0.18 μm) 9xxxXE, i7-9xxxX, X) Variant: P55C (1997, -

Aqeri Technology Built Tough Product Guide

Product Guide: Industry & Vehicle. Aqeri Technology Built Tough. It’s a hard road. Filled with twists, turns and bumps. Vehicle-mounted computers are in constant motion. And that means that they’re always being jostled and jolted about. But they’re not just subject to the rough conditions of life on the road. There is also a wide range of environmental conditions to take into account, from sub-zero storage temperatures to the dirtiest of jobs. Not to mention the occasionally hard-handed use from a steady stream of different operators. Aqeri has extensive experience of developing technology for use in vehicles of all shapes and sizes. Our products are in use in long-haul supply transports, forklifts and tracked vehicles. To name just a few. Put simply, we keep our customers on the move.

BOTEK: In collaboration with Botek, Aqeri supplies durable computers to Sweden’s leading waste management operators. Their fleets of recycling and refuse vehicles collect wet, dirty and toxic materials from industries, households and public works the year round. Our rugged systems not only keep track of where the vehicles are and where they’re going, but even the weight measurements of the materials they’ve collected.

KAPSCH TRAFFICOM: Kapsch Trafficom is a leading European supplier of Fast Lane Tolling systems that enable heavy transport vehicles to pass toll stations without having to stop. Aqeri’s robust products make sure these trucks keep rolling. Our rugged solutions withstand everything that highways, freeways and motorways can kick up – and include specially modified industrial computers, laptops and RAID servers. -

Advancing the Cyberinfrastructure for Integrated Water Resources Modeling

Utah State University, DigitalCommons@USU. All Graduate Theses and Dissertations, Graduate Studies, 12-2017. Advancing the Cyberinfrastructure for Integrated Water Resources Modeling. Caleb A. Buahin, Utah State University. Follow this and additional works at: https://digitalcommons.usu.edu/etd Part of the Civil and Environmental Engineering Commons. Recommended Citation: Buahin, Caleb A., "Advancing the Cyberinfrastructure for Integrated Water Resources Modeling" (2017). All Graduate Theses and Dissertations. 6901. https://digitalcommons.usu.edu/etd/6901 This Dissertation is brought to you for free and open access by the Graduate Studies at DigitalCommons@USU. It has been accepted for inclusion in All Graduate Theses and Dissertations by an authorized administrator of DigitalCommons@USU. For more information, please contact [email protected]. ADVANCING THE CYBERINFRASTRUCTURE FOR INTEGRATED WATER RESOURCES MODELING by Caleb A. Buahin. A dissertation submitted in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY in Civil and Environmental Engineering. Approved: Jeffery S. Horsburgh (Major Professor); David G. Tarboton (Committee Member); Bethany T. Neilson (Committee Member); Sarah E. Null (Committee Member); Steven J. Burian (Committee Member); Mark R. McLellan, Ph.D. (Vice President for Research and Dean of the School of Graduate Studies). UTAH STATE UNIVERSITY, Logan, Utah, 2017. Copyright © Caleb A. Buahin 2017. All Rights Reserved. ABSTRACT Advancing the Cyberinfrastructure for Integrated Water Resources Modeling by Caleb A. Buahin, Doctor of Philosophy, Utah State University, 2017. Major Professor: Dr. Jeffery S. Horsburgh. Department: Civil and Environmental Engineering.
Water systems in the United States, especially those in the semi-arid western parts, are heavily human mediated because of the emphasis on managing the scarce water resources available and protecting life and property from water related hazards. -

ASIA EX-JAPAN IT HARDWARE Initiating Coverage: Ultrabooks And

EQUITY RESEARCH, 6 October 2011. INITIATING COVERAGE: ASIA EX-JAPAN IT HARDWARE. Asia ex-Japan IT Hardware: 2-NEUTRAL, Unchanged. For a full list of our ratings, price targets and earnings in this report, please see table on page 2. Asia ex-Japan IT Hardware: Allen Chang, +8862 663 84695, [email protected], BCSTW, Taiwan; Kirk Yang, +852 290 34635, [email protected], Barclays Bank, Hong Kong.

Initiating coverage: Ultrabooks and tablets creating opportunities for some suppliers. We initiate on three IT hardware suppliers – Foxconn Tech (FTC), Shin Zu Shing (SZS) and Ju Teng – all No. 1 in their sub-segments of notebook casings and hinges: We expect FTC (2354 TT, 1-OW, PT NT$120) to benefit from a 3-year upcycle for metal casings and SZS (3376 TT, 2-EW, PT NT$58) to profit on the likely adoption of hollow-type hinges in ultra-thin NBs. However, we believe Ju Teng (3336 HK, 3-UW, PT HK$1.20) will suffer near term from the cannibalization of NBs with plastic casings until it can fully offer metal casings. We maintain our 2-Neutral view on the Asia ex-Japan IT Hardware sector with FTC, Simplo, Cheng Uei, Acer and Lenovo our top picks.

Tablets/ultrabooks appear the way forward: Most importantly, we view FTC, SZS and Ju Teng as important winners and losers – along with polymer battery makers Simplo (1-OW, PT NT$290) and Dynapack (2-EW, NT$130) – in the current migration from conventional NBs with plastic casings to tablet/ultrabooks with metal casings.