Predicting Outcome of Indian Premier League (IPL) Matches Using Machine Learning

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Captain Cool: the MS Dhoni Story

Captain Cool The MS Dhoni Story GULU Ezekiel is one of India’s best known sports writers and authors with nearly forty years of experience in print, TV, radio and internet. He has previously been Sports Editor at Asian Age, NDTV and indya.com and is the author of over a dozen sports books on cricket, the Olympics and table tennis. Gulu has also contributed extensively to sports books published from India, England and Australia and has written for over a hundred publications worldwide since his first article was published in 1980. Based in New Delhi from 1991, in August 2001 Gulu launched GE Features, a features and syndication service which has syndicated columns by Sir Richard Hadlee and Jacques Kallis (cricket) Mahesh Bhupathi (tennis) and Ajit Pal Singh (hockey) among others. He is also a familiar face on TV where he is a guest expert on numerous Indian news channels as well as on foreign channels and radio stations. This is his first book for Westland Limited and is the fourth revised and updated edition of the book first published in September 2008 and follows the third edition released in September 2013. Website: www.guluzekiel.com Twitter: @gulu1959 First Published by Westland Publications Private Limited in 2008 61, 2nd Floor, Silverline Building, Alapakkam Main Road, Maduravoyal, Chennai 600095 Westland and the Westland logo are the trademarks of Westland Publications Private Limited, or its affiliates. Text Copyright © Gulu Ezekiel, 2008 ISBN: 9788193655641 The views and opinions expressed in this work are the author’s own and the facts are as reported by him, and the publisher is in no way liable for the same. -

Page 01 Jan 06.Indd

SATURDAY 6 JANUARY 2018 | SPORT | 21. “I’m feeling pretty good. I’m hitting the ball well and I’m serving really well. So the first two matches of the year, I’ve got through two tough three-setters.” Australian tennis star Nick Kyrgios after setting up a semi-final against Grigor Dimitrov at the Brisbane International. Men’s singles final: today at 6.00pm at Khalifa International Tennis and Squash Complex. Kohli earns record $2.7m salary for 2018 IPL campaign (AFP). NEW DELHI: Batting star Virat Kohli will pick up the Indian Premier League’s largest-ever salary -- $2.7m -- in this year’s tournament, with a host of big foreign names including Australian captain Steve Smith also cashing in. Kohli will stay with Royal Challengers Bangalore alongside South Africa’s AB de Villiers, who will earn $1.7m in the cash-rich event started by the Indian cricket board in [...] Khawaja, Smith bat well after England post 346 at the SCG (REUTERS). SYDNEY: Australia captain Steve Smith continued to be England’s Ashes nemesis yesterday as he passed 6,000 Test runs in an unbeaten knock of 44 to help his team to 193 for two at close of play on the second day of the fifth Test. The world’s top ranked batsman and Usman Khawaja batted through the final session in a 107-run partnership after openers Cameron Bancroft and David Warner had departed earlier in Australia’s reply to Eng- [...] our team so we don’t have to bowl at him,” Australia fast bowler Pat Cummins said of his captain. “You lose a couple of wickets and he comes out to bat. He’s just incredible, I think he’s been the real difference between the two sides.” The tourists, 3-0 down in the series with the urn already relinquished, earlier posted a competitive tally in the face of some fearsome pace bowling on the back of a hard wagging tail and some poor Australian catching. -

Lekhwiya Eye Persepolis Scalp; Former MotoGP Champion Hayden Dies After Accident

GULF TIMES SPORT, Tuesday, May 23, 2017 (Sha’baan 27, 1438 AH). NBA | Page 5: Gritty Celtics stun Cavs to reduce series deficit. MOTORSPORT | Page 6: Dixon takes Indy 500 pole, Alonso to start in fifth. FOOTBALL | Page 3: La Liga win is bigger than World Cup for Zidane. AFC CHAMPIONS LEAGUE: Lekhwiya eye Persepolis scalp. These two sides have met previously in this competition, in 2015. During that time, both teams had won by a similar 3-0 margin respectively in their home matches. DEMISE: Former MotoGP champion Hayden dies after accident. This file photo taken on January 10, 2012 shows former Ducati rider Nicky Hayden during a press conference in Italy. Agencies, Rome. American former MotoGP champion Nicky Hayden, hit by a car in a bicycle accident in Italy last week, has died of severe brain damage, the Italian hospital where he was treated said yesterday. Hayden, 36, who won the 2006 MotoGP world title, suffered severe chest and head injuries in the accident, which happened while he was training on his bicycle in central Italy, south of the seaside resort of Rimini. [...] rider to achieve the feat – before a move to the MotoGP World Championship for 2003. He took two podiums that year, at Motegi and Phillip Island, as a rookie. More podiums followed in 2004 before Hayden took his first pole and Grand Prix victory at Laguna Seca in 2005. The following year, the “Kentucky Kid” became the MotoGP World Champion as he beat Valentino Rossi to the premier class crown, securing the title in the last round of the year in Valencia. He moved to Ducati in 2009 and, later, the Aspar team, before racing his last full season -

Balance Sheet Merge Satistics & Color Bitmap.Cdr

MADHYA PRADESH CRICKET ASSOCIATION Holkar Stadium, Khel Prashal, Race Course Road, INDORE-452 003 (M.P.) Phone : (0731) 2543602, 2431010, Fax : (0731) 2534653 e-mail : [email protected] Date : 17th Aug. 2011 MEETING NOTICE To, All Members, Madhya Pradesh Cricket Association. The Annual General Body Meeting of Madhya Pradesh Cricket Association will be held at Holkar Stadium, Khel Prashal, Race Course Road, Indore on 3rd September 2011 at 12 Noon to transact the following business. A G E N D A 1. Confirmation of the minutes of the previous Annual General Body Meeting held on 22.08.2010. 2. Adoption of Annual Report for 2010-2011. 3. Consideration and approval of Audited Statement of accounts and audit report for the year 2010-2011. 4. To consider and approve the Proposed Budget for the year 2011-2012. 5. Appointment of the Auditors for 2011-2012. 6. Any other matter with the permission of the Chair. (NARENDRA MENON) Hon. Secretary Note : 1. If you desire to seek any information, you are requested to write to Hon. Secretary latest by 28th Aug. 2011. 2. The Quorum for meeting is One Third of the total membership. If no quorum is formed, the Meeting will be adjourned for 15 minutes. No quorum will be necessary for adjourned meeting. The adjourned meeting will be held at the same place. THE MEETING WILL BE FOLLOWED BY LUNCH. 1 MADHYA PRADESH CRICKET ASSOCIATION, INDORE UNCONFIRMED MINUTES OF ANNUAL GENERAL BODY MEETING HELD ON 22nd AUGUST 2010 A meeting of the General Body of MPCA was held on Sunday 22nd Aug’ 2010 at Usha Raje Cricket Centre at 12.00 noon. -

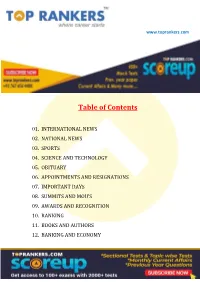

Table of Contents

www.toprankers.com Table of Contents 01. INTERNATIONAL NEWS 02. NATIONAL NEWS 03. SPORTS 04. SCIENCE AND TECHNOLOGY 05. OBITUARY 06. APPOINTMENTS AND RESIGNATIONS 07. IMPORTANT DAYS 08. SUMMITS AND MOUs 09. AWARDS AND RECOGNITION 10. RANKING 11. BOOKS AND AUTHORS 12. BANKING AND ECONOMY INTERNATIONAL NEWS India, Netherlands sign agreement to support decarbonisation: NITI Aayog of India and the Embassy of the Netherlands in New Delhi signed a Statement of Intent (SoI) on September 28, 2020 to support the decarbonisation and energy transition agenda in order to accommodate cleaner energy. The main objective of the partnership is to co-create innovative technological solutions. The SoI was signed by NITI Aayog CEO Amitabh Kant and Ambassador of the Netherlands to India Marten van den Berg. India extends $1 bn credit line to Central Asian countries: The second meeting of the India-Central Asia Dialogue was held virtually, under the chairmanship of the External Affairs Minister of India, Dr S Jaishankar. The foreign ministers of all five Central Asian countries (Kazakhstan, Tajikistan, Turkmenistan, Uzbekistan, and Kyrgyzstan) participated in the meeting. During the meeting, India announced a US$1 billion line of credit for “priority developmental projects” in Central Asian countries in the fields of connectivity, energy, IT, healthcare, education and agriculture, and offered to provide grant assistance for implementation of High Impact Community Development Projects (HICDP) for furthering socio-economic development in the countries of the region. Apart from this, the Acting Foreign Minister of the Islamic Republic of Afghanistan also attended the meeting as a special invitee. The first India-Central Asia Dialogue was held in Uzbekistan’s Samarkand in 2019. -

Quick Heal Technologies Limited Q4 & FY17 – Results Update May 2017

Strictly Private & Confidential. Quick Heal Technologies Limited, Q4 & FY17 – Results Update, May 2017. DISCLAIMER: This presentation and the following discussion may contain “forward looking statements” by Quick Heal Technologies Limited (“Quick Heal” or the “Company”) that are not historical in nature. These forward looking statements, which may include statements relating to future results of operations, financial condition, business prospects, plans and objectives, are based on the current beliefs, assumptions, expectations, estimates and projections of the management of Quick Heal about the business, industry and markets in which Quick Heal operates. These statements are not guarantees of future performance and are subject to known and unknown risks, uncertainties and other factors, some of which are beyond Quick Heal’s control and difficult to predict, that could cause actual results, performance or achievements to differ materially from those in the forward looking statements. Such statements are not and should not be construed as a representation of future performance or achievements of Quick Heal. In particular, such statements should not be regarded as a projection of future performance of Quick Heal. It should be noted that the actual performance or achievements of Quick Heal may vary significantly from such statements. DISCUSSION SUMMARY: • KEY HIGHLIGHTS • COMPANY OVERVIEW • STRATEGY & OUTLOOK • Q4 & FY17 RESULT ANALYSIS • Q4 & FY17 FINANCIALS • SHAREHOLDING STRUCTURE. KEY HIGHLIGHTS – MOVING IN RIGHT DIRECTION: STEADY GROWTH -

Reportable in the High Court of Judicature at Bombay

Board of Control for Cricket in India vs Deccan Chronicle Holding Ltd, CARBPL-4466-20-J.docx. REPORTABLE. IN THE HIGH COURT OF JUDICATURE AT BOMBAY, ORDINARY ORIGINAL CIVIL JURISDICTION, IN ITS COMMERCIAL DIVISION. COMM ARBITRATION PETITION (L) NO. 4466 OF 2020. Board of Control for Cricket in India, a society registered under the Tamil Nadu Societies Registration Act 1975 and having its head office at Cricket Centre, Wankhede Stadium, Mumbai 400 020 … Petitioner ~ versus ~ Deccan Chronicle Holdings Ltd, a company incorporated under the Companies Act 1956 and having its registered office at 36, Sarojini Devi Road, Secunderabad, Andhra Pradesh … Respondent. APPEARANCES. FOR THE PETITIONER “BCCI”: Mr Tushar Mehta, Solicitor General, with Samrat Sen, Kanu Agrawal, Indranil Deshmukh, Adarsh Saxena, Ms R Shah and Kartik Prasad, Advocates i/b Cyril Amarchand Mangaldas. FOR THE RESPONDENT “DCHL”: Mr Haresh Jagtiani, Senior Advocate, with Mr Navroz Seervai, Senior Advocate, Mr Sharan Jagtiani, Senior Advocate, Yashpal Jain, Suprabh Jain, Ankit Pandey, Ms Rishika Harish & Ms Bhumika Chulani, Advocates i/b Yashpal Jain. CORAM: GS Patel, J. JUDGMENT RESERVED ON: 12th January 2021. JUDGMENT PRONOUNCED ON: 16th June 2021. JUDGMENT: OUTLINE OF CONTENTS. This judgment is arranged in the following parts. A. INTRODUCTION. B. THE CHALLENGE IN BRIEF; SUMMARY OF CONCLUSIONS. C. THE AMBIT OF SECTION 34 -

Professional Sports Leagues Around the World and Sports Became a Business with Owners of Sports Teams on Lines Similar to Owners of Companies

EAMSA 2015 Competitive Paper 1. Introduction: Elite sports, as we know them, have had a large fan following for more than 100 years. The modern Olympics, starting in 1896, were a turning point in organized elite sports across different types of sporting events. As the Olympics have evolved, they have taken more sports under their umbrella. Individual sports have had their own specialized events at regular intervals. These include the annual Wimbledon tennis tournament, which started in 1877, and the Football World Cup, held every four years, which had its first edition in 1930. Initially, elite sportspeople were amateurs for whom sport was a pastime and not a profession. Over the years, sports became professionalized as sportspeople converted what used to be a pastime into a full-fledged profession. This led to the formation of a large number of professional sports leagues around the world, and sports became a business, with owners of sports teams acting much like owners of companies. Sports as a business opened up different ways to monetize the purchasing power of sports fans and led to the creation of a variety of revenue models. Professional sports leagues first emerged in Europe and North America. Europe developed professional football leagues that included the English Premier League (EPL), La Liga in Spain, the Bundesliga in Germany and Serie A in Italy. In North America, the professional sports leagues revolved around the sports popular in that part of the world. These included Major League Baseball (MLB), the National Basketball Association (NBA), the National Football League (NFL), and the National Hockey League (NHL). -

Reportable in the High Court of Judicature at Bombay

Board of Control for Cricket in India vs Deccan Chronicle Holding Ltd, CARBPL-4466-20-J.docx. REPORTABLE. IN THE HIGH COURT OF JUDICATURE AT BOMBAY, ORDINARY ORIGINAL CIVIL JURISDICTION, IN ITS COMMERCIAL DIVISION. COMM ARBITRATION PETITION (L) NO. 4466 OF 2020. Board of Control for Cricket in India, a society registered under the Tamil Nadu Societies Registration Act 1975 and having its head office at Cricket Centre, Wankhede Stadium, Mumbai 400 020 … Petitioner ~ versus ~ Deccan Chronicle Holdings Ltd, a company incorporated under the Companies Act 1956 and having its registered office at 36, Sarojini Devi Road, Secunderabad, Andhra Pradesh … Respondent. APPEARANCES. FOR THE PETITIONER “BCCI”: Mr Tushar Mehta, Solicitor General, with Samrat Sen, Kanu Agrawal, Indranil Deshmukh, Adarsh Saxena, Ms R Shah and Kartik Prasad, Advocates i/b Cyril Amarchand Mangaldas. FOR THE RESPONDENT “DCHL”: Mr Haresh Jagtiani, Senior Advocate, with Mr Navroz Seervai, Senior Advocate, Mr Sharan Jagtiani, Senior Advocate, Yashpal Jain, Suprabh Jain, Ankit Pandey, Ms Rishika Harish & Ms Bhumika Chulani, Advocates i/b Yashpal Jain. CORAM: GS Patel, J. JUDGMENT RESERVED ON: 12th January 2021. JUDGMENT PRONOUNCED ON: 16th June 2021. JUDGMENT: OUTLINE OF CONTENTS. This judgment is arranged in the following parts. A. INTRODUCTION. B. THE CHALLENGE IN BRIEF; SUMMARY OF CONCLUSIONS. C. THE AMBIT OF SECTION 34. D. THE FRANCHISE AGREEMENT OF 10TH APRIL 2008 -

P17 4 Layout 1

TUESDAY, MAY 16, 2017 SPORTS Double departure hands Pak a testing transition. ROSEAU, Dominica: As Younis Khan and Misbah-ul-Haq head off into the sunset after long and illustrious careers, Pakistan begins a tricky period of transition looking for a new wave of players ready to fill a huge void left by the retired batting greats. In a fitting finale, Younis, Pakistan’s most prolific test run-scorer, and Misbah, the country’s most successful captain, bowed out together in a blaze of glory on Sunday with the team celebrating a first-ever series triumph in the Caribbean. The thrilling 101-run victory in Dominica sealed a 2-1 win over West Indies and was Pakistan’s 26th under the 42-year-old Misbah, who also led the side to the top of the International Cricket Council (ICC) world test rankings last year. [...] talking and is currently the only Pakistani to have joined the coveted 10,000 test-run club. GREAT SERVANTS: The former captain, who led Pakistan to the World Twenty20 title in 2009, tallied 10,099 runs in 118 tests, embellishing his legacy with 34 hundreds at an average of more than 52. Together they were the pillars of Pakistan’s batting line-up for over a decade and it could take many years for the country to find anyone capable of matching their feats. Pakistan’s situation mirrors the dilemma South Asian rivals Sri Lanka faced when batting mainstays Kumar Sangakkara and Mahela Jayawardene ended their international careers two years ago. -

Organizing of Cricket Players Karan Rao, December 2016

Organizing of Cricket Players, Karan Rao, December 2016. Overview: The Indian Premier League (IPL) is a T20 cricket league held in India and is the most attended cricket league worldwide. The league ranks sixth among all sports leagues in the world and, according to global valuation and corporate finance advisor Duff & Phelps, the value of brand IPL stood at around $4.16 billion in 2016. The IPL currently comprises 8 franchise-owned teams that more or less represent the major cities in India, and has around 200 players from countries such as India, South Africa, Australia, West Indies, New Zealand, England, the Netherlands, Sri Lanka and Bangladesh. All the players are bought by the franchises via an auction. Through this case study we will explore the strategies and methods used by the franchises to organize their teams, the selection criteria and evaluation techniques used by teams to select their resources, and how the dynamic nature of the auction influences the organizing principles used by the franchises. We will also explore how the timing of a resource entering the organizing system affects its monetary value and changes its importance. What is being organized? In the organizing system, which is the IPL, the players are the resources (active/operant resources) that are being organized. As simple and straightforward as it may sound, teams spend millions of dollars on scouts, software and data analysts to perfect the art of organizing their resources. The challenge arises because the team owners, analysts and coaches are trying to extrinsically organize (define a price on) an intrinsically dynamic resource property (a player’s attributes). -

214908890.Pdf

The Indian Premier League (IPL) is an Indian professional league for men's Twenty20 cricket clubs, played as a double round-robin followed by playoffs. In the absence of a wider Twenty20 cricket league system, it is India's primary Twenty20 cricket club competition. Currently contested by eight clubs, it does not operate on a system of promotion and relegation. Only Indian clubs qualify to play in the Premier League. Seasons run in the Indian summer, between April and June, with most games played in the afternoons. The competition was formed by the Board of Control for Cricket in India (BCCI) in 2008 after an altercation between the BCCI and the now-defunct Indian Cricket League.[1] The Premier League is headquartered in Mumbai, Maharashtra,[2][3] and is currently supervised by BCCI Vice-President Ranjib Biswal, who serves as the League's Chairman and Commissioner.[4] The Premier League is the most-watched Twenty20 cricket league in the world. It is generally considered to be the highest-profile showcase in the world for Twenty20 club cricket, the shortest form of professional cricket, with just 20 overs per innings. The IPL is also well known for its commercial success and for the quality of Twenty20 cricket played.
During the sixth IPL season (2013) its brand value was estimated to be around US$3.03 billion.[5][6] Live rights to the event are syndicated around the globe, and in 2010 the IPL became the first sporting event to be broadcast live on YouTube.[7] It is currently sponsored by Pepsi and thus officially known as the Pepsi Indian Premier League.[8] Two eligible bids were received, with Pepsi winning over Airtel with a bid of 3968 million.[9] However, the League has been the subject of several controversies involving allegations of cricket betting, money laundering and spot fixing.[10][11] Of the 11 clubs to have competed since the inception of the Premier League in 2008, five have won the title: Chennai Super Kings (2), Rajasthan Royals (1), Deccan Chargers (1), Kolkata Knight Riders (1) and Mumbai Indians (1).