Simgate: Full-System, Cycle-Close Simulation of the Stargate Sensor Network Intermediate Node

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

Stargate Sg1 "Flesh and Blood" Episode #1001 Dialogue Continuity Script

STARGATE SG1 "FLESH AND BLOOD" EPISODE #1001 DIALOGUE CONTINUITY SCRIPT June 5, 2006 Prepared by: Line 21 Media Services Ltd. #122 - 1058 Mainland Street Vancouver, B.C. V6B 2T4 Phone: (604) 662-4600 [email protected] 1 STARGATE SG-1 - "Flesh and Blood" - Episode #1001 TIMECODE DIALOGUE START TIMECODE 01:00:00:00 AT FIRST FRAME OF PICTURE RECAP 01:00:00:05 TEAL'C: Previously on Stargate SG-1... 01:00:03:08 DOCI: In the name of the gods, ships shall be built to carry our warriors out amongst the stars. 01:00:08:22 ORLIN: Everything Origins followers devote themselves to is a lie. 01:00:12:16 MITCHELL: Whose baby is it? 01:00:13:21 VALA: I don't know. 01:00:14:19 PRIOR (V/O):The child is the will of the Ori. 01:00:18:10 VALA (V/O): The ships are planning to leave. 01:00:19:28 VALA (CONT'D): Somewhere out there, the Ori have a working supergate. 01:00:22:11 CARTER (V/O): We've managed to locate the dialing control crystals on one particular section of the gate. 2 STARGATE SG-1 - "Flesh and Blood" - Episode #1001 01:00:26:10 CARTER (CONT'D): We dial out before they can dial in. 01:00:28:20 CARTER (CONT'D INTO RADIO): Something's happening. 01:00:31:09 (EXPLOSION) 01:00:35:19 CARTER (CONT'D): My god... 01:00:36:27 NETAN: I didn't think you were that stupid. 01:00:38:14 TEAL'C: I have come to seek the assistance of the Lucian Alliance. -

Interfacing STARGATE-IP to UPB Networks

Interfacing STARGATE-IP to UPB Networks INTRODUCTION This Application Note describes Stargate-IP and Stargate-Lite support for controlling UPB Powerline devices. These control- lers, when connected to the powerline by a UPB Powerline Interface Module, can control a network of up to 250 UPB lighting dimmers and other UPB devices. This application note assumes a working knowledge of UPB and that the UPB devices have been installed and properly configured using the UPStart program (available from PCS or Simply Automated websites). Support for UPB requires a UPB Powerline Interface Module (PIM), also called a Computer Interface Module (CIM) and a UPB serial adapter and cable. The PIM connects to COM2 or COM3 of the Stargate via the UPB serial adapter cable. Once configured, the UPB devices are fully integrated into Stargate and can be controlled in the same manor as X10 or ALC lighting devices. Stargate Controllers NEW Controllers Stargate-IP and Stargate-Lite controllers shipped with firmware 4.0.0 or later are modified and ready for UPB support. These units are shipped with a UPB serial adapter and cable for connection to a UPB Powerline Interface Module. The UPB Powerline Interface Module is not supplied. The UPB Powerline Interface Module (PCS PIM) or Computer Interface Module (Simply Automated CIM) are available from various distributors. NOTE: Your PIM or CIM must be in MESSAGE mode, not PULSE mode, in order for this module to behave correctly. If you are experiencing problems when the PIM or CIM is connected to Stargate, your interface is likely in PULSE mode and needs to be changed to MESSAGE mode. -

Mayevent 2006 Horizon Volume 13 Issue 7

Hamilton Amateur Astronomers MayEvent 2006 Horizon Volume 13 Issue 7 Chair’s Report by Glenn Muller 34”, or about a degree East of M104. And, the fun doesn’t end there. In that immedi- Regular EH readers will know of my affinity for aster- ate vicinity is yet another asterism with the ominous isms; those unique formations of stars that prompt the moniker of “Jaws”. Situated just off the imaginary line imagination with their chance alignments. connecting Stargate to M104 are four bright stars in a Well, at a recent Binbrook session I was pushing the row. They form the head and mouth of a celestial shark, 6” reflector around Corvus, looking for the Sombrero the body of which consists of a string of fainter trailing Galaxy, when I chanced across six stars in a cluster stars. Both the Stargate and Jaws are mentioned in Phil no wider than 10’ forming two nested triangles. They Harrington’s book The Deep Sky: An Introduction. In instantly piqued my interest so, using the common sci- fact, it was Harrington who gave Jaws its label. Another entific jargon, I alerted the rest of the group. “Hey,” I good source of information for this interesting group is said. “Come take a look at these neat stars!”. John and the internet link Dianna, a nice couple from Winona who were observing www.backyard-astro.com/deepsky/ This fine site has a nice map of the with us for the first time, politely pried themselves from top100/15.html region and a couple of excellent sketches – well worth the wonderful views through their 11” Celestron SCT checking out. -

October 1984

EDITOR: Judy Butcher Send all articles to- (3i3) 254-1786 45200 Keding; Apt. 102 Utica, MI 48087 The W.A.S.P. is the official publication of the Warren Astronomical Society and is available free to all club members. Requests by other clubs to receive the W.A.S.P. and all other correspondence should be addressed to the editor. Articles should be submitted at least one week prior to the general meeting. Warren Astronomical Society President: Frank McCullough 254-1786 P.O. Box 474 1st V.P.: Joe Gulino 979-4041 East Detroit, MI 48021 2nd V.P.: Ken Strom 977-9489 Secretary: Ken Kelly 839-7250 Treasurer: Bob Lennox 689-6139 Librarian: John Wetzel 882-6816 'The Warren Astronomical Society is a local, non-profit organization of amateur astronomers. The Society holds meetings on the first and third Thursdays of each month. The meeting locations are as follows: 1st Thursday – Cranbrook Institute of Science 3rd Thursday – Macomb County Community 500 Lone Pine Road College – South Campus Bloomfield Hills, MI B Building, room 209 14500 Twelve Mile Rd. Warren, MI Membership is open to those interested in astronomy and its related fields. Dues are as follows and include a year’s subscription to Sky and Telescope. Student ................... $21.00 College ........................ $25.00 Senior Citizen ................... $25.00 Individual ............... $30.00 Family......................... $35.00 Observatory Chairman: Ken Strom 977-9489 Stargate Observatory is owned and operated by the Warren Astronomical Society in conjunction with Rotary International. Located on the grounds of Camp Rotary, Stargate features a 12½” club-built Cassegrainian telescope under an aluminum dome. -

Plural Subjectivity in Stargate SG-1

Language, Literature, and Interdisciplinary Studies (LLIDS) ISSN: 2547-0044 ellids.com/archives/2020/07/3.4-Ferebee.pdf CC Attribution-No Derivatives 4.0 International License www.ellids.com “Pain in Someone Else’s Body”: Plural Subjectivity in Stargate SG-1 K.M. Ferebee Abstract Lennard Davis, in his work on visualizing the disabled body, argues that at root the body is inherently and always already fragmented. The unified “whole body” is, therefore, hallucinatory in nature—an imaginary figure through which the body’s multiplicity is repressed. There is much in this view that is consonant with posthumanism, which so often seeks to destabilize the “whole” and singular one in favor of the multiple, the fragmentary, and the hybrid. Yet despite these considerations of the body as fragmentary, little attention has been paid to the value of considering the body not only as fragmentary, but also as potential fragment. What might we learn by rejecting anthropocentric assumptions about the body-mind’s inherent completeness, and exploring the radically plural ontologies offered by visions of shared, joint, or group body-minds? This paper turns to science fiction as a source of such visions, considering depictions of symbiotic and hive minds through the non-traditional models of ontology and agency. While science fiction has traditionally represented plural being as a troubling and fearful injury to wholeness, this paper aims to highlight the symbiotic Tok’ra1 of television series Stargate SG-1 as a model of excess being that not only challenges the naturalization of the “complete” body, but also asks us to interrogate presumed boundaries between self and other. -

STARGATE by Dean Devlin & Roland Emmerich Devlin/Emmerich Draft 7

STARGATE by Dean Devlin & Roland Emmerich Devlin/Emmerich Draft 7/6/93 FADE IN: PRIMITIVE SKETCHES E.C.U. Etched on stone, a JACKAL. Another of a GAZELLE, a spear piercing its skin. Primitive, yet dramatic tribal etchings. The SOUND of ancient CHANTING is HEARD. Widen to REVEAL... 1 EXT. DESERT LANDSCAPE, NORTH AFRICA - SUNSET 1 A young BOY chisels his artwork into the stone ROCKFACE at the edge of this valley. An old MEDICINE MAN, his face painted with bizarre white stripes, CHANTS nearby. The boy abruptly stops his work at the SOUND of distant CRIES. Quickly he climbs the stone. Standing at the top he SEES... HUNTERS 2 RETURNING FROM A KILL. THEY MARCH TOWARDS A SMALL CAMPSITE. 2 The tribes people rushing to greet them. Super up: North Africa 8000 B.C. 3 OMITTED 3 4 A BLAZING FIRE - LATER THAT NIGHT 4 Silhouetted tribesmen dancing in bizarre animal MASKS. Feet STOMPING. The young Boy stares at the fire, SPARKS rising into the air. We PAN UP following the sparks into the sky. A full moon. A SHADOW is suddenly cast across the moon, blotting it out. 5 INT. TENT - LATER THAT NIGHT 5 The young Boy sleeps. Above him hangs an odd carving that slowly begins to RATTLE. The tent's fabric begins to FLAP. The Boy's eyes pop open. He HEARS the sounds of a quickly brewing storm. Footsteps. People hurrying, calling out to each other. Suddenly the tent's entrance flap SAILS OPEN. BRIGHT LIGHT pours in through the entrance. 6 EXT. CAMPSITE - NIGHT 6 The Boy exits his tent, staring at the light, intrigued. -

Stargate Atlantis Allegiance (Legacy Book 3) Pdf, Epub, Ebook

STARGATE ATLANTIS ALLEGIANCE (LEGACY BOOK 3) PDF, EPUB, EBOOK Melissa Scott | 296 pages | 17 Jun 2020 | FANDEMONIUM BOOKS | 9781905586561 | English | none STARGATE ATLANTIS Allegiance (Legacy book 3) PDF Book Skip to main content. It has brought us together. Among these thieves and rogues is Vala Mal Doran, on the trail of the fabulous treasure left behind by the System Lord Kali. Artok : I am not. But life never stays simple for long…. John Sheppard submits his resignation following a mission in which two of his team members were lost while Elizabeth Weir negotiates with two warring tribes who have traces of the Ancient ATA gene. The expedition reestablishes contact with their Pegasus allies and learns that the Wraith are being united under a single Wraith Queen called Death. Namespaces Article Talk. Inheritors Book 6 in the Legacy Series. Please contact us if any details are missing and where possible we will add the information to our listing. And I don't mean the end of the Goa'uld. Chief Ladon Radim turns to Atlantis for help when the Genii's first starship disappears -- before time runs out for her crew. Payment details. Season 5. Missing Information? Artok : I asked a question of this Tok'ra. He reveals that he was left for dead in the forest but his symbiote managed to sustain him. The Third Path. Book search. Refer to eBay Return policy for more details. Julie Fortune. Email to friends Share on Facebook - opens in a new window or tab Share on Twitter - opens in a new window or tab Share on Pinterest - opens in a new window or tab. -

Stargate SG-1 FAQ – Player’S Guide

Stargate SG-1 FAQ – Player’s Guide The following is a list of character options and what book and page they can be found on. (Special thanks to Morgenstern, who some of the basics used here were lifted, and to Wolverine, EddieT, and XaleD for filling in some of these blanks. Thanks guys!!) Current updated to include Stargate SG-1 (main rule book), Season One, System Lords, and Season Two. If you find an error or have a question or comment, please let me know! :+) Specialties: Characters originating from Earth, or having a sufficiently advanced culture to have access to modern training may select a Specialty. • Air Force (SG1, 140) o Air Force Officer o Air Force Technician o Enlisted Air Force Recruit o Pararescue • Army (SG1, 141) o Army Medical Corps (S1, 122) o Army Officer o Army Ranger o Army Technician o Enlisted Army Recruit • Astronaut/Cosmonaut (S2, 128) • Civilian Specialist (SG1, 145) • Diplomatic Corps (SG1, 145) • Engineering Corps (SG1, 146) • The Fourth Estate (S2, 129) o Photojournalist o Print Reporter o Radio/Television Reporter • Marines (SG1, 142) o Enlisted Marine Recruit o Force Reconnaissance o Marine Officer o Marine Technician • Navy (SG1, 143) o Enlisted Navy Recruit o Naval Officer o Naval Technician o SEAL • National Intelligence Department (NID) (SG1, 144) o Area 51 Infiltrator o NID Officer o NID Interrogator (S1, 122) o Rogue Stargate Team Member • Russian Unit (SG1, 146) Species: Characters that are not human, or those that are human and not from Earth are represented with Species. These replace a character's choice of Specialty. -

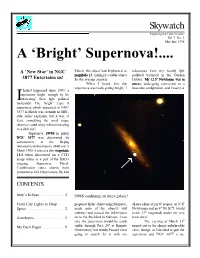

May-June 1998 a ‘Bright’ Supernova!

Skywatch ‘Exploring the Final Frontier’ Vol. 7 No. 3 May-June 1998 A ‘Bright’ Supernova!.... A ‘New Star’ in NGC March, this object had brightened to telescopes from my heavily light magnitude 13, making it a viable object polluted backyard in the Garden 3877 Entertains us! for the average amateur. District. My 12.5" Newtonian was in When I heard that this pieces, undergoing conversion to a supernova was really getting ‘bright,’ I truss tube configuration, and I wasn’t at t hadn’t happened since 1993: a supernova bright enough to be I‘interesting’ from light polluted backyards! The ‘bright’ Type II supernova which appeared in NGC 3877 in March was certainly no M81- style stellar explosion, but it was, at least, something the small scope observer could enjoy without traveling to a dark site! Supernova 1998S in galaxy NGC 3877 was discovered by astronomers at the Beijing Astronomical observatory (BAO) on 3 March 1998. It was at a dim magnitude 15.2 when discovered on a CCD image taken as a part of the BAO’s ongoing Supernova Patrol. Confirmation came shortly from personnel at Lick Observatory. By Mid CONTENTS Judy’s Eclipse ........... 2 1998S outshining an entire galaxy! From City Lights to Deep prepared finder charts using Megastar, all sure either of my 8" scopes, an 8" f7 Space ................. 3 made note of the object’s vital Newtonian and an 8" f10 SCT, would statistics--and passed the information reach 13th magnitude under my very Astrobytes .............. 5 on to Pat Rochford in Fairhope. I was poor skies! sure the supernova would be easily The evening of March 21st My Back Pages ......... -

Level-3-Stargate-Penguin-Readers.Pdf

The mysterious StarGate is 10,000 years old. When a group of soldiers go through it they travel millions of miles to a world where they have to fight to stay alive. Will they live? Will they find a way to get back to Earth? Or will they die? Penguin Readers are simplified texts designed in association with Longman, the wo rld famous educational publisher. to prov ide a step-by-step approach to the joys of reading for pleasure. Each book has an introductio n and extensive act ivity material. They are published at seve n levels from Easystarts (200 words) to Advanced (3000 wo rds). Ser ies Edito rs: Andy Hopkins and Jocelyn Potter NEW EDITON 6 Advanced (3000 words) Contemporary 5 Upper Intermediate (2300 words) C lassics 4 Intermediate (1700 wo rds) • O riginals 3 Pre-lnrermediate (1200 words) tJ 2 Elementar y (600 words) IBeginner (300 words) Br itish English Easysta rts (200 words) American English www.penguinreaders.com s TA R G AT E " jva~us F1L\!"l \ <ToJ<N ~ n> I~ ROI."'~ D ~loo) K~,j,\R .."",,,,If ffiDIO (JL\')J ' I~ CAR0I.CO Plffi'RE.S lmllll (11.11£001 JAYEDAVlDSO, ~ :HI.E I S Ell -~ IJ.QIJ.; CO;.< \TRl'CTl o sm mTRL~Sfl . NIE.\IPAD ER ·ST.\RGAn' 'r1I1C\ In.1Jf{)R5 -':lH\1DAR."lJ1lJ ...... ,ltREKB :\EOl J~ '"l'.:~arT'rnT ~'!#rn~TRI n nTO f'Oll OS ,c::)OSEf'H PORRO '"l ~ l: CH. illJ_ DL11lJh.. -=lUGERGRIm ~ --J:': I:.w. \nLrn. L':'; Il£.'i [),tJ a ~\ 'l\ = ~I!tR.l O K.>"\ m . -

Traversable Wormholes, Stargates, and Negative Energy

UNCLASSIFIED//FOR OFFICIAL USE ONLY Defense Intelligence Reference Document Acquisition Threat Support 6 April 2010 reOD : 1 December 2009 DIA-08-1004-004 Traversable Wormholes, Stargates, and Negative Energy UNCLASSIFIED//FOR OFFICIAL USE ONLY UNCLASSIFIED//FOR OFFICIAL USE ONLY Traversable Wormholes, Stargates, and Negative Energy Prepared by: Acquisition Support Division (DWO-3) Defense Warning Office Directorate for Analysis Defense Intelligence Agency Author: Eric W. Davis, Ph.D. Earthtech International, Inc. 11855 Research Blvd. Austin, TX 78759 Administrative Note COPYRIGHT WARNING : Further dissemination of the photographs in this publication is not authorized. This product is one in a series of advanced technology reports produced in FY 2009 under the Defense Intelligence Agency, Defense Warning Office's Advanced Aerospace Weapon System Applications (AAWSA) Program. Comments or questions pertaining to this document should be addressed to James T. Lacatski, D.Eng., AAWSA Program Manager, Defense Intelligence Agency, ATTN: CLAR/DWO-3, Bldg 6000, Washington, DC 20340-5100. ii UNCLASSIFIED//FOR OFFICIAL USE ONLY UNCLASSIFIED/ /FOR OFFICIAL USE ONLY Contents I. Summary .............................................................................................................v \ II. A Brief Review of Transversable Wormholes and the Stargate Solution ............ 1 A. Traversable Wormholes ................................................................................. 1 B. The "Stargate" Solution ................................................................................ -

June 2021 the Warren Astronomical Society Publication

Celebrating Sixty Years of the Warren Astronomical Society The W.A.S.P. Vol. 53, no. 6 June 2021 The Warren Astronomical Society Publication Robert Burnham, Jr. June 16, 1931 – March 20, 1993 The WASP Snack Volunteer Schedule Published by Warren Astronomical Society, Inc. The Snack Volunteer program is suspend- P.O. Box 1505 ed for the duration. When it resumes, vol- Warren, Michigan 48090-1505 unteers already on the list will be notified Dale Thieme, Editor by email. 2021 Officers President Diane Hall [email protected] 1st VP Dale Partin [email protected] 2ndVP Riyad Matti [email protected] Secretary Mark Kedzior [email protected] Treasurer Adrian Bradley [email protected] Outreach Bob Trembley [email protected] Publications Dale Thieme [email protected] Entire Board [email protected] The Warren Astronomical Society, Inc., is a local, non-profit organization of Discussion Group Meeting amateur astronomers. The Society holds meetings on the first Monday and third Thursday of each month, starting at 7:30 p.m. Come on over, and talk astronomy, space news, and whatnot! First Monday meeting: Third Thursday meeting: Cranbrook: Institute of Science Macomb Community College 1221 North Woodward Ave South campus, Bldg. J, Room J221 Bloomfield Hills, Michigan 14600 Twelve Mile Rd. Warren, Michigan Membership and Annual Dues Student Individual Senior Citizen for families $17.00 $30.00 $22.00 add $7.00 In This Issue: Astronomical League (optional) $7.50 Send membership applications and dues to the treasurer: President’s Field of View ............................... 3 c/o Warren Astronomical Society, Inc. P.O. Box 1505 Letters .........................................................