Optimization Problems and Their Applications

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

The Economic Role of the Region's Geobrand in Strengthening the Educational Trend of Foreign Youth Migration Olga Popova1, Julia Myslyakova2, and Nafisa Gagarina2

XXIII International Conference Culture, Personality, Society in the Conditions of Digitalization: Methodology and Experience of Empirical Research. Conference Volume 2020. Conference Paper. The Economic Role of the Region's Geobrand in Strengthening the Educational Trend of Foreign Youth Migration. Olga Popova (1), Julia Myslyakova (2), and Nafisa Gagarina (2). (1) Ural State University of Economics, Department of Marketing and International Management, Yekaterinburg, Russian Federation. (2) Ural State University of Economics, Department of Business Foreign Language, Yekaterinburg, Russian Federation. Corresponding Author: Olga Popova [email protected]. Published: 21 January 2021. Publishing services provided by Knowledge E. Olga Popova et al. Abstract: The article considers the influence of the geobrand of Yekaterinburg and the Sverdlovsk oblast with regards to attracting foreign students to the universities in the region. It provides the statistics on the positive economic impact of the geobrand on the educational trend of foreign youth migration. What attracts foreign students is not only the university, but also the region itself. In case of Yekaterinburg, it is a well-known geobrand created historically purposefully or spontaneously. Large-scale international events held in the region contribute a lot to the worldwide recognition of the geobrand. It attracts additional resources and determines the competitive position of the area both locally and globally. With the development of digital culture, information society and the media space, it is possible to increase the region’s competitiveness and investment attractiveness. It is essential for improving its image and for its positive perception internally and externally. Since 2017 Yekaterinburg has been positioned mainly as a cultural and sports capital. Its image is transforming from that of an industrial territory -

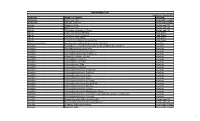

Unai Members List August 2021

UNAI MEMBER LIST Updated 27 August 2021 COUNTRY NAME OF SCHOOL REGION Afghanistan Kateb University Asia and the Pacific Afghanistan Spinghar University Asia and the Pacific Albania Academy of Arts Europe and CIS Albania Epoka University Europe and CIS Albania Polytechnic University of Tirana Europe and CIS Algeria Centre Universitaire d'El Tarf Arab States Algeria Université 8 Mai 1945 Guelma Arab States Algeria Université Ferhat Abbas Arab States Algeria University of Mohamed Boudiaf M’Sila Arab States Antigua and Barbuda American University of Antigua College of Medicine Americas Argentina Facultad de Ciencias Económicas de la Universidad de Buenos Aires Americas Argentina Facultad Regional Buenos Aires Americas Argentina Universidad Abierta Interamericana Americas Argentina Universidad Argentina de la Empresa Americas Argentina Universidad Católica de Salta Americas Argentina Universidad de Congreso Americas Argentina Universidad de La Punta Americas Argentina Universidad del CEMA Americas Argentina Universidad del Salvador Americas Argentina Universidad Nacional de Avellaneda Americas Argentina Universidad Nacional de Cordoba Americas Argentina Universidad Nacional de Cuyo Americas Argentina Universidad Nacional de Jujuy Americas Argentina Universidad Nacional de la Pampa Americas Argentina Universidad Nacional de Mar del Plata Americas Argentina Universidad Nacional de Quilmes Americas Argentina Universidad Nacional de Rosario Americas Argentina Universidad Nacional de Santiago del Estero Americas Argentina Universidad Nacional de -

August 29 - September 03, 2021

August 29 - September 03, 2021 www.irmmw-thz2021.org PROGRAM. PROGRAM MENU: FUTURE AND PAST CONFERENCES; ORGANIZERS; COMMITTEES; PLENARY SESSION LIST; PRIZES & AWARDS; SCIENTIFIC PROGRAM (MONDAY, TUESDAY, WEDNESDAY, THURSDAY, FRIDAY). INFORMATION FOR PRESENTERS. ORAL PRESENTERS: PLENARY TALK 45 min. (40 min. presentation + 5 min. discussion); KEYNOTES COMMUNICATION 30 min. (25 min. presentation + 5 min. discussion); ORAL COMMUNICATION 15 min. (12 min. presentation + 3 min. discussion). Presenters should be present at ZOOM Meeting room 10 minutes before the start of the session and inform the Session Chair of their arrival through the chat window. Presenters test the internet, voice and video in advance. We strongly recommend the External Microphone for a better experience. Presenters will be presenting their work through “Screen share” of their slides. POSTER PRESENTERS: Presenters MUST improve the poster display content through exclusive editing links (Including the Cover, PDF file, introduction.) Please do respond in prompt when questions -

Contact Details 3/3 -31 Vstreshny Pereylok 620102 Yekaterinburg

ELENA KALABINA. Contact Details: 3/3 -31 Vstreshny Pereylok, 620102 Yekaterinburg, Russia. Email: [email protected]. Phone: + 7 912 248 5261. Date of birth (Month Date, Year): 11/06/1961. Nationality: Russian. Current Academic Affiliation: Teaching and research, Ural Federal University. Research interest: Behavioral economics, Health Economics, Personnel policy of industrial companies, The transformation of the system of relations «employee – employer», Evolution of labor markets, The remuneration policy of enterprises, The effectiveness of enterprise additional training of employees of industrial companies. Education: Dr. in Economics, Omsk State University FM Dostoevsky, Russia, 2013; Doctoral candidacy, Saint Petersburg State University, Russia, 2003-2006; Ph.D. in Economics, Ural State Technical University (UGTU - UPE), Russia, 1995; Ph.D. student, Sverdlovsk Institute of National Economy, Russia, 1982-1987; Expert, Sverdlovsk Institute of National Economy, Russia, 1978-1982. Dissertation: «Socio-economic efficiency of enterprises in the state sector of the economy», Ural State Economic University, July, 2007, Supervisors: Svetlana Smirnyh. «The development of the domestic labor market in the local economic mainstay», Ural State Economic University, July, 2009, Supervisors: Svetlana Orehova. «The effectiveness of additional vocational training system of the industrial enterprise workers», Omsk State University FM Dostoevsky, September 2014, Supervisors: Ekaterina Aleksandrova. Teaching experience: Industrial organization (undergraduate), Business economy (postgraduate), -

ACLS Annual Report 2005-2007 Excerpt

Excerpted from American Council of Learned Societies Annual Report for the years 2006-2007 and 2006-2005. 2007 FELLOWS AND GRANTEES OF THE AMERICAN COUNCIL OF LEARNED SOCIETIES. ACLS FELLOWSHIP PROGRAM, Funded by the ACLS Fellowship Endowment. LILA ABU-LUGHOD, Professor, Anthropology and Women’s Studies, Columbia University: Do Muslim Women Have Rights? An Anthropologist’s View of the Debates about Muslim Women’s Human Rights in the Context of the “Clash of Civilizations”. ENRIQUE DESMOND ARIAS, Assistant Professor, Political Science, City University of New York, John Jay College: Democracy and the Privatization of Violence in Rio de Janeiro: An Ethnographic Study of Politics and Conflict in the Three Neighborhoods. JANINE G. BARCHAS, Associate Professor, English Literature, University of Texas, Austin: Heroes and Villains of Grubstreet: Edmund Curll, Samuel Richardson, and the Eighteenth-Century Book Trade. GIOVANNA BENADUSI, Associate Professor, European History, University of South Florida: Visions of the Social Order: Women’s Last Wills, Notaries, and the State in Baroque Tuscany. AVIVA BEN-UR, Associate Professor, Jewish History, University of Massachusetts, Amherst (Professor Ben-Ur has been designated an ACLS/SSRC/NEH International and Area Studies Fellow.*): Jewish Identity in a Slave Society: Suriname, 1660–1863. RENATE BLUMENFELD-KOSINSKI, Professor, French Literature, University of Pittsburgh: The Dream World of Philippe de Mézières (1327–1405): Politics and Spirituality in the Late Middle Ages. CLIFFORD BOB, Associate Professor, Political Science, Duquesne University: Globalizing the Right Wing: Conservative Activism and World Politics. SUSAN LESLIE BOYNTON, Associate Professor, Historical Musicology, Columbia University: Silent Music: Medieval Ritual and the Construction of History in Eighteenth-Century Spain. WILLIAM C. -

Auc Geographica 55 1/2020

ACTA UNIVERSITATIS CAROLINAE AUC GEOGRAPHICA 55 1/2020 CHARLES UNIVERSITY • KAROLINUM PRESS AUC Geographica is licensed under a Creative Commons Attribution License (http://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited. © Charles University, 2020 ISSN 0300-5402 (Print) ISSN 2336-1980 (Online) Original Article 3 The impact of external institutional shocks on Russian regions Irina V. Danilova1, Olga A. Bogdanova1, Angelika V. Karpushkina2, Tatiana M. Karetnikova3,* 1 State and Municipal Administration South Ural State University (National Research University), Department of Economic Theory, Regional Economy, Russia 2 South Ural State University (National Research University), Department of Economic Security, Russia 3 State and Municipal Administration South Ural State University (National Research University), Department of Economic Theory, Regional Economy, Russia * Corresponding author: [email protected] ABSTRACT The aim is to assess the susceptibility of the regional economy to shocks associated with unexpected changes in institutional rules, trading instruments, as well as accession to international organizations. The impulse response approach to the study of shocks served as a methodological basis. The authors propose and test a new methodological approach that consists in identifying regions characterized by persistent development or a potential for changing the gross regional product as a response to an external shock impulse. It also allows to determine resonant factors that affect the vulnerability, depth and scale of economic consequences. The study reveals that an external institutional shock influences the economic development of regions in various ways, which is due to a number of vulnerability factors. -

On the Problem of the Studentship's Cultural Identity Development

Journal of Siberian Federal University. Humanities & Social Sciences 7 (2017 10) 1450-1457 UDC 316.723 On the Problem of the Studentship’s Cultural Identity Development Semyon D. Voroshin* South Ural State University National Research University 76 Lenin, Chelyabinsk, 454080, Russia Received 23.06.2017, received in revised form 17.08.2017, accepted 25.08.2017 This article deals with the study of the problem of the university museum’s participation in the studentship’s cultural identity development. The author studies the activity of the Hall of Arts of the Art Museum of the South Ural State University (SUSU). The influence of the art exhibition process on the development of different levels of the students’ identity has been demonstrated. Academic exhibitions of the Hall of Arts of different content have been analyzed. The influence of exhibitions by artists of the South Ural on the development of different levels of studentship’s identity has been studied. The role of global, national and regional exhibitions in the university’s cultural life has been demonstrated. Keywords: university museums, museum activities of the university, studentship’s cultural identity, Hall of Arts of the South Ural State University. DOI: 10.17516/1997-1370-0147. Research area: culturology. With today’s increasing globalization trends, the issue of self-determination of a person in society is one of the up-to-date questions. Recent researches tend to study the process of self-determination in the context of the “cultural identity” concept. [...] of all social bonds available to an individual. The 20th century’s US scientist Eric Ericsson, the founder of the identity doctrine, believed that the roots of this process go down into the earlier age, when a person has to perform various social roles -

Vladimir PUTIN

7/11/2011 SHANGHAI COOPERATION ORGANIZATION: NEW WORD IN GLOBAL POLITICS | page. 7 IS THE “GREAT AND POWERFUL UNION” BACK? | page. 21 MIKHAIL THE GREAT | page. 28 GRIEVES AND JOYS OF RUSSIAN-CHINESE PARTNERSHIP | page. 32 CASPIAN APPLE OF DISCORD | page. 42 SKOLKOVO: THE NEW CITY OF THE SUN | page. 52 Vladimir PUTIN SCO has become a real, recognized factor of economic cooperation, and we need to fully utilize all the benefits and opportunities of cooperation in post-crisis period CONTENT Project manager KIRILL BARSKY SHANGHAI COOPERATION ORGANIZATION: Denis Tyurin 7 NEW WORD IN GLOBAL POLITICS Editor in chief State Corporation “Bank for Development and Foreign Economic Affairs (Vnesheconombank)” Tatiana SINITSYNA 16 Deputy Editor in Chief TATIANA SINITSYNA Maxim CRANS 18 THE SUMMIT IS OVER. LONG LIVE THE SUMMIT! Chairman of the Editorial Board ALEXANDER VOLKOV 21 IS THE “GREAT AND POWERFUL UNION” BACK? Kirill BARSKIY ALEXEY MASLOV The Editorial Board 23 SCO UNIVERSITY PROJECT: DEFINITELY SUCCESSFUL Alexei VLASOV Sergei LUZYANIN ANATOLY KOROLYOV Alexander LUKIN 28 MIKHAIL THE GREAT Editor of English version DMITRY KOSYREV Natalia LATYSHEVA 32 GRIEVES AND JOYS OF RUSSIAN-CHINESE PARTNERSHIP Chinese version of the editor FARIBORZ SAREMI LTD «International Cultural 35 SCO BECOMES ALTERNATIVE TO WEST IN ASIA transmission ALEXANDER KNYAZEV Design, layout 36 MANAGEABLE CHAOS: US GOAL IN CENTRAL ASIA Michael ROGACHEV ANNA ALEKSEYEVA Technical support - 38 GREENWOOD: RUSSIAN CHINESE MEGA PROJECT Michael KOBZARYOV Andrei KOZLOV MARINA CHERNOVA THE CITY THEY COULD NEVER HAVE CAPTURED... 40 Project Assistant VALERY TUMANOV Anastasia KIRILLOVA 42 CASPIAN APPLE OF DISCORD Yelena GAGARINA Olga KOZLOVA 44 SECOND “GOLDEN” DECADE OF RUSSIAN-CHINESE FRIENDSHIP ANDREI VASILYEV USING MATERIALS REFERENCE TO THE NUMBER INFOSCO REQUIRED. -

The Faculty of Philosophy of Ural Federal University: History, Events, People

Journal of Siberian Federal University. Humanities & Social Sciences 1 (2012 5) 3-12 UDC 1(091) + 378.4(470.5):1 The Faculty of Philosophy of Ural Federal University: History, Events, People Boris V. Emel’yanov and Alexander V. Pertsev* Ural Federal University 51 Lenina str., Ekaterinburg, 620083 Russia Received 15.07.2011, received in revised form 16.10.2011, accepted 27.11.2011 The article presents a historical review of creation and life of the faculty of philosophy of Ural State University (Ural Federal University at present). Special attention is paid to the current state of innovative, educational and scientific activities of its chairs. Keywords: faculty of philosophy, dialectical and historical materialism, history of philosophy, sociolinguistics, religious studies, legal philosophy, philosophical anthropology. The faculty of philosophy of Ural University is the third faculty of philosophy in Russia after St. Petersburg and Moscow; it has its own history, features of institutionalization and contribution to the history of Russian philosophy. Although the actual date of its foundation is 1965, its story began much earlier. Sverdlovsk was recognized as a cultural and scientific center of the Urals after the Great Patriotic war. Many famous scientists, including [...] worked in the department of propaganda and agitation as a secretary of the Communist party of Belarus. His transfer to Sverdlovsk and the appointment as the head of the department of philosophy of Ural University was a kind of exile, the reason for which was his signature under the obituary of Mikhoels, a famous director deceased in Minsk. His contribution to the development of Philosophy in Ural University is doubtless. -

Communications in Computer and Information Science 965

Communications in Computer and Information Science 965 Commenced Publication in 2007 Founding and Former Series Editors: Phoebe Chen, Alfredo Cuzzocrea, Xiaoyong Du, Orhun Kara, Ting Liu, Dominik Ślęzak, and Xiaokang Yang Editorial Board Simone Diniz Junqueira Barbosa Pontifical Catholic University of Rio de Janeiro (PUC-Rio), Rio de Janeiro, Brazil Joaquim Filipe Polytechnic Institute of Setúbal, Setúbal, Portugal Ashish Ghosh Indian Statistical Institute, Kolkata, India Igor Kotenko St. Petersburg Institute for Informatics and Automation of the Russian Academy of Sciences, St. Petersburg, Russia Krishna M. Sivalingam Indian Institute of Technology Madras, Chennai, India Takashi Washio Osaka University, Osaka, Japan Junsong Yuan University at Buffalo, The State University of New York, Buffalo, USA Lizhu Zhou Tsinghua University, Beijing, China More information about this series at http://www.springer.com/series/7899 Vladimir Voevodin • Sergey Sobolev (Eds.) Supercomputing 4th Russian Supercomputing Days, RuSCDays 2018 Moscow, Russia, September 24–25, 2018 Revised Selected Papers 123 Editors Vladimir Voevodin Sergey Sobolev Research Computing Center (RCC) RCC Moscow State University Moscow State University Moscow, Russia Moscow, Russia ISSN 1865-0929 ISSN 1865-0937 (electronic) Communications in Computer and Information Science ISBN 978-3-030-05806-7 ISBN 978-3-030-05807-4 (eBook) https://doi.org/10.1007/978-3-030-05807-4 Library of Congress Control Number: 2018963970 © Springer Nature Switzerland AG 2019 This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. -

How to Survive in Russian Opposition Politics

How To Survive In Russian Opposition Politics: A Conversation with Leonid Volkov, Chief of Staff for Alexei Navalny. FLETCHER FORUM: As a leading figure in the Russian opposition, you have unique experience in opposition politics. Yet, you began your career in software and information technology. How did you gravitate toward politics, and what skills and qualities are most important to such work? How have you made use of information technology in your political efforts? LEONID VOLKOV: This was quite a natural move. I used to be the top-level manager for Russia’s fourth-largest software company, and I decided I had to care more about my city. I didn’t like the people who represented me on the City Council, so I got myself elected to City Council exactly ten years ago, in the spring of 2009. And this was how it all started. I found myself as the only Independent on the City Council of Russia’s fourth largest city, my home city of Yekaterinburg. I quickly realized what was going wrong with local politics. My IT background, my IT skills—these are the only ways the opposition can communicate with the voters. Putin’s Kremlin is in control of all the other possible media—TV, radio, newspapers—so we can’t get any kind of access to the television. The only thing we can do is reach out through social media, YouTube, mailing lists, all approaches that require a lot of information technology. We have to be more sophisticated and outsmart the Russian government because we have few resources. -

PROGRAMME International Forum COGNITIVE NEUROSCIENCE – 2018

Ural Federal University named after first President of Russia B. N. Yeltsin The Ural State Medical University The Branch Union «Neuronet» with the participation of The Russian Psychological Society (Sverdlovsk region branch) The Russian Academy of Education The Russian Academy of Sciences The medical centre «UMMC-Health» supported by Representative offices of the President of Russia in the Ural Federal district PROGRAMME International Forum COGNITIVE NEUROSCIENCE – 2018. November 6 – 8, 2018 Ekaterinburg ORGANIZING COMMITTEE: Chairman: Viktor Koksharov, PhD in Historical sciences, Head of Ural Federal University named after the first President of Russia B. Yeltsin (hereinafter – UrFU) (Ekaterinburg, Russia). Vice-chairman: Sergey Kortov, Dr. habil. of Economics, PhD in Physics and Mathematics, First Vice-principal of UrFU (Ekaterinburg, Russia). Vice-chairman: Alexandr Semenov, Executive Director of The Branch Union «Neuronet» (Moscow, Russia). Members of the Organizing Committee: Georgy Borisov, Alexey Kislov, Nadezhda Korepina, Olga Kuntareva, Kseniya Kunnikova, Alexander Kotyusov, Maria Lavrova, Alexey Maltsev, Svetlana Pavlova, Elvira Symanyuk, Nadezhda Terlyga, Irina Polyakova, Julia Pyankova. PROGRAMME COMMITTEE: Chairman: Sergey Malykh, Dr. of Psychology, professor, Academician of the Russian Academy of Education, Head of the Laboratory of age Psychogenetics at Russian Academy of Education (Moscow, Russia). Vice-chairman: Elvira Symanyuk, Dr. habil. of Psychological Sciences, Head of the Department of General and Social Psychology, Director Ural Institute of Humanities at UrFU, Professor at the Russian Academy of Education, Head of regional scientific centre of the Russian Academy of Education (Ekaterinburg, Russia). Members: Olga Vindeker, Olga Dorogina, Irina Yershova, Jes Olesen, Evald Zeer, Sergey Kiselev, Elena Lebedeva, Olga Lomtadidze, Margarita Permyakova, Anna Pecherkina, Alena Sidenkova, Sergey Hatsko, Yuriy Shipitsin, Jaap Kappelle.