Extracting Information Networks from the Blogosphere

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Early Working Draft Raw Materials, Creative Works, and Cultural

Early Working Draft Raw Materials, Creative Works, and Cultural Hierarchies Andrew Gilden In disputes involving creative works, courts increasingly ask whether a preexisting text, image or persona has been used as “raw material” for new authorship. If so, the accused infringer becomes shielded by the fair use doctrine under copyright law or a First Amendment defense under right of publicity law. Although copyright and right of publicity decisions have begun to rely heavily on the concept, neither the case law nor existing scholarship has explored what it actually means to use something as “raw material,” whether this inquiry adequately draws lines between infringing and non-infringing conduct, or how well it maps onto the expressive values that the fair use doctrine and the First Amendment defense aim to capture. This Article reveals serious problems with the raw material inquiry. First, a survey of all decisions invoking raw materials uncovers troubling distributional patterns; in nearly every case the winner is the party in the more privileged class, race or gender position. Notwithstanding courts’ assurance that the inquiry is “straightforward,” distinctions between raw and “cooked” materials appear to be structured by a range of social hierarchies operating in the background. Second, these cases express ethically troubling messages about the use and reuse of text, image and likeness. The more offensive, callous and objectionable the appropriation, the more visibly “raw” the preexisting material is likely to be. Given the shortcomings of the raw material inquiry, this Article argues that courts should engage more directly with an accused infringer’s actual creative process and rely less on a formal comparison between the two works in dispute. -

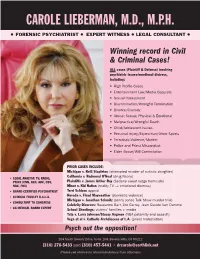

Carole Lieberman, M.D., M.P.H

CAROLE LIEBERMAN, M.D., M.P.H. | FORENSIC PSYCHIATRIST | EXPERT WITNESS | LEGAL CONSULTANT | Winning record in Civil & Criminal Cases! ALL cases (Plaintiff & Defense) involving psychiatric issues/emotional distress, including: • High Profile Cases • Entertainment Law/Media Copycats • Sexual Harassment • Discrimination/Wrongful Termination • Divorce/Custody • Abuse: Sexual, Physical & Emotional • Malpractice/Wrongful Death • Child/Adolescent issues • Personal Injury/Equestrian/Other Sports • Terrorism/Violence/Murder • Police and Priest Misconduct • Elder Abuse/Will Contestation PRIOR CASES INCLUDE: Michigan v. Kelli Stapleton (attempted murder of autistic daughter); California v. Redmond O’Neal (drug felony); Plaintiffs v. James Arthur Ray (Sedona sweat lodge homicide); Minor v. Kid Nation (reality TV → emotional distress); Terri Schiavo appeal; Nevada v. Floyd Mayweather (domestic violence); Michigan v. Jonathan Schmitz (Jenny Jones Talk Show murder trial); Celebrity Divorces: Roseanne Barr, Jim Carrey, Jean Claude Van Damme; School Shootings: victims’ families v. media; Tate v. Larry Johnson/Stacey Augman (NBA paternity and assault); Vega et al v. Catholic Archdiocese of L.A. (priest molestation) • LEGAL ANALYST: TV, RADIO, PRINT (CNN, HLN, ABC, CBS, NBC, FOX) • BOARD CERTIFIED PSYCHIATRIST • CLINICAL FACULTY U.C.L.A. • CONSULTANT TO CONGRESS • CA MEDICAL BOARD EXPERT Psych out the opposition! 204 South Beverly Drive, Suite 108, Beverly Hills, CA 90212 (310) 278-5433 (310) 457-5441 [email protected] and • (Please see reverse for recommendations from attorneys) Here’s what your colleagues are saying about Carole Lieberman, M.D. Psychiatrist and Expert Witness: “I have had the opportunity to work with the best expert witnesses in the business, and I would put Dr. “I have rarely had the good fortune to present such well-considered testimony from such a -

Fame Us Introduction

FAME US INTRODUCTION Brian Howell A while ago, I started taking pictures of celebrity impersonators after a string of chance encounters with look-alikes. When I began researching the subject, I had no idea that so many people made a living at it. I became intrigued, and as a result, this project was born. I attended a number of conventions for the celebrity impersonator industry; there are two major gatherings a year: Las Vegas in the spring and Orlando, Florida in the fall. The impersonators are there to showcase their talent and to network with others in the industry; the conventions are like any other, except that on any given day, you might see George W. Bush hamming it up with Ozzy Osbourne, or bump into Marilyn Monroe wearing her dress from The Seven Year Itch, except there is no subway air vent to blow it up to her waist. Impersonation is a full-time career for many of the people who appear in this book. Some of them make a great living at it, including a few who look more like the celebrity they impersonate than the actual celebrity himself. While getting to know them better, I’ve learned that the biggest misconception about impersonators is that they are somehow obsessed fans desperate to physically become the celebrity they impersonate. But I never met anyone like that. On the contrary, many impersonators got into the business because of others—those passersby on the street who stop and stare, and ask, “Has anyone ever told you you look like…?” Simply put, and not in a derogatory way, impersonators are opportunistic, taking advantage of a culture with an insatiable, uncontrollable thirst for celebrity and all that it entails. -

FESTIVAL to the Volcanoes of Auvergne – Unforgettable

Inspiring you to reach your dreams #19 / July-August 2010 Glorious Cannes, Glamorous summer MICK JAGGER A Stone in exile? PALME D’OR WINNER Exclusive interview ALEX COX British film expert BELGIAN EU PRESIDENCY Back in business? EDITORIAL Cannes-tastic! Cannes is one of those places where you feel anything could happen. Away from the glitz and glamour of La Croisette and its famous red carpet, a whole industry throbs in the background. In such a city of movers and shakers, a place which seems practically never to sleep and a town in which new stars are born, Together is right at home. To save you the trouble of trekking the thousand-odd kilometres to Cannes, we selflessly ventured there to bring you the very best in fashion shows, private parties and everything you might expect from the hardest working (and hardest partying) magazine in Brussels. cocktails, courtesy of Chivas and the utter exhilaration of being handed the controls of J craft’s latest speedboat the Torpedo as it topped 40 knots in Cannes harbour, we did our best to enjoy ourselves. Of course, it’s not all parties. Closer to home, Andy Carling returns with a sideways look at how Belgium may handle its six months as head of the EU’s rotating presidency. There’s still time to get yourself in trim for the beach, too – let Together and Power Plate Institute Brussels introduce you to the latest exercise craze, with our fantastic readers giveaway. Plus, as we know you’ve come to expect and enjoy by now, there’s a beautiful fashion photoshoot, Kimberley Lovato’s sensual -

A Network Approach to Define Modularity of Components In

A Network Approach to Define Modularity of Components in Complex Products Manuel E. Sosa, Technology and Operations Management Area, INSEAD, 77305 Fontainebleau, France, e-mail: [email protected]; Steven D. Eppinger, Sloan School of Management, Massachusetts Institute of Technology, Cambridge, Massachusetts 02139; Craig M. Rowles, Pratt & Whitney Aircraft, East Hartford, Connecticut 06108. Modularity has been defined at the product and system levels. However, little effort has gone into defining and quantifying modularity at the component level. We consider complex products as a network of components that share technical interfaces (or connections) in order to function as a whole and define component modularity based on the lack of connectivity among them. Building upon previous work in graph theory and social network analysis, we define three measures of component modularity based on the notion of centrality. Our measures consider how components share direct interfaces with adjacent components, how design interfaces may propagate to nonadjacent components in the product, and how components may act as bridges among other components through their interfaces. We calculate and interpret all three measures of component modularity by studying the product architecture of a large commercial aircraft engine. We illustrate the use of these measures to test the impact of modularity on component redesign. Our results show that the relationship between component modularity and component redesign depends on the type of interfaces connecting product components. We also discuss directions for future work. [DOI: 10.1115/1.2771182] 1 Introduction Previous research on product architecture has defined modular- The need to measure modularity has been highlighted implicitly by Saleh [12] in his recent invitation “to contribute to the growing field of flexibility in system design” (p. -

Celebrity Rankings Study

Interview dates: December 19-21, 2006 Interviews: 1,004 adults, 779 registered voters Margin of error: +3.1 for all adults; +3.6 for registered voters Project #81-5861-25 Associated Press/AOL Celebrity Rankings Study Celebrity Rankings Study 1. If you were asked to name a famous person to be the biggest villain of the year, whom would you choose? George W. Bush 25% Osama bin Laden 8% Saddam Hussein 6% President Mahmoud Ahmadinejad of Iran 5% Kim Jong Il, North Korean leader 2% Donald Rumsfeld 2% John Kerry 1% Rosie O'Donnell 1% Dick Cheney 1% Hillary Clinton 1% Brad Pitt 1% Tom Cruise 1% Satan/The Devil 1% Donald Trump 1% O.J. Simpson 1% Hugo Chavez 1% George Clooney 1% Nancy Pelosi 1% Bill Clinton 1% Colin Farrell 1% Oprah Winfrey 1% Mel Gibson - Paris Hilton - Terrell Owens - Britney Spears - Angelina Jolie - Arnold Schwarzenegger - Santa Claus - Bill O'Reilly - Martha Stewart - Matt Damon - Other 15% None - (DK/NS) 20% 1101 Connecticut Avenue NW, Suite 200 Washington, DC 20036 www.Ipsos-NA.com/PA (202) 463-7300 Ipsos Public Affairs Project #81-5861-25 Page 2 December 19-21, 2006 AP-AOL Celebrity Rankings Study 2. If you were asked to name a famous person to be the biggest hero of the year, whom would you choose? George W. Bush 13% Soldiers/troops in Iraq 6% Oprah Winfrey 3% Barack Obama 3% Jesus Christ 3% Bono 2% Angelina Jolie 1% Al Gore 1% Arnold Schwarzenegger 1% Colin Powell 1% Mel Gibson 1% George Clooney 1% Bill Gates 1% Donald Rumsfeld 1% Bill Clinton 1% Jimmy Carter 1% Martin Luther King 1% Condoleeza Rice 1% Billy Graham 1% Warren Buffett 1% Pope Benedict - Hillary Clinton - Brad Pitt - Paris Hilton - Nancy Pelosi - Tiger Woods - John McCain - Sylvester Stallone - Tom Cruise - Lance Armstrong - Donald Trump - Mother Theresa - Ladainian Tomlinson - Brett Favre - Other 25% None 1% (DK/NS) 27% Ipsos Public Affairs Project #81-5861-25 Page 3 December 19-21, 2006 AP-AOL Celebrity Rankings Study 3. -

Literature and Other Arts: Hip Hop, Eminem and His Multiple Identities

UNDERGRADUATE THESIS «Literature and Other Arts: Hip Hop, Eminem and his multiple identities» Author: Juan Muñoz De Palacio Supervisor: Rafael Vélez Núñez DEGREE IN ENGLISH STUDIES Academic Year 2014-2015 Submission date: 9/09/2015 FACULTAD DE FILOSOFÍA Y LETRAS, UNIVERSIDAD DE CÁDIZ INDEX: INDEX 3; SUMMARIES AND KEY WORDS 4; INTRODUCTION 5; 1. HIP HOP 8; 1.1. THE 4 ELEMENTS 8; 1.2. HISTORICAL BACKGROUND 10; 1.3. WORLDWIDE RAP 21; 2. EMINEM 25; 2.1. BIOGRAPHICAL KEY FEATURES 25; 2.2 RACE AND GENDER CONFLICTS -

Party of the Year

SOCIAL SAFARI | by R. COURI HAY Ariana Rockefeller, Madonna @ Raising Malawi Hannah Selleck and Georgina Bloomberg @ NY Botanical Garden Winter Wonderland Ball Event planner Harriette Rose Katz @ Animal Ashram Anne Hathaway @ NY Botanical Garden Winter Wonderland Ball Prince Pavlos, Princess Marie-Chantal and Princess Olympia of Greece and Denmark graced Gstaad Board Members Audrey and Martin Gruss @ Lincoln Center Fund Gala, honoring Carolina Herrera Georgina Chapman hosted a dinner in Gstaad Karolína Kurková @ Raising Malawi PARTY OF THE YEAR Gstaad, Valentino, Georgina Chapman, Botanical Garden, Carolina Herrera, Wilbur Ross, Madonna & Animal Ashram TEARS OF A CLOWN If parties could win an Oscar, then Madonna’s Raising Malawi bacchanal would take home top honors for 2016. It was a night of a hundred stars including Leonardo DiCaprio, Adriana Lima, A-Rod, Donna Karan, Len Blavatnik, Jeremy Scott, Natasha Poly, Alexander Gilkes, P. Diddy, Calvin Klein, Ron Burkle and David Blaine, who smirked as he swallowed a broken wineglass. They all played a role in this night of fun, performance artwork by Damien Hirst, Ai Weiwei, Julian Schnabel, Cindy Sherman, Richard Prince, Steven Meisel and a piece by Tracey Emin, who beamed from the audience when it sold for $550,000. Karolína Kurková sold a diamond snake by Bulgari to Prince Alexander von Furstenberg for $180,000, but only after she offered to lick the serpent. Ooh la la! When a Fiat 500 came on the block, she slapped Agnelli heir Lapo Elkann, saying, “This car has been arrested along with its -

Event Sponsorship Proposal

presents 8th Edition CONTENTS I. About Us p.3 The Company – VIP Belgium p.3 References p.4 The Foundation – Wheeling Around the World p.6 The Founder p.7 II. Cine-Arts Galas p.8 The Concept p.8 Cine-Arts Gala 2015 p.9 Cine-Arts Gala 2016 p.12 Gala Program p.13 Auction p.14 III. Become a Partner p.15 Sponsorship Opportunities p.15 Communication Benefits p.15 IV. Contact p.16 I. About Us The company VIP Belgium Founded in 2008, VIP BELGIUM has been a key player in the Belgian and international events scene. It organizes events including private parties, galas, company and charity events. For the last eight years, VIP BELGIUM has also been renowned for its outstanding events held during the International Film Festival in Cannes at the most exclusive locations such as private Yachts and Villas, Majestic Hotel, Grand Hyatt Cannes Hotel Martinez, the Palm Beach,… Each time, a unique concept brings together not only the Belgian and the French, but also the international audience of the Cannes Film Festival. VIP BELGIUM is an active company, trusted within its field, with a solid reputation among its most loyal customers. www.vipbelgium.com From left to right: Michelle Rodriguez, Penelope Cruz, Bruce Willis, Jean-Claude Van Damme, VIP Belgium Yacht at Cannes Film Festival 2010, Prince Albert of Monaco Reference A quick overview of companies which have already trusted Alexandre Bodart Pinto: They talked about us: The Foundation Wheeling Around the World Nowadays, 20% of the population faces disability, forming the largest minority in the world. -

Practical Applications of Community Detection

Volume 6, Issue 4, April 2016 ISSN: 2277 128X International Journal of Advanced Research in Computer Science and Software Engineering Research Paper Available online at: www.ijarcsse.com Practical Applications of Community Detection Mini Singh Ahuja1, Jatinder Singh2, Neha3 1 Research Scholar, Department of Computer Science, Punjab Technical University, Punjab, India 2 Professor, Department of Computer Science, KC Group of Institutes Nawashahr, Punjab, India 3 Student (M.Tech), Department of Computer Science, Regional Campus Gurdaspur, Punjab, India Abstract: A network is a collection of entities that are interconnected with links. The widespread use of social media applications like YouTube, Flickr and Facebook is responsible for the evolution of more complex networks. Online social networks like Facebook and Twitter are very large and dynamic complex networks. A community is a group of nodes that are more densely connected to each other than to nodes outside the community. Within a community, nodes are more likely to be connected to each other and less likely to be connected with nodes of other communities. Community detection in such networks is one of the most challenging tasks. Community structures provide solutions to many real-world problems. In this paper we discuss various application areas of community structures in complex networks. Keywords: complex network, community structures, applications of community structures, online social networks, community detection algorithms, etc. I. INTRODUCTION A network is a collection of entities called nodes or vertices which are connected through edges or links. Computers that are connected, web pages that link to each other, and groups of friends are basic examples of networks. A complex network is a group of interacting entities with some non-trivial dynamical behavior [1]. -

Social Network Analysis with Content and Graphs

Social Network Analysis with Content and Graphs William M. Campbell, Charlie K. Dagli, and Clifford J. Weinstein Social network analysis has undergone a renaissance with the ubiquity and quantity of content from social media, web pages, and sensors. This content is a rich data source for constructing and analyzing social networks, but its enormity and unstructured nature also present multiple challenges. Work at Lincoln Laboratory is addressing the problems in constructing networks from unstructured data, analyzing the community structure of a network, and inferring information from networks. Graph analytics have proven to be valuable tools in solving these challenges. Through the use of these tools, Laboratory researchers have achieved promising results on real-world data. As a consequence of changing economic and social realities, the increased availability of large-scale, real-world sociographic data has ushered in a new era of research and development in social network analysis. The quantity of content-based data created every day by traditional and social media, sensors, and mobile devices provides great opportunities and unique challenges for the automatic analysis, prediction, and summarization in the era of what has been dubbed “Big Data.” Lincoln Laboratory has been investigating approaches for computational social network analysis that focus on three areas: constructing social networks, analyzing the structure and dynamics of a community, and developing inferences from social networks. Network construction from general, real-world data presents several unexpected challenges owing to the data domains themselves, e.g., information extraction and preprocessing, and to the data structures used for knowledge -

Toreador of Paris: Facilitated Various Parties and Individual Pleasures

Toreador of Paris: Facilitated various parties and individual pleasures. The most public and lucrative of these was probably the unicorn viewing. Unicorn Viewing 600 euros [per ticket] Party on June 10th (with wine) 52,000 euros Party on June 12th (without wine) 20,000 euros Private Session on June 13th 500 euros Private Session on June 14th (All night) 31,000 euros Party on June 17th (with wine) 81,000 euros Unicorn Viewing (2) 800 euros [per ticket] Party on June 20th (without wine) 16,000 euros Private Session on June 22nd 41,000 euros Private Session on June 23rd (All night) 0 euros Public Beating on June 26th 1000 euros [per ticket] Party on June 27th (with wine) 65,000 euros Attendance: Unicorn Viewing: 42 Kindred (25,200 euros) Unicorn Viewing (2): 31 Kindred (24,800 euros) Public Beating: 15 Kindred (15,000 euros) Total: 311,500 euros 430,617 dollars Nosferatu of Germany – The Nosferatu were given free access to the illusion of Hedi Klum, a svelte German model, in exchange for information on Ash Gently, but those who wanted specific things still had to pay. Private Session on July18 (Toni Garrn) 50 euros Private Session on July18 (Daniela Amavia) 50 euros Private Session on July19 (Blond woman) 50 euros Private Session on July19 (Carlott Cordes) 50 euros Private Session on July19 (Mary Kate and Ashley) 150 euros Private Session on July20 (Toni Garrn) 50 euros Private Session on July20 (Daniela Amavia) 50 euros Private Session on July20 (Madonna) 50 euros Private Session on July21 (Madonna) 50 euros Private Session on July21 (Madonna)