Case Study: Beyond Homogeneous Decomposition with Qbox: Scaling Long-Range Forces on Massively Parallel Systems

Recommended publications

Free and Open Source Software for Computational Chemistry Education

Free and Open Source Software for Computational Chemistry Education. Susi Lehtola*,† and Antti J. Karttunen‡. †Molecular Sciences Software Institute, Blacksburg, Virginia 24061, United States. ‡Department of Chemistry and Materials Science, Aalto University, Espoo, Finland. E-mail: [email protected].fi

Abstract: Long in the making, computational chemistry for the masses [J. Chem. Educ. 1996, 73, 104] is finally here. We point out the existence of a variety of free and open source software (FOSS) packages for computational chemistry that offer a wide range of functionality all the way from approximate semiempirical calculations with tight-binding density functional theory to sophisticated ab initio wave function methods such as coupled-cluster theory, both for molecular and for solid-state systems. By their very definition, FOSS packages allow usage for whatever purpose by anyone, meaning they can also be used in industrial applications without limitation. Also, FOSS software has no limitations to redistribution in source or binary form, allowing their easy distribution and installation by third parties. Many FOSS scientific software packages are available as part of popular Linux distributions, and other package managers such as pip and conda. Combined with the remarkable increase in the power of personal devices (which rival that of the fastest supercomputers in the world of the 1990s), a decentralized model for teaching computational chemistry is now possible, enabling students to perform reasonable modeling on their own computing devices, in the bring your own device (BYOD) scheme. In addition to the programs' use for various applications, open access to the programs' source code also enables comprehensive teaching strategies, as actual algorithms' implementations can be used in teaching.

Quan Wan, "First Principles Simulations of Vibrational Spectra

THE UNIVERSITY OF CHICAGO. FIRST PRINCIPLES SIMULATIONS OF VIBRATIONAL SPECTRA OF AQUEOUS SYSTEMS. A dissertation submitted to the Faculty of the Institute for Molecular Engineering in candidacy for the degree of Doctor of Philosophy, by Quan Wan. Chicago, Illinois, August 2015. Copyright © 2015 by Quan Wan. All Rights Reserved. For Jing.

TABLE OF CONTENTS
LIST OF FIGURES
LIST OF TABLES
ACKNOWLEDGMENTS
ABSTRACT
1 INTRODUCTION
2 FIRST PRINCIPLES CALCULATIONS OF GROUND STATE PROPERTIES
2.1 Density functional theory
2.1.1 The Schrödinger Equation
2.1.2 Density Functional Theory and the Kohn-Sham Ansatz
2.1.3 Approximations to the Exchange-Correlation Energy Functional
2.1.4 Planewave Pseudopotential Scheme
2.1.5 First Principles Molecular Dynamics
3 FIRST-PRINCIPLES CALCULATIONS IN THE PRESENCE OF EXTERNAL ELECTRIC FIELDS
3.1 Density functional perturbation theory for Homogeneous Electric Field Perturbations
3.1.1 Linear Response within DFPT
3.1.2 Homogeneous Electric Field Perturbations
3.1.3 Implementation of DFPT in the Qbox Code
3.2 Finite Field Methods for Homogeneous Electric Field
3.2.1 Finite Field Methods
3.2.2 Computational Details of Finite Field Implementations
3.2.3 Results for Single Water Molecules and Liquid Water
3.2.4 Conclusions
4 VIBRATIONAL SIGNATURES OF CHARGE FLUCTUATIONS IN THE HYDROGEN BOND NETWORK OF LIQUID WATER
4.1 Introduction to Vibrational Spectroscopy
4.1.1 Normal Mode and TCF Approaches
4.1.2 Infrared Spectroscopy
4.1.3 Raman Spectroscopy
4.2 Raman Spectroscopy for Liquid Water
4.3 Theoretical Methods
4.3.1 Simulation Details
4.3.2 Effective molecular polarizabilities

Density Functional Theory

Density Functional Theory Fundamentals, Video V.i. Density Functional Theory: New Developments. Donald G. Truhlar, Department of Chemistry, University of Minnesota. Support: AFOSR, NSF, EMSL.

Why is electronic structure theory important? Most of the information we want to know about chemistry is in the electron density and electronic energy: dipole moment, charge distribution, Born-Oppenheimer approximation (1927), potential energy surface, molecular geometry, barrier heights, bond energies, spectra.

How do we calculate the electronic structure? Example: electronic structure of benzene (42 electrons). Erwin Schrödinger, 1925: wave function theory. All the information is contained in the wave function, an antisymmetric function of 126 coordinates and 42 electronic spin components.

Theoretical Musings!
● Ψ is complicated.
● Difficult to interpret.
● Can we simplify things?
● Ψ has strange units: (prob. density)^(1/2).
● Can we not use a physical observable?
● What particular physical observable is useful?
● Physical observable that allows us to construct the Hamiltonian a priori.

Pierre Hohenberg and Walter Kohn, 1964: density functional theory. All the information is contained in the density, a simple function of 3 coordinates.

Electronic structure (continued): Erwin Schrödinger

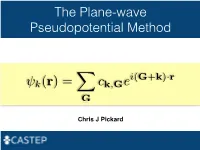

The Plane-Wave Pseudopotential Method

The Plane-wave Pseudopotential Method. Chris J Pickard.

$$\psi_{\mathbf{k}}(\mathbf{r}) = \sum_{\mathbf{G}} c_{\mathbf{k},\mathbf{G}}\, e^{i(\mathbf{G}+\mathbf{k})\cdot\mathbf{r}}$$

Slide titles: Electrons in a Solid; Nearly Free Electrons; Electronic Structures Methods; Empirical Pseudopotentials; Ab Initio Pseudopotentials; Ultrasoft Pseudopotentials (Vanderbilt, 1990); Projector Augmented Waves; Deriving the Pseudo Hamiltonian.

PAW vs PAW
(I) C. G. van de Walle and P. E. Bloechl 1993: PAW to calculate all electron properties from PSP calculations.
(II) P. E. Bloechl 1994: The PAW electronic structure method.
The two are linked, but should not be confused.

References
David Vanderbilt, Soft self-consistent pseudopotentials in a generalised eigenvalue formalism, PRB 1990 41 7892
Kari Laasonen et al, Car-Parrinello molecular dynamics with Vanderbilt ultrasoft pseudopotentials, PRB 1993 47 10142
P.E. Bloechl, Projector augmented-wave method, PRB 1994 50 17953
G. Kresse and D. Joubert, From ultrasoft pseudopotentials to the projector augmented-wave method, PRB 1999 59 1758

On-the-fly Pseudopotentials
- All quantities for PAW (I) reconstruction available
- Allows automatic consistency of the potentials with functionals
- Even fewer input files required
- The code in CASTEP is a cut down (fast) pseudopotential code
- There are internal databases
- Other generation codes (Vanderbilt's original, OPIUM, LD1) exist

Further slide titles: It all works!; Kohn-Sham Equations; Being (Self) Consistent; Eigenproblem in a Basis; Just a Few Atoms; In a Crystal; Plane-waves

Introduction to DFT and the Plane-Wave Pseudopotential Method

Introduction to DFT and the plane-wave pseudopotential method. Keith Refson, STFC Rutherford Appleton Laboratory, Chilton, Didcot, OXON OX11 0QX. 23 Apr 2014. Parallel Materials Modelling Packages @ EPCC.

Outline: Introduction; Synopsis; Motivation; Some ab initio codes; Quantum-mechanical approaches; Density Functional Theory; Electronic Structure of Condensed Phases; Total-energy calculations; Basis sets; Plane-waves and Pseudopotentials; How to solve the equations.

Synopsis
A guided tour inside the "black box" of ab-initio simulation.
• The rise of quantum-mechanical simulations.
• Wavefunction-based theory
• Density-functional theory (DFT)
• Quantum theory in periodic boundaries
• Plane-wave and other basis sets
• SCF solvers
• Molecular Dynamics

Recommended Reading and Further Study
• Jorge Kohanoff, Electronic Structure Calculations for Solids and Molecules, Theory and Computational Methods, Cambridge, ISBN-13: 9780521815918
• Dominik Marx, Jürg Hutter, Ab Initio Molecular Dynamics: Basic Theory and Advanced Methods, Cambridge University Press, ISBN: 0521898633
• Richard M. Martin, Electronic Structure: Basic Theory and Practical Methods: Basic Theory and Practical Density Functional Approaches Vol 1, Cambridge University Press, ISBN: 0521782856

“Band Structure” for Electronic Structure Description

www.fhi-berlin.mpg.de. Fundamentals of heterogeneous catalysis: The very basics of "band structure" for electronic structure description. R. Schlögl.

Disclaimer
• This lecture is a qualitative introduction into the concept of electronic structure descriptions different from "chemical bonds".
• No mathematics, unfortunately not rigorous.
• For real understanding read texts on solid state physics.
• Hellwege, Marfunin, Ibach+Lüth
• The intention is to give an impression about concepts of electronic structure theory of solids.

Electronic structure
• For chemists well described by Lewis formalism.
• "chemical bonds".
• Theorists derive chemical bonding from interaction of atoms with all their electrons.
• Quantum chemistry with fundamentally different approaches:
  – DFT (density functional theory), see introduction in this series
  – Wavefunction-based (many variants).
• All solve the Schrödinger equation.
• Arrive at description of energetics and geometry of atomic arrangements; "molecules" or unit cells.

Catalysis and energy structure
• The surface of a solid is traditionally not described by electronic structure theory:
• No periodicity with the bulk: cluster or slab models.
• Bulk structure infinite periodically and defect-free.
• Surface electronic structure theory independent field of research with similar basics.
• Never assume to explain reactivity with bulk electronic structure: accuracy, model assumptions, termination issue.
• But: electronic

Simple Molecular Orbital Theory Chapter 5

Simple Molecular Orbital Theory. Chapter 5. Wednesday, October 7, 2015.

Using Symmetry: Molecular Orbitals
One approach to understanding the electronic structure of molecules is called Molecular Orbital Theory.
• MO theory assumes that the valence electrons of the atoms within a molecule become the valence electrons of the entire molecule.
• Molecular orbitals are constructed by taking linear combinations of the valence orbitals of atoms within the molecule. For example, consider H2: [figure: two 1s orbitals combining]
• Symmetry will allow us to treat more complex molecules by helping us to determine which AOs combine to make MOs.

LCAO MO Theory: MO Math for Diatomic Molecules
For a diatomic A–B with atomic orbitals $\phi_1$ (on A) and $\phi_2$ (on B), each MO may be written as an LCAO:

$$\psi = c_1\phi_1 + c_2\phi_2$$

Since the probability density is given by the square of the wavefunction:

$$\psi^2 = c_1^2\phi_1^2 + 2c_1c_2\,\phi_1\phi_2 + c_2^2\phi_2^2$$

The first term is the probability of finding the electron close to atom A, the middle term is the overlap term (important between the atoms), and the last term is the same for atom B.

The individual AOs are normalized (100% probability of finding the electron somewhere for each free atom):

$$\int \phi_1^2\, d\tau = \int \phi_2^2\, d\tau = 1$$

MO Math for Homonuclear Diatomic Molecules
For two identical AOs on identical atoms, the electrons are equally shared, so $c_1^2 = c_2^2$. In other words, $c_1 = \pm c_2$. So we have two MOs from the two AOs:

$$\psi_+ = c_{+,1}(\phi_1 + \phi_2), \qquad \psi_- = c_{-,1}(\phi_1 - \phi_2)$$

After normalization (setting $\int \psi_+^2\, d\tau = 1$ and $\int \psi_-^2\, d\tau = 1$):

$$\psi_+ = \frac{1}{[2(1+S)]^{1/2}}(\phi_1 + \phi_2), \qquad \psi_- = \frac{1}{[2(1-S)]^{1/2}}(\phi_1 - \phi_2)$$

where $S$ is the overlap integral, $S = \int \phi_1\phi_2\, d\tau$, with $0 < S < 1$.

LCAO MO Energy Diagram for H2
H2 molecule: two 1s atomic orbitals combine to make one bonding and one antibonding molecular orbital.
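The normalization constants in the excerpt above can be checked numerically. The following is a minimal sketch, not part of the original slides: it assumes hydrogen 1s orbitals with unit exponent, for which the overlap has the closed form S = exp(-R)(1 + R + R²/3) in atomic units, and the function names `overlap_1s`, `lcao_norms`, and `norm_integral` are hypothetical.

```python
import math


def overlap_1s(r_bohr: float) -> float:
    """Overlap S of two hydrogen 1s orbitals (exponent 1) separated by r_bohr.

    Assumption for illustration: unit-exponent 1s orbitals, atomic units,
    so that S = exp(-R) * (1 + R + R^2 / 3).
    """
    return math.exp(-r_bohr) * (1.0 + r_bohr + r_bohr ** 2 / 3.0)


def lcao_norms(s: float) -> tuple[float, float]:
    """Normalization constants of the bonding (+) and antibonding (-) MOs."""
    if not 0.0 <= s < 1.0:
        raise ValueError("overlap must satisfy 0 <= S < 1")
    n_plus = 1.0 / math.sqrt(2.0 * (1.0 + s))
    n_minus = 1.0 / math.sqrt(2.0 * (1.0 - s))
    return n_plus, n_minus


def norm_integral(coeff: float, sign: int, s: float) -> float:
    """Integral of psi^2 for psi = coeff * (phi1 + sign * phi2).

    Uses only the facts that each AO is normalized (integral 1) and that
    the cross term integrates to the overlap S.
    """
    return coeff ** 2 * (1.0 + 2.0 * sign * s + 1.0)


if __name__ == "__main__":
    s = overlap_1s(1.4)  # 1.4 bohr is close to the H2 bond length
    n_plus, n_minus = lcao_norms(s)
    print(f"S = {s:.4f}, N+ = {n_plus:.4f}, N- = {n_minus:.4f}")
    print(norm_integral(n_plus, +1, s), norm_integral(n_minus, -1, s))
```

Both printed integrals should come out as 1 to within rounding, which is just the statement that the factors 1/[2(1±S)]^(1/2) normalize the two combinations.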

Qbox (Qb@Ll Branch)

Qbox (qb@ll branch) Summary, Version 1.2

Purpose of Benchmark
Qbox is a first-principles molecular dynamics code used to compute the properties of materials directly from the underlying physics equations. Density Functional Theory is used to provide a quantum-mechanical description of chemically-active electrons, and nonlocal norm-conserving pseudopotentials are used to represent ions and core electrons. The method has O(N^3) computational complexity, where N is the total number of valence electrons in the system.

Characteristics of Benchmark
The computational workload of a Qbox run is split between parallel dense linear algebra, carried out by the ScaLAPACK library, and a custom 3D Fast Fourier Transform. The primary data structure, the electronic wavefunction, is distributed across a 2D MPI process grid. The shape of the process grid is defined by the nrowmax variable set in the Qbox input file, which sets the number of process rows. Parallel performance can vary significantly with process grid shape, although past experience has shown that highly rectangular process grids (e.g. 2048 x 64) give the best performance. The majority of the run time is spent in matrix multiplication, Gram-Schmidt orthogonalization and parallel FFTs. Several ScaLAPACK routines do not scale well beyond 100k MPI tasks, so threading can be used to run on more cores. The parallel FFT and a few other loops are threaded in Qbox with OpenMP, but most of the threading speedup comes from the use of threaded linear algebra kernels (BLAS, LAPACK, ESSL). Qbox requires a minimum of 1 GB of main memory per MPI task.

Mechanics of Building Benchmark
To compile Qbox: 1.
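To make the process-grid description in the Qbox excerpt concrete, here is a small sketch of how a 2D grid shape could follow from the task count and nrowmax. The rule used here (take the largest divisor of the task count that does not exceed nrowmax as the row count) is an assumption for illustration and is not confirmed by the excerpt; `process_grid` is a hypothetical helper, not a Qbox function.

```python
def process_grid(ntasks: int, nrowmax: int) -> tuple[int, int]:
    """Return (rows, columns) of a 2D process grid holding exactly ntasks tasks.

    Assumed rule (illustrative only): the row count is the largest divisor
    of ntasks that is no greater than nrowmax.
    """
    if ntasks < 1 or nrowmax < 1:
        raise ValueError("ntasks and nrowmax must be positive")
    nrows = min(nrowmax, ntasks)
    # Walk down until the row count divides the task count evenly.
    while ntasks % nrows != 0:
        nrows -= 1
    return nrows, ntasks // nrows


if __name__ == "__main__":
    # 131072 tasks with nrowmax = 2048 gives the 2048 x 64 shape
    # mentioned in the benchmark summary.
    rows, cols = process_grid(131072, 2048)
    print(f"{rows} x {cols}")
```

Under this assumed rule, changing nrowmax is the only knob needed to move between square and highly rectangular grids for a fixed task count.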

Electronic Structure Methods

Electronic Structure Methods
• One-electron models, e.g., Hückel theory
• Semiempirical methods, e.g., AM1, PM3, MNDO
• Single-reference based ab initio methods
  • Hartree-Fock
  • Perturbation theory: MP2, MP3, MP4
  • Coupled cluster theory, e.g., CCSD(T)
• Multi-configurational based ab initio methods
  • MCSCF and CASSCF (also GVB)
  • MR-MP2 and CASPT2
  • MR-CI
• Ab initio methods for IPs, EAs, excitation energies
  • CI singles (CIS)
  • TDHF
  • EOM and Green's function methods
  • CC2
• Density functional theory (DFT): combine functionals for exchange and for correlation
  • Local density approximation (LDA)
  • Perdew-Wang 91 (PW91)
  • Becke-Perdew (BP)
  • BeckeLYP (BLYP)
  • Becke3LYP (B3LYP)
• Time dependent DFT (TDDFT) (for excited states)
• Hybrid methods
• QM/MM
• Solvation models

Why so many methods to solve $H\psi = E\psi$?
Electronic Hamiltonian, BO approximation (in a.u.):

$$H = -\frac{1}{2}\sum_i \nabla_i^2 - \sum_i\sum_A \frac{Z_A}{r_{iA}} + \sum_{i<j}\frac{1}{r_{ij}} + \sum_{A<B}\frac{Z_A Z_B}{R_{AB}}$$

$1/r_{ij}$ is what makes it tough (nonseparable)!

Hartree-Fock method:
• Wavefunction: antisymmetrized product of orbitals, $\Psi = |\phi_1(\tau_1)\cdots\phi_N(\tau_N)|$. The Slater determinant accounts for the indistinguishability of electrons. In general, $\tau$ refers to both spatial and spin coordinates.

For the 2-electron case:

$$\Psi = |\phi_1(\tau_1)\phi_2(\tau_2)| = \frac{1}{\sqrt{2}}\left[\phi_1(\tau_1)\phi_2(\tau_2) - \phi_2(\tau_1)\phi_1(\tau_2)\right]$$

Energy minimized (variational principle): vary the orbitals to minimize $E$, keeping the orbitals orthogonal,

$$E = \frac{\langle\Psi|H|\Psi\rangle}{\langle\Psi|\Psi\rangle}$$

$$h_i\phi_i = \varepsilon_i\phi_i \quad\rightarrow\quad \text{orbitals and orbital energies}$$

$$h_i = -\frac{1}{2}\nabla_i^2 - \sum_A \frac{Z_A}{r_{iA}} + (J_i - K_i)$$

$$J_i = \sum_{j\neq i}\int \frac{|\phi_j(r_2)|^2}{r_{12}}\, dr_2, \qquad K_i\,\phi_i(r_1) = \sum_{j\neq i}\left[\int \frac{\phi_j(r_2)\,\phi_i(r_2)}{r_{12}}\, dr_2\right]\phi_j(r_1)$$

Characteristics
• Each e- "sees" average charge distribution due to other e-.

The Electronic Structure of Molecules an Experiment Using Molecular Orbital Theory

The Electronic Structure of Molecules: An Experiment Using Molecular Orbital Theory. Adapted from S.F. Sontum, S. Walstrum, and K. Jewett. Reading: Olmstead and Williams, Sections 10.4-10.5.

Purpose: Obtain molecular orbital results for total electronic energies, dipole moments, and bond orders for HCl, H2, NaH, O2, NO, and O3. The computationally derived bond orders are correlated with the qualitative molecular orbital diagram and the bond order obtained using the NO bond force constant.

Introduction to Spartan/Q-Chem
The molecule, above, is an anti-HIV protease inhibitor that was developed at Abbott Laboratories, Inc. Modeling of molecular structures has been instrumental in the design of new drug molecules like these inhibitors. The molecular model kits you used last week are very useful for visualizing chemical structures, but they do not give the quantitative information needed to design and build new molecules. Q-Chem is another "modeling kit" that we use to augment our information about molecules, visualize the molecules, and give us quantitative information about the structure of molecules. Q-Chem calculates the molecular orbitals and energies for molecules. If the calculation is set to "Equilibrium Geometry," Q-Chem will also vary the bond lengths and angles to find the lowest possible energy for your molecule. Q-Chem is a text-only program. A "front-end" program is necessary to set up the input files for Q-Chem and to visualize the results. We will use the Spartan program as a graphical "front-end" for Q-Chem. In other words, Spartan doesn't do the calculations, but rather acts as a go-between for you and the computational package.

Electronic Structure Calculations and Density Functional Theory

Electronic Structure Calculations and Density Functional Theory. Rodolphe Vuilleumier, Pôle de chimie théorique, Département de chimie de l'ENS, CNRS - École normale supérieure - UPMC. Formation ModPhyChem, Lyon, 09/10/2017.

Many applications of electronic structure calculations
Geometries and energies, equilibrium constants
Reaction mechanisms, reaction rates...
Vibrational spectroscopies
Properties, NMR spectra,...
Excited states
Force fields
From material science to biology through astrochemistry, organic chemistry...

Length and time scales
[figure: length scale from 0.1 nm through 10 nm to 1 µm, time scale from 1 ps through 1 ns and 1 µs to 1 s; levels of description run from nuclei and electrons, through atoms and molecules and coarse-grained models, to macroscopic variables (fields)]

Potential energy surface for the nuclei
[figure, © The LibreTexts libraries]

Born-Oppenheimer approximation
Total Hamiltonian of the system atoms+electrons:

$$\hat{H}_T = \hat{T}_N + \hat{V}_{NN} + \hat{H}$$

$M_I \gg m_e$ $\Rightarrow$ approximate system wavefunction:

$$\Psi_S(\{\vec{R}_I\}; \vec{r}_1, \dots, \vec{r}_N) = \Psi(\{\vec{R}_I\})\,\Psi_0(\vec{r}_1, \dots, \vec{r}_N; \{\vec{R}_I\})$$

$\Psi_0(\vec{r}_1, \dots, \vec{r}_N; \{\vec{R}_I\})$: the ground state electronic wavefunction of the electronic Hamiltonian $\hat{H}$ at fixed ionic configuration $\{\vec{R}_I\}$.

Energy of the electrons at this ionic configuration: $E_0(\{\vec{R}_I\}) = \langle\Psi_0$

The Atomic and Electronic Structure of Dislocations in Ga Based Nitride Semiconductors Imad Belabbas, Pierre Ruterana, Jun Chen, Gérard Nouet

The atomic and electronic structure of dislocations in Ga based nitride semiconductors. Imad Belabbas, Pierre Ruterana, Jun Chen, Gérard Nouet.

To cite this version: Imad Belabbas, Pierre Ruterana, Jun Chen, Gérard Nouet. The atomic and electronic structure of dislocations in Ga based nitride semiconductors. Philosophical Magazine, Taylor & Francis, 2006, 86 (15), pp.2245-2273. 10.1080/14786430600651996. hal-00513682

HAL Id: hal-00513682. https://hal.archives-ouvertes.fr/hal-00513682. Submitted on 1 Sep 2010.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Philosophical Magazine & Philosophical Magazine Letters. For Peer Review Only.
Journal: Philosophical Magazine & Philosophical Magazine Letters
Manuscript ID: TPHM-05-Sep-0420.R2
Journal Selection: Philosophical Magazine
Date Submitted by the Author: 08-Dec-2005
Complete List of Authors: BELABBAS, Imad; ENSICAEN, SIFCOM. Ruterana, Pierre; ENSICAEN, SIFCOM. CHEN, Jun; IUT, LRPMN. NOUET, Gérard; ENSICAEN, SIFCOM
Keywords: GaN, atomic structure, dislocations, electronic structure
http://mc.manuscriptcentral.com/pm-pml

The atomic and electronic structure of dislocations in Ga based nitride semiconductors. I.