Trinity Capability Improvement V2.4

Total pages: 16

File type: PDF, size: 1020Kb

Recommended publications

Free and Open Source Software for Computational Chemistry Education

Free and Open Source Software for Computational Chemistry Education. Susi Lehtola∗,† and Antti J. Karttunen‡. †Molecular Sciences Software Institute, Blacksburg, Virginia 24061, United States. ‡Department of Chemistry and Materials Science, Aalto University, Espoo, Finland. E-mail: [email protected].fi

Abstract: Long in the making, computational chemistry for the masses [J. Chem. Educ. 1996, 73, 104] is finally here. We point out the existence of a variety of free and open source software (FOSS) packages for computational chemistry that offer a wide range of functionality all the way from approximate semiempirical calculations with tight-binding density functional theory to sophisticated ab initio wave function methods such as coupled-cluster theory, both for molecular and for solid-state systems. By their very definition, FOSS packages allow usage for whatever purpose by anyone, meaning they can also be used in industrial applications without limitation. Also, FOSS software has no limitations to redistribution in source or binary form, allowing their easy distribution and installation by third parties. Many FOSS scientific software packages are available as part of popular Linux distributions, and other package managers such as pip and conda. Combined with the remarkable increase in the power of personal devices, which rival that of the fastest supercomputers in the world of the 1990s, a decentralized model for teaching computational chemistry is now possible, enabling students to perform reasonable modeling on their own computing devices, in the bring your own device (BYOD) scheme. In addition to the programs’ use for various applications, open access to the programs’ source code also enables comprehensive teaching strategies, as actual algorithms’ implementations can be used in teaching.

Quan Wan, "First Principles Simulations of Vibrational Spectra

THE UNIVERSITY OF CHICAGO. FIRST PRINCIPLES SIMULATIONS OF VIBRATIONAL SPECTRA OF AQUEOUS SYSTEMS. A DISSERTATION SUBMITTED TO THE FACULTY OF THE INSTITUTE FOR MOLECULAR ENGINEERING IN CANDIDACY FOR THE DEGREE OF DOCTOR OF PHILOSOPHY BY QUAN WAN. CHICAGO, ILLINOIS, AUGUST 2015. Copyright © 2015 by Quan Wan. All Rights Reserved. For Jing.

TABLE OF CONTENTS
LIST OF FIGURES
LIST OF TABLES
ACKNOWLEDGMENTS
ABSTRACT
1 INTRODUCTION
2 FIRST PRINCIPLES CALCULATIONS OF GROUND STATE PROPERTIES
  2.1 Density functional theory
    2.1.1 The Schrödinger Equation
    2.1.2 Density Functional Theory and the Kohn-Sham Ansatz
    2.1.3 Approximations to the Exchange-Correlation Energy Functional
    2.1.4 Planewave Pseudopotential Scheme
    2.1.5 First Principles Molecular Dynamics
3 FIRST-PRINCIPLES CALCULATIONS IN THE PRESENCE OF EXTERNAL ELECTRIC FIELDS
  3.1 Density functional perturbation theory for Homogeneous Electric Field Perturbations
    3.1.1 Linear Response within DFPT
    3.1.2 Homogeneous Electric Field Perturbations
    3.1.3 Implementation of DFPT in the Qbox Code
  3.2 Finite Field Methods for Homogeneous Electric Field
    3.2.1 Finite Field Methods
    3.2.2 Computational Details of Finite Field Implementations
    3.2.3 Results for Single Water Molecules and Liquid Water
    3.2.4 Conclusions
4 VIBRATIONAL SIGNATURES OF CHARGE FLUCTUATIONS IN THE HYDROGEN BOND NETWORK OF LIQUID WATER
  4.1 Introduction to Vibrational Spectroscopy
    4.1.1 Normal Mode and TCF Approaches
    4.1.2 Infrared Spectroscopy
    4.1.3 Raman Spectroscopy
  4.2 Raman Spectroscopy for Liquid Water
  4.3 Theoretical Methods
    4.3.1 Simulation Details
    4.3.2 Effective molecular polarizabilities

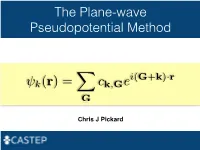

The Plane-Wave Pseudopotential Method

The Plane-wave Pseudopotential Method. $\psi_{\mathbf{k}}(\mathbf{r}) = \sum_{\mathbf{G}} c_{\mathbf{k},\mathbf{G}}\, e^{i(\mathbf{G}+\mathbf{k})\cdot\mathbf{r}}$. Chris J Pickard.

Slide titles: Electrons in a Solid; Nearly Free Electrons; Electronic Structures Methods; Empirical Pseudopotentials; Ab Initio Pseudopotentials; Ultrasoft Pseudopotentials (Vanderbilt, 1990); Projector Augmented Waves; Deriving the Pseudo Hamiltonian.

PAW vs PAW:
(I) C. G. van de Walle and P. E. Bloechl 1993: PAW to calculate all electron properties from PSP calculations.
(II) P. E. Bloechl 1994: The PAW electronic structure method.
The two are linked, but should not be confused.

References:
- David Vanderbilt, Soft self-consistent pseudopotentials in a generalised eigenvalue formalism, PRB 1990 41 7892
- Kari Laasonen et al, Car-Parrinello molecular dynamics with Vanderbilt ultrasoft pseudopotentials, PRB 1993 47 10142
- P.E. Bloechl, Projector augmented-wave method, PRB 1994 50 17953
- G. Kresse and D. Joubert, From ultrasoft pseudopotentials to the projector augmented-wave method, PRB 1999 59 1758

On-the-fly Pseudopotentials:
- All quantities for PAW (I) reconstruction available
- Allows automatic consistency of the potentials with functionals
- Even fewer input files required
- The code in CASTEP is a cut down (fast) pseudopotential code
- There are internal databases
- Other generation codes (Vanderbilt’s original, OPIUM, LD1) exist

Further slide titles: It all works!; Kohn-Sham Equations; Being (Self) Consistent; Eigenproblem in a Basis; Just a Few Atoms; In a Crystal; Plane-waves

Introduction to DFT and the Plane-Wave Pseudopotential Method

Introduction to DFT and the plane-wave pseudopotential method. Keith Refson, STFC Rutherford Appleton Laboratory, Chilton, Didcot, OXON OX11 0QX. 23 Apr 2014. Parallel Materials Modelling Packages @ EPCC (55 slides).

Outline: Introduction (Synopsis; Motivation; Some ab initio codes); Quantum-mechanical approaches; Density Functional Theory; Electronic Structure of Condensed Phases; Total-energy calculations; Basis sets; Plane-waves and Pseudopotentials; How to solve the equations.

Synopsis: A guided tour inside the “black box” of ab-initio simulation.
• The rise of quantum-mechanical simulations
• Wavefunction-based theory
• Density-functional theory (DFT)
• Quantum theory in periodic boundaries
• Plane-wave and other basis sets
• SCF solvers
• Molecular Dynamics

Recommended Reading and Further Study:
• Jorge Kohanoff, Electronic Structure Calculations for Solids and Molecules, Theory and Computational Methods, Cambridge, ISBN-13: 9780521815918
• Dominik Marx, Jürg Hutter, Ab Initio Molecular Dynamics: Basic Theory and Advanced Methods, Cambridge University Press, ISBN: 0521898633
• Richard M. Martin, Electronic Structure: Basic Theory and Practical Methods: Basic Theory and Practical Density Functional Approaches Vol 1, Cambridge University Press, ISBN: 0521782856

Qbox (qb@ll branch)

Qbox (qb@ll branch) Summary, Version 1.2

Purpose of Benchmark: Qbox is a first-principles molecular dynamics code used to compute the properties of materials directly from the underlying physics equations. Density Functional Theory is used to provide a quantum-mechanical description of chemically-active electrons and nonlocal norm-conserving pseudopotentials are used to represent ions and core electrons. The method has O(N^3) computational complexity, where N is the total number of valence electrons in the system.

Characteristics of Benchmark: The computational workload of a Qbox run is split between parallel dense linear algebra, carried out by the ScaLAPACK library, and a custom 3D Fast Fourier Transform. The primary data structure, the electronic wavefunction, is distributed across a 2D MPI process grid. The shape of the process grid is defined by the nrowmax variable set in the Qbox input file, which sets the number of process rows. Parallel performance can vary significantly with process grid shape, although past experience has shown that highly rectangular process grids (e.g. 2048 x 64) give the best performance. The majority of the run time is spent in matrix multiplication, Gram-Schmidt orthogonalization and parallel FFTs. Several ScaLAPACK routines do not scale well beyond 100k+ MPI tasks, so threading can be used to run on more cores. The parallel FFT and a few other loops are threaded in Qbox with OpenMP, but most of the threading speedup comes from the use of threaded linear algebra kernels (BLAS, LAPACK, ESSL). Qbox requires a minimum of 1GB of main memory per MPI task.

Mechanics of Building Benchmark: To compile Qbox: 1.
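The link between the MPI task count, nrowmax, and the process grid shape described in the benchmark summary can be illustrated with a small sketch. The excerpt only states that nrowmax sets the number of process rows; the rule used here (take the largest row count not exceeding nrowmax that divides the task count evenly) and the function name `process_grid_shape` are assumptions made for illustration, not something confirmed by the benchmark text.

```python
from typing import Tuple


def process_grid_shape(ntasks: int, nrowmax: int) -> Tuple[int, int]:
    """Return a hypothetical (rows, columns) shape for a 2D process grid.

    Assumption of this sketch: the number of rows is the largest value
    not exceeding nrowmax that divides ntasks evenly.
    """
    if ntasks < 1 or nrowmax < 1:
        raise ValueError("ntasks and nrowmax must be positive")
    nrows = min(nrowmax, ntasks)
    # Decrease the row count until the tasks fill a full rectangle.
    while ntasks % nrows != 0:
        nrows -= 1
    return nrows, ntasks // nrows


if __name__ == "__main__":
    # 131072 tasks with nrowmax = 2048 gives the 2048 x 64 grid
    # quoted in the benchmark summary as an example.
    print(process_grid_shape(131072, 2048))
```

Under this assumed rule, a task count that nrowmax does not divide falls back to a smaller row count, so the grid shape can change abruptly when the number of tasks changes.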

Quantum Espresso Intro Student Cluster Competition

SC21 STUDENT CLUSTER COMPETITION: QUANTUM ESPRESSO INTRO. Ye Luo and Colleen Bertoni, Argonne National Laboratory. Jun. 28th 2021.

MANIFESTO
§ QUANTUM ESPRESSO is an integrated suite of Open-Source computer codes for electronic-structure calculations and materials modeling at the nanoscale. It is based on density-functional theory, plane waves, and pseudopotentials.
§ https://www.quantum-espresso.org/project/manifesto

SCIENCE, ALGORITHMS

QUANTUM MECHANICS: For microscopic material properties [equation images not recovered]. We solve this simplified case [equation image not recovered], which is still extremely difficult.

DENSITY FUNCTIONAL THEORY
§ Hohenberg–Kohn theorems
– Theorem 1. The external potential (and hence the total energy), is a unique functional of the electron density.
– Theorem 2. The functional that delivers the ground-state energy of the system gives the lowest energy if and only if the input density is the true ground-state density.
§ Kohn-Sham equation
– The ground-state density of the interacting system of interest can be calculated as ground-state density of an auxiliary non-interacting system in an effective potential
– Solvable with approximations, LDA

BOOMING PUBLICATIONS: Highly correlated with the availability of HPC clusters. [Figure: numbers of papers when DFT is searched as a topic. Source: Perspective on density functional theory, K. Burke, J. Chem. Phys. 136, 150901 (2012)]

MANY DFT CODES AROUND THE WORLD
• Local orbital basis codes
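The Kohn-Sham construction named in the slides above can be written out in its standard textbook form. The slide equations themselves were not recovered, so the notation below (Hartree atomic units, orbitals $\phi_i$, eigenvalues $\varepsilon_i$, density $n$, external potential $v_{\mathrm{ext}}$, exchange-correlation functional $E_{\mathrm{xc}}$) is an assumption of this note rather than the presenters' own.

```latex
% Kohn-Sham equations for the auxiliary non-interacting system
\left[ -\tfrac{1}{2}\nabla^{2} + v_{\mathrm{eff}}(\mathbf{r}) \right] \phi_i(\mathbf{r})
  = \varepsilon_i \, \phi_i(\mathbf{r}),
\qquad
n(\mathbf{r}) = \sum_{i}^{\mathrm{occ}} \left| \phi_i(\mathbf{r}) \right|^{2}

% Effective potential: external + Hartree + exchange-correlation
v_{\mathrm{eff}}(\mathbf{r}) = v_{\mathrm{ext}}(\mathbf{r})
  + \int \frac{n(\mathbf{r}')}{\left| \mathbf{r} - \mathbf{r}' \right|} \, d\mathbf{r}'
  + \frac{\delta E_{\mathrm{xc}}[n]}{\delta n(\mathbf{r})}
```

Because $v_{\mathrm{eff}}$ depends on $n$, which in turn depends on the orbitals, the equations are solved self-consistently; approximations such as LDA enter only through $E_{\mathrm{xc}}$.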

QMCPACK: Advances in the Development, Efficiency, and Application of Auxiliary Field and Real-Space Variational and Diffusion Quantum Monte Carlo

UC Berkeley Previously Published Works. Title: QMCPACK: Advances in the development, efficiency, and application of auxiliary field and real-space variational and diffusion quantum Monte Carlo. Permalink: https://escholarship.org/uc/item/0s33688t. Journal: The Journal of Chemical Physics, 152(17). ISSN: 0021-9606. Authors: Kent, PRC; Annaberdiyev, Abdulgani; Benali, Anouar; et al. Publication Date: 2020-05-01. DOI: 10.1063/5.0004860. Peer reviewed.

P. R. C. Kent,1, a) Abdulgani Annaberdiyev,2 Anouar Benali,3 M. Chandler Bennett,4 Edgar Josué Landinez Borda,5 Peter Doak,6 Hongxia Hao,7 Kenneth D. Jordan,8 Jaron T. Krogel,9 Ilkka Kylänpää,10 Joonho Lee,11 Ye Luo,3 Fionn D. Malone,5 Cody A. Melton,12 Lubos Mitas,2 Miguel A. Morales,5 Eric Neuscamman,7, 13 Fernando A. Reboredo,9 Brenda Rubenstein,14 Kayahan Saritas,15 Shiv Upadhyay,8 Guangming Wang,2 Shuai Zhang,16 and Luning Zhao17

1) Center for Nanophase Materials Sciences Division and Computational Sciences and Engineering Division, Oak Ridge National Laboratory, Oak Ridge, Tennessee 37831, USA b)
2) Department of Physics, North Carolina State University, Raleigh, North Carolina 27695-8202, USA
3) Computational Science Division, Argonne National Laboratory, 9700 S. Cass Avenue, Lemont, IL 60439, USA
4) Materials Science and Technology Division, Oak Ridge National Laboratory,

Quantum Embedding Theory for Strongly-Correlated States in Materials

Quantum Embedding Theory for Strongly-correlated States in Materials. He Ma,† Nan Sheng,† Marco Govoni,∗,‡,¶ and Giulia Galli∗,‡,†,¶. †Department of Chemistry, University of Chicago, Chicago, IL 60637, USA. ‡Pritzker School of Molecular Engineering, University of Chicago, Chicago, IL 60637, USA. ¶Materials Science Division and Center for Molecular Engineering, Argonne National Laboratory, Lemont, IL 60439, USA. E-mail: [email protected]; [email protected]. arXiv:2102.13178v1 [cond-mat.mtrl-sci] 25 Feb 2021.

Abstract: Quantum embedding theories are promising approaches to investigate strongly-correlated electronic states of active regions of large-scale molecular or condensed systems. Notable examples are spin defects in semiconductors and insulators. We present a detailed derivation of a quantum embedding theory recently introduced, which is based on the definition of effective Hamiltonians. The effect of the environment on a chosen active space is accounted for through screened Coulomb interactions evaluated using density functional theory. Importantly, the random phase approximation is not required and the evaluation of virtual electronic orbitals is circumvented with algorithms previously developed in the context of calculations based on many-body perturbation theory. In addition, we generalize the quantum embedding theory to active spaces composed of orbitals that are not eigenstates of Kohn-Sham Hamiltonians. Finally, we report results for spin defects in semiconductors.

1 Introduction: Atomistic, quantum mechanical simulations are playing an increasingly important role in designing functional materials. In the past three decades, density functional theory (DFT) has become a standard method for quantum mechanical simulations of molecules and condensed systems.

Architecture of Qbox, a Parallel, Scalable First-Principles Molecular Dynamics (FPMD) Code

IBM Journal of Research and Development. Architecture of Qbox: A scalable first-principles molecular dynamics code. F. Gygi.

We describe the architecture of Qbox, a parallel, scalable first-principles molecular dynamics (FPMD) code. Qbox is a C++/Message Passing Interface implementation of FPMD based on the plane-wave, pseudopotential method for electronic structure calculations. It is built upon well-optimized parallel numerical libraries, such as Basic Linear Algebra Communication Subprograms (BLACS) and Scalable Linear Algebra Package (ScaLAPACK), and also features an Extensible Markup Language (XML) interface built on the Apache Xerces-C library. We describe various choices made in the design of Qbox that lead to excellent scalability on large parallel computers. In particular, we discuss the case of the IBM Blue Gene/L platform on which Qbox was run using up to 65,536 nodes. Future design challenges for upcoming petascale computers are also discussed. Examples of applications of Qbox to a variety of first-principles simulations of solids, liquids, and nanostructures are briefly described.

Introduction: First-principles molecular dynamics (FPMD) is an atomistic simulation method that combines an accurate description of electronic structure with the capability to describe dynamical properties by means of molecular dynamics (MD) simulations. This approach has met with tremendous success since its introduction by Car and Parrinello in 1985 [1].

[Second column of the page, beginning mid-sentence:] consistently achieved a sustained performance of about 50% of peak performance. As a result of the need for simulations of increasing size and the availability of parallel computers, FPMD code design has been moving toward parallel implementations. In the 1990s and early 2000s, the FPMD community adapted to the change in computer architecture by

A Finite-Field Approach for GW Calculations Beyond The

A Finite-field Approach for GW Calculations Beyond the Random Phase Approximation. He Ma,†,‡ Marco Govoni,†,¶ Francois Gygi,§ and Giulia Galli∗,†,‡,¶. †Institute for Molecular Engineering, University of Chicago, Chicago, Illinois 60637, United States. ‡Department of Chemistry, University of Chicago, Chicago, Illinois 60637, United States. ¶Materials Science Division, Argonne National Laboratory, Lemont, Illinois 60439, United States. §Department of Computer Science, University of California Davis, Davis, California 95616, United States. E-mail: [email protected]. arXiv:1808.10001v2 [physics.chem-ph] 17 Dec 2018.

Abstract: We describe a finite-field approach to compute density response functions, which allows for efficient $G_0W_0$ and $G_0W_0\Gamma_0$ calculations beyond the random phase approximation. The method is easily applicable to density functional calculations performed with hybrid functionals. We present results for the electronic properties of molecules and solids and we discuss a general scheme to overcome slow convergence of quasiparticle energies obtained from $G_0W_0\Gamma_0$ calculations, as a function of the basis set used to represent the dielectric matrix.

1 Introduction: Accurate, first principles predictions of the electronic structure of molecules and materials are important goals in chemistry, condensed matter physics and materials science [1]. In the past three decades, density functional theory (DFT) [2,3] has been successfully adopted to predict numerous properties of molecules and materials [4]. In principle, any ground or excited state properties can be formulated as functionals of the ground state charge density. In practical calculations, the ground state charge density is determined by solving the Kohn-Sham (KS) equations with approximate exchange-correlation functionals, and many important excited state properties are not directly accessible from the solution of the KS equations.

Qbox Code Tutorial

Qbox tutorial. François Gygi, University of California, Davis. [email protected] http://qboxcode.org. MICCoM Computational School, Jul 17, 2017.

Qbox code: main features
• C++/MPI implementation of First-Principles Molecular Dynamics
• DFT/GGA and hybrid DFT exchange-correlation
• Plane-wave, norm-conserving pseudopotentials
• Designed for large-scale parallel platforms
• Built on optimized parallel libs: PBLAS, ScaLAPACK
• XML interface
• Installed on BlueGene/Q, Cray XC40, …, MacBook
• http://qboxcode.org

Qbox code architecture [diagram; components shown: Qbox, ScaLAPACK/PBLAS, Apache XercesC XML lib, BLAS/MASSV, BLACS, FFTW lib, D/ZGEMM lib, MPI]. F.G., IBM J. Res. Dev. 52, 137 (2008)

Downloading Qbox
• Downloading: http://qboxcode.org/download
  $ svn export http://qboxcode.org/svn/qb/tags/rel1_63_7
  $ git clone https://github.com/qboxcode/qbox-public.git
• MacOS X precompiled version: http://qboxcode.org/download/qbox-1.63.5-OSX-Yosemite.tgz
• Compiling: http://qboxcode.org/build

Qbox basic operation
• Start Qbox in interactive mode (serial version)
  $ qb
  Qbox prompt: [qbox]
• Start Qbox reading from an input script
  $ qb input.i
• Start Qbox using an input script, writing on an output file
  $ qb input.i > output.r
• output.r is an XML document

Qbox commands
• Qbox reads commands from input and executes them sequentially
• Examples
  – define the plane wave energy cutoff (Ry)
    [qbox] set ecut 35
  – define an atom at a given position (position in atomic units, Bohr)
    [qbox] atom C carbon 0.123 0.456 0.789

Qbox commands (list)
angle: compute the angle formed by three atoms
atom
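Since Qbox reads commands sequentially from an input script, such a script can be generated programmatically. The sketch below formats only the two commands shown in the tutorial excerpt (`set ecut` and `atom`); the helper name `qbox_commands` and the `Atom` tuple layout are hypothetical, and the output is not a complete Qbox input, because a real run needs further commands that the excerpt does not show.

```python
from typing import Iterable, List, Tuple

# Hypothetical layout for this sketch: (name, species, x, y, z),
# with the position given in atomic units (Bohr).
Atom = Tuple[str, str, float, float, float]


def qbox_commands(ecut_ry: float, atoms: Iterable[Atom]) -> List[str]:
    """Format the `set ecut` and `atom` commands quoted in the tutorial.

    Only these two commands are produced; anything else a full input
    script requires is outside the scope of this sketch.
    """
    lines = [f"set ecut {ecut_ry:g}"]  # plane-wave energy cutoff in Ry
    for name, species, x, y, z in atoms:
        lines.append(f"atom {name} {species} {x} {y} {z}")
    return lines


if __name__ == "__main__":
    script = qbox_commands(35, [("C", "carbon", 0.123, 0.456, 0.789)])
    print("\n".join(script))
```

Written to a file such as input.i, the lines could then be passed to Qbox in the way the tutorial describes (`qb input.i > output.r`).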

Efficient Large-Scale Real-Space Electronic Structure Calculations

EFFICIENT LARGE-SCALE REAL-SPACE ELECTRONIC STRUCTURE CALCULATIONS. A Thesis Presented to The Academic Faculty by Swarnava Ghosh, In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the School of Civil and Environmental Engineering, Georgia Institute of Technology, August 2016. Copyright © 2016 by Swarnava Ghosh.

Approved by:
Dr. Phanish Suryanarayana, Advisor, School of Civil and Environmental Engineering, Georgia Institute of Technology
Dr. Ting Zhu, Woodruff School of Mechanical Engineering, Georgia Institute of Technology
Dr. Glaucio H. Paulino, School of Civil and Environmental Engineering, Georgia Institute of Technology
Dr. John E. Pask, Physics Division, Lawrence Livermore National Laboratory
Dr. Arash Yavari, School of Civil and Environmental Engineering, Georgia Institute of Technology
Date Approved: 7 July 2016

Dedicated in the memory of Jethudadu and Dadubhai.

ACKNOWLEDGEMENTS: First and foremost, I would like to express my deepest sincere gratitude to my advisor, Professor Phanish Suryanarayana. His support, encouragement and deep insights into the subject has been tremendously valuable for me. I am deeply in debt to him for the time and effort he has dedicated to mentoring my research and helped me develop advanced technical skills. I am also grateful to Professor Glaucio H. Paulino, Professor Arash Yavari and Professor Ting Zhu for serving on my committee and providing feedback on this thesis. I acknowledge the encouragement and support of Dr. John E. Pask. His comments on my research have been invaluable. I gratefully acknowledge the financial support from National Science Foundation, Department of Energy, Office of Naval Research and the start-up funds provided by Georgia Institute of Technology over the course of my graduate studies.