First-Principles Simulations of Functional Materials for Energy Conversion

Total Pages: 16

File Type:pdf, Size:1020Kb

Recommended publications

Free and Open Source Software for Computational Chemistry Education

Free and Open Source Software for Computational Chemistry Education. Susi Lehtola*,† and Antti J. Karttunen‡. †Molecular Sciences Software Institute, Blacksburg, Virginia 24061, United States. ‡Department of Chemistry and Materials Science, Aalto University, Espoo, Finland. E-mail: [email protected].fi

Abstract: Long in the making, computational chemistry for the masses [J. Chem. Educ. 1996, 73, 104] is finally here. We point out the existence of a variety of free and open source software (FOSS) packages for computational chemistry that offer a wide range of functionality all the way from approximate semiempirical calculations with tight-binding density functional theory to sophisticated ab initio wave function methods such as coupled-cluster theory, both for molecular and for solid-state systems. By their very definition, FOSS packages allow usage for whatever purpose by anyone, meaning they can also be used in industrial applications without limitation. Also, FOSS software has no limitations to redistribution in source or binary form, allowing their easy distribution and installation by third parties. Many FOSS scientific software packages are available as part of popular Linux distributions, and other package managers such as pip and conda. Combined with the remarkable increase in the power of personal devices (which rival that of the fastest supercomputers in the world of the 1990s), a decentralized model for teaching computational chemistry is now possible, enabling students to perform reasonable modeling on their own computing devices, in the bring your own device (BYOD) scheme. In addition to the programs’ use for various applications, open access to the programs’ source code also enables comprehensive teaching strategies, as actual algorithms’ implementations can be used in teaching.

Basis Sets Running a Calculation • in Performing a Computational

Session 2: Basis Sets
• Two of the major methods (ab initio and DFT) require some understanding of basis sets and basis functions
• This session describes the essentials of basis sets: – What they are – How they are constructed – How they are used – Significance in choice
Running a Calculation
• In performing a computational chemistry calculation, the chemist has to make several decisions of input to the code: – The molecular geometry (and spin state) – The basis set used to determine the wavefunction – The properties to be calculated – The type(s) of calculations and any accompanying assumptions
• For ab initio or DFT calculations, many programs require a basis set choice to be made – The basis set is an approximate representation of the atomic orbitals (AOs) – The program then calculates molecular orbitals (MOs) using the Linear Combination of Atomic Orbitals (LCAO) approximation
Computational Chemistry Map
[Figure: the chemist decides the starting molecular geometry, the basis set (with ab initio and DFT), the type of calculation (method and assumptions), and the properties to be calculated; the computer calculates: the AOs determine the wavefunction (ψ), and LCAO combines the AOs into MOs.]
Critical Choices
• Choice of the method (and basis set) used is critical
• Which method? Molecular Mechanics, Ab initio, Semiempirical, or DFT
• Which approximation? MM2, MM3, HF, AM1, PM3, or B3LYP, etc.
• Which basis set (if applicable)? Minimal basis set; Split-valence; Polarized, Diffuse, High Angular Momentum, ...
Why is Basis Set Choice Critical?
• The
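To make the statement that a basis set is an approximate representation of the atomic orbitals concrete, here is a minimal Python sketch. It is an illustration only, not part of the slides: it assumes the commonly tabulated STO-3G exponents and contraction coefficients for the hydrogen 1s function, which are fitted to a Slater-type orbital with exponent ζ = 1.24, and the function names are made up for this example.

```python
import math

# Commonly tabulated STO-3G parameters for the hydrogen 1s function
# (assumed values for this illustration; fitted to a Slater orbital, zeta = 1.24).
EXPONENTS = (3.42525091, 0.62391373, 0.16885540)
COEFFS = (0.15432897, 0.53532814, 0.44463454)
ZETA = 1.24


def primitive_norm(alpha):
    """Normalization constant of an s-type Gaussian exp(-alpha * r**2)."""
    return (2.0 * alpha / math.pi) ** 0.75


def sto3g_1s(r):
    """Contracted STO-3G 1s basis function at distance r (bohr)."""
    return sum(c * primitive_norm(a) * math.exp(-a * r * r)
               for c, a in zip(COEFFS, EXPONENTS))


def slater_1s(r, zeta=ZETA):
    """Normalized Slater-type 1s orbital that the contraction approximates."""
    return math.sqrt(zeta ** 3 / math.pi) * math.exp(-zeta * r)


def self_overlap():
    """Analytic overlap <phi|phi> of the contracted function with itself."""
    total = 0.0
    for ci, ai in zip(COEFFS, EXPONENTS):
        for cj, aj in zip(COEFFS, EXPONENTS):
            # Overlap of two normalized s-type Gaussians on the same centre.
            total += ci * cj * (2.0 * math.sqrt(ai * aj) / (ai + aj)) ** 1.5
    return total


if __name__ == "__main__":
    print("self-overlap:", self_overlap())
    for r in (0.0, 0.5, 1.0, 2.0):
        print(r, sto3g_1s(r), slater_1s(r))
```

The three Gaussians follow the Slater function closely at intermediate distances but cannot reproduce its cusp at the nucleus, which is one reason larger basis sets (split-valence, polarized, diffuse) are listed as options.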

Quan Wan, "First Principles Simulations of Vibrational Spectra

THE UNIVERSITY OF CHICAGO. FIRST PRINCIPLES SIMULATIONS OF VIBRATIONAL SPECTRA OF AQUEOUS SYSTEMS. A DISSERTATION SUBMITTED TO THE FACULTY OF THE INSTITUTE FOR MOLECULAR ENGINEERING IN CANDIDACY FOR THE DEGREE OF DOCTOR OF PHILOSOPHY, BY QUAN WAN. CHICAGO, ILLINOIS, AUGUST 2015. Copyright © 2015 by Quan Wan. All Rights Reserved. For Jing.
TABLE OF CONTENTS
LIST OF FIGURES
LIST OF TABLES
ACKNOWLEDGMENTS
ABSTRACT
1 INTRODUCTION
2 FIRST PRINCIPLES CALCULATIONS OF GROUND STATE PROPERTIES
2.1 Density functional theory
2.1.1 The Schrödinger Equation
2.1.2 Density Functional Theory and the Kohn-Sham Ansatz
2.1.3 Approximations to the Exchange-Correlation Energy Functional
2.1.4 Planewave Pseudopotential Scheme
2.1.5 First Principles Molecular Dynamics
3 FIRST-PRINCIPLES CALCULATIONS IN THE PRESENCE OF EXTERNAL ELECTRIC FIELDS
3.1 Density functional perturbation theory for Homogeneous Electric Field Perturbations
3.1.1 Linear Response within DFPT
3.1.2 Homogeneous Electric Field Perturbations
3.1.3 Implementation of DFPT in the Qbox Code
3.2 Finite Field Methods for Homogeneous Electric Field
3.2.1 Finite Field Methods
3.2.2 Computational Details of Finite Field Implementations
3.2.3 Results for Single Water Molecules and Liquid Water
3.2.4 Conclusions
4 VIBRATIONAL SIGNATURES OF CHARGE FLUCTUATIONS IN THE HYDROGEN BOND NETWORK OF LIQUID WATER
4.1 Introduction to Vibrational Spectroscopy
4.1.1 Normal Mode and TCF Approaches
4.1.2 Infrared Spectroscopy
4.1.3 Raman Spectroscopy
4.2 Raman Spectroscopy for Liquid Water
4.3 Theoretical Methods
4.3.1 Simulation Details
4.3.2 Effective molecular polarizabilities

An Introduction to Hartree-Fock Molecular Orbital Theory

An Introduction to Hartree-Fock Molecular Orbital Theory. C. David Sherrill, School of Chemistry and Biochemistry, Georgia Institute of Technology. June 2000.

1 Introduction

Hartree-Fock theory is fundamental to much of electronic structure theory. It is the basis of molecular orbital (MO) theory, which posits that each electron's motion can be described by a single-particle function (orbital) which does not depend explicitly on the instantaneous motions of the other electrons. Many of you have probably learned about (and maybe even solved problems with) Hückel MO theory, which takes Hartree-Fock MO theory as an implicit foundation and throws away most of the terms to make it tractable for simple calculations. The ubiquity of orbital concepts in chemistry is a testimony to the predictive power and intuitive appeal of Hartree-Fock MO theory. However, it is important to remember that these orbitals are mathematical constructs which only approximate reality. Only for the hydrogen atom (or other one-electron systems, like He+) are orbitals exact eigenfunctions of the full electronic Hamiltonian. As long as we are content to consider molecules near their equilibrium geometry, Hartree-Fock theory often provides a good starting point for more elaborate theoretical methods which are better approximations to the electronic Schrödinger equation (e.g., many-body perturbation theory, single-reference configuration interaction). So...how do we calculate molecular orbitals using Hartree-Fock theory? That is the subject of these notes; we will explain Hartree-Fock theory at an introductory level.

2 What Problem Are We Solving?

It is always important to remember the context of a theory.

User Manual for the Uppsala Quantum Chemistry Package UQUANTCHEM V.35

User manual for the Uppsala Quantum Chemistry package UQUANTCHEM V.35, by Petros Souvatzis, Uppsala 2016
Contents
1 Introduction
2 Compiling the code
3 What Can be done with UQUANTCHEM
3.1 Hartree Fock Calculations
3.2 Configurational Interaction Calculations
3.3 Möller Plesset Calculations (MP2)
3.4 Density Functional Theory Calculations (DFT)
3.5 Time Dependent Density Functional Theory Calculations (TDDFT)
3.6 Quantum Monte Carlo Calculations
3.7 Born Oppenheimer Molecular Dynamics
4 Setting up a UQUANTCHEM calculation
4.1 The input files
4.1.1 The INPUTFILE-file
4.1.2 The BASISFILE-file
4.1.3 The BASISFILEAUX-file
4.1.4 The DENSMATSTARTGUESS.dat-file
4.1.5 The MOLDYNRESTART.dat-file
4.1.6 The INITVELO.dat-file
4.1.7 Running Uquantchem
4.2 Input parameters
4.2.1 CORRLEVEL
4.2.2 ADEF
4.2.3 DOTDFT
4.2.4 NSCCORR
4.2.5 SCERR
4.2.6 MIXTDDFT
4.2.7 EPROFILE
4.2.8 DOABSSPECTRUM
4.2.9 NEPERIOD
4.2.10 EFIELDMAX
4.2.11 EDIR
4.2.12 FIELDDIR
4.2.13 OMEGA
4.2.14 NCHEBGAUSS
4.2.15 RIAPPROX
4.2.16 LIMPRECALC (Only openmp-version)
4.2.17 DIAGDG
4.2.18 NLEBEDEV
4.2.19 MOLDYN
4.2.20 DAMPING
4.2.21 XLBOMD

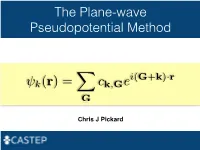

The Plane-Wave Pseudopotential Method

The Plane-wave Pseudopotential Method. Chris J Pickard.
$\psi_{\mathbf{k}}(\mathbf{r}) = \sum_{\mathbf{G}} c_{\mathbf{k},\mathbf{G}}\, e^{i(\mathbf{G}+\mathbf{k})\cdot\mathbf{r}}$
Slide titles: Electrons in a Solid; Nearly Free Electrons; Electronic Structures Methods; Empirical Pseudopotentials; Ab Initio Pseudopotentials; Ultrasoft Pseudopotentials (Vanderbilt, 1990); Projector Augmented Waves; Deriving the Pseudo Hamiltonian.
PAW vs PAW
(I) C. G. van de Walle and P. E. Bloechl 1993: PAW to calculate all electron properties from PSP calculations
(II) P. E. Bloechl 1994: The PAW electronic structure method
The two are linked, but should not be confused
References
David Vanderbilt, Soft self-consistent pseudopotentials in a generalised eigenvalue formalism, PRB 1990 41 7892
Kari Laasonen et al, Car-Parrinello molecular dynamics with Vanderbilt ultrasoft pseudopotentials, PRB 1993 47 10142
P.E. Bloechl, Projector augmented-wave method, PRB 1994 50 17953
G. Kresse and D. Joubert, From ultrasoft pseudopotentials to the projector augmented-wave method, PRB 1999 59 1758
On-the-fly Pseudopotentials
- All quantities for PAW (I) reconstruction available
- Allows automatic consistency of the potentials with functionals
- Even fewer input files required
- The code in CASTEP is a cut down (fast) pseudopotential code
- There are internal databases
- Other generation codes (Vanderbilt’s original, OPIUM, LD1) exist
It all works!
Further slide titles: Kohn-Sham Equations; Being (Self) Consistent; Eigenproblem in a Basis; Just a Few Atoms; In a Crystal; Plane-waves
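The plane-wave expansion on the title slide can be checked numerically. The following Python sketch evaluates a one-dimensional analogue, ψ_k(x) = Σ_G c_G e^{i(G+k)x} with G = 2πn/a, and confirms the Bloch property ψ_k(x + a) = e^{ika} ψ_k(x). The lattice constant, wavevector, and coefficients are hypothetical values chosen only for illustration; they do not come from the slides.

```python
import cmath
import math


def bloch_state(x, k, coeffs, a):
    """Evaluate psi_k(x) = sum_G c_G * exp(i (G + k) x) on a 1D lattice.

    coeffs maps an integer n to the coefficient of the reciprocal
    lattice vector G_n = 2 * pi * n / a.
    """
    total = 0j
    for n, c in coeffs.items():
        g = 2.0 * math.pi * n / a
        total += c * cmath.exp(1j * (g + k) * x)
    return total


# Hypothetical lattice constant, wavevector and coefficients (illustration only).
a = 2.0
k = 0.3
coeffs = {-1: 0.2 - 0.1j, 0: 0.9 + 0.0j, 1: 0.3 + 0.05j}

# Translating by one lattice constant multiplies the state by exp(i k a).
x = 0.37
lhs = bloch_state(x + a, k, coeffs, a)
rhs = cmath.exp(1j * k * a) * bloch_state(x, k, coeffs, a)
bloch_error = abs(lhs - rhs)

if __name__ == "__main__":
    print("Bloch condition violated by:", bloch_error)
```

The check works because every e^{iGa} equals 1 for a reciprocal lattice vector G, so only the phase e^{ika} survives the translation. This is why a sum over G is a natural basis for a periodic crystal.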

Introduction to DFT and the Plane-Wave Pseudopotential Method

Introduction to DFT and the plane-wave pseudopotential method. Keith Refson, STFC Rutherford Appleton Laboratory, Chilton, Didcot, OXON OX11 0QX. 23 Apr 2014. Parallel Materials Modelling Packages @ EPCC.
Outline: Introduction; Synopsis; Motivation; Some ab initio codes; Quantum-mechanical approaches; Density Functional Theory; Electronic Structure of Condensed Phases; Total-energy calculations; Basis sets; Plane-waves and Pseudopotentials; How to solve the equations.
Synopsis
A guided tour inside the “black box” of ab-initio simulation.
• The rise of quantum-mechanical simulations.
• Wavefunction-based theory
• Density-functional theory (DFT)
• Quantum theory in periodic boundaries
• Plane-wave and other basis sets
• SCF solvers
• Molecular Dynamics
Recommended Reading and Further Study
• Jorge Kohanoff, Electronic Structure Calculations for Solids and Molecules: Theory and Computational Methods, Cambridge, ISBN-13: 9780521815918
• Dominik Marx, Jürg Hutter, Ab Initio Molecular Dynamics: Basic Theory and Advanced Methods, Cambridge University Press, ISBN: 0521898633
• Richard M. Martin, Electronic Structure: Basic Theory and Practical Methods: Basic Theory and Practical Density Functional Approaches Vol 1, Cambridge University Press, ISBN: 0521782856

Qbox (Qb@Ll Branch)

Qbox (qb@ll branch). Summary Version 1.2.

Purpose of Benchmark: Qbox is a first-principles molecular dynamics code used to compute the properties of materials directly from the underlying physics equations. Density Functional Theory is used to provide a quantum-mechanical description of chemically-active electrons and nonlocal norm-conserving pseudopotentials are used to represent ions and core electrons. The method has O(N^3) computational complexity, where N is the total number of valence electrons in the system.

Characteristics of Benchmark: The computational workload of a Qbox run is split between parallel dense linear algebra, carried out by the ScaLAPACK library, and a custom 3D Fast Fourier Transform. The primary data structure, the electronic wavefunction, is distributed across a 2D MPI process grid. The shape of the process grid is defined by the nrowmax variable set in the Qbox input file, which sets the number of process rows. Parallel performance can vary significantly with process grid shape, although past experience has shown that highly rectangular process grids (e.g. 2048 x 64) give the best performance. The majority of the run time is spent in matrix multiplication, Gram-Schmidt orthogonalization and parallel FFTs. Several ScaLAPACK routines do not scale well beyond 100k+ MPI tasks, so threading can be used to run on more cores. The parallel FFT and a few other loops are threaded in Qbox with OpenMP, but most of the threading speedup comes from the use of threaded linear algebra kernels (BLAS, LAPACK, ESSL). Qbox requires a minimum of 1GB of main memory per MPI task.

Mechanics of Building Benchmark: To compile Qbox: 1.
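As a rough illustration of what the stated O(N^3) complexity implies for problem sizing, the short Python sketch below estimates how the cost grows relative to a reference run. It assumes the cost is dominated by the cubic term with an unchanged prefactor, which is a simplification; the function name and the electron counts are hypothetical and are not taken from the benchmark description.

```python
def relative_cost(n_electrons, n_reference, exponent=3):
    """Cost relative to a reference run, assuming cost ~ N**exponent.

    N is the total number of valence electrons. Prefactors and
    lower-order terms are ignored, so this is only a scaling estimate.
    """
    return (n_electrons / n_reference) ** exponent


if __name__ == "__main__":
    # Hypothetical sizes: doubling and quadrupling a 1000-electron system.
    for n in (1000, 2000, 4000):
        print(n, relative_cost(n, 1000))
```

Under this assumption, doubling the number of valence electrons multiplies the work by eight, which is why the process grid shape and threading choices described above matter for large runs.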

Powerpoint Presentation

Session 2: Basis Sets (CCCE 2008)
• Two of the major methods (ab initio and DFT) require some understanding of basis sets and basis functions
• This session describes the essentials of basis sets: – What they are – How they are constructed – How they are used – Significance in choice
Running a Calculation
• In performing ab initio and DFT computational chemistry calculations, the chemist has to make several decisions of input to the code: – The molecular geometry (and spin state) – The basis set used to determine the wavefunction – The properties to be calculated – The type(s) of calculations and any accompanying assumptions
• For ab initio or DFT calculations, many programs require a basis set choice to be made – The basis set is an approximate representation of the atomic orbitals (AOs) – The program then calculates molecular orbitals (MOs) using the Linear Combination of Atomic Orbitals (LCAO) approximation
Computational Chemistry Map
[Figure: the chemist decides the starting molecular geometry, the basis set (with ab initio and DFT), the type of calculation (method and assumptions), and the properties to be calculated; the computer calculates: the AOs determine the wavefunction (ψ), and LCAO combines the AOs into MOs.]
Critical Choices
• Choice of the method (and basis set) used is critical
• Which method? Molecular Mechanics, Ab initio, Semiempirical, or DFT
• Which approximation? MM2, MM3, HF, AM1, PM3, or B3LYP, etc.
• Which basis set (if applicable)? Minimal basis set; Split-valence

Towards Efficient Data Exchange and Sharing for Big-Data Driven Materials Science: Metadata and Data Formats

www.nature.com/npjcompumats. PERSPECTIVE, OPEN. Towards efficient data exchange and sharing for big-data driven materials science: metadata and data formats. Luca M. Ghiringhelli, Christian Carbogno, Sergey Levchenko, Fawzi Mohamed, Georg Huhs, Martin Lüders, Micael Oliveira and Matthias Scheffler.

With big-data driven materials research, the new paradigm of materials science, sharing and wide accessibility of data are becoming crucial aspects. Obviously, a prerequisite for data exchange and big-data analytics is standardization, which means using consistent and unique conventions for, e.g., units, zero base lines, and file formats. There are two main strategies to achieve this goal. One accepts the heterogeneous nature of the community, which comprises scientists from physics, chemistry, bio-physics, and materials science, by complying with the diverse ecosystem of computer codes and thus develops “converters” for the input and output files of all important codes. These converters then translate the data of each code into a standardized, code-independent format. The other strategy is to provide standardized open libraries that code developers can adopt for shaping their inputs, outputs, and restart files, directly into the same code-independent format. In this perspective paper, we present both strategies and argue that they can and should be regarded as complementary, if not even synergetic. The represented appropriate format and conventions were agreed upon by two teams, the Electronic Structure Library (ESL) of the European Center for Atomic and Molecular Computations (CECAM) and the NOvel MAterials Discovery (NOMAD) Laboratory, a European Centre of Excellence (CoE). A key element of this work is the definition of hierarchical metadata describing state-of-the-art electronic-structure calculations.

APW+Lo Basis Sets

Computer Physics Communications 179 (2008) 784–790. Journal homepage: www.elsevier.com/locate/cpc

Force calculation for orbital-dependent potentials with FP-(L)APW + lo basis sets. Fabien Tran (a,*), Jan Kuneš (b,c), Pavel Novák (c), Peter Blaha (a), Laurence D. Marks (d), Karlheinz Schwarz (a).
(a) Institute of Materials Chemistry, Vienna University of Technology, Getreidemarkt 9/165-TC, A-1060 Vienna, Austria
(b) Theoretical Physics III, Center for Electronic Correlations and Magnetism, Institute of Physics, University of Augsburg, Augsburg 86135, Germany
(c) Institute of Physics, Academy of Sciences of the Czech Republic, Cukrovarnická 10, CZ-162 53 Prague 6, Czech Republic
(d) Department of Materials Science and Engineering, Northwestern University, Evanston, IL 60208, USA

Article history: Received 8 April 2008; Received in revised form 14 May 2008; Accepted 27 June 2008; Available online 5 July 2008.
PACS: 61.50.-f; 71.15.Mb; 71.27.+a
Keywords: Computational materials science; Density functional theory; Forces; Structure optimization; Strongly correlated materials

Abstract: Within the linearized augmented plane-wave method for electronic structure calculations, a force expression was derived for such exchange–correlation energy functionals that lead to orbital-dependent potentials (e.g., LDA + U or hybrid methods). The forces were implemented into the WIEN2k code and were tested on systems containing strongly correlated d and f electrons. The results show that the expression leads to accurate atomic forces. © 2008 Elsevier B.V. All rights reserved.

1. Introduction

The majority of present day electronic structure calculations on periodic solids are performed with the Kohn–Sham (KS) formulation [1] of density functional theory [2] using the local density (LDA) or generalized gradient approximations (GGA) for the exchange–correlation energy.

Lecture 8 Gaussian Basis Sets CHEM6085: Density Functional

CHEM6085: Density Functional Theory. Lecture 8: Gaussian basis sets. C.-K. Skylaris
Solving the Kohn-Sham equations on a computer
• The SCF procedure involves solving the Kohn-Sham single-electron equations for the molecular orbitals
• Where the Kohn-Sham potential of the non-interacting electrons is given by
• We all have some experience in solving equations on paper but how do we do this with a computer?
Linear Combination of Atomic Orbitals (LCAO)
• We will express the MOs as a linear combination of atomic orbitals (LCAO)
• The strength of the LCAO approach is its general applicability: it can work on any molecule with any number of atoms
• Example: a molecule with atoms A, B and C, built from AOs on atom A, AOs on atom B and AOs on atom C
• Example: find the AOs from which the MOs of the following molecules will be built
Basis functions
• We can take the LCAO concept one step further:
• Use a larger number of AOs (e.g. a hydrogen atom can have more than one s AO, and some p and d AOs, etc.). This will achieve a more flexible representation of our MOs and therefore more accurate calculated properties according to the variation principle
• Use AOs of a particular mathematical form that simplifies the computations (but are not necessarily equal to the exact AOs of the isolated atoms)
• We call such sets of more general AOs basis functions
• Instead of having to calculate the mathematical form of the MOs (impossible on a computer) the problem
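The equations that these slides refer to did not survive extraction. For orientation, the block below gives the standard textbook form of the Kohn-Sham equations and of the LCAO expansion, written in atomic units. The notation (ψ_i for MOs, φ_μ for basis functions, C_{μi} for expansion coefficients, and the matrices F and S) is an assumption made for this sketch and may differ from the lecture's own symbols.

```latex
% Kohn-Sham single-electron equations for the molecular orbitals (atomic units)
\left[ -\tfrac{1}{2}\nabla^{2} + v_{\mathrm{KS}}(\mathbf{r}) \right] \psi_i(\mathbf{r})
  = \varepsilon_i \, \psi_i(\mathbf{r})

% Kohn-Sham potential: external + Hartree + exchange-correlation
v_{\mathrm{KS}}(\mathbf{r}) = v_{\mathrm{ext}}(\mathbf{r})
  + \int \frac{n(\mathbf{r}')}{|\mathbf{r}-\mathbf{r}'|}\, d\mathbf{r}'
  + v_{\mathrm{xc}}(\mathbf{r}),
\qquad
n(\mathbf{r}) = \sum_{i}^{\mathrm{occ}} |\psi_i(\mathbf{r})|^{2}

% LCAO: each MO is expanded in a finite set of basis functions
\psi_i(\mathbf{r}) = \sum_{\mu} C_{\mu i}\, \phi_{\mu}(\mathbf{r})

% Inserting the expansion turns the differential equation into a
% generalized matrix eigenvalue problem for the coefficients
\sum_{\nu} F_{\mu\nu} C_{\nu i} = \varepsilon_i \sum_{\nu} S_{\mu\nu} C_{\nu i},
\qquad
F_{\mu\nu} = \langle \phi_{\mu} | -\tfrac{1}{2}\nabla^{2} + v_{\mathrm{KS}} | \phi_{\nu} \rangle,
\quad
S_{\mu\nu} = \langle \phi_{\mu} | \phi_{\nu} \rangle
```

With a finite basis, the unknowns are the numbers C_{μi} instead of the functions ψ_i, which is what makes the problem tractable on a computer. Because v_KS depends on the density built from the orbitals, the matrix problem has to be solved repeatedly until self-consistency, which is the SCF procedure mentioned on the first slide.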