IOT Based Human Search and Rescue Robot Using Swarm Robotics

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

A Retrospective of the AAAI Robot Competitions

AI Magazine Volume 18 Number 1 (1997) (© AAAI) Articles A Retrospective of the AAAI Robot Competitions Pete Bonasso and Thomas Dean ■ This article is the content of an invited talk given this development. We found it helpful to by the authors at the Thirteenth National Confer- draw comparisons with developments in avia- ence on Artificial Intelligence (AAAI-96). The tion. The comparisons allow us to make use- piece begins with a short history of the competi- ful parallels with regard to aspirations, moti- tion, then discusses the technical challenges and vations, successes, and failures. the political and cultural issues associated with bringing it off every year. We also cover the sci- Dreams ence and engineering involved with the robot tasks and the educational and commercial aspects Flying has always seemed like a particularly of the competition. We finish with a discussion elegant way of getting from one place to of the community formed by the organizers, par- another. The dream for would-be aviators is ticipants, and the conference attendees. The orig- to soar like a bird; for many researchers in AI, inal talk made liberal use of video clips and slide the dream is to emulate a human. There are photographs; so, we have expanded the text and many variations on these dreams, and some added photographs to make up for the lack of resulted in several fanciful manifestations. In such media. the case of aviation, some believed that it should be possible for humans to fly by sim- here have been five years of robot com- ply strapping on wings. -

Administrators Guide to First Robotics Program Expenses

ADMINISTRATORS GUIDE TO FIRST ROBOTICS PROGRAM EXPENSES FIRST has 4 programs under the umbrella all foCused on inspiring more students to pursue Careers in sCienCe, teChnology, engineering, and mathematiCs. All of the programs use a robot Competition exCept the youngest group which is for K-3 and it is more of a make-it/engineering expo. Junior FIRST LEGO league (JrFLL) for students kindergarten through third grade is a researCh/design challenge and introduction to the engineering process. Kids go on a research journey, create a poster and LEGO model Present at Expo to reviewers and then all teams reCeive reCognition. Expos can be organized at the local level and a regional expo will be held in March. Costs are: ($50 - $300 per season) • $25 JrFLL program fees (annual dues) • $25 Expo registration fees • Teams Can use any LEGO, must have one moving part with a motor. FIRST does offer a large kit of parts for about $150 Registration: Never closes Register Here: http://www.usfirst.org/robotiCsprograms/jr.fll/registration Competitions/tournaments: Expo is held in the spring (RGV Local) FIRST Lego league (FLL) has a new game theme eaCh year, which also includes a student chosen project in whiCh they researCh and purpose a solution that fits their Community’s needs. The Challenge is revealed in early September eaCh year and the Competition season is from November – MarCh. Team size is limited to 10 students. Costs are: ($1,500 - $3,000 first season, then $1,000 - $2,500 per season to sustain program) • $225 FLL program fees (annual dues) • $300 -$420 Lego Mindstorms robot kit (re-usable each year) • $65 game field set-up kit (new each year) • $70 - $90 tournament registration fees • $500 - $1,000 for team apparel, meals, presentation materials, promotional items for team spirit, etc. -

Fresh Innovators Put Ideas Into Action in Robot Contest March 2010 Texas Instruments 3

WHITE PAPER Jean Anne Booth Director of WW Stellaris Marketing Fresh Innovators Put and Customer-Facing Engineering Sue Cozart Applications Engineer Ideas into Action in Robot Contest Introduction The annual FIRST® Robotics Challenge (FRC) inspires young men and women to explore applications of motors to make good use of mechanical Texas Instruments puts tools motion in imaginative ways, all in the name of a real-life game. In doing so, in the hands of creators of these high school students learn to work on a team toward a common goal, tomorrow’s machine control stretch their knowledge and exercise their creativity, apply critical thinking and systems. develop a strategic game plan, and move from theoretical and book learning to practical application of concepts, all the while learning valuable lessons that will help them in the workplace and in life throughout their adulthood. But as much as anything, the FIRST® programs are designed to interest children in science and math by making it fun and engaging through the use of robots. Texas Instruments is one of the FRC suppliers, lending its motor control technology to the materials and tool kit from which each team of students builds its robot. The Stellaris® microcontroller-based motor control system and software used by the students is from the same Jaguar® developer's kit used by professionals to build sophisticated motion-control systems used in industry day in and day out. The easy-to-use kits let naive high school students, as well as industry professionals, control various types of motors in complex systems without having to comprehend the underlying algorithms in use or details of the microcontroller (MCU) program code that is implementing the algorithms. -

Upgrading a Legacy Outdoors Robotic Vehicle4

Upgrading a Legacy Outdoors Robotic Vehicle4 1 1 2 Theodosis Ntegiannakis , Odysseas Mavromatakis , Savvas Piperidis , and Nikos C. Tsourveloudis3 1 Theodosis Ntegiannakis and Odysseas Mavromatakis are graduate students at the School of Production Engineering and Management, Technical University of Crete, Hellas. 2 corresponding author: Intelligent Systems and Robotics Laboratory, School of Production Engineering and Management, Technical University of Crete, 73100 Chania, Hellas, [email protected], www.robolab.tuc.gr 3 School of Production Engineering and Management, Technical University of Crete, Hellas, [email protected], www.robolab.tuc.gr Abstract. ATRV-mini was a popular, 2000's commercially available, outdoors robot. The successful upgrade procedure of a decommissioned ATRV-mini is presented in this paper. Its robust chassis construction, skid steering ability, and optional wifi connectivity were the major rea- sons for its commercial success, mainly for educational and research pur- poses. However the advances in electronics, microcontrollers and software during the last decades were not followed by the robot's manufacturer. As a result, the robot became obsolete and practically useless despite its good characteristics. The upgrade used up to date, off the shelf compo- nents and open source software tools. There was a major enhancement at robot's processing power, energy consumption, weight and autonomy time. Experimental testing proved the upgraded robot's operational in- tegrity and capability of undertaking educational, research and other typical robotic tasks. Keywords: all terrain autonomous robotic vehicle, educational robotics, ROS, robot upgrade, solar panels. 1 Introduction This work deals with the upgrading procedure of a decommissioned Real World Interface (RWI) ATRV-mini all-terrain robotic vehicle. -

CYBORG Seagulls Are Ready to Recycle

CYBORG Seagulls are ready to recycle By Edward Stratton The Daily Astorian Published: February 24, 2015 10:45AM The robotbuilding season for 25 Seaside and Astoria students on the C.Y.B.O.R.G. Seagulls robotics team ended Feb. 17. SEASIDE — Seaside High School’s studentbuilt robot SARA is in the bag and ready to recycle. The robotbuilding season for 25 Seaside and Astoria students on the CYBORG Seagulls robotics team ended Feb. 17. Now the team prepares to send 15 students to compete against 31 other teams in the divisional qualifier of the FIRST Robotics Competition starting Thursday in Oregon City. From left, Pedro Martinez, Austin JOSHUA BESSEX — THE DAILY ASTORIAN Milliren and Connor Adams, test out their SARA (Stacking Agile Robot The competition and its teams are chockfull of Assembly) robot during the CYBORG Seagulls robotic team meeting. acronyms, including Seaside’s team name The robot is designed to pick up recycling cans and cargo boxes and move them. The team will use SARA to compete in Recycle Rush, a (Creative Young Brains Observing and robotics game based on recycling Thursday through Saturday. Redefining Greatness, or CYBORG) and the Buy this photo league they compete in (For Inspiration and 1 of 6 Recognition of Science and Technology, or FIRST). For more information on the CYBORG Seagulls and the Building SARA FIRST Robotics Competition, visit www.team3673.org The CYBORG Seagulls, now in their fifth year of the competition, had from Jan. 4 to Feb. 17 to build and program SARA in their workshop. -

Acknowledgements Acknowl

2161 Acknowledgements Acknowl. B.21 Actuators for Soft Robotics F.58 Robotics in Hazardous Applications by Alin Albu-Schäffer, Antonio Bicchi by James Trevelyan, William Hamel, The authors of this chapter have used liberally of Sung-Chul Kang work done by a group of collaborators involved James Trevelyan acknowledges Surya Singh for de- in the EU projects PHRIENDS, VIACTORS, and tailed suggestions on the original draft, and would also SAPHARI. We want to particularly thank Etienne Bur- like to thank the many unnamed mine clearance experts det, Federico Carpi, Manuel Catalano, Manolo Gara- who have provided guidance and comments over many bini, Giorgio Grioli, Sami Haddadin, Dominic Lacatos, years, as well as Prof. S. Hirose, Scanjack, Way In- Can zparpucu, Florian Petit, Joshua Schultz, Nikos dustry, Japan Atomic Energy Agency, and Total Marine Tsagarakis, Bram Vanderborght, and Sebastian Wolf for Systems for providing photographs. their substantial contributions to this chapter and the William R. Hamel would like to acknowledge work behind it. the US Department of Energy’s Robotics Crosscut- ting Program and all of his colleagues at the na- C.29 Inertial Sensing, GPS and Odometry tional laboratories and universities for many years by Gregory Dudek, Michael Jenkin of dealing with remote hazardous operations, and all We would like to thank Sarah Jenkin for her help with of his collaborators at the Field Robotics Center at the figures. Carnegie Mellon University, particularly James Os- born, who were pivotal in developing ideas for future D.36 Motion for Manipulation Tasks telerobots. by James Kuffner, Jing Xiao Sungchul Kang acknowledges Changhyun Cho, We acknowledge the contribution that the authors of the Woosub Lee, Dongsuk Ryu at KIST (Korean Institute first edition made to this chapter revision, particularly for Science and Technology), Korea for their provid- Sect. -

The Personal Rover Project: the Comprehensive Design of A

Robotics and Autonomous Systems 42 (2003) 245–258 The Personal Rover Project: The comprehensive design of a domestic personal robot Emily Falcone∗, Rachel Gockley, Eric Porter, Illah Nourbakhsh The Robotics Institute, Carnegie Mellon University, Newell-Simon Hall 3111, 5000 Forbes Ave., Pittsburgh, PA 15213, USA Abstract In this paper, we summarize an approach for the dissemination of robotics technologies. In a manner analogous to the personal computer movement of the early 1980s, we propose that a productive niche for robotic technologies is as a long-term creative outlet for human expression and discovery. To this end, this paper describes our ongoing efforts to design, prototype and test a low-cost, highly competent personal rover for the domestic environment. © 2003 Elsevier Science B.V. All rights reserved. Keywords: Social robots; Educational robot; Human–robot interaction; Personal robot; Step climbing 1. Introduction duration of interaction and the roles played by human and robot participants. In cases where the human care- Robotics occupies a special place in the arena of giver provides short-term, nurturing interaction to a interactive technologies. It combines sophisticated robot, research has demonstrated the development of computation with rich sensory input in a physical effective social relationships [5,12,21]. Anthropomor- embodiment that can exhibit tangible and expressive phic robot design can help prime such interaction ex- behavior in the physical world. periments by providing immediately comprehensible In this regard, a central question that occupies our social cues for the human subjects [6,17]. research group pertains to the social niche of robotic In contrast, our interest lies in long-term human– artifacts in the company of the robotically uninitiated robot relationships, where a transient suspension of public-at-large: What is an appropriate first role for in- disbelief will prove less relevant than long-term social telligent human–robot interaction in the daily human engagement and growth. -

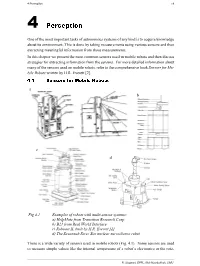

4 Perception 79

4 Perception 79 4 Perception One of the most important tasks of autonomous systems of any kind is to acquire knowledge about its environment. This is done by taking measurements using various sensors and then extracting meaningful information from those measurements. In this chapter we present the most common sensors used in mobile robots and then discuss strategies for extracting information from the sensors. For more detailed information about many of the sensors used on mobile robots, refer to the comprehensive book Sensors for Mo- bile Robots written by H.R. Everett [2]. 4.1 Sensors for Mobile Robots a b c d Fig 4.1 Examples of robots with multi-sensor systems: a) HelpMate from Transition Research Corp. b) B21 from Real World Interface c) Roboart II, built by H.R. Everett [2] d) The Savannah River Site nuclear surveillance robot There is a wide variety of sensors used in mobile robots (Fig. 4.1). Some sensors are used to measure simple values like the internal temperature of a robot’s electronics or the rota- R. Siegwart, EPFL, Illah Nourbakhsh, CMU 80 Autonomous Mobile Robots tional speed of the motors. Other, more sophisticated sensors can be used to acquire infor- mation about the robot’s environment or even to directly measure a robot’s global position. In this chapter we focus primarily on sensors used to extract information about the robot’s environment. Because a mobile robot moves around, it will frequently encounter unfore- seen environmental characteristics, and therefore such sensing is particularly critical. We begin with a functional classification of sensors. -

Pixy Hardware Interface

SSttrriikkeerr EEL 4914 Senior Design I Group 8 Cruz, Efrain Decamp, Loubens Narvaez, Luis Thomas, Brian Competitive Autonomous Air-Hockey Gaming system Table of Contents Table of Contents .................................................................................................. i 1 Executive Summary .......................................................................................... 1 2 Project Description ............................................................................................ 3 2.1 Motivation ................................................................................................................. 3 2.2 Goals and Objectives ................................................................................................. 4 2.3 Requirements and Specifications ........................................................................... 4 2.3.1 User Interface .................................................................................................... 4 2.3.2 Audio and Visual Effects .................................................................................... 6 2.3.3 Tracking System ................................................................................................. 7 2.3.4 Software .............................................................................................................. 9 2.3.5 System Hardware .............................................................................................. 10 2.3.6 Puck Return Mechanism .................................................................................. -

Botball Kit for Teaching Engineering Computing

Botball Kit for Teaching Engineering Computing David P. Miller Charles Winton School of AME Department of CIS University of Oklahoma University of N. Florida Norman, OK 73019 Jacksonville, FL 32224 Abstract Many engineering classes can benefit from hands on use of robots. The KISS Institute Botball kit has found use in many classes at a number of universities. This paper outlines how the kit is used in a few of these different classes at a couple of different universities. This paper also introduces the Collegiate Botball Challenge, and how it can be used as a class project. 1 Introduction Introductory engineering courses are used to teach general principles while introducing the students to all of the engineering disciplines. Robotics, as a multi-disciplinary application can be an ideal subject for projects that stress the different engineering fields. A major consideration in establishing a robotics course emphasizing mobile robots is the type of hands-on laboratory experience that will be incorporated into the course of instruction. Most electrical engineering schools lack the machine shops and expertise needed to create the mechanical aspects of a robot system. Most mechanical engineering schools lack the electronics labs and expertise needed for the actuation, sensing and computational aspects required to support robotics work. The situation is even more dire for most computer science schools. Computer science departments typically do not have the support culture for the kind of laboratories that are more typically associated with engineering programs. On the other hand, it is recognized that computer science students need courses which provide closer to real world experiences via representative hands-on exercises. -

A Multi-Robot Search and Retrieval System

From: AAAI Technical Report WS-02-18. Compilation copyright © 2002, AAAI (www.aaai.org). All rights reserved. MinDART : A Multi-Robot Search & Retrieval System Paul E. Rybski, Amy Larson, Heather Metcalf, Devon Skyllingstad, Harini Veeraraghavan and Maria Gini Department of Computer Science and Engineering, University of Minnesota 4-192 EE/CS Building Minneapolis, MN 55455 {rybski,larson,hmetcalf,dsky,harini,gini}@cs.umn.edu Abstract We are interested in studying how environmental and control factors affect the performance of a homogeneous multi-robot team doing a search and retrieval task. We have constructed a group of inexpensive robots called the Minnesota Distributed Autonomous Robot Team (MinDART) which use simple sen- sors and actuators to complete their tasks. We have upgraded these robots with the CMUCam, an inexpensive camera sys- tem that runs a color segmentation algorithm. The camera allows the robots to localize themselves as well as visually recognize other robots. We analyze how the team’s perfor- mance is affected by target distribution (uniform or clumped), size of the team, and whether search with explicit localization is more beneficial than random search. Introduction Cooperating teams of robots have the potential to outper- form a single robot attempting an identical task. Increasing Figure 1: The robots with their infrared targets and colored task or environmental knowledge may also improve perfor- landmarks. mance, but increased performance comes at a price. In addi- tion to the monetary concerns of building multiple robots, AAAI 2002 Mobile Robot Competition and Exhibition. Fi- the complexity of the control strategy and the processing nally, we analyze how we would expect these robots to per- overhead can outweigh the benefits. -

Srinivas Akella

Srinivas Akella Department of Computer Science University of North Carolina at Charlotte Tel: (704) 687-8573 9201 University City Boulevard Email: [email protected] Charlotte, NC 28223 http://webpages.uncc.edu/sakella Citizenship: USA RESEARCH INTERESTS: Robotics and automation; Manipulation and motion planning; Multiple robot coordination; Digital microfluidics and biotechnology; Manufacturing and assembly automation; Bioinformatics and protein folding; Data analytics. EDUCATION: 1996 CARNEGIE MELLON UNIVERSITY, Pittsburgh, PA. Ph.D. in Robotics, School of Computer Science. Thesis: Robotic Manipulation for Parts Transfer and Orienting: Mechanics, Planning, and Shape Uncertainty. Advisor: Prof. Matthew T. Mason. 1993 M.S. in Robotics, School of Computer Science. 1989 INDIAN INSTITUTE OF TECHNOLOGY, MADRAS, India. B.Tech. in Mechanical Engineering. EXPERIENCE: 2009-present UNIVERSITY OF NORTH CAROLINA AT CHARLOTTE, Charlotte, NC. Professor, Department of Computer Science (2015-present). Associate Professor, Department of Computer Science (2009-2015). 2000-2008 RENSSELAER POLYTECHNIC INSTITUTE, Troy, NY. Assistant Professor, Department of Computer Science. Senior Research Scientist, Department of Computer Science, and Center for Automation Technologies & Systems. 1996-1999 UNIVERSITY OF ILLINOIS AT URBANA-CHAMPAIGN, Urbana, IL. Beckman Fellow, Beckman Institute for Advanced Science and Technology. 1989-1996 CARNEGIE MELLON UNIVERSITY, Pittsburgh, PA. Research Assistant, The Robotics Institute. Summer 1992 ELECTROTECHNICAL LABORATORY, MITI, Tsukuba, Japan. Summer Intern, Intelligent Systems Division. AWARDS: 2018 CCI Excellence in Graduate Teaching Award, College of Computing and Informatics, UNC Charlotte. 2007 Advisor, Best Student Paper Awardee, Robotics Science and Systems Conference. 2005 Rensselaer Faculty Early Research Career Honoree, RPI. 2001 NSF CAREER Award, National Science Foundation. 1999 Finalist, Best Paper Award, IEEE International Conference on Robotics and Automation.