Seeing Music? What Musicians Need to Know About Vision

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Excesss Karaoke Master by Artist

XS Master by ARTIST Artist Song Title Artist Song Title (hed) Planet Earth Bartender TOOTIMETOOTIMETOOTIM ? & The Mysterians 96 Tears E 10 Years Beautiful UGH! Wasteland 1999 Man United Squad Lift It High (All About 10,000 Maniacs Candy Everybody Wants Belief) More Than This 2 Chainz Bigger Than You (feat. Drake & Quavo) [clean] Trouble Me I'm Different 100 Proof Aged In Soul Somebody's Been Sleeping I'm Different (explicit) 10cc Donna 2 Chainz & Chris Brown Countdown Dreadlock Holiday 2 Chainz & Kendrick Fuckin' Problems I'm Mandy Fly Me Lamar I'm Not In Love 2 Chainz & Pharrell Feds Watching (explicit) Rubber Bullets 2 Chainz feat Drake No Lie (explicit) Things We Do For Love, 2 Chainz feat Kanye West Birthday Song (explicit) The 2 Evisa Oh La La La Wall Street Shuffle 2 Live Crew Do Wah Diddy Diddy 112 Dance With Me Me So Horny It's Over Now We Want Some Pussy Peaches & Cream 2 Pac California Love U Already Know Changes 112 feat Mase Puff Daddy Only You & Notorious B.I.G. Dear Mama 12 Gauge Dunkie Butt I Get Around 12 Stones We Are One Thugz Mansion 1910 Fruitgum Co. Simon Says Until The End Of Time 1975, The Chocolate 2 Pistols & Ray J You Know Me City, The 2 Pistols & T-Pain & Tay She Got It Dizm Girls (clean) 2 Unlimited No Limits If You're Too Shy (Let Me Know) 20 Fingers Short Dick Man If You're Too Shy (Let Me 21 Savage & Offset &Metro Ghostface Killers Know) Boomin & Travis Scott It's Not Living (If It's Not 21st Century Girls 21st Century Girls With You 2am Club Too Fucked Up To Call It's Not Living (If It's Not 2AM Club Not -

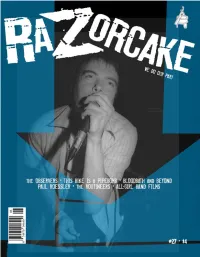

Read Razorcake Issue #27 As A

It’s never been easy. On average, I put sixty to seventy hours a week into Razorcake. Basically, our crew does something that’s not supposed to happen. Our budget is tiny. We operate out of a small apartment with half of the front room and a bedroom converted into a full-time office. We all work our asses off. In the past ten years, I’ve learned how to fix computers, how to set up networks, how to troubleshoot software. Not because I want to, but because we don’t have the money to hire anybody to do it for us. The stinky underbelly of DIY is finding out that you’ve got to master mundane and difficult things when you least want to. Co-founder Sean Carswell and I went on a weeklong tour with our ...

Yesterday, some of us had helped our friend Chris move, and before we moved his stereo, we played the Rhythm Chicken’s new 7”. In the pauses between furious Chicken overtures, a guy yelled, “Hooray!” We had adopted our battle call. That evening, a couple bottles of whiskey later, after great sets by Giant Haystacks and the Abi Yoyos, after one of our crew projectile vomited with deft precision and another crewmember suffered a potentially broken collarbone, This Is My Fist! took to the six-inch stage at The Poison Apple in L.A. We yelled and danced so much that stiff people with sourpusses on their faces slunk to the back. We incited underaged hipster dancing. -

The Ithacan, 1999-11-04

Ithaca College Digital Commons @ IC The Ithacan, 1999-2000 The Ithacan: 1990/91 to 1999/2000 11-4-1999 The Ithacan, 1999-11-04 Ithaca College Follow this and additional works at: http://digitalcommons.ithaca.edu/ithacan_1999-2000 Recommended Citation Ithaca College, "The Ithacan, 1999-11-04" (1999). The Ithacan, 1999-2000. 11. http://digitalcommons.ithaca.edu/ithacan_1999-2000/11 This Newspaper is brought to you for free and open access by The Ithacan: 1990/91 to 1999/2000 at Digital Commons @ IC. It has been accepted for inclusion in The Ithacan, 1999-2000 by an authorized administrator of Digital Commons @ IC. Sports 19 Go for the Jug The biggest game in Division III football reaches its 40th year. VOL 67, No. 11 THURSDAY, NOVEMBER 4, 1999 24 PAGES, FREE Reported assault unnerves campus Unknown assailant ... as search turns up few leads BY JASON SUBIK Staff Writer Campus Safety's continuing investigation of last week's reported early morning assault on an Ithaca College female freshman has turned up few leads. ...patched to assist her. The Campus Safety investigation ... declined to comment as to whether there are any suspects at this time. The perpetrator is still considered to be at large. Campus Safety does not know if he is armed. -

Formerly the Record Plant

Formerly the Record Plant MISSION Sausalito Tech Partners will restore the historic Record Plant Studios in Sausalito to its former glory, melding new digital innovations and traditional analog tech for recording and live streamed performances. Our Mission - To Create, Share and Celebrate Life-Changing Content A TREASURED ASSET The new Bridgeway Studios – at the former Record Plant studios - will also feature an Experiential Center to preserve and celebrate the building’s historic contributions to popular music as well as several updated spaces for event rentals. The updated venue will become a treasured part of the community through outreach and engagement while music fans from around the world will flock to this refurbished mecca. A CALL FOR INVESTORS AND PARTNERS We are seeking investors and partners to join the team as we preserve, refurbish and resurrect the former Record Plant building. With the underlying tangible asset value, we are seeking investors to share in the tremendous upside of the project’s value creation. We also seek corporate, agency and content partners to leverage this one-of-a-kind space. A NEW ERA FOR “THE PLANT” @ BRIDGEWAY STUDIOS Historic Working Studios, both new and historic, will provide a full-service studio experience to artists, producers as well as well-known video streaming platforms. An “Experience Center” will provide a once-in-a-lifetime opportunity for fans to stand within the walls of the historic “Plant” and hear the magic of music recorded in the studios. Social Media and Corporate Partnerships will make the new venue accessible to all, taking the Record Plant history and recorded content to music fans the world over. -

Primus Brown Album Mp3, Flac, Wma

Primus Brown Album mp3, flac, wma

Genre: Rock
Album: Brown Album
Country: Europe
Released: 1997
Style: Alternative Rock
MP3 version RAR size: 1633 mb
FLAC version RAR size: 1132 mb
WMA version RAR size: 1727 mb
Rating: 4.3
Votes: 497
Other Formats: MPC FLAC XM MP2 AIFF MPC WMA

Tracklist

1. The Return Of Sathington Willoughby 5:04
2. Fisticuffs 4:24
3. Golden Boy 3:05
4. Over The Falls 2:41
5. Shake Hands With Beef 4:02
6. Camelback Cinema 3:59
7. Hats Off 1:57
8. Puddin' Taine 3:37
9. Bob's Party Time Lounge 4:43
10. Duchess And The Proverbial Mind Spread 3:29
11. Restin' Bones 4:29
12. Coddingtown 2:52
13. Kalamazoo 3:30
14. The Chastising Of Renegade 5:02
15. Arnie 3:54

Companies, etc.

Copyright (c) – Interscope Records
Phonographic Copyright (p) – Interscope Records
Recorded At – Rancho Relaxo
Mixed At – Rancho Relaxo
Pressed By – Disctronics S

Credits

A&R [A&R Direction] – Tom Whalley
Bass, Vocals – Les Claypool
Coordinator [Project Coordinator] – Jill "Galaxy Queen" Rose*
Design – Prawn Song Designs
Drums – Brain
Engineer – Claypool*
Engineer [Studio Assistant] – Tim "Soya" Solyan*
Guitar – Larry LaLonde
Lyrics By – Claypool*
Photography By – Miura Smith
Producer, Music By – Primus

Notes

Recorded and mixed at Rancho Relaxo 12/96-4/97. Packaged in an opaque brown jewel case inside a cardboard slipcase. Made in the EU.

Barcode and Other Identifiers

Barcode (As printed): 6 06949 01262 5
Barcode (Scanned): 606949012625
Label Code: LC 6406
Other (Distribution Code): F: UN540
Rights Society: BIEM/GEMA
Matrix / Runout (Mirrored -

Brisbane Driving School

itugb and play, feast ooltit:frmt» of the arth, iJie dtUffi^U of jay body, X. iiiakc mtt»ic and lovtp - Cor atl act« of ptcannre are my rhuuU. •|iiii l'»m that vhkh you lind itt ttVie tuUiVvtitnl ot dcMrt:. ^3>TSfH:- Ca«t \\\t tnaf^tc CIT«\C, revel \n lhe sorcery Virl»icl% d'mpclft a\l power. But commit no sacr'tficcK. '> Repudiate harmfulnc<(s, Exploitation and Mlmiiihtifr. Raibcjir, venerate ail cr<'aturett •J^itf rcMpeci thum a« tiiuttl buj, different to you. itters 1 atributors ueering the Bible 4 Cover by Steve oJ Granville Street. We all love you. oug Ogiivy on wisdom 6 Other excellent -I low Shit up 7 kids; David Dargy, iris in Cyberspace 8 Shannon Owen, \ Martin Bush, Nicole Hills, Di iustralia's Foreign Policy 10 wk Cassidy, Tim Mansfield, Amy Lee, Doug Ogiivy, Ivis Christ 12 l^^^w ^^ '^u^^K Stefan Armbruster, \ Damien Cassidy, "^ • ardi Gras 13 Kate Williamson, Paul Bruynesteyn, oth Africa 14 1 ' ';^k Jacinta Toomey, Jane Curtis, Lynette Toomey, he Tardy Vengence of the People 16 Lindsay Colborne, Sharon Hennesy, ;ummer Festivals 18 If Mark Mclnnes, David Marr, Chris he Interview 19 Green, Joy Brown, Michael Carden, Toby Borgeesi, oma 20 < . '•.?•^ Ben Ross, Maxine, Rebecca Gordon, usic 22 Marcus Salisbury, 1 Andrew Dickinson, I Maya Stuart-Fox, onald and me 24 i %; w-^. 1 Maleha Newaz, . ' •. , *m Paul Woods, Brett Proeddie, Justin /lind Mechanic 26 /y ' ' reat Moments Brisbane Architecture 7 i • . • • •! • . .'',. • '• J ; • i . , , • what f^ereckon ubble Boy 30 As living organisms we all require certain things to ireaming the Net 32 ' survive. -

Songs by Artist

TOTALLY TWISTED KARAOKE Songs by Artist 37 SONGS ADDED IN SEP 2021 Title Title (HED) PLANET EARTH 2 CHAINZ, DRAKE & QUAVO (DUET) BARTENDER BIGGER THAN YOU (EXPLICIT) 10 YEARS 2 CHAINZ, KENDRICK LAMAR, A$AP, ROCKY & BEAUTIFUL DRAKE THROUGH THE IRIS FUCKIN PROBLEMS (EXPLICIT) WASTELAND 2 EVISA 10,000 MANIACS OH LA LA LA BECAUSE THE NIGHT 2 LIVE CREW CANDY EVERYBODY WANTS ME SO HORNY LIKE THE WEATHER WE WANT SOME PUSSY MORE THAN THIS 2 PAC THESE ARE THE DAYS CALIFORNIA LOVE (ORIGINAL VERSION) TROUBLE ME CHANGES 10CC DEAR MAMA DREADLOCK HOLIDAY HOW DO U WANT IT I'M NOT IN LOVE I GET AROUND RUBBER BULLETS SO MANY TEARS THINGS WE DO FOR LOVE, THE UNTIL THE END OF TIME (RADIO VERSION) WALL STREET SHUFFLE 2 PAC & ELTON JOHN 112 GHETTO GOSPEL DANCE WITH ME (RADIO VERSION) 2 PAC & EMINEM PEACHES AND CREAM ONE DAY AT A TIME PEACHES AND CREAM (RADIO VERSION) 2 PAC & ERIC WILLIAMS (DUET) 112 & LUDACRIS DO FOR LOVE HOT & WET 2 PAC, DR DRE & ROGER TROUTMAN (DUET) 12 GAUGE CALIFORNIA LOVE DUNKIE BUTT CALIFORNIA LOVE (REMIX) 12 STONES 2 PISTOLS & RAY J CRASH YOU KNOW ME FAR AWAY 2 UNLIMITED WAY I FEEL NO LIMITS WE ARE ONE 20 FINGERS 1910 FRUITGUM CO SHORT 1, 2, 3 RED LIGHT 21 SAVAGE, OFFSET, METRO BOOMIN & TRAVIS SIMON SAYS SCOTT (DUET) 1975, THE GHOSTFACE KILLERS (EXPLICIT) SOUND, THE 21ST CENTURY GIRLS TOOTIMETOOTIMETOOTIME 21ST CENTURY GIRLS 1999 MAN UNITED SQUAD 220 KID X BILLEN TED REMIX LIFT IT HIGH (ALL ABOUT BELIEF) WELLERMAN (SEA SHANTY) 2 24KGOLDN & IANN DIOR (DUET) WHERE MY GIRLS AT MOOD (EXPLICIT) 2 BROTHERS ON 4TH 2AM CLUB COME TAKE MY HAND -

Primus the Desaturating Seven Mp3, Flac, Wma

Primus The Desaturating Seven mp3, flac, wma

Genre: Rock
Album: The Desaturating Seven
Country: Russia
Released: 2017
Style: Alternative Rock, Funk Metal, Prog Rock
MP3 version RAR size: 1743 mb
FLAC version RAR size: 1407 mb
WMA version RAR size: 1596 mb
Rating: 4.3
Votes: 241
Other Formats: MOD MP2 ASF MIDI XM AA MMF

Tracklist

1. The Valley 4:43
2. The Seven 3:08
3. The Trek 7:50
4. The Scheme 2:50
5. The Dream 6:36
6. The Storm 7:47
7. The Ends? 1:44

Companies, etc.

Phonographic Copyright (p) – Prawn Song Records
Copyright (c) – Prawn Song Records

Notes

A record of treachery and virtue. 16-page art booklet included. Packaged in a jewel case with a transparent tray.

Barcode and Other Identifiers

Barcode (Text): 8 80882 30572 7
Barcode (String): 880882305727

Other versions

| Category | Artist | Title (Format) | Label | Category | Country | Year |
|---|---|---|---|---|---|---|
| ATO 0404, 0882305710 | Primus | The Desaturating Seven (LP, Album, Rai) | ATO Records, Prawn Song | ATO 0404, 0882305710 | US | 2017 |
| 1-18-17 447 | Primus | The Desaturating Seven (CDr, Album, Promo) | ATO Records | 1-18-17 447 | US | 2017 |
| ATO 0404, 0882305710 | Primus | The Desaturating Seven (CD-ROM, Album) | ATO Records, Prawn Song | ATO 0404, 0882305710 | US | 2017 |
| ATO0404LP | Primus | The Desaturating Seven (LP, Album, Rai) | ATO Records | ATO0404LP | Europe | 2017 |
| HSE-4986 | Primus | The Desaturating Seven (CD, Album) | ATO Records, Hostess Entertainment Unlimited | HSE-4986 | Japan | 2017 |

Related Music albums to The Desaturating Seven by Primus

Rock Primus - Tommy The Cat
Rock Primus - Primus & The Chocolate Factory With The Fungi Ensemble
Rock Primus - Live At Bloom '90
Electronic Primus Electronica - Number
Rock Primus - HallucinoGeneticsTour 05.30.04
Rock Primus - Does Primus Really Fry?
Electronic DJ Prawn - Unorthodox Behaviour
Rock Primus - Rhinoplasty
Rock Primus - The Tabernacle Atlanta, GA
Rock Primus - Pork Soda. -

Cult of Death: Holed Up in a Texas ..., Koresh and His Followers

Vol. XXII, Issue 3, April 7, 1993 Amendment II: Cult of Death: Holed up in a Texas ..., Koresh and his followers fervently believe he is Christ, and till death do them part THE OTHER SIDE "You can never really see what they see in this country until you have seen where they have been. Then you know why they are here, searching for a new life." But Ms. Payne, do you really, truly know why? I don't think so. Last month's issue of The Other Side had an article that talked about the Latino immigrant workers in Southern California and their experiences here in the United States. The first mistake was to refer to them as "illegals," "beaners," and "wetbacks." I do not think that this story was written with malicious intent to hurt or offend anyone. It was an honest attempt of self-expression. I can see her honesty, but also her ignorance. You say that "they deserve a medal." Yes they do, but please do not belittle their situation. More than a medal, they deserve a good job with fair wages and proper working facilities. What good is a medal if one cannot afford a mantle to put it on.
And maybe you thought you had it bad at Burger King for one year, but that is all many of us have. -

An Evening with PRIMUS

An Evening with PRIMUS Two new exclusive concerts in Italy: March 23 in Pordenone is the only stop in the entire Northeast. After returning to the headlines in 2010 with the reunion of their most celebrated lineup, with leader Les Claypool on vocals and bass, Larry LaLonde on guitar and Jay Lane on drums, the legendary American trio PRIMUS, one of the most important acts the alternative metal scene has ever produced, has resumed activity at full speed and released a new record a few weeks ago. PRIMUS IN ITALY “Green Naugahyde” is the title of the new PRIMUS project, the seventh studio album for Les Claypool and company, which will be taken to the main European countries in the coming months. The show, titled “An Evening with PRIMUS” and designed by the band behind “Pork Soda”, will be different every night and is split into two thrilling parts for a total of three hours of performance. After last summer's sold-out tour, PRIMUS will return to Italy next spring with two exclusive concerts: Friday, March 23 in Pordenone and Saturday, March 24 in Brescia. The first Italian show (the opening date of the European tour), to be held at the Palasport Forum and organized by Azalea Promotion in collaboration with the Municipality of Pordenone and Barley Arts, will be the only stop in the entire Northeast of the Italian peninsula and, above all, the only one for neighboring Austria and Slovenia, where the band has always had a solid fan base. “Frank Zappa meets metal”: that is how the American trio was described at the start of its career, in the late 1980s. -

The Jungle: Untamed and Uncut What Really Goes on at Stony Brook Welcome to Stony Brook University

Vol. 2 Issue 1 September 2005 Monthly The Jungle: Untamed and Uncut What Really Goes on at Stony Brook Welcome to Stony Brook University. Don’t get the wrong impression from the headlines! Things at “The Brook,” as many of us affectionately call it, aren’t that bad, even if there is a website devoted to why it sucks (www.stonybrooksucks.com). Rather, this issue addresses and explores some of the problems students have at the University from a unique perspective and offers some tips on overcoming them. Check out the special section, “Welcome to The Brook,” for some tips and tricks for freshmen and transfers as well as some eye-opening information that will even surprise students who have been attending the University for years. From our analysis of the new mandatory health insurance policy to suggestions on how to make new friends on campus, this issue has it all. Enjoy it and don’t forget to send us some feedback (www.stonybrookpatriot.com), whether you love us, hate us, or don’t care. Photo Courtesy of Erik Berte Investigation: Student Activity Fee Breakdown By Erica Smith There is no doubt that clubs are a meaningful part of the college experience and, for many of us, play a significant role. However, after looking at this year’s budget, as well as those in the past, I ... $40,000. The highest recipients are Benedict and the apartments. To be fair to those who do not live on campus, the Commuters Student Association is typically granted roughly the same amount, although this year they will receive significantly more than the dormitories with $46,210. -

September 1993

FEATURES TIM "HERB" ALEXANDER With their new album, Pork Soda, Primus has carved out a stylized and twisted place on the pop music landscape. And in the drum world, Tim Alexander's playing has declared its arrival in an equally against-the-grain fashion. • Matt Peiken 20 CLAYTON CAMERON The art of brush playing got a well-needed shot in the arm when Clayton Cameron got a hold of it. Now, after several years with Sammy Davis, Jr. and a highly regarded video on the subject, Cameron has taken his magic to Tony Bennett's drum chair. Oh, and he plays sticks, too. • William F. Miller 26 TOURING COUNTRY DRUMMERS Touring with country music's big stars is a different ball of wax from making the records. Get the lowdown on the country high road from Garth Brooks's Mike Palmer, Ricky Skaggs' Keith Edwards, Vince Gill's Martin Parker, and Billy Ray Cyrus's Gregg Fletcher. • Robyn Flans 30 VOLUME 17, NUMBER 9 COVER PHOTO BY ANDREW MACNAUGHTON COLUMNS EDUCATION NEWS EQUIPMENT 60 BASICS 8 UPDATE Miking Your Drums Fred Young, BY JOHN POKRANDT Prince's Michael B., John Tempesta of Exodus, and the 77 STRICTLY Sundogs' Jimmy TECHNIQUE Hobson, plus News Grouping Control Studies 114 INDUSTRY BY RON SPAGNARDI HAPPENINGS 78 CONCEPTS Faking It 34 ELECTRONIC BY GARY GRISWOLD DEPARTMENTS REVIEW 86 ROCK 'N' New KAT Products JAZZ CLINIC 4 EDITOR'S BY ED URIBE Your Left Foot OVERVIEW NEW AND 38 BY WILLIAM F. MILLER NOTABLE 102 ROCK CHARTS 6 READERS' Lars Ulrich: "Nothing PLATFORM 108 SHOP TALK Else Matters" Drumshells: TRANSCRIBED BY 12 ASK A PRO Where It All Starts