UvA-DARE (Digital Academic Repository)

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Mitigating the Risks of Whistleblowing an Approach Using Distributed System Technologies

Mitigating the Risks of Whistleblowing: An Approach Using Distributed System Technologies. Ali Habbabeh, Petra Maria Asprion, and Bettina Schneider, University of Applied Sciences Northwestern Switzerland, Basel, Switzerland. Abstract. Whistleblowing is an effective tool to fight corruption and expose wrongdoing in governments and corporations. Insiders who are willing to report misconduct, called whistleblowers, often seek to reach a recipient who can disseminate the relevant information to the public. However, whistleblowers often face many challenges to protect themselves from retaliation when using the existing (centralized) whistleblowing platforms. This study discusses several associated risks of whistleblowing when communicating with third parties using webforms of newspapers, trusted organizations like WikiLeaks, or whistleblowing software like GlobaLeaks or SecureDrop. Then, this study proposes an outlook to a solution using decentralized systems to mitigate these risks using Blockchain, Smart Contracts, Distributed File Synchronization and Sharing (DFSS), and Distributed Domain Name Systems (DDNS). Keywords: Whistleblowing, Blockchain, Smart Contracts. 1 Introduction. By all indications, the topic of whistleblowing has been gaining extensive media attention since the financial crisis in 2008, which ignited a crackdown on the corruption of institutions [1]. However, some whistleblowers have also become discouraged by the negative association with the term [2], although numerous studies show that whistleblowers have often revealed misconduct of public interest [3]. Therefore, researchers like [3] argue that we - the community of citizens - must protect whistleblowers. Additionally, some researchers, such as [1], claim that, although not perfect, we should reward whistleblowers financially to incentivize them to speak out to fight corruption [1]. -

Help I Am an Investigative Journalist in 2017

Help! I am an Investigative Journalist in 2017 Whistleblowers Australia Annual Conference 2016-11-20 About me • Information security professional Gabor Szathmari • Privacy, free speech and open gov’t advocate @gszathmari • CryptoParty organiser • CryptoAUSTRALIA founder (coming soon) Agenda Investigative journalism: • Why should we care? • Threats and abuses • Surveillance techniques • What can the reporters do? Why should we care about investigative journalism? Investigative journalism • Cornerstone of democracy • Social control over gov’t and private sector • When the formal channels fail to address the problem • Relies on information sources Manning Snowden Tyler Shultz Paul Stevenson Benjamin Koh Threats and abuses against investigative journalism Threats • Lack of data (opaque gov’t) • Journalists are imprisoned for doing their jobs • Sources are afraid to speak out Journalists’ Privilege • Evidence Amendment (Journalists’ Privilege) Act 2011 • Telecommunications (Interception and Access) Amendment (Data Retention) Act 2015 Recent Abuses • The Guardian: Federal police admit seeking access to reporter's metadata without warrant • The Intercept: Secret Rules Make It Pretty Easy for the FBI to Spy on Journalists • CBC News: La Presse columnist says he was put under police surveillance as part of 'attempt to intimidate' Surveillance techniques Brief History of Interception First cases: • Postal Service - Black Chambers 1700s • Telegraph - American Civil War 1860s • Telephone - 1890s • Short wave radio - 1940s/50s • Satellite (international calls) - ECHELON 1970s Recent Programs (2000s - ) • Text messages, mobile phone - DISHFIRE, DCSNET, Stingray • Internet - Carnivore, NarusInsight, Tempora • Services (e.g. Google, Yahoo) - PRISM, MUSCULAR • Metadata: MYSTIC, ADVISE, FAIRVIEW, STORMBREW • Data visualisation: XKEYSCORE, BOUNDLESSINFORMANT • End user device exploitation: HAVOK, FOXACID So how can I defend myself?
Data Protection 101 •Encrypt sensitive data* in transit •Encrypt sensitive data* at rest * Documents, text messages, voice calls etc. -

The Tor Dark Net

PAPER SERIES: NO. 20, SEPTEMBER 2015. The Tor Dark Net. Gareth Owen and Nick Savage. Copyright © 2015 by Gareth Owen and Nick Savage. Published by the Centre for International Governance Innovation and the Royal Institute of International Affairs. The opinions expressed in this publication are those of the authors and do not necessarily reflect the views of the Centre for International Governance Innovation or its Board of Directors. This work is licensed under a Creative Commons Attribution - Non-commercial - No Derivatives License. To view this license, visit (www.creativecommons.org/licenses/by-nc-nd/3.0/). For re-use or distribution, please include this copyright notice. 67 Erb Street West, Waterloo, Ontario N2L 6C2, Canada; tel +1 519 885 2444, fax +1 519 885 5450; www.cigionline.org. 10 St James’s Square, London, England SW1Y 4LE, United Kingdom; tel +44 (0)20 7957 5700, fax +44 (0)20 7957 5710; www.chathamhouse.org. TABLE OF CONTENTS: About the Global Commission on Internet Governance; About the Authors; Executive Summary; Introduction; Hidden Services; Related Work; Study of HSes; Content and Popularity Analysis; Deanonymization of Tor Users and HSes; Blocking of Tor; HS Blocking; Conclusion; Works Cited; About CIGI; About Chatham House; CIGI Masthead. ABOUT THE GLOBAL COMMISSION ON INTERNET GOVERNANCE. ABOUT THE AUTHORS: Gareth Owen is a senior lecturer in the School of Computing at the University of Portsmouth. -

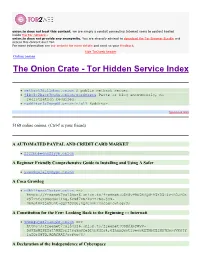

The Onion Crate - Tor Hidden Service Index Protected Onions Add New

onion.to does not host this content; we are simply a conduit connecting Internet users to content hosted inside the Tor network.. onion.to does not provide any anonymity. You are strongly advised to download the Tor Browser Bundle and access this content over Tor. For more information see our website for more details and send us your feedback. hide Tor2web header Online onions The Onion Crate - Tor Hidden Service Index Protected onions Add new nethack3dzllmbmo.onion A public nethack server. j4ko5c2kacr3pu6x.onion/wordpress Paste or blog anonymously, no registration required. redditor3a2spgd6.onion/r/all Redditor. Sponsored links 5168 online onions. (Ctrl-f is your friend) A AUTOMATED PAYPAL AND CREDIT CARD MARKET 2222bbbeonn2zyyb.onion A Beginner Friendly Comprehensive Guide to Installing and Using A Safer yuxv6qujajqvmypv.onion A Coca Growlog rdkhliwzee2hetev.onion ==> https://freenet7cul5qsz6.onion.to/freenet:USK@yP9U5NBQd~h5X55i4vjB0JFOX P97TAtJTOSgquP11Ag,6cN87XSAkuYzFSq-jyN- 3bmJlMPjje5uAt~gQz7SOsU,AQACAAE/cocagrowlog/3/ A Constitution for the Few: Looking Back to the Beginning ::: Internati 5hmkgujuz24lnq2z.onion ==> https://freenet7cul5qsz6.onion.to/freenet:USK@kpFWyV- 5d9ZmWZPEIatjWHEsrftyq5m0fe5IybK3fg4,6IhxxQwot1yeowkHTNbGZiNz7HpsqVKOjY 1aZQrH8TQ,AQACAAE/acftw/0/ A Declaration of the Independence of Cyberspace ufbvplpvnr3tzakk.onion ==> https://freenet7cul5qsz6.onion.to/freenet:CHK@9NuTb9oavt6KdyrF7~lG1J3CS g8KVez0hggrfmPA0Cw,WJ~w18hKJlkdsgM~Q2LW5wDX8LgKo3U8iqnSnCAzGG0,AAIC-- 8/Declaration-Final%5b1%5d.html A Dumps Market -

Anonymous Javascript Cryptography and Cover Traffic in Whistleblowing Applications

DEGREE PROJECT IN COMPUTER SCIENCE AND ENGINEERING, SECOND CYCLE, 30 CREDITS. STOCKHOLM, SWEDEN 2016. Anonymous Javascript Cryptography and Cover Traffic in Whistleblowing Applications. JOAKIM HJALMARSSON UDDHOLM. KTH ROYAL INSTITUTE OF TECHNOLOGY, SCHOOL OF COMPUTER SCIENCE AND COMMUNICATION. Master’s Thesis at NADA. Supervisor: Sonja Buchegger, Daniel Bosk. Examiner: Johan Håstad. Abstract. In recent years, whistleblowing has led to big headlines around the world. This thesis looks at whistleblower systems, which are systems specifically created for whistleblowers to submit tips anonymously. The problem is how to engineer such a system so as to maximize the anonymity of the whistleblower while remaining usable. The thesis evaluates existing implementations for the whistleblowing problem. Eleven Swedish newspapers are evaluated for potential threats against their whistleblowing service. I suggest a new system that tries to improve on existing systems. New features include the introduction of JavaScript cryptography to lessen the reliance on trust in a hosted server, and the use of anonymous encryption and cover traffic to partially anonymize the recipient, size and timing metadata of submissions sent by the whistleblowers. I explore the implementations of these features and the viability of addressing threats against JavaScript integrity by use of cover traffic. The results show that JavaScript encrypted submissions are viable. The tamper detection system can provide some integrity for the JavaScript client. Cover traffic for the initial submissions to the journalists was also shown to be feasible. However, cover traffic for replies sent back and forth between whistleblower and journalist consumed too much data transfer and was too slow to be useful. -

Weaving the Dark Web: Legitimacy on Freenet, Tor, and I2P (Information

The Information Society Series Laura DeNardis and Michael Zimmer, Series Editors Interfaces on Trial 2.0, Jonathan Band and Masanobu Katoh Opening Standards: The Global Politics of Interoperability, Laura DeNardis, editor The Reputation Society: How Online Opinions Are Reshaping the Offline World, Hassan Masum and Mark Tovey, editors The Digital Rights Movement: The Role of Technology in Subverting Digital Copyright, Hector Postigo Technologies of Choice? ICTs, Development, and the Capabilities Approach, Dorothea Kleine Pirate Politics: The New Information Policy Contests, Patrick Burkart After Access: The Mobile Internet and Inclusion in the Developing World, Jonathan Donner The World Made Meme: Public Conversations and Participatory Media, Ryan Milner The End of Ownership: Personal Property in the Digital Economy, Aaron Perzanowski and Jason Schultz Digital Countercultures and the Struggle for Community, Jessica Lingel Protecting Children Online? Cyberbullying Policies of Social Media Companies, Tijana Milosevic Authors, Users, and Pirates: Copyright Law and Subjectivity, James Meese Weaving the Dark Web: Legitimacy on Freenet, Tor, and I2P, Robert W. Gehl Weaving the Dark Web Legitimacy on Freenet, Tor, and I2P Robert W. Gehl The MIT Press Cambridge, Massachusetts London, England © 2018 Robert W. Gehl All rights reserved. No part of this book may be reproduced in any form by any electronic or mechanical means (including photocopying, recording, or information storage and retrieval) without permission in writing from the publisher. This book was set in ITC Stone Serif Std by Toppan Best-set Premedia Limited. Printed and bound in the United States of America. Library of Congress Cataloging-in-Publication Data is available. ISBN: 978-0-262-03826-3 eISBN 9780262347570 ePub Version 1.0 I wrote parts of this while looking around for my father, who died while I wrote this book. -

Principles and Tools of Whistleblowing: The GlobaLeaks Case, 11 March

Principles and tools of whistleblowing: the GlobaLeaks case. 11 March 2015, Centro Nexa, Torino, 73° Mercoledì di Nexa - Hermes Center for Transparency and Digital Human Rights https://globaleaks.org - http://logioshermes.org Who’s using Whistleblowing and how Whistleblowing Whistleblowing + Technology = Citizens Power Digital Whistleblowing Paradigm change When “online”, psychological barriers are reduced Digital Security and Privacy Challenges for Whistleblowing Digital Whistleblowing works only with strong privacy But online reporting actions could leave online Especially due to corporate & government surveillance INTERCEPTION TRACING • Email • Email • Web Browsing • Web Browsing • Phone Calls • Location tracking • Location tracking • Proxy Logs • Metadata Unknown or Inappropriate Data Retention Policies Surveillance kills trust Distrust kills whistleblowing Restore trust and confidence by the whistleblowers Digital Security Digital Anonymity Data Encryption Anonymity vs. Confidentiality • Anonymity and Confidentiality – Confidentiality: I know who you are, but I am not going to tell anyone – Anonymity: I don’t know who you are and I have no way to find out • Analog vs. Digital Anonymity – Analog: I don’t tell you who I am – Digital: I don’t tell you who I am or where my computer is (IP address) • Anonymity Technology: Tor – Used every day by 500,000+ people – 5,000+ volunteers – Co-financed by US Government • Improving Whistleblower’s trust by giving real, -

Cyber Security in a Volatile World

Research Volume Five, Global Commission on Internet Governance: Cyber Security in a Volatile World. Published by the Centre for International Governance Innovation and the Royal Institute of International Affairs. The copyright in respect of each chapter is noted at the beginning of each chapter. The opinions expressed in this publication are those of the authors and do not necessarily reflect the views of the Centre for International Governance Innovation or its Board of Directors. This work was carried out with the aid of a grant from the International Development Research Centre (IDRC), Ottawa, Canada. The views expressed herein do not necessarily represent those of IDRC or its Board of Governors. This work is licensed under a Creative Commons Attribution - Non-commercial - No Derivatives License. To view this licence, visit (www.creativecommons.org/licenses/by-nc-nd/3.0/). For re-use or distribution, please include this copyright notice. Centre for International Governance Innovation, CIGI and the CIGI globe are registered trademarks. 67 Erb Street West, Waterloo, Ontario N2L 6C2, Canada; tel +1 519 885 2444, fax +1 519 885 5450; www.cigionline.org. 10 St James’s Square, London, England SW1Y 4LE, United Kingdom; tel +44 (0)20 7957 5700, fax +44 (0)20 7957 5710; www.chathamhouse.org. TABLE OF CONTENTS: About the Global Commission on Internet Governance; Preface (Carl Bildt); Introduction: Security as a Precursor to Internet Freedom and Commerce (Laura DeNardis); Chapter One: Global Cyberspace Is Safer than You Think: Real Trends in Cybercrime -

FY 2020 Budget Justification

Congressional FY 2020 BUDGET JUSTIFICATION. Table of Contents: Executive Summary; Summary Charts; Legislative Proposals; Voice of America (VOA); Office of Cuba Broadcasting (OCB); International Broadcasting Bureau (IBB); Office of Internet Freedom (OIF) & Open Technology Fund (OTF); Technology, Services and Innovation (TSI); Radio Free Europe/Radio Liberty (RFE/RL); Radio Free Asia (RFA); Middle East Broadcasting Networks, Inc. (MBN); Broadcasting Capital Improvements (BCI); Performance Budget Information -

Overview of Whistleblowing Software

U4 Helpdesk Answer: Overview of whistleblowing software. Author: Matthew Jenkins, Transparency International. Reviewers: Marie Terracol, Transparency International, and Saul Mullard, U4 Anti-Corruption Resource Centre. Date: 14 April 2020. The technical demands of setting up a secure and anonymous whistleblowing mechanism can seem daunting. Yet public sector organisations such as anti-corruption agencies do not need to develop their own system from scratch; there are a number of both open-source and proprietary providers of digital whistleblowing platforms that can be deployed by public agencies. This Helpdesk answer lays out the core principles and practical considerations for online reporting systems, as well as the chief digital threats they face and how various providers’ solutions respond to these threats. Overall, the paper has identified that open-source solutions tend to offer the greatest security for whistleblowers themselves, while the proprietary software on the market places greater emphasis on usability and integrated case management functionalities. Ultimately, organisations looking to adopt web-based whistleblowing systems should be mindful of the broader whistleblowing context, such as the legal protections for whistleblowers and the severity of physical and digital threats, as well as their own organisational capacity. U4 Anti-Corruption Helpdesk: A free service for staff from U4 partner agencies. Query: Please provide an overview of the most common web-based whistleblower systems, exploring their respective advantages and disadvantages in terms of anonymity, security, accessibility, costs and so on. We are particularly interested in systems that would be appropriate for Anti-Corruption Agencies. Contents Main points 1. Background a. Advantages of digital reporting - Whistleblowing can act as a crucial systems check on human rights abuses, b. -

2000-Deep-Web-Dark-Web-Links-2016 On: March 26, 2016 In: Deep Web 5 Comments

Tools4hackers -This You website may uses cookies stop to improve me, your experience. but you We'll assume can't you're ok stop with this, butus you all. can opt-out if you wish. Accept Read More 2000-deep-web-dark-web-links-2016 on: March 26, 2016 In: Deep web 5 Comments 2000 deep web links The Dark Web, Deepj Web or Darknet is a term that refers specifically to a collection of websites that are publicly visible, but hide the IP addresses of the servers that run them. Thus they can be visited by any web user, but it is very difficult to work out who is behind the sites. And you cannot find these sites using search engines. So that’s why we have made this awesome list of links! NEW LIST IS OUT CLICK HERE A warning before you go any further! Once you get into the Dark Web, you *will* be able to access those sites to which the tabloids refer. This means that you could be a click away from sites selling drugs and guns, and – frankly – even worse things. this article is intended as a guide to what is the Dark Web – not an endorsement or encouragement for you to start behaving in illegal or immoral behaviour. 1. Xillia (was legit back in the day on markets) http://cjgxp5lockl6aoyg.onion 2. http://cjgxp5lockl6aoyg.onion/worldwide-cardable-sites-by-alex 3. http://cjgxp5lockl6aoyg.onion/selling-paypal-accounts-with-balance-upto-5000dollars 4. http://cjgxp5lockl6aoyg.onion/cloned-credit-cards-free-shipping 5. 6. ——————————————————————————————- 7. -

Online Violence Against Women

Submission of the Citizen Lab (Munk School of Global Affairs, University of Toronto) to the United Nations Special Rapporteur on violence against women, its causes and consequences, Ms. Dubravka Šimonović November 2, 2017 For all inquiries related to this submission, please contact: Dr. Ronald J. Deibert Director, The Citizen Lab, Munk School of Global Affairs Professor of Political Science, University of Toronto [email protected] Contributors to this report (in alphabetical order): Dr. Ronald J. Deibert, Professor of Political Science; Director, The Citizen Lab Lex Gill, Research Fellow, The Citizen Lab Tamir Israel, Staff Lawyer, Canadian Internet Policy and Public Interest Clinic (CIPPIC) Chelsey Legge, Clinic Student, International Human Rights Program (IHRP), University of Toronto Irene Poetranto, Senior Researcher and Doctoral Student, The Citizen Lab Amitpal Singh, Research Assistant, The Citizen Lab Acknowledgements: We would also like to thank Gillian T. Hnatiw (Partner, Lerners LLP), Samer Muscati (Director, International Human Rights Program, University of Toronto), Miles Kenyon (Communications Officer, The Citizen Lab), Sarah McKune (Senior Researcher, The Citizen Lab), Jon Penney (Assistant Professor of Law; Director, Law & Technology Institute, Schulich School of Law) and Maria Xynou (Research and Partnerships Coordinator, Open Observatory of Network Interference (OONI)) for their support in the creation of this report. Table of Contents Executive Summary 1 About the Citizen Lab 2 The nature of technology-facilitated violence,