A Comparative Evaluation of Two Algorithms for Windows Registry Anomaly Detection

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Strategies of Computer Worms

CHAPTER 9 Strategies of Computer Worms “Worm: n., A self-replicating program able to propagate itself across network, typically having a detrimental effect.” - Concise Oxford English Dictionary, Revised Tenth Edition 9.1 Introduction This chapter discusses the generic (or at least “typical”) structure of advanced computer worms and the common strategies that computer worms use to invade new target systems. Computer worms primarily replicate on networks, but they represent a subclass of computer viruses. Interestingly enough, even in security research communities, many people imply that computer worms are dramatically different from computer viruses. In fact, even within CARO (Computer Antivirus Researchers Organization), researchers do not share a common view about what exactly can be classified as a “worm.” We wish to share a common view, but well, at least a few of us agree that all computer worms are ultimately viruses. Let me explain. The network-oriented infection strategy is indeed a primary difference between viruses and computer worms. Moreover, worms usually do not need to infect files but propagate as standalone programs. Additionally, several worms can take control of remote systems without any help from the users, usually exploiting a vulnerability or set of vulnerabilities. These usual characteristics of computer worms, however, do not always hold. Table 9.1 shows several well-known threats. Table -

Tangled Web : Tales of Digital Crime from the Shadows of Cyberspace

TANGLED WEB Tales of Digital Crime from the Shadows of Cyberspace RICHARD POWER A Division of Macmillan USA 201 West 103rd Street, Indianapolis, Indiana 46290 Tangled Web: Tales of Digital Crime from the Shadows of Cyberspace Copyright 2000 by Que Corporation All rights reserved. No part of this book shall be reproduced, stored in a retrieval system, or transmitted by any means, electronic, mechanical, photocopying, recording, or otherwise, without written permission from the publisher. No patent liability is assumed with respect to the use of the information contained herein. Although every precaution has been taken in the preparation of this book, the publisher and author assume no responsibility for errors or omissions. Nor is any liability assumed for damages resulting from the use of the information contained herein. International Standard Book Number: 0-7897-2443-x Library of Congress Catalog Card Number: 00-106209 Printed in the United States of America First Printing: September 2000 02 01 00 4 3 2 Trademarks All terms mentioned in this book that are known to be trademarks or service marks have been appropriately capitalized. Que Corporation cannot attest to the accuracy of this information. Use of a term in this book should not be regarded as affecting the validity of any trademark or service mark. Warning and Disclaimer Every effort has been made to make this book as complete and as accurate as possible, but no warranty or fitness is implied. Credits: Associate Publisher Tracy Dunkelberger; Acquisitions Editor Kathryn Purdum; Development Editor Hugh Vandivier; Managing Editor Thomas Hayes; Project Editor Tonya Simpson; Copy Editor Michael Dietsch; Indexer Erika Millen; Proofreader Benjamin Berg; Team Coordinator Vicki Harding; Design Manager Sandra Schroeder; Cover Designer -

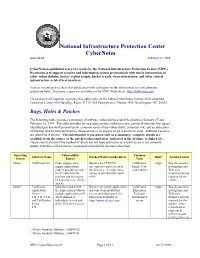

Cybernotes Issue #4-99 February 17, 1999

National Infrastructure Protection Center CyberNotes Issue #4-99 February 17, 1999 CyberNotes is published every two weeks by the National Infrastructure Protection Center (NIPC). Its mission is to support security and information system professionals with timely information on cyber vulnerabilities, hacker exploit scripts, hacker trends, virus information, and other critical infrastructure-related best practices. You are encouraged to share this publication with colleagues in the information and infrastructure protection field. Electronic copies are available on the NIPC Web site at http://www.nipc.gov. Please direct any inquiries regarding this publication to the Editor-CyberNotes, National Infrastructure Protection Center, FBI Building, Room 11719, 935 Pennsylvania Avenue, NW, Washington, DC, 20535. Bugs, Holes & Patches The following table provides a summary of software vulnerabilities identified between January 27 and February 12, 1999. The table provides the operating system, software name, potential vulnerability/impact, identified patches/workarounds/alerts, common name of the vulnerability, potential risk, and an indication of whether attacks have utilized this vulnerability or an exploit script is known to exist. Software versions are identified if known. This information is presented only as a summary; complete details are available from the source of the patch/workaround/alert, indicated in the footnote or linked site. Please note that even if the method of attack has not been utilized or an exploit script is not currently widely available on the Internet, a potential vulnerability has been identified. Table columns: Operating System; Software Name; Vulnerability/Impact; Patches/Workarounds/Alerts; Common Name; Risk*; Attacks/Scripts. Allaire1 ColdFusion 4.0 Code snippets from Remove the CFDOCS ColdFusion High Bug discussed in sample applications directory or restrict access to Sample Code newsgroups and make it possible to make the directory. -

IBM X-Force Threat Insight Quarterly

IBM Security Solutions May 2011 IBM X-Force Threat Insight Quarterly Contents: About the Report; Evolution: From Nuisance to Weapon; Prolific and Impacting Issues of Q1 2011; References. About the Report The IBM X-Force® Threat Insight Quarterly is designed to highlight some of the most significant threats and challenges facing security professionals today. This report is a product of IBM Managed Security Services and the IBM X-Force research and development team. Each issue focuses on specific challenges and provides a recap of the most significant recent online threats. IBM Managed Security Services are designed to help an organization improve its information security, by outsourcing security operations or supplementing your existing security teams. The IBM protection on-demand platform helps deliver Managed Security Services and the expertise, knowledge and infrastructure an organization needs to secure its information assets from Internet attacks. The X-Force team provides the foundation for a preemptive approach to Internet security. The X-Force team is one of the best-known commercial security research groups in the world. This group of security experts researches and evaluates vulnerabilities and security issues, develops assessment and countermeasure technology for IBM security products, and educates the public about emerging Internet threats. We welcome your feedback. Questions or comments regarding the content of this report should be addressed to [email protected]. Evolution: From Nuisance to Weapon Creeper, Wabbit, Animal, Elk Cloner, Brain, Vienna, Lehigh, Stoned, Jerusalem. One of the more notable examples here is Brain, a boot sector infector which originated in Pakistan and released in 1986, was one of the first examples of malware that infected PC’s running -

What Are Kernel-Mode Rootkits?

Hacking Exposed™ Malware & Rootkits Reviews “Accessible but not dumbed-down, this latest addition to the Hacking Exposed series is a stellar example of why this series remains one of the best-selling security franchises out there. System administrators and Average Joe computer users alike need to come to grips with the sophistication and stealth of modern malware, and this book calmly and clearly explains the threat.” - Brian Krebs, Reporter for The Washington Post and author of the Security Fix Blog “A harrowing guide to where the bad guys hide, and how you can find them.” - Dan Kaminsky, Director of Penetration Testing, IOActive, Inc. “The authors tackle malware, a deep and diverse issue in computer security, with common terms and relevant examples. Malware is a cold deadly tool in hacking; the authors address it openly, showing its capabilities with direct technical insight. The result is a good read that moves quickly, filling in the gaps even for the knowledgeable reader.” - Christopher Jordan, VP, Threat Intelligence, McAfee; Principal Investigator to DHS Botnet Research “Remember the end-of-semester review sessions where the instructor would go over everything from the whole term in just enough detail so you would understand all the key points, but also leave you with enough references to dig deeper where you wanted? Hacking Exposed Malware & Rootkits resembles this! A top-notch reference for novices and security professionals alike, this book provides just enough detail to explain the topics being presented, but not too much to dissuade those new to security.” - LTC Ron Dodge, U.S. -

CSCI E-170 Lecture 09: Attacker Motivations, Computer Crime and Secure Coding

CSCI E-170 Lecture 09: Attacker Motivations, Computer Crime and Secure Coding Simson L. Garfinkel Center for Research on Computation and Society Harvard University November 21, 2005 Today’s Agenda 1. Administrivia 2. Missing Readings for L09, L10 3. Threat Models: Who is the attacker? What can the attacker do? 4. Secure Coding 5. Translucent Databases 6. RFID Administrivia 1. Quizzes - If you are a remote student and you want it back, please email csci [email protected] with a fax number and we will fax it out. 2. Midterm Projects should be in. Final Projects This is a research project, not a book review. You are expected to: 1. Create something and write about it. 2. Analyze something in detail. You have until next Monday to form groups of 4 students. Email group names and your proposed topic to csci [email protected]. Students who have not chosen groups will be assigned. Why is building a secure system different than building a system that is: 1. Reliable 2. Safe 3. Easy-to-use? With security, there is an adversary. No reason to defend against an unbounded adversary. Why? The nature of the adversary determines your defenses. Possible adversaries include: 1. Employees (good and bad) 2. High school students 3. Foreign Governments (“Titan Rain?”) Evaluate according to who they are and by what they can accomplish. Remember RFC 602? Public acknowledgment of hackers on the Internet: 1. Sites used to physical security have not taken measures to secure machines accessible over the network. -

Computer Parasitology

Computer Parasitology Carey Nachenberg Symantec AntiVirus Research Center [email protected] Posted with the permission of Virus Bulletin http://www.virusbtn.com/ Table of Contents: Introduction; Worm Classifications; Worm Transport Classifications; E-mail Worms; Arbitrary Protocol Worms: IRC Worms, TCP/IP Worms, etc.; Worm Launch Classifications; Self-launching Worms; User-launched Worms -

The Ultimate Cybersecurity Guide for the IT Professional

THE ULTIMATE CYBERSECURITY GUIDE FOR THE IT PROFESSIONAL { 01101000 01110100 01110100 01110000 01110011 00111010 00101111 00101111 01110111 01110111 01110111 00101110 01100011 01100001 01110010 01100010 01101111 01101110 01100010 01101100 01100001 01100011 01101011 00101110 01100011 01101111 01101101 } 2019 Welcome to our comprehensive guide on the basics of cybersecurity. Whether you've been in IT for a long time or are just starting out, there is an expectation that everyone in IT should have some degree of exposure to InfoSec. A good way to do that is to learn from and get connected in the community. Cybersecurity is a fascinating and rapidly evolving area of IT. And those that are involved are friendly people who care passionately about keeping us all safe. With information from over 150 sourced references, and personal input from The Howler Hub community of security experts, this guide contains the key information to help you: • Understand key concepts that drive the security professional. • Learn a common language to engage with cybersecurity professionals. • Connect with sources to stay up-to-date on this evolving field. • Engage with cybersecurity experts and the threat hunting community at large. CONTENTS 01 History of Cybersecurity; 02 Attackers + Their Motives; 03 Common Attacks; 04 Terms to Know; 05 Experts to Follow; 06 Blogs to Read; 07 Events to Attend; 08 Books to Read; 09 Movies + Shows to Watch; 10 Communities to Engage; 11 Become a Threat Hunter; 12 References; 13 Appendices. SEC. -

Controlled Worm Replication – 'Internet-In-A-Box'

VIRUS BULLETIN CONFERENCE, SEPTEMBER 2000 CONTROLLED WORM REPLICATION – ‘INTERNET-IN-A-BOX’ Ian Whalley IBM TJ Watson Research Centre, PO Box 704, Yorktown Heights, NY 10598, USA Tel +1 914 784 7368 • Fax +1 914 784 6054 • Email: [email protected] ABSTRACT So-called ‘worms’ have been a feature of the malware landscape since the beginning, and yet have been largely ignored by anti-virus companies until comparatively recently. However, the near-complete connectivity of computers in today’s western world, coupled with the largely Win32-centric base of installed operating systems make the rise of worms inevitable. The author will describe techniques and mechanisms for constructing and utilising an environment enabling the automatic analysis of multi-machine worms and network-aware viruses. Whilst these techniques are being developed for incorporation into the IBM/Symantec Immune System for Cyberspace, the paper is not intended to be a discussion of the Immune System concept. Instead, the intent is to describe an approach that has been applied to the problem with some measure of success. The approach involves building a virtual SOHO network, which is in turn connected to a virtual Internet. Both the virtual LAN and WAN are populated with virtual machines. The suspected worm is introduced into this environment, and executed therein. The whole system is closely monitored as execution progresses in the isolated environment, and data is amassed describing what the suspected worm did as it executed. This data is then processed by the system in an attempt to automatically determine whether or not the suspect programming is performing actions indicative of a worm or Internet-aware malware. -

The Administrator Shortcut Guide to Email Protection

Chapter 1 Introduction By Sean Daily, Series Editor Welcome to The Administrator Shortcut Guide to Email Protection! The book you are about to read represents an entirely new modality of book publishing and a major first in the publishing industry. The founding concept behind Realtimepublishers.com is the idea of providing readers with high-quality books about today’s most critical IT topics, at no cost to the reader. Although this may sound like a somewhat impossible feat to achieve, it is made possible through the vision and generosity of corporate sponsors such as Sybari, who agree to bear the book’s production expenses and host the book on its Web site for the benefit of its Web site visitors. It should be pointed out that the free nature of these books does not in any way diminish their quality. Without reservation, I can tell you that this book is the equivalent of any similar printed book you might find at your local bookstore (with the notable exception that it won’t cost you $30 to $80). In addition to the free nature of the books, this publishing model provides other significant benefits. For example, the electronic nature of this eBook makes events such as chapter updates and additions, or the release of a new edition of the book possible to achieve in a far shorter timeframe than is possible with printed books. Because we publish our titles in “real-time” (that is, as chapters are written or revised by the author), you benefit from receiving the information immediately rather than having to wait months or years to receive a complete product. -

The Evolving Virus Threat

The Evolving Virus Threat Mr. Carey Nachenberg Chief Researcher, Symantec Corporation Over the past twenty years, computer viruses have evolved from simple computer programs capable of spreading on a single PC to complex software worms which can ravage entire computer networks. Much of the evolution of new computer virus threats has come from two major sources. First, as popular new computing platforms become available, virus authors seek to exploit these platforms. Second, the co-evolution of anti-virus technology has spurred the development of increasingly powerful, more complex computer virus threats. Today, our computing infrastructure is more vulnerable than ever before due to a convergence of four key factors. First, the world population of computers is more homogeneous than ever before, creating a computing “monoculture”; if one machine is susceptible to a bug, the rest are too. Second, our computing systems are more connected than ever before, enabling a fast spreading virus to rapidly infect millions of machines. Third, our computers are more programmable than ever before; with simple script programming languages, even novice users can create malicious code that can control virtually every aspect of the computer system. Finally, the hardware and software platforms used by big business and the home user are converging, lowering the bar to build and test malicious software. This talk will study the evolution of computer viruses and consider the future virus threats we are likely to encounter. In addition, it will take an in-depth look at anti-virus technologies, present and future, to better understand how our computer countermeasures will have to change to provide adequate protection against tomorrow’s viruses. -

Virus Bulletin, August 2000

ISSN 0956-9979 AUGUST 2000 THE INTERNATIONAL PUBLICATION ON COMPUTER VIRUS PREVENTION, RECOGNITION AND REMOVAL Editor: Francesca Thorneloe Technical Consultant: Matt Ham Technical Editor: Jakub Kaminski Consulting Editors: Nick FitzGerald, Independent consultant, NZ; Ian Whalley, IBM Research, USA; Richard Ford, Independent consultant, USA; Edward Wilding, Maxima Group Plc, UK IN THIS ISSUE: • Pack-a-Mac: In the coming months VB will be initiating Comparative Reviews for Macintosh products. Full testing protocols, proposed schedules and submission criteria are outlined on p.22. • The numbers game: Denis Zenkin is a self-confessed virus prevalence table junkie. He shares his findings over the past few years in his Feature on p.10. • Happy new network? Millennium fever is well and truly over but what is the reality about moving your corporate network over to Win2K? Péter Ször investigates on p.8. CONTENTS COMMENT Divided We Fall 2; NEWS & VIRUS PREVALENCE TABLE 3; LETTERS 4; VIRUS ANALYSIS What’s New, PussyCats? 6; TECHNICAL FEATURE Moving to Windows 2000 8; FEATURE The Number of the Beasts 10; BOOK REVIEW To Boldly Go? 12; CONFERENCE REPORT Tracking Bugs in Ontario 13; OPINION Form, Concept and Other Pleasant Utilities 14; A DAY IN THE LIFE Manic Monday 16; PRODUCT REVIEW VirusBuster for NT 18; REVIEW NEWS Of Macs and Men 22; END NOTES AND NEWS 24 VIRUS BULLETIN ©2000 Virus Bulletin Ltd, The Pentagon, Abingdon, Oxfordshire, OX14 3YP, England. www.virusbtn.com /2000/$0.00+2.50 No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form without the prior written permission of the publishers.