An Empirical Model for Assessing the Severe Weather Potential of Developing Convection

Total Pages: 16

File Type:pdf, Size:1020Kb

Recommended publications

National Weather Service Instruction 10-1601 November 3, 2005

Department of Commerce • National Oceanic & Atmospheric Administration • National Weather Service NATIONAL WEATHER SERVICE INSTRUCTION 10-1601 NOVEMBER 3, 2005 Operations and Services Performance, NWSPD 10-16 VERIFICATION PROCEDURES NOTICE: This publication is available at: http://www.nws.noaa.gov/directives/. OPR: OS52 (Charles Kluepfel) Certified by: OS5 (Kimberly Campbell) Type of Issuance: Routine SUMMARY OF REVISIONS: This directive supersedes National Weather Service Instruction 10-1601, dated April 10, 2004. The following changes have been made to this directive: 1) Introductory information on the legacy verification systems for public (section 1.1.1) and terminal aerodrome (section 6.1.1) forecasts has replaced outdated information in these sections. 2) The verification of fire weather forecasts (section 1.4) and red flag warnings (section 1.5) has been added. 3) Monthly preliminary reporting requirements for tornado and flash flood warning verification statistics have been discontinued and respectively removed from sections 2.1 and 4.1. 4) Rule 2, implemented January 1, 2002, for short-fused warnings, has been discontinued for all tornado/severe thunderstorm (section 2.1.2) and special marine (section 3.3.2) warnings issued after February 28, 2005. Once VTEC is implemented for flash flood warnings (section 4.1.2), Rule 2 will also be discontinued for all flash flood warnings issued after the change. 5) The time of warning issuance for tornado, severe thunderstorm and special marine warnings is taken from the Valid Time and Event Code (VTEC) line (sections 2.1.3 and 3.3.3). 6) The National Digital Forecast Database (NDFD) quantitative precipitation forecasts (QPF) are now verified.

Optimized Autonomous Space In-Situ Sensor-Web for Volcano Monitoring

Optimized Autonomous Space In-situ Sensor-Web for Volcano Monitoring Wen-Zhan Song Behrooz Shirazi Renjie Huang Mingsen Xu Nina Peterson fsongwz, shirazi, renjie huang, mingsen xu, [email protected] Sensorweb Research Laboratory, Washington State University Rick LaHusen John Pallister Dan Dzurisin Seth Moran Mike Lisowski frlahusen, jpallist, dzurisin, smoran, [email protected] Cascades Volcano Observatory, U.S. Geological Survey Sharon Kedar Steve Chien Frank Webb Aaron Kiely Joshua Doubleday Ashley Davies David Pieri fsharon.kedar, steve.chien, frank.webb, aaron.b.kiely, jdoubled, ashley.davies, [email protected] Jet Propulsion Laboratory, California Institute of Technology Abstract—In response to NASA’s announced requirement for Earth hazard monitoring sensor-web technology, a multidisciplinary team involving sensor-network experts (Washington State University), space scientists (JPL), and Earth scientists (USGS Cascade Volcano Observatory (CVO)), have developed a prototype of dynamic and scalable hazard monitoring sensor-web and applied it to volcano monitoring. The combined Optimized Autonomous Space - In-situ Sensor-web (OASIS) has two-way communication capability between ground and space assets, uses both space and ground data for optimal allocation of limited bandwidth resources on the ground, and uses smart management of competing demands for limited space assets. [...] situ Sensor-web (OASIS) has two-way communication capability between ground and space assets, use both space and ground data for optimal allocation of limited bandwidth resources on the ground, and uses smart management of competing demands for limited space assets [1]. This research responds to the NASA objective [2] to “conduct a program of research and technology development to advance Earth observation from space, improve scientific understanding, and demonstrate new technologies with the

Using Earth Observation Data to Improve Health in the United States Accomplishments and Future Challenges

A report of the CSIS Technology and Public Policy Program. Using Earth Observation Data to Improve Health in the United States: Accomplishments and Future Challenges. 1800 K Street, NW | Washington, DC 20006 Tel: (202) 887-0200 | Fax: (202) 775-3199 E-mail: [email protected] | Web: www.csis.org Author: Lyn D. Wigbels September 2011 ISBN 978-0-89206-668-1 About CSIS At a time of new global opportunities and challenges, the Center for Strategic and International Studies (CSIS) provides strategic insights and bipartisan policy solutions to decisionmakers in government, international institutions, the private sector, and civil society. A bipartisan, nonprofit organization headquartered in Washington, D.C., CSIS conducts research and analysis and develops policy initiatives that look into the future and anticipate change. Founded by David M. Abshire and Admiral Arleigh Burke at the height of the Cold War, CSIS was dedicated to finding ways for America to sustain its prominence and prosperity as a force for good in the world. Since 1962, CSIS has grown to become one of the world’s preeminent international policy institutions, with more than 220 full-time staff and a large network of affiliated scholars focused on defense and security, regional stability, and transnational challenges ranging from energy and climate to global development and economic integration. Former U.S. senator Sam Nunn became chairman of the CSIS Board of Trustees in 1999, and John J.

Polarimetric Radar Characteristics of Tornadogenesis Failure in Supercell Thunderstorms

Atmosphere, Article. Polarimetric Radar Characteristics of Tornadogenesis Failure in Supercell Thunderstorms Matthew Van Den Broeke Department of Earth and Atmospheric Sciences, University of Nebraska-Lincoln, Lincoln, NE 68588, USA; [email protected] Citation: Van Den Broeke, M. Polarimetric Radar Characteristics of Tornadogenesis Failure in Supercell Thunderstorms. Atmosphere 2021, 12, 581. https://doi.org/10.3390/atmos12050581 Abstract: Many nontornadic supercell storms have times when they appear to be moving toward tornadogenesis, including the development of a strong low-level vortex, but never end up producing a tornado. These tornadogenesis failure (TGF) episodes can be a substantial challenge to operational meteorologists. In this study, a sample of 32 pre-tornadic and 36 pre-TGF supercells is examined in the 30 min pre-tornadogenesis or pre-TGF period to explore the feasibility of using polarimetric radar metrics to highlight storms with larger tornadogenesis potential in the near-term. Overall the results indicate few strong distinguishers of pre-tornadic storms. Differential reflectivity (ZDR) arc size and intensity were the most promising metrics examined, with ZDR arc size potentially exhibiting large enough differences between the two storm subsets to be operationally useful. Change in the radar metrics leading up to tornadogenesis or TGF did not exhibit large differences, though most findings were consistent with hypotheses based on prior findings in the literature. Keywords: supercell; nowcasting; tornadogenesis failure; polarimetric radar 1. Introduction Supercell thunderstorms produce most strong tornadoes in North America, motivating study of their radar signatures for the benefit of the operational and research communities. Since the polarimetric upgrade to the national radar network of the United States (2011–2013), polarimetric radar signatures of these storms have become well-known, e.g., [1–5], and many others.

Soaring Weather

Chapter 16 SOARING WEATHER While horse racing may be the "Sport of Kings," soaring may be considered the "King of Sports." Soaring bears the relationship to flying that sailing bears to power boating. Soaring has made notable contributions to meteorology. For example, soaring pilots have probed thunderstorms and mountain waves with findings that have made flying safer for all pilots. However, soaring is primarily recreational. A sailplane must have auxiliary power to become airborne such as a winch, a ground tow, or a tow by a powered aircraft. Once the sailcraft is airborne and the tow cable released, performance of the craft depends on the weather and the skill of the pilot. Forward thrust comes from gliding downward relative to the air the same as thrust is developed in a power-off glide by a conventional aircraft. Therefore, to gain or maintain altitude, the soaring pilot must rely on upward motion of the air. To a sailplane pilot, "lift" means the rate of climb he can achieve in an up-current, while "sink" denotes his rate of descent in a downdraft or in neutral air. "Zero sink" means that upward currents are just strong enough to enable him to hold altitude but not to climb. Sailplanes are highly efficient machines; a sink rate of a mere 2 feet per second provides an airspeed of about 40 knots, and a sink rate of 6 feet per second gives an airspeed [...] second. There is no point in trying to soar until weather conditions favor vertical speeds greater than the minimum sink rate of the aircraft.

Electrical Role for Severe Storm Tornadogenesis (And Modification) R.W

Armstrong and Glenn, J Climatol Weather Forecasting 2015, 3:3 http://dx.doi.org/10.4172/2332-2594.1000139 Journal of Climatology & Weather Forecasting ISSN: 2332-2594 Review/Research Article Open Access Electrical Role for Severe Storm Tornadogenesis (and Modification) R.W. Armstrong1* and J.G. Glenn2 1Department of Mechanical Engineering, University of Maryland, College Park, MD, USA 2Munitions Directorate, Eglin Air Force Base, FL, USA Abstract Damage from severe storms, particularly those involving significant lightning, is increasing in the US and abroad; and, increasingly, focus is on an electrical role for lightning in intra-cloud (IC) tornadogenesis. In the present report, emphasis is given to severe storm observations and especially to model descriptions relating to the subject. Keywords: Tornadogenesis; Severe storms; Electrification; Lightning; Cloud seeding Introduction Benjamin Franklin would be understandably disappointed. Here we are, more than 260 years after first demonstration in Marly-la-Ville, [...] in weather science was submitted in response to solicitation from the National Science Board [16], and a note was published on the need for cooperation between weather modification practitioners and academic researchers dedicated to ameliorating the consequences of severe weather storms [17]. Need was expressed for interdisciplinary research on the topic relating to that recently touted for research in the broader field of meteorological sciences [18].

Manuscript Was Written Jointly by YL, CK, and MZ

A simplified method for the detection of convection using high resolution imagery from GOES-16 Yoonjin Lee¹, Christian D. Kummerow¹,², Milija Zupanski² ¹Department of Atmospheric Science, Colorado State University, Fort Collins, CO 80521, USA ²Cooperative Institute for Research in the Atmosphere, Colorado State University, Fort Collins, CO 80521, USA Correspondence to: Yoonjin Lee ([email protected]) Abstract. The ability to detect convective regions and adding heating in these regions is the most important skill in forecasting severe weather systems. Since radars are most directly related to precipitation and are available in high temporal resolution, their data are often used for both detecting convection and estimating latent heating. However, radar data are limited to land areas, largely in developed nations, and early convection is not detectable from radars until drops become large enough to produce significant echoes. Visible and Infrared sensors on a geostationary satellite can provide data that are more sensitive to small droplets, but they also have shortcomings: their information is almost exclusively from the cloud top. Relatively new geostationary satellites, Geostationary Operational Environmental Satellites-16 and -17 (GOES-16 and GOES-17), along with Himawari-8, can make up for some of this lack of vertical information through the use of very high spatial and temporal resolutions. This study develops two algorithms to detect convection at vertically growing clouds and mature convective clouds using 1-minute GOES-16 Advanced Baseline Imager (ABI) data. Two case studies are used to explain the two methods, followed by results applied to one month of data over the contiguous United States.

Assessing and Improving Cloud-Height Based Parameterisations of Global Lightning Flash Rate, and Their Impact on Lightning-Produced Nox and Tropospheric Composition

https://doi.org/10.5194/acp-2020-885 Preprint. Discussion started: 2 October 2020 © Author(s) 2020. CC BY 4.0 License. Assessing and improving cloud-height based parameterisations of global lightning flash rate, and their impact on lightning-produced NOx and tropospheric composition Ashok K. Luhar¹, Ian E. Galbally¹, Matthew T. Woodhouse¹, and Nathan Luke Abraham²,³ ¹CSIRO Oceans and Atmosphere, Aspendale, 3195, Australia ²National Centre for Atmospheric Science, Department of Chemistry, University of Cambridge, Cambridge, UK ³Department of Chemistry, University of Cambridge, Cambridge, UK Correspondence to: Ashok K. Luhar ([email protected]) Abstract. Although lightning-generated oxides of nitrogen (LNOx) account for only approximately 10% of the global NOx source, it has a disproportionately large impact on tropospheric photochemistry due to the conducive conditions in the tropical upper troposphere where lightning is mostly discharged. In most global composition models, lightning flash rates used to calculate LNOx are expressed in terms of convective cloud-top height via the Price and Rind (1992) (PR92) parameterisations for land and ocean. We conduct a critical assessment of flash-rate parameterisations that are based on cloud-top height and validate them within the ACCESS-UKCA global chemistry-climate model using the LIS/OTD satellite data. While the PR92 parameterisation for land yields satisfactory predictions, the oceanic parameterisation underestimates the observed flash-rate density severely, yielding a global average of 0.33 flashes s⁻¹ compared to the observed 9.16 flashes s⁻¹ over the ocean and leading to LNOx being underestimated proportionally. We formulate new/alternative flash-rate parameterisations following Boccippio’s (2002) scaling relationships between thunderstorm electrical generator power and storm geometry coupled with available data.

Delayed Tornadogenesis Within New York State Severe Storms Article

Wunsch, M. S. and M. M. French, 2020: Delayed tornadogenesis within New York State severe storms. J. Operational Meteor., 8 (6), 79-92, doi: https://doi.org/10.15191/nwajom.2020.0806. Article Delayed Tornadogenesis Within New York State Severe Storms MATTHEW S. WUNSCH NOAA/National Weather Service, Upton, NY, and School of Marine and Atmospheric Sciences, Stony Brook University, Stony Brook, NY MICHAEL M. FRENCH School of Marine and Atmospheric Sciences, Stony Brook University, Stony Brook, NY (Manuscript received 11 June 2019; review completed 21 October 2019) ABSTRACT Past observational research into tornadoes in the northeast United States (NEUS) has focused on integrated case studies of storm evolution or common supportive environmental conditions. A repeated theme in the former studies is the influence that the Hudson and Mohawk Valleys in New York State (NYS) may have on conditions supportive of tornado formation. Recent work regarding the latter has provided evidence that environments in these locations may indeed be more supportive of tornadoes than elsewhere in the NEUS. In this study, Weather Surveillance Radar–1988 Doppler data from 2008 to 2017 are used to investigate severe storm life cycles in NYS. Observed tornadic and non-tornadic severe cases were analyzed and compared to determine spatial and temporal differences in convective initiation (CI) points and severe event occurrence objectively within the storm paths. We find additional observational evidence supporting the hypothesis the Mohawk and Hudson Valley regions in NYS favor the occurrence of tornadogenesis: the substantially longer time it takes for storms that initiate in western NYS and Pennsylvania to become tornadic compared to storms that initiate in either central or eastern NYS.

Cloud-Base Height Derived from a Ground-Based Infrared Sensor and a Comparison with a Collocated Cloud Radar

VOLUME 35 JOURNAL OF ATMOSPHERIC AND OCEANIC TECHNOLOGY APRIL 2018 Cloud-Base Height Derived from a Ground-Based Infrared Sensor and a Comparison with a Collocated Cloud Radar ZHE WANG Collaborative Innovation Center on Forecast and Evaluation of Meteorological Disasters, CMA Key Laboratory for Aerosol–Cloud–Precipitation, and School of Atmospheric Physics, Nanjing University of Information Science and Technology, Nanjing, and Training Center, China Meteorological Administration, Beijing, China ZHENHUI WANG Collaborative Innovation Center on Forecast and Evaluation of Meteorological Disasters, CMA Key Laboratory for Aerosol–Cloud–Precipitation, and School of Atmospheric Physics, Nanjing University of Information Science and Technology, Nanjing, China XIAOZHONG CAO, JIAJIA MAO, FA TAO, AND SHUZHEN HU Atmosphere Observation Test Bed, and Meteorological Observation Center, China Meteorological Administration, Beijing, China (Manuscript received 12 June 2017, in final form 31 October 2017) ABSTRACT An improved algorithm to calculate cloud-base height (CBH) from infrared temperature sensor (IRT) observations that accompany a microwave radiometer was described, the results of which were compared with the CBHs derived from ground-based millimeter-wavelength cloud radar reflectivity data. The results were superior to the original CBH product of IRT and closer to the cloud radar data, which could be used as a reference for comparative analysis and synergistic cloud measurements. Based on the data obtained by these two kinds of instruments for the same period (January–December 2016) from the Beijing Nanjiao Weather Observatory, the results showed that the consistency of cloud detection was good and that the consistency rate between the two datasets was 81.6%. The correlation coefficient between the two CBH datasets reached 0.62, based on 73 545 samples, and the average difference was 0.1 km.

Study Guide Medical Terminology by Thea Liza Batan About the Author

Study Guide Medical Terminology By Thea Liza Batan About the Author Thea Liza Batan earned a Master of Science in Nursing Administration in 2007 from Xavier University in Cincinnati, Ohio. She has worked as a staff nurse, nurse instructor, and level department head. She currently works as a simulation coordinator and a freelance writer specializing in nursing and healthcare. All terms mentioned in this text that are known to be trademarks or service marks have been appropriately capitalized. Use of a term in this text shouldn’t be regarded as affecting the validity of any trademark or service mark. Copyright © 2017 by Penn Foster, Inc. All rights reserved. No part of the material protected by this copyright may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage and retrieval system, without permission in writing from the copyright owner. Requests for permission to make copies of any part of the work should be mailed to Copyright Permissions, Penn Foster, 925 Oak Street, Scranton, Pennsylvania 18515. Printed in the United States of America CONTENTS: INSTRUCTIONS; READING ASSIGNMENTS; LESSON 1: THE FUNDAMENTALS OF MEDICAL TERMINOLOGY; LESSON 2: DIAGNOSIS, INTERVENTION, AND HUMAN BODY TERMS; LESSON 3: MUSCULOSKELETAL, CIRCULATORY, AND RESPIRATORY SYSTEM TERMS; LESSON 4: DIGESTIVE, URINARY, AND REPRODUCTIVE SYSTEM TERMS; LESSON 5: INTEGUMENTARY, NERVOUS, AND ENDOCRINE SYSTEM TERMS; SELF-CHECK ANSWERS. INSTRUCTIONS INTRODUCTION Welcome to your course on medical terminology. You’re taking this course because you’re most likely interested in pursuing a health and science career, which entails proficiency in communicating with healthcare professionals such as physicians, nurses, or dentists.

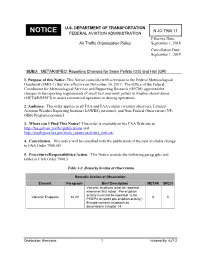

METAR/SPECI Reporting Changes for Snow Pellets (GS) and Hail (GR)

U.S. DEPARTMENT OF TRANSPORTATION, FEDERAL AVIATION ADMINISTRATION, Air Traffic Organization Policy. NOTICE N JO 7900.11. Effective Date: September 1, 2018. Cancellation Date: September 1, 2019. Distribution: Electronic. Initiated By: AJT-2. SUBJ: METAR/SPECI Reporting Changes for Snow Pellets (GS) and Hail (GR) 1. Purpose of this Notice. This Notice coincides with a revision to the Federal Meteorological Handbook (FMH-1) that was effective on November 30, 2017. The Office of the Federal Coordinator for Meteorological Services and Supporting Research (OFCM) approved the changes to the reporting requirements of small hail and snow pellets in weather observations (METAR/SPECI) to assist commercial operators in deicing operations. 2. Audience. This order applies to all FAA and FAA-contract weather observers, Limited Aviation Weather Reporting Stations (LAWRS) personnel, and Non-Federal Observation (NF-OBS) Program personnel. 3. Where can I Find This Notice? This order is available on the FAA Web site at http://faa.gov/air_traffic/publications and http://employees.faa.gov/tools_resources/orders_notices/. 4. Cancellation. This notice will be cancelled with the publication of the next available change to FAA Order 7900.5D. 5. Procedures/Responsibilities/Action. This Notice amends the following paragraphs and tables in FAA Order 7900.5.

Table 3-2: Remarks Section of Observation

| Element | Paragraph | Brief Description | METAR | SPECI |
|---|---|---|---|---|
| Volcanic Eruptions | 14.20 | Volcanic eruptions must be reported whenever first noted. Pre-eruption activity must not be reported. (Use PIREPs to report pre-eruption activity.) Encode volcanic eruptions as described in Chapter 14. | X | X |
| … | … | Whenever tornadoes, funnel clouds, or waterspouts begin, are in progress, end, or disappear from sight, the event should be described directly after the "RMK" element. | … | … |