Audio Content-Based Music Retrieval

Total Pages: 16

File Type: pdf, Size: 1020 KB


Recommended publications


Conductor, Composer and Violinist Jaakko Kuusisto Enjoys An

Conductor, composer and violinist Jaakko Kuusisto enjoys an extensive career that was launched by a series of successes in international violin competitions in the 1990s. Having recorded concertos by some of the most prominent Finnish contemporary composers, such as Rautavaara, Aho, Sallinen and Pulkkis, he is one of the most frequently recorded Finnish instrumentalists. When BIS Records launched a project of complete Sibelius recordings, Kuusisto had a major role in the violin works and string chamber music. Moreover, his discography contains several albums outside the classical genre. Kuusisto’s conducting repertoire is equally versatile and ranges from baroque to the latest new works, regardless of genre. He has worked with many leading orchestras, including Minnesota Orchestra; Sydney, Melbourne and Adelaide Symphony Orchestras; NDR Hannover, DeFilharmonie in Belgium; Chamber Orchestras of Tallinn and Lausanne; Helsinki, Turku and Tampere Philharmonic Orchestras; Oulu Symphony Orchestra as well as the Avanti! Chamber Orchestra. Kuusisto recently conducted his debut performances with the Krakow Sinfonietta and Ottawa’s National Arts Center Orchestra, as well as at the Finnish National Opera’s major production Indigo, an opera by heavy metal cello group Apocalyptica’s Eicca Toppinen and Perttu Kivilaakso. The score of the opera is also arranged and orchestrated by Kuusisto. Kuusisto performs regularly as soloist and chamber musician and has held the position of artistic director at several festivals. Currently he is the artistic director of the Oulu Music Festival with a commitment presently until 2017. Kuusisto also enjoys a longstanding relationship with the Lahti Symphony Orchestra: having served as concertmaster during 1999-2012, his collaboration with the orchestra now continues with frequent guest conducting and recordings, to name a few. -

2017 MAJOR EURO Music Festival CALENDAR Sziget Festival / MTI Via AP Balazs Mohai

2017 MAJOR EURO Music Festival CALENDAR Sziget Festival / MTI via AP Balazs Mohai Sziget Festival March 26-April 2 Horizon Festival Arinsal, Andorra Web www.horizonfestival.net Artists Floating Points, Motor City Drum Ensemble, Ben UFO, Oneman, Kink, Mala, AJ Tracey, Midland, Craig Charles, Romare, Mumdance, Yussef Kamaal, OM Unit, Riot Jazz, Icicle, Jasper James, Josey Rebelle, Dan Shake, Avalon Emerson, Rockwell, Channel One, Hybrid Minds, Jam Baxter, Technimatic, Cooly G, Courtesy, Eva Lazarus, Marc Pinol, DJ Fra, Guim Lebowski, Scott Garcia, OR:LA, EL-B, Moony, Wayward, Nick Nikolov, Jamie Rodigan, Bahia Haze, Emerald, Sammy B-Side, Etch, Visionobi, Kristy Harper, Joe Raygun, Itoa, Paul Roca, Sekev, Egres, Ghostchant, Boyson, Hampton, Jess Farley, G-Ha, Pixel82, Night Swimmers, Forbes, Charline, Scar Duggy, Mold Me With Joy, Eric Small, Christer Anderson, Carina Helen, Exswitch, Seamus, Bulu, Ikarus, Rodri Pan, Frnch, DB, Bigman Japan, Crawford, Dephex, 1Thirty, Denzel, Sticky Bandit, Kinno, Tenbagg, My Mate From College, Mr Miyagi, SLB Solden, Austria June 9-July 10 DJ Snare, Ambiont, DLR, Doc Scott, Bailey, Doree, Shifty, Dorian, Skore, March 27-April 2 Web www.electric-mountain-festival.com Jazz Fest Vienna Dossa & Locuzzed, Eksman, Emperor, Artists Nervo, Quintino, Michael Feiner, Full Metal Mountain EMX, Elize, Ernestor, Wastenoize, Etherwood, Askery, Rudy & Shany, AfroJack, Bassjackers, Vienna, Austria Hemagor, Austria F4TR4XX, Rapture,Fava, Fred V & Grafix, Ostblockschlampen, Rafitez Web www.jazzfest.wien Frederic Robinson, -

OFFICIAL RULES Summitmedia, LLC Contesting Apocalyptica Plays Metallica Concert Tickets WBPT-FM

OFFICIAL RULES SummitMedia, LLC Contesting Apocalyptica plays Metallica Concert Tickets WBPT-FM The following are the official rules of SummitMedia, LLC (“Sponsor”) for the Apocalyptica plays Metallica Concert Ticket Contest (“Contest”). By participating, each participant agrees as follows: 1. NO PURCHASE IS NECESSARY. Void where prohibited by law. All federal, state, and local regulations apply. 2. ELIGIBILITY. Unless otherwise specified, the Contest is open only to legal U.S. residents age eighteen (18) years or older at the time of entry with a valid Social Security number and who reside in the WBPT-FM listening area. Unless otherwise specified, employees of SummitMedia, LLC , its parent company, affiliates, related entities and subsidiaries, promotional sponsors, prize providers, advertising agencies, other radio stations serving the SummitMedia, LLC radio station’s listening area, and the immediate family members and household members of all such employees are not eligible to participate. The term “immediate family members” includes spouses, parents and step-parents, siblings and step-siblings, and children and stepchildren. The term “household members” refers to people who share the same residence at least three (3) months out of the year. Only one winner per household or family is permitted. There is no limit to the number of times an individual may attempt to enter, but each individual may qualify only once per Contest. An individual who has won more than $500 in a SummitMedia, LLC Contest or Sweepstakes in a particular calendar quarter is not eligible to participate in another SummitMedia, LLC Contest or Sweepstakes in that quarter unless otherwise specifically stated. Entrants may not use an assumed name or alias (other than a screen name where a contest involves use of a social media site). -

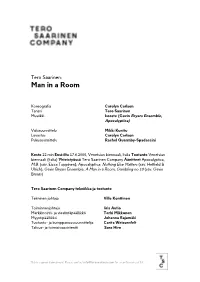

Man in a Room

Tero Saarinen: Man in a Room. Choreography: Carolyn Carlson. Dance: Tero Saarinen. Music: compilation (Gavin Bryars Ensemble, Apocalyptica). Lighting design: Mikki Kunttu. Set design: Carolyn Carlson. Costume design: Rachel Quarmby-Spadaccini. Duration: 22 min. Premiere: 17 June 2000, Venice Biennale, Italy. Production: Venice Biennale (Italy). In cooperation with: Tero Saarinen Company. Recordings: Apocalyptica, M.B. (comp. Eicca Toppinen); Apocalyptica: Nothing Else Matters (comp. Hetfield & Ulrich); Gavin Bryars Ensemble, A Man in a Room, Gambling no 10 (comp. Gavin Bryars). Tero Saarinen Company technical and production staff: Technical Director Ville Konttinen; Managing Director Iiris Autio; Head of Marketing and Communications Terhi Mikkonen; Head of Sales Johanna Rajamäki; Production and Partnership Coordinator Carita Weissenfelt; Finance and Office Assistant Sara Hirn. This is a general document. Please contact [email protected] for a confirmed cast list. Tero Saarinen Company: Founded in 1996, Tero Saarinen Company has already toured in 40 countries on six continents. The group has performed at numerous prestigious festivals and theatres, such as BAM and The Joyce in New York, Chaillot and Châtelet in Paris, and the Royal Festival Hall in London. TSC's activities also include international teaching, community projects, and producing visits by foreign dance companies to Helsinki. In addition, Saarinen collaborates with other renowned dance companies; his works have been performed by the LA Phil, NDT1, Batsheva, Lyon Opéra Ballet, the National Dance Company of Korea

Nothing Else Matters Apocalyptica Metallica for Strings Quartet Sheet Music

Nothing Else Matters Apocalyptica Metallica For Strings Quartet Sheet Music Download nothing else matters apocalyptica metallica for strings quartet sheet music pdf now available in our library. We give you 6 pages partial preview of nothing else matters apocalyptica metallica for strings quartet sheet music that you can try for free. This music notes has been read 38887 times and last read at 2021-09-27 18:00:17. In order to continue read the entire sheet music of nothing else matters apocalyptica metallica for strings quartet you need to signup, download music sheet notes in pdf format also available for offline reading. Instrument: Cello, Violin Ensemble: String Quartet Level: Advanced [ Read Sheet Music ] Other Sheet Music Fade To Black Metallica Apocalyptica Strings Quartet Fade To Black Metallica Apocalyptica Strings Quartet sheet music has been read 13756 times. Fade to black metallica apocalyptica strings quartet arrangement is for Advanced level. The music notes has 6 preview and last read at 2021-09-24 11:31:35. [ Read More ] Nothing Else Matters Apocalyptica Metallica For Violin Quintet Full Score And Parts Nothing Else Matters Apocalyptica Metallica For Violin Quintet Full Score And Parts sheet music has been read 13390 times. Nothing else matters apocalyptica metallica for violin quintet full score and parts arrangement is for Intermediate level. The music notes has 6 preview and last read at 2021-09-22 18:03:42. [ Read More ] Nothing Else Matters Metallica For String Quartet Nothing Else Matters Metallica For String Quartet sheet music has been read 14766 times. Nothing else matters metallica for string quartet arrangement is for Intermediate level. -

MICHIGAN MONTHLY May, 2018 Diane Klakulak, Editor & Publisher

MICHIGAN MONTHLY May, 2018 Diane Klakulak, Editor & Publisher

DETROIT TIGERS – www.tigers.com: 4/30 – 5/2 vs. Tampa Bay Rays; May 3-6 at Kansas City Royals; May 7-9 at Texas Rangers; May 11-13 vs. Seattle Mariners; May 14-16 vs. Cleveland Indians; May 17-20 at Seattle Mariners; May 21-23 at Minnesota Twins; May 25-27 vs. Chicago White Sox; May 28-31 vs. L.A. Angels; June 1-3 vs. Toronto Blue Jays; June 5-7 at Boston Red Sox; June 8-10 vs. Cleveland Indians

FOX THEATRE – 2211 Woodward, Detroit; 313-471-6611; 313presents.com: May 3 Disney Junior Dance Party on Tour; May 5 Festival of Laughs: Sommore, Arnez J; May 8-13 Rodgers & Hammerstein’s The King & I; May 22 Vance Joy, Alice Merton; May 26 Hip Hop Smackdown 5! Kool Moe Dee, Doug E. Fresh, more; June 18 Jake Paul and Team 10; June 24 Jill Scott; June 30 Yes

LITTLE CAESARS ARENA – 313presents.com: May 20 Daryl Hall & John Oates, Train; June 15 Shania Twain; June 22 Sam Smith; June 26 Harry Styles, Kacey Musgraves

MICHIGAN LOTTERY AMPHITHEATRE AT FREEDOM HILL – 14900 Metro Parkway, Sterling Heights; 586-269-9700, www.freedomhill.net: May 27 Slayer, Lamb of God; May 29 Post Malone, 21 Savage; June 8 Jackson Browne; June 9 Primus and Mastodon; June 15 Dirty Heads, Iration; June 16 Jazz Spectacular: Paul Taylor, Michael Lington, more; June 30 Mix 92.3 Summer Breeze: Keith Sweat, En Vogue, more

MOTOR CITY CASINO HOTEL – 2901 Grand River Avenue, Detroit; www.motorcitycasino.com; 313-237-7711; Ticketmaster: May 3 The Clairvoyants; May 4 Howie Mandel; May 6 Anthony Hamilton

DTE ENERGY THEATRE – 7774 Sashabaw Road, Clarkston; 248-377-0100, www.palacenet.com; T.M.

Apocalyptica Inquisition Symphony Download

Apocalyptica Inquisition Symphony Download. album Apocalyptica - Inquisition Symphony (1998) for free, without ads, from fast ... Download the Apocalyptica discography. ... Download whole album*. Inquisition Symphony may refer to: A song that is fifth track on the studio album, Schizophrenia by Sepultura. The title and compilation album, Inquisition Symphony by Apocalyptica, ... Print/export. Download as PDF · Printable version .... Rock music CD release from Apocalyptica with the album Inquisition Symphony. Released on the label Mercury Records. Rock music CD. This hard to find pre- .... Listen to Inquisition Symphony (Live at Download Paris 2016) by Apocalyptica - Live at Download Paris 2016. Deezer: free music streaming. Discover more than .... TicketsVIP Sold Out! 04 Feb 2021 +. Add to Google Calendar · Download iCal. Los Angeles, CA (US) .... Download M.B. by Apocalyptica from Inquisition Symphony. Play M.B. song online for free or download mp3 and listen offline on Wynk Music.. Inquisition Symphony MP3 Song by Apocalyptica from the album Cult. Download Inquisition Symphony song on Gaana.com and listen Cult Inquisition .... Inquisition Symphony Songs - Download Inquisition Symphony mp3 songs to your Hungama account. Get the complete list of ... Harmageddon. Apocalyptica .... 2020/01/06 - dragon ball japanese audio download apocalyptica inquisition symphony rar vs-dvb-t 210se driver alexandra stan mr saxobeat skull iclass 9595x .... Apocalyptica - Inquisition Symphony power tab with free online tab player, speed control and loop. Download original Power tab.. Download MP3. Read more. Inquisition Symphony is the second studio album by the Finnish metal band Apocalyptica. The album branches from their previous .... Apocalyptica is a Finnish band formed by three cellists and, since 2005, a drummer.

Fact Sheet Eng.Pdf

FINLAND IS A NATION OF EXTREMES. THE SYMPHONY OF EXTREMES – BORN FROM FINNISH DNA IS A PROJECT WHERE WE EXAMINE OUR HERITAGE AND TURN IT INTO MUSIC THAT REFLECTS THESE EXTREMES. Finland is a nation of extreme differences. Not only in our seasons and nature, but people as well. To celebrate Finland’s centennial, Visit Finland delves into the nation’s character more vocally than ever before by turning the Finnish genotype into music. SymphonyofExtremes.com EASTERN AND WESTERN FINNS RESEMBLE EACH OTHER LESS THAN GERMANS AND BRITS DO.

WHY? Authenticity is currently a major trend in tourism. Travellers are interested in local people and their ways of life. The Symphony of Extremes is crafted to their needs. Our current age of media spin dictates a need for new ideas that transcend boundaries and attract attention. Ideas that feel genuinely fresh and evocative. The Symphony of Extremes is a project that unites tourism with science and art in a fascinating way. Finland is at the crossroads of east and west. Our roots straddle the border: western Finland is influenced by Europe’s ancient agrarian culture and Scandinavian DNA, while the

WHAT? The Symphony of Extremes is a project where Eicca Toppinen from Apocalyptica composed a song based on DNA samples sourced from Finland. The genre-crossing song will be premiered on 13 November 2017. The project’s first phase followed the composition process and introduced a number of interesting people behind the genes. They each exhibit some extreme and distinctively Finnish trait, such as Finnish grit (“sisu”) or a strong bond with the Arctic. The next stage focuses on the music and its composition. Instead of using generic travel imagery, the project dives deep into Finland’s psyche in order to introduce international audiences to our cultural core.

Ice Rink Platinum Theater Pool Deck

POOL DECK PLATINUM THEATER STUDIO B - ICE RINK SPHYNX LOUNGE (Deck 11-12 midship) (Deck 2-3-4 forward) (Deck 3 midship) (Deck 5 forward) 05:00pm 05:00pm 05.15PM - 06.00PM 05.15PM - 06.00PM EQUILIBRIUM HELSTAR 06:00pm 06:00pm 06.00PM - 06.45PM GOD DETHRONED 07:00pm 06.45PM - 07.45PM 06.45PM - 07.30PM 07:00pm PRETTY MAIDS EINHERJER 07.30PM - 08.30PM 08:00pm 08:00pm THERION 08.15PM - 09.00PM 08.30PM - 09.30PM ENTHRONED 09:00pm PLEASE 09:00pm ANNIHILATOR BE PATIENT WHILE 09.15PM - 10.15PM 10:00pm ARCH ENEMY 09.45PM - 10.30PM 10:00pm WE ARE BUILDING THRESHOLD 10.30PM - 11.30PM 11:00pm THE WORLD’S 11:00pm KATAKLYSM BIGGEST 11.15PM - 12.00AM 11.15PM - 12.30AM HEATHEN 12:00am OPEN AIR STAGE APOCALYPTICA 12:00pm STRUCTURE 12.30PM - 01.30AM 01:00am 12.45AM - 01.30AM 01:00am KORPIKLAANI TO SAIL THE TROUBLE 01.30AM - 02.15AM 02:00am OPEN SEAS PRIMAL FEAR 02:00am 02.15AM - 03.00AM 02.15AM - 03.00AM MELECHESH LAKE OF TEARS 03:00am 03:00am 03.00AM - 03.45AM ALESTORM 04:00am 03.45AM - 04.30AM 04:00am EXUMER 03.00AM - Sunrise 70000TONS OF 04.30AM - 05.15AM KARAOKE 05:00am MASACRE 05:00am 05.15AM - 06.00AM DARK SERMON 06:00am 06:00am POOL DECK PLATINUM THEATER STUDIO B - ICE RINK SPHYNX LOUNGE (Deck 11-12 midship) (Deck 2-3-4 forward) (Deck 3 midship) (Deck 5 forward) 10:00am 10:00am 11:00am 10.45AM - 11.30AM 11:00am TANK 11.00AM - 12.30AM BLIND GUARDIAN NEW ALBUM WORLD 12:00pm PREMIERE LISTENING 12:00pm PARTY PLUS MEET & GREET 12.15PM - 01.00PM GURD 01:00pm 01:00pm 02:00pm 01.45PM - 02.30PM 01.45PM - 03.15PM 02:00pm REFUGE APOCALYPTICA NEW ALBUM WORLD -

Revelation 6:9-11: an Exegesis of the Fifth Seal in the Light of the Problem of the Eschatological Delay

Andrews University Digital Commons @ Andrews University Dissertations Graduate Research 2015 Revelation 6:9-11: An Exegesis of the Fifth Seal in the Light of the Problem of the Eschatological Delay Patrice Allet Andrews University, [email protected] Follow this and additional works at: https://digitalcommons.andrews.edu/dissertations Part of the Biblical Studies Commons Recommended Citation Allet, Patrice, "Revelation 6:9-11: An Exegesis of the Fifth Seal in the Light of the Problem of the Eschatological Delay" (2015). Dissertations. 1574. https://digitalcommons.andrews.edu/dissertations/1574 This Dissertation is brought to you for free and open access by the Graduate Research at Digital Commons @ Andrews University. It has been accepted for inclusion in Dissertations by an authorized administrator of Digital Commons @ Andrews University. For more information, please contact [email protected]. ABSTRACT REVELATION 6:9-11: AN EXEGESIS OF THE FIFTH SEAL IN THE LIGHT OF THE PROBLEM OF THE ESCHATOLOGICAL DELAY by Patrice Allet Adviser: Ranko Stefanovic ABSTRACT OF GRADUATE STUDENT RESEARCH Dissertation Andrews University Seventh-day Adventist Theological Seminary Title: REVELATION 6:9-11: AN EXEGESIS OF THE FIFTH SEAL IN THE LIGHT OF THE PROBLEM OF THE ESCHATOLOGICAL DELAY Name of researcher: Patrice Allet Name and degree of faculty adviser: Ranko Stefanovic, Ph.D. Date completed: January 2015 The fifth seal of Revelation has most often been treated from an anthropological perspective that appears to be clearly inadequate to account for the depth of this trigger passage located in a climactic setting. In and around the fifth seal, the text suggests indeed that the persecution of the last days has occurred. -

The Encyclopaedia Metallica by Malcolm Dome and Jerry Ewing

metallica.indb 1 03/10/2007 10:33:40 The Encyclopaedia Metallica by Malcolm Dome and Jerry Ewing. A CHROME DREAMS PUBLICATION. First Edition 2007. Published by Chrome Dreams, PO BOX 230, New Malden, Surrey, KT3 6YY, UK. [email protected] WWW.CHROMEDREAMS.CO.UK ISBN 9781842404034. Copyright © 2007 by Chrome Dreams. Edited by Cathy Johnstone. Cover Design: Sylwia Grzeszczuk. Layout Design: Marek Niedziewicz. All rights reserved. No part of this book may be reproduced without the written permission of the publishers. A catalogue record for this book is available from the British Library. Printed and bound in Great Britain by William Clowes Ltd, Beccles, Suffolk.

THE AUTHORS WOULD LIKE TO THANK ... the following people for their support and assistance: All at Chrome Dreams. Adair & Roxy. All at TotalRock: Tony Wilson, Thekkles, Tabitha, Emma ‘SF’ Bellamy, The Bat, Ickle Buh, The PMQ, Katie P. Everyone at the Crobar/Evensong: Sir Barrence, The Rector, The Crazy Bitch, The Lonely Doctor, Steve, Rick, Johanna, Bagel, Benjy, Maisie, Laura, Stuart, Steve Hammonds, John Richards, Dave Everley, Bob Slayer, David Kenny, The Unique One, Harjaholic, Al King, Jonty, Orange Chuffin’ Goblin (Baby), Lady E., Smelly Jacques, Anna Maria, Speccy Rachel.

SOURCES FROM WHICH QUOTES HAVE BEEN TAKEN: Kerrang! magazine; Metal Hammer magazine; RAW magazine; Playboy magazine; Q magazine; Guitar World magazine; So What! official Metallica fanzine; www.encyclopedia-metallica.com; www.metallicaworld.co.uk; www.blabbermouth.net; www.metalhamer.co.uk; www.kerrang.com; en.wikipedia.org

KUHLMANN-THESIS-2018.Pdf (1.113Mb)

FEMALE METAL FANS AND THE PERCEIVED POSITIVE EFFECTS OF METAL MUSIC A Thesis Submitted to the College of Graduate and Postdoctoral Studies In Partial Fulfillment of the Requirements For the Degree of Master In the Department of Educational Psychology and Special Education University of Saskatchewan By Anna Noura Kuhlmann Copyright Anna Noura Kuhlmann, January 2018. All rights reserved PERMISSION TO USE In presenting this thesis in partial fulfillment of the requirements for a Postgraduate degree from the University of Saskatchewan, I agree that the Libraries of this University may make it freely available for inspection. I further agree that permission for copying of this thesis in any manner, in whole or in part, for scholarly purposes may be granted by the professor or professors who supervised my thesis work or, in their absence, by the Head of the Department or the Dean of the College in which my thesis work was done. It is understood that any copying or publication or use of this thesis or parts thereof for financial gain shall not be allowed without my written permission. It is also understood that due recognition shall be given to me and to the University of Saskatchewan in any scholarly use which may be made of any material in my thesis. Requests for permission to copy or to make other uses of materials in this thesis in whole or part should be addressed to: Head of the Department of Educational Psychology and Special Education 28 Campus Drive University of Saskatchewan Saskatoon, Saskatchewan, S7N 0X1 Canada OR Dean College of Graduate and Postdoctoral Studies University of Saskatchewan 105 Administration Place Saskatoon, Saskatchewan, S7N 5A2 Canada i ABSTRACT Despite increasing literature that confirms the therapeutic benefits of music, metal music is still stigmatized as sexist, masculine, and detrimental to its fans.