Audio Content-Based Music Retrieval

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Estimating Complex Production Functions: the Importance of Starting Values

Version: January 26 Estimating complex production functions: The importance of starting values Mark Neal Presented at the 51st Australian Agricultural and Resource Economics Society Conference, Queenstown, New Zealand, 14-16 February, 2007. Postdoctoral Research Fellow Risk and Sustainable Management Group University of Queensland Room 519, School of Economics, Colin Clark Building (39) University of Queensland, St Lucia, QLD, 4072 Email: [email protected] Ph: +61 (0) 7 3365 6601 Fax: +61 (0) 7 3365 7299 http://www.uq.edu.au/rsmg Acknowledgements Chris O’Donnell helpfully provided access to Gauss code that he had written for estimation of latent class models so it could be translated into Shazam. Leighton Brough (UQ), Ariel Liebman (UQ) and Tom Pechey (UMelb) helped by organising a workshop with Nimrod/enFuzion at UQ in June 2006. enFuzion (via Rok Sosic) provided a limited license for use in the project. Leighton Brough, tools coordinator for the ARC Centre for Complex Systems (ACCS), assisted greatly in setting up an enFuzion grid and collating the large volume of results. Son Nghiem provided assistance in collating and analysing data as well as in creating some of the figures. ABSTRACT Production functions that take into account uncertainty can be empirically estimated by taking a state contingent view of the world. Where there is no a priori information to allocate data amongst a small number of states, the estimation may be carried out with a finite mixtures model. The complexity of the estimation almost guarantees a large number of local maxima for the likelihood function.

Apple / Shazam Merger Procedure Regulation (EC)

EUROPEAN COMMISSION DG Competition CASE M.8788 – APPLE / SHAZAM (Only the English text is authentic) MERGER PROCEDURE REGULATION (EC) 139/2004 Article 8(1) Regulation (EC) 139/2004 Date: 06/09/2018 This text is made available for information purposes only. A summary of this decision is published in all EU languages in the Official Journal of the European Union. Parts of this text have been edited to ensure that confidential information is not disclosed; those parts are enclosed in square brackets. EUROPEAN COMMISSION Brussels, 6.9.2018 C(2018) 5748 final COMMISSION DECISION of 6.9.2018 declaring a concentration to be compatible with the internal market and the EEA Agreement (Case M.8788 – Apple/Shazam) (Only the English version is authentic)
TABLE OF CONTENTS
1. Introduction
2. The Parties and the Transaction
3. Jurisdiction of the Commission
4. The procedure
5. The investigation
6. Overview of the digital music industry
6.1. The digital music distribution value

Statistics and GIS Assistance Help with Statistics

Statistics and GIS assistance An arrangement for help and advice with regard to statistics and GIS is now in operation, principally for Master’s students. How do you seek advice? 1. The users, i.e. students at INA, make direct contact with the person whom they think can help and arrange a time for consultation. Remember to be well prepared! 2. Doctoral students and postdocs register the time used in Agresso (if you have questions about this contact Gunnar Jensen). Help with statistics Research scientist Even Bergseng Discipline: Forest economy, forest policies, forest models Statistical expertise: Regression analysis, models with random and fixed effects, controlled/truncated data, some time series modelling, parametric and non-parametric effectiveness analyses Software: Stata, Excel Postdoc. Ole Martin Bollandsås Discipline: Forest production, forest inventory Statistics expertise: Regression analysis, sampling Software: SAS, R Associate Professor Sjur Baardsen Discipline: Econometric analysis of markets in the forest sector Statistical expertise: General, although somewhat “rusty”, expertise in many econometric topics (all-rounder) Software: Shazam, Frontier Associate Professor Terje Gobakken Discipline: GIS og long-term predictions Statistical expertise: Regression analysis, ANOVA and PLS regression Software: SAS, R Ph.D. Student Espen Halvorsen Discipline: Forest economy, forest management planning Statistical expertise: OLS, GLS, hypothesis testing, autocorrelation, ANOVA, categorical data, GLM, ANOVA Software: (partly) Shazam, Minitab og JMP Ph.D. Student Jan Vidar Haukeland Discipline: Nature based tourism Statistical expertise: Regression and factor analysis Software: SPSS Associate Professor Olav Høibø Discipline: Wood technology Statistical expertise: Planning of experiments, regression analysis (linear and non-linear), ANOVA, random and non-random effects, categorical data, multivariate analysis Software: R, JMP, Unscrambler, some SAS Ph.D. -

Chapter 1 Introduction

CHAPTER 1 INTRODUCTION This chapter discusses the basic concepts of statistics under the following topics: A. History and Development of Statistics B. Key Contributors to Statistics C. Definition and Concepts of Modern Statistics D. Uses of Statistics E. Branches of Statistics F. Statistics and Computers G. Exercises A. History and Development of Statistics The term statistics has its roots in the Modern Latin statisticum collegium ("council of state") and the Italian statista ("statesman" or "politician"). The term was first used by Gottfried Achenwall (1719-1772), a professor at the universities of Marburg and Göttingen. In 1749 Achenwall first used the German word Statistik as the name for the analysis of data about the state, defining it as "the science of the state". In the early 19th century the meaning shifted to "the science of collecting and classifying data". Sir John Sinclair introduced the name and this sense of statistics into English. E.A.W. Zimmerman brought the word statistics to England. The word was popularized in England by Sir John Sinclair in his work Statistical Account of Scotland 1791-1799. Long before the 18th century, however, people were already recording and using data for their own needs. At first, statistics dealt only with data used by administrative and governmental institutions. Data collection continued, in particular through regular censuses that provided information on an ever-changing population. In government, statistics has been used throughout history since ancient times. The Old Testament records the taking of population censuses. The ancient governments of Babylonia, Egypt, and Rome collected comprehensive data on their populations and natural resources.

Conductor, Composer and Violinist Jaakko Kuusisto Enjoys An

Conductor, composer and violinist Jaakko Kuusisto enjoys an extensive career that was launched by a series of successes in international violin competitions in the 1990s. Having recorded concertos by some of the most prominent Finnish contemporary composers, such as Rautavaara, Aho, Sallinen and Pulkkis, he is one of the most frequently recorded Finnish instrumentalists. When BIS Records launched a project of complete Sibelius recordings, Kuusisto had a major role in the violin works and string chamber music. Moreover, his discography contains several albums outside the classical genre. Kuusisto’s conducting repertoire is equally versatile and ranges from baroque to the latest new works, regardless of genre. He has worked with many leading orchestras, including Minnesota Orchestra; Sydney, Melbourne and Adelaide Symphony Orchestras; NDR Hannover, DeFilharmonie in Belgium; Chamber Orchestras of Tallinn and Lausanne; Helsinki, Turku and Tampere Philharmonic Orchestras; Oulu Symphony Orchestra as well as the Avanti! Chamber Orchestra. Kuusisto recently conducted his debut performances with the Krakow Sinfonietta and Ottawa’s National Arts Center Orchestra, as well as at the Finnish National Opera’s major production Indigo, an opera by heavy metal cello group Apocalyptica’s Eicca Toppinen and Perttu Kivilaakso. The score of the opera is also arranged and orchestrated by Kuusisto. Kuusisto performs regularly as soloist and chamber musician and has held the position of artistic director at several festivals. Currently he is the artistic director of the Oulu Music Festival with a commitment presently until 2017. Kuusisto also enjoys a longstanding relationship with the Lahti Symphony Orchestra: having served as concertmaster during 1999-2012, his collaboration with the orchestra now continues with frequent guest conducting and recordings, to name a few. -

Reliability of Programming Software: Comparison of SHAZAM and SAS

Reliability of Programming Software: Comparison of SHAZAM and SAS. Oluwarotimi Odeh Department of Agricultural Economics, Kansas State University, Manhattan, KS 66506-4011 Phone: (785)-532-4438 Fax: (785)-523-6925 Email: [email protected] Allen M. Featherstone Department of Agricultural Economics, Kansas State University, Manhattan, KS 66506-4011 Phone: (785)-532-4441 Fax: (785)-523-6925 Email: [email protected] Selected Paper for Presentation at the Western Agricultural Economics Association Annual Meeting, Honolulu, HI, June 30-July 2, 2004 Copyright 2004 by Odeh and Featherstone. All rights reserved. Readers may make verbatim copies for commercial purposes by any means, provided that this copyright notice appears on all such copies. Introduction The ability to combine quantitative methods, econometric techniques, theory and data to analyze societal problems has become one of the major strengths of agricultural economics. The inability of agricultural economists to perform this task perfectly in some cases has been linked to the fragility of econometric results (Leamer, 1983; Tomek, 1993). While small changes in model specification may result in considerable impact and changes in empirical results, Hendry and Richard (1982) have shown that two models of the same relationship may produce contradictory results. Results like these weaken the value of applied econometrics (Tomek, 1993). Since the study by Tice and Kletke (1984), computer programming software has undergone tremendous improvements. However, experience in recent times has shown that available software packages are not foolproof and may not be as efficient and consistent as researchers often assume. Compounding errors, convergence problems, and errors due to how software reads, interprets and processes data affect the values of analytical results (see Tomek, 1993; Dewald, Thursby and Anderson, 1986).

Peer Institution Research: Recommendations and Trends 2016

Peer Institution Research: Recommendations and Trends 2016 New Mexico State University Abstract This report evaluates the common technology services from New Mexico State University’s 15 peer institutions. Based on the findings, a summary of recommendations and trends are explained within each of the general areas researched: peer institution enrollment, technology fees, student computing, software, help desk services, classroom technology, equipment checkout and loan programs, committees and governing bodies on technology, student and faculty support, printing, emerging technologies and trends, homepage look & feel and ease of navigation, UNM and UTEP my.nmsu.edu comparison, top IT issues, and IT organization charts.
Table of Contents
Peer Institution Enrollment
Technology Fees
Student Computing
Software
Help Desk Services
Classroom Technology
Equipment Checkout and Loan Programs

Insight MFR By

Manufacturers, Publishers and Suppliers by Product Category 11/6/2017 10/100 Hubs & Switches ASCEND COMMUNICATIONS CIS SECURE COMPUTING INC DIGIUM GEAR HEAD 1 TRIPPLITE ASUS Cisco Press D‐LINK SYSTEMS GEFEN 1VISION SOFTWARE ATEN TECHNOLOGY CISCO SYSTEMS DUALCOMM TECHNOLOGY, INC. GEIST 3COM ATLAS SOUND CLEAR CUBE DYCONN GEOVISION INC. 4XEM CORP. ATLONA CLEARSOUNDS DYNEX PRODUCTS GIGAFAST 8E6 TECHNOLOGIES ATTO TECHNOLOGY CNET TECHNOLOGY EATON GIGAMON SYSTEMS LLC AAXEON TECHNOLOGIES LLC. AUDIOCODES, INC. CODE GREEN NETWORKS E‐CORPORATEGIFTS.COM, INC. GLOBAL MARKETING ACCELL AUDIOVOX CODI INC EDGECORE GOLDENRAM ACCELLION AVAYA COMMAND COMMUNICATIONS EDITSHARE LLC GREAT BAY SOFTWARE INC. ACER AMERICA AVENVIEW CORP COMMUNICATION DEVICES INC. EMC GRIFFIN TECHNOLOGY ACTI CORPORATION AVOCENT COMNET ENDACE USA H3C Technology ADAPTEC AVOCENT‐EMERSON COMPELLENT ENGENIUS HALL RESEARCH ADC KENTROX AVTECH CORPORATION COMPREHENSIVE CABLE ENTERASYS NETWORKS HAVIS SHIELD ADC TELECOMMUNICATIONS AXIOM MEMORY COMPU‐CALL, INC EPIPHAN SYSTEMS HAWKING TECHNOLOGY ADDERTECHNOLOGY AXIS COMMUNICATIONS COMPUTER LAB EQUINOX SYSTEMS HERITAGE TRAVELWARE ADD‐ON COMPUTER PERIPHERALS AZIO CORPORATION COMPUTERLINKS ETHERNET DIRECT HEWLETT PACKARD ENTERPRISE ADDON STORE B & B ELECTRONICS COMTROL ETHERWAN HIKVISION DIGITAL TECHNOLOGY CO. LT ADESSO BELDEN CONNECTGEAR EVANS CONSOLES HITACHI ADTRAN BELKIN COMPONENTS CONNECTPRO EVGA.COM HITACHI DATA SYSTEMS ADVANTECH AUTOMATION CORP. BIDUL & CO CONSTANT TECHNOLOGIES INC Exablaze HOO TOO INC AEROHIVE NETWORKS BLACK BOX COOL GEAR EXACQ TECHNOLOGIES INC HP AJA VIDEO SYSTEMS BLACKMAGIC DESIGN USA CP TECHNOLOGIES EXFO INC HP INC ALCATEL BLADE NETWORK TECHNOLOGIES CPS EXTREME NETWORKS HUAWEI ALCATEL LUCENT BLONDER TONGUE LABORATORIES CREATIVE LABS EXTRON HUAWEI SYMANTEC TECHNOLOGIES ALLIED TELESIS BLUE COAT SYSTEMS CRESTRON ELECTRONICS F5 NETWORKS IBM ALLOY COMPUTER PRODUCTS LLC BOSCH SECURITY CTC UNION TECHNOLOGIES CO FELLOWES ICOMTECH INC ALTINEX, INC. -

2017 Major Euro Music Festival Calendar

2017 MAJOR EURO Music Festival CALENDAR Sziget Festival / MTI via AP Balazs Mohai Sziget Festival March 26-April 2 Horizon Festival Arinsal, Andorra Web www.horizonfestival.net Artists Floating Points, Motor City Drum Ensemble, Ben UFO, Oneman, Kink, Mala, AJ Tracey, Midland, Craig Charles, Romare, Mumdance, Yussef Kamaal, OM Unit, Riot Jazz, Icicle, Jasper James, Josey Rebelle, Dan Shake, Avalon Emerson, Rockwell, Channel One, Hybrid Minds, Jam Baxter, Technimatic, Cooly G, Courtesy, Eva Lazarus, Marc Pinol, DJ Fra, Guim Lebowski, Scott Garcia, OR:LA, EL-B, Moony, Wayward, Nick Nikolov, Jamie Rodigan, Bahia Haze, Emerald, Sammy B-Side, Etch, Visionobi, Kristy Harper, Joe Raygun, Itoa, Paul Roca, Sekev, Egres, Ghostchant, Boyson, Hampton, Jess Farley, G-Ha, Pixel82, Night Swimmers, Forbes, Charline, Scar Duggy, Mold Me With Joy, Eric Small, Christer Anderson, Carina Helen, Exswitch, Seamus, Bulu, Ikarus, Rodri Pan, Frnch, DB, Bigman Japan, Crawford, Dephex, 1Thirty, Denzel, Sticky Bandit, Kinno, Tenbagg, My Mate From College, Mr Miyagi, SLB Solden, Austria June 9-July 10 DJ Snare, Ambiont, DLR, Doc Scott, Bailey, Doree, Shifty, Dorian, Skore, March 27-April 2 Web www.electric-mountain-festival.com Jazz Fest Vienna Dossa & Locuzzed, Eksman, Emperor, Artists Nervo, Quintino, Michael Feiner, Full Metal Mountain EMX, Elize, Ernestor, Wastenoize, Etherwood, Askery, Rudy & Shany, AfroJack, Bassjackers, Vienna, Austria Hemagor, Austria F4TR4XX, Rapture,Fava, Fred V & Grafix, Ostblockschlampen, Rafitez Web www.jazzfest.wien Frederic Robinson, -

OFFICIAL RULES SummitMedia, LLC Contesting Apocalyptica Plays Metallica Concert Tickets WBPT-FM

OFFICIAL RULES SummitMedia, LLC Contesting Apocalyptica plays Metallica Concert Tickets WBPT-FM The following are the official rules of SummitMedia, LLC (“Sponsor”) for the Apocalyptica plays Metallica Concert Ticket Contest (“Contest”). By participating, each participant agrees as follows: 1. NO PURCHASE IS NECESSARY. Void where prohibited by law. All federal, state, and local regulations apply. 2. ELIGIBILITY. Unless otherwise specified, the Contest is open only to legal U.S. residents age eighteen (18) years or older at the time of entry with a valid Social Security number and who reside in the WBPT-FM listening area. Unless otherwise specified, employees of SummitMedia, LLC , its parent company, affiliates, related entities and subsidiaries, promotional sponsors, prize providers, advertising agencies, other radio stations serving the SummitMedia, LLC radio station’s listening area, and the immediate family members and household members of all such employees are not eligible to participate. The term “immediate family members” includes spouses, parents and step-parents, siblings and step-siblings, and children and stepchildren. The term “household members” refers to people who share the same residence at least three (3) months out of the year. Only one winner per household or family is permitted. There is no limit to the number of times an individual may attempt to enter, but each individual may qualify only once per Contest. An individual who has won more than $500 in a SummitMedia, LLC Contest or Sweepstakes in a particular calendar quarter is not eligible to participate in another SummitMedia, LLC Contest or Sweepstakes in that quarter unless otherwise specifically stated. Entrants may not use an assumed name or alias (other than a screen name where a contest involves use of a social media site). -

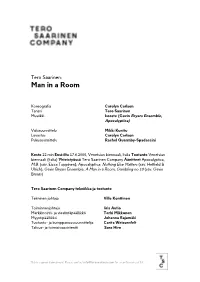

Man in a Room

Tero Saarinen: Man in a Room
Choreography: Carolyn Carlson
Dance: Tero Saarinen
Music: compilation (Gavin Bryars Ensemble, Apocalyptica)
Lighting design: Mikki Kunttu
Set design: Carolyn Carlson
Costume design: Rachel Quarmby-Spadaccini
Duration: 22 min
Premiere: 17 June 2000, Venice Biennale, Italy
Production: Venice Biennale (Italy)
In cooperation with: Tero Saarinen Company
Recordings: Apocalyptica, M.B. (comp. Eicca Toppinen); Apocalyptica: Nothing Else Matters (comp. Hetfield & Ulrich); Gavin Bryars Ensemble, A Man in a Room, Gambling no 10 (comp. Gavin Bryars)
Tero Saarinen Company technical and production staff
Technical Director: Ville Konttinen
Managing Director: Iiris Autio
Head of Marketing and Communications: Terhi Mikkonen
Sales Manager: Johanna Rajamäki
Production and Partnerships Planner: Carita Weissenfelt
Finance and Office Assistant: Sara Hirn
This is a general document. Please contact [email protected] for a confirmed cast list.
Tero Saarinen Company
Founded in 1996, Tero Saarinen Company has already toured in 40 countries on six continents. The group has performed at numerous prestigious festivals and theatres, such as BAM and The Joyce in New York, Chaillot and Châtelet in Paris, and the Royal Festival Hall in London. TSC's activities also include international teaching, community projects, and producing visits by foreign dance companies to Helsinki. In addition, Saarinen collaborates with other renowned dance companies; his works have been performed by the LA Phil, NDT1, Batsheva, Lyon Opéra Ballet, the National Dance Company of Korea

Nothing Else Matters Apocalyptica Metallica for Strings Quartet Sheet Music

Nothing Else Matters Apocalyptica Metallica For Strings Quartet Sheet Music Download nothing else matters apocalyptica metallica for strings quartet sheet music pdf now available in our library. We give you 6 pages partial preview of nothing else matters apocalyptica metallica for strings quartet sheet music that you can try for free. This music notes has been read 38887 times and last read at 2021-09-27 18:00:17. In order to continue read the entire sheet music of nothing else matters apocalyptica metallica for strings quartet you need to signup, download music sheet notes in pdf format also available for offline reading. Instrument: Cello, Violin Ensemble: String Quartet Level: Advanced [ Read Sheet Music ] Other Sheet Music Fade To Black Metallica Apocalyptica Strings Quartet Fade To Black Metallica Apocalyptica Strings Quartet sheet music has been read 13756 times. Fade to black metallica apocalyptica strings quartet arrangement is for Advanced level. The music notes has 6 preview and last read at 2021-09-24 11:31:35. [ Read More ] Nothing Else Matters Apocalyptica Metallica For Violin Quintet Full Score And Parts Nothing Else Matters Apocalyptica Metallica For Violin Quintet Full Score And Parts sheet music has been read 13390 times. Nothing else matters apocalyptica metallica for violin quintet full score and parts arrangement is for Intermediate level. The music notes has 6 preview and last read at 2021-09-22 18:03:42. [ Read More ] Nothing Else Matters Metallica For String Quartet Nothing Else Matters Metallica For String Quartet sheet music has been read 14766 times. Nothing else matters metallica for string quartet arrangement is for Intermediate level.