Brian J. Filko 2011

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Stanley: the Robot That Won the DARPA Grand Challenge

STANLEY Winning the DARPA Grand Challenge with an AI Robot_ Michael Montemerlo, Sebastian Thrun, Hendrik Dahlkamp, David Stavens Stanford AI Lab, Stanford University 353 Serra Mall Stanford, CA 94305-9010 fmmde,thrun,dahlkamp,[email protected] Sven Strohband Volkswagen of America, Inc. Electronics Research Laboratory 4009 Miranda Avenue, Suite 150 Palo Alto, California 94304 [email protected] http://www.cs.stanford.edu/people/dstavens/aaai06/montemerlo_etal_aaai06.pdf Stanley: The Robot that Won the DARPA Grand Challenge Sebastian Thrun, Mike Montemerlo, Hendrik Dahlkamp, David Stavens, Andrei Aron, James Diebel, Philip Fong, John Gale, Morgan Halpenny, Gabriel Hoffmann, Kenny Lau, Celia Oakley, Mark Palatucci, Vaughan Pratt, and Pascal Stang Stanford Artificial Intelligence Laboratory Stanford University Stanford, California 94305 http://robots.stanford.edu/papers/thrun.stanley05.pdf DARPA Grand Challenge: Final Part 1 Stanley from Stanford 10.54 https://www.youtube.com/watch?v=M2AcMnfzpNg Sebastian Thrun helped build Google's amazing driverless car, powered by a very personal quest to save lives and reduce traffic accidents. 4 minutes https://www.ted.com/talks/sebastian_thrun_google_s_driverless_car THE GREAT ROBOT RACE – documentary Published on Jan 21, 2016 DARPA Grand Challenge—a raucous race for robotic, driverless vehicles sponsored by the Pentagon, which awards a $2 million purse to the winning team. Armed with artificial intelligence, laser-guided vision, GPS navigation, and 3-D mapping systems, the contenders are some of the world's most advanced robots. Yet even their formidable technology and mechanical prowess may not be enough to overcome the grueling 130-mile course through Nevada's desert terrain. From concept to construction to the final competition, "The Great Robot Race" delivers the absorbing inside story of clever engineers and their unyielding drive to create a champion, capturing the only aerial footage that exists of the Grand Challenge. -

Mapping Planetary Caves with an Autonomous, Heterogeneous Robot Team

Mapping Planetary Caves with an Autonomous, Heterogeneous Robot Team Ammar Husain Heather Jones Balajee Kannan Robotics Institute Robotics Institute GE Global Research Carnegie Mellon University Carnegie Mellon University Schenectady, NY 12309 Pittsburgh, PA 15213 Pittsburgh, PA 15213 [email protected] [email protected] [email protected] Uland Wong Tiago Pimentel Martins da Silva Sarah Tang Robotics Institute Mechatronics Engineering Dept. Mechanical and Aerospace Eng. Dept. Carnegie Mellon University University of Brasilia Princeton University Pittsburgh, PA 15213 Brasilia, DF, Brazil Princeton, NJ 08544 [email protected] [email protected] [email protected] Shreyansh Daftry Steven Huber William L. “Red” Whittaker Electronics and Communication Dept. Astrobotic Technology, Inc. Robotics Institute Manipal Institute of Technology Pittsburgh, PA 15213 Carnegie Mellon University Manipal, Karnataka, India [email protected] Pittsburgh, PA 15213 [email protected] [email protected] Abstract— d Caves on other planetary bodies offer sheltered habitat for future human explorers and numerous clues to a planet’s past for scientists. While recent orbital imagery pro- vides exciting new details about cave entrances on the Moon and Mars, the interiors of these caves are still unknown and not observable from orbit. Multi-robot teams offer unique solutions for exploration and modeling subsurface voids during precursor missions. Robot teams that are diverse in terms of size, mobility, sensing, and capability can provide great advantages, but this diversity, coupled with inherently distinct low-level behavior (a) Lunar skylight (b) Skylight in a funnel- architectures, makes coordination a challenge. This paper shaped pit on Mars presents a framework that consists of an autonomous frontier and capability-based task generator, a distributed market-based Figure 1. -

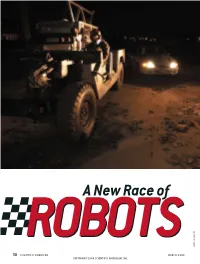

A New Race of ROBOTS

A New Race of ROBOTSROBOTS SCOTT GOLDSMITH 58 SCIENTIFIC AMERICAN MARCH 2004 COPYRIGHT 2004 SCIENTIFIC AMERICAN, INC. DECEMBER 2, 2003: The Red Team prepares its robotic vehicle, Sandstorm, for its maiden voyage. As Nick Miller, one of several dozen Carnegie Mellon University undergraduates on the team, drives the robot around a test loop between abandoned steel mills in Pittsburgh, onboard computers (in metal box) record the test path. Five days later the robot drives the loop with no one at the wheel. Around the U.S., engineers are finishing one-year crash projects to create robots able to dash 200 miles through the Mojave Desert in a day, unaided by humans. Scientific American tailed the odds-on favorite team for 10 months and found that major innovations in robotics are not enough to win such a contest. Obsession is also required BY W. WAYT GIBBS PITTSBURGH, DECEMBER 10, 2003: A cold rain blows sideways through the night into the face of Chris Urmson as he frets over Sandstorm, the robotic vehicle idling next to him on an overgrown lot between two empty steel mills. Urmson checks a tarp protecting the metal cage full of computers and custom electronics that serves as the sensate head of the chimeric robot, which has the body of an old Marine Corps Humvee. His ungloved hands shivering and his body aching from three sleep-deprived days and nights of work in the field, Urmson stares glumly at the machine and weighs his options. None of them are good. www.sciam.com SCIENTIFIC AMERICAN 59 COPYRIGHT 2004 SCIENTIFIC AMERICAN, INC. -

Reid Simmons

Pittsburgh PA 1:01:53 m4v Reid Simmons An interview conducted by Peter Asaro with Selma Šabanović November 23 2010 Q: We’ll just start by asking where you were born and where you grew up? Reid Simmons: Okay. I was born in Akron, Ohio, lived there for two years and then moved to Buffalo, New York, where I spent most of my formative years. I was an undergraduate at University of Buffalo, so hometown and then went on to spent a year working for a company in Ann Arbor doing computer design, graphics, and then moved to MIT to do my graduate work in artificial intelligence. Q: And when did you first encounter robotics? Reid Simmons: When I first encountered robotics, so Rod Brooks was at MIT at the time and he was very active doing robotics, so a number of his grad students were contemporaries of mine and I saw what they were doing and they had robots running around the lab, but I was kind of a good old-fashioned AI guy and didn’t actually do anything in robotics during my graduate work. When I graduated, I was intended to go into industrial research and that’s where I spent most of my time looking for jobs and then towards the very end of my job search, I get a call out of the blue from Tom Mitchell who along with Red Whittaker and Takeo Kanade had landed a fairly large NASA contract to build a prototype Mars rover and Red was going to do the mechanism and control and Takeo was going to be doing the perception and Tom was going to be doing the AI. -

Feigenbaum Prize 2011

Association for the Advancement of Artificial Intelligence 445 Burgess Drive Menlo Park, CA 94025 (650) 328-3123 www.aaai.org For press inquiries only, contact: Carol Hamilton (650) 328-3123 [email protected] FOR IMMEDIATE RELEASE AAAI Awards Inaugural Feigenbaum Prize Menlo Park, CA – May 23, 2011. The Association for the Advancement of Artificial Intelligence (AAAI) is delighted to announce that Sebastian Thrun, Professor of Computer Science and Electrical Engineering and Director of the Stanford AI Lab at Stanford University, and William A. “Red” Whittaker, Professor, Robotics Institute at Carnegie Mellon University, have been selected as the joint winners of the inaugural 2011 AAAI Feigenbaum Prize. Thrun and Whittaker, whose teams won the 2005 DARPA Grand Challenge and 2007 DARPA Urban Challenge, respectively, are being recognized in particular for high-impact contributions to the field of artificial intelligence through innovation and achievement in autonomous vehicle research, transitioning the concept of an autonomous vehicle from the realm of fiction to reality. “The shared award recognizes the technical leadership and vision of Red and Sebastian who worked with their respective teams at CMU and Stanford to solve challenging problems with machine perception, learning, and decision making in the open world,” said, Eric Horvitz, Chair of the Feigenbaum Prize Committee and the Immediate Past President of AAAI. The associated cash prize of $10,000 is provided by the Feigenbaum Nii Foundation. The prize will be presented during the opening ceremony of AAAI-11, to be held Tuesday, August 9, at the Hyatt Regency San Francisco. The AAAI Feigenbaum Prize was established in 2010 and is awarded biennially to recognize and encourage outstanding Artificial Intelligence research advances that are made by using experimental methods of computer science. -

KVH Gyros Used in Carnegie Mellon ''Red Team'' Quest for Victory in DARPA Grand Challenge

KVH Gyros Used in Carnegie Mellon ''Red Team'' Quest for Victory in DARPA Grand Challenge June 16, 2005 KVH's Fiber Optic Gyros Helping Carnegie Mellon University in $2 Million Robotic Vehicle Race Across Mojave Desert MIDDLETOWN, R.I., Jun 16, 2005 (BUSINESS WIRE) -- On October 8, 2005, Carnegie Mellon University's Red Team will compete in the Defense Advanced Research Projects Agency's (DARPA) Grand Challenge. The objective: successfully race two driverless Hummers against other teams across 175 miles of Mojave Desert terrain in ten hours or less. It is the ultimate challenge in robotics engineering with a winner-take-all prize of $2 million. The one catch- the vehicles have no drivers. Created in response to a Congressional and Department of Defense mandate, the DARPA Grand Challenge is a field test proposed to expedite research and development of autonomous ground vehicles to assist on the battlefield. The teams are using fiber optic gyros (FOGs) from KVH Industries, Inc., (Nasdaq: KVHI), a Red Team Silver Sponsor, to help them go the distance. "KVH components provide outstanding performance for stabilizing and pointing our sensors," said Red Whittaker, Team Leader for Carnegie Mellon University's Robotics Institute. "Red Team chose KVH products due to a cost vs. performance equation. KVH was also chosen due to its recognized industry expertise in dynamic sensing-related projects and its long track record of providing sensing equipment at Carnegie Mellon University." KVH's DSP-3000 FOGs are used on a stabilized sensor platform to sense pitch, roll, and yaw angular rates relative to the vehicle's position. -

Utilization of Position and Orientation Data for Preplanning and Real Time Autonomous Vehicle Navigation

Utilization of Position and Orientation Data for Preplanning and Real Time Autonomous Vehicle Navigation Dr. William "Red" Whittaker, Carnegie Mellon University, Pittsburgh, Pennsylvania Louis Nastro, Applanix Corporation, Richmond Hill, Ontario, Canada BIOGRAPHIES environments and demonstrates how position and orientation information is used for pre-planning. Dr. William "Red" Whittaker leads the Robot City development effort. He is the Fredkin Research INTRODUCTION Professor of Robotics at Carnegie Mellon University 's Robotics Institute and the founder of the Field Robotics This paper addresses the problem of how to achieve Center and the Robotics Engineering Consortium. Dr. reliable and repeatable positioning data and how to use Whittaker received his BS from Princeton. MS and that for maximizing the performance of autonomous PhD are from Carnegie Mellon University. vehicles. Robust positioning (which is the ability of a positioning system to maintain accurate position Louis Nastro received his BA (Hons) degree from York information even during GPS outages), is a necessary University in 1994. He also received MA in Strategic component of successfully navigating the vehicle. Studies and MS. from the Odegard School of However, accurate orientation of the vehicle to derive Aerospace Sciences, University of North Dakota. He is very precise measures of vehicle dynamics for both pre- Director of Land Products at Applanix Corporation. planning functions and real time navigation are absolutely essential to provide onboard sensors with ABSTRACT relevant data to steer autonomous vehicles on their intended track, and deal with unanticipated conditions Pre-planning information about terrain is as important upon routes. as real time navigation for achieving peak performance in autonomous driving. -

Download This Issue

Princeton M Alumni Weekly February 8, 2012 Alumni compete in race to the moon Princeton’s changing restaurant scene Occupy Princeton Ellie Kemper’02 Web exclusives and breaking news @ paw.princeton.edu Princeton Alumni Weekly An editorially independent magazine by alumni for alumni since 1900 FEBRUARY 8, 2012 VOLUME 112 NUMBER 7 President’s Page 2 Inbox 3 From the Editor 5 B E T George F. Kennan ’25, T Campus Notebook 12 M A N whose papers are in N Occupy Princeton • ’11 career choices / C O R Mudd Library; page 44 B I • Report findings shed light on S student perceptions • Farewell to a Firestone tradition • University pays more to towns • BREAKING GROUND: Funny Girl 26 3-D sound • Changing restaurant From Princeton’s stages to television and film, actress and writer scene • Historical photos online • Ellie Kemper ’02 brings a fresh voice to comedy. ON THE CAMPUS: Women’s leadership, By Mark F. Bernstein ’83 redux • Funny scientists • FROM PRINCETON’S VAULT: Tiger, Tiger • More Sports 24 Fly me to the moon 32 A special bond in basketball • EXTRA Two Princetonians are competing in a race to the moon — and with POINT: Reversal of fortune for wrestling a $20 million prize, there’s more than ego at stake. A Moment With 37 By Kenneth Chang ’87 Professor Maurizio Viroli, on Italy and Berlusconi Alumni Scene 38 Kate Finn ’06 works on behalf of What’s n ew @ PAW ONLINE assault victims • STARTING OUT: Emma Bedard ’11 • TIGER PROFILE: History Bowl HISTORY QUIZ Rally ’Round the Cannon founder David Madden ’03 • READING Test your skills with five Gregg Lange ’70 looks at ROOM: Alan Lightman ’70 considers zingers from History Bee a concrete symbol of science and G od • Max Gomez ’73 founder David Madden ’03. -

Forging the Future Lessons from Pittsburgh, Pennsylvania

FORGING THE FUTURE LESSONS FROM PITTSBURGH, PENNSYLVANIA Introduction Transition Pennsylvania’s second largest city, Pittsburgh is traditionally The groundwork for Pittsburgh’s transformation was laid known for its steel industry, NFL team the ‘Steelers’ and as during the 1980’s when economic hardship inspired the city the ‘City of Bridges’. Over the past two decades, Pittsburgh to emphasise its educational institutions as pillars of strength. has become an archetype for industrial transformation; from Pittsburgh’s universities worked together to establish new a declining and polluted industry powerhouse to an institutes that would raise the standard of education for the increasingly environmentally friendly tech hub. next generation and safeguard the region’s economy. If there is one lesson that came out of the 1980’s in History Pittsburgh, it is that you need to diversify. Pittsburgh experienced its first boom as a commercial city Joel Tarr, Pittsburgh historian, Carnegie Mellon University3 after the American Revolution. A vibrant port developed at the intersection of Pittsburgh’s three major rivers and the In 1979, Carnegie Mellon University (CMU) was the first arrival of the Pennsylvania Railroad allowed industry to grow university to open a Robotics Institute. The institute was rapidly. Pittsburgh’s dominance in the crucible steel industry began in the mid 1800’s. At its peak, the region produced Employment EMPLOYMENT GROWTH 1 growth (%) nearly three quarters of the nation’s output. Index = FY1990 40% During the Great Depression, Pittsburgh’s industrial PITTSBURGH PENNSYLVANIA production fell by more than 50 per cent and the city’s 30% USA workforce took a severe hit.2 Pittsburgh’s role supplying materials during World War II revived the economy and, 20% although the city suffered from stifling air pollution, industrial production created jobs and supported a blue-collar middle 10% class. -

AI MAGAZINE AAAI News

AAAI News Congratulations to the 2011 AAAI Award Winners! Henry Kautz, AAAI President, and Eric AAAI News Horvitz, AAAI Past President and Awards Committee Chair, presented the AAAI Awards in August at AAAI-11 Fall News from the in San Francisco. Association for the Advancement Classic Paper Award of Artificial Intelligence The 2011 AAAI Classic Paper Award was given to the authors of the most influential papers from the Tenth Na - tional Conference on Artificial Intelli - gence, held in 1992 in San Jose, Cali - fornia. The award was presented to Mitchell received his BSc in cognitive process. The winning papers were se - Hector Levesque, David Mitchell, and science and artificial intelligence at lected by the program chairs with the Bart Selman for their two papers, Hard the University of Toronto, his MSc in help of area chairs and members of the and Easy Distribution of SAT Problems computing science from Simon Fraser senior program committee. Honors and A New Method for Solving Hard University, and his PhD in computer went to Jessica Davies (University of Satisfiability Problems. These papers science from the University of Toron - Toronto), George Katsirelos (LRI, Uni - were honored for their significant con - tributions to the area of automated to. versité Paris Sud 11), Nina Narodytska reasoning via methods and analyses Bart Selman is a professor of com - (NICTA and University of New South on satisfiability, providing founda - puter science at Cornell University. He Wales), and Toby Walsh (NICTA and tional insights about constraint satis - previously was at AT&T Bell Laborato - University of New South Wales) for faction and search. -

The Boss Robot Developed by Carnegie Mellon's Tartan Racing

The Boss robot developed by Carnegie Mellon’s Tartan Racing team is dominating the automated driving landscape. ECONOMICS 25 CARNEGIE MELLON UNIVERSITY TOOK THE TOP PRIZE IN LAST FALL’S DRIVERLESS ROBOT COMPETITION; NOW NATIONAL CORPORATIONS AND LOCAL FOUNDATIONS WANT TO APPLY THE NEW TECHNOLOGY IN WAYS THAT WILL MAKE DRIVING AND OTHER TASKS SAFER WHILE EXPANDING ECONOMIC OPPORTUNITIES IN THE PITTSBURGH REGION. BY CARMEN J. LEE AND C. D. JOHNSON PHOTOS BY TARTAN RACING 26 n Pittsburgh’s South Side, there’s an old locomotive roundhouse — a hodgepodge of brick, wood and sheetmetal that’s weathered and discolored with age — where two SUVs sit loaded with enough technology to catapult the evolution of the car to levels only dreamed of not too long ago. The 2007 Chevy Tahoes — each dubbed “Boss”— look like racing contenders with large sponsor logos emblazoned on their two-toned, black-and-blue exteriors. But the real action takes place in the elaborate network of software and hardware that stretches from a bank of computers in the rear of the vehicles to control monitors at the front and in the high-tech sensors strategically attached to various places on the bodies. Using lasers, radar and global positioning systems, the two SUVs drive themselves with such precision that the Carnegie Mellon University team responsible for transforming them into driverless robots won the $2 million fi rst prize at the Urban Challenge Robotics Competition in November. The race across a 52.8-mile cityscape course in Victorville, Calif., was sponsored by the Pentagon’s Defense Advanced Research Project Agency, or DARPA. -

Christopher Urmson 5517 Phillips Avenue Pittsburgh, PA 15217 (412) 421-2701 [email protected]

Christopher Urmson 5517 Phillips Avenue Pittsburgh, PA 15217 (412) 421-2701 [email protected] Research Interests Autonomous vehicles Algorithms for perception and navigation Software architectures for autonomous systems Education Ph.D. Robotics: Navigation Regimes for Off-Road Autonomy (2005) Carnegie Mellon University, Pittsburgh, PA. Advisors: Prof. William “Red” Whittaker and Prof. Reid Simmons B.Sc. Computer Engineering with Distinction (1998) University of Manitoba, Winnipeg, Canada. CGPA: 4.25/4.5 Professional Experience Director of Technology for the Urban Challenge (June 2006 – Present) Robotics Institute, Carnegie Mellon University Robotics Research Scientist (November 2004 – June 2006) Advanced Concepts Business Unit, Science Applications International Corporation Adjunct Faculty (March 2005 – June 2006) Robotics Institute, Carnegie Mellon University Red Team Technical Leader (August 2003 – May 2004) Robotics Institute, Carnegie Mellon University Research Assistant ( 1998 – 2005) Robotics Institute, Carnegie Mellon University Research Experience Research Program for Caterpillar Inc. (co-PI) The research topic and details for this work are protected by a non-disclosure agreement. Autonomous Urban Driving (Director of Technology) Tartan Racing, DARPA Urban Challenge This project developed a complete autonomous vehicle capable of safely and reliably navigating in urban traffic. Our research encompassed perception, behavioral reasoning, motion planning, and mechatronic design. We demonstrated these capabilities in the context of the DARPA Urban Challenge, winning the competition in 2007. Pedestrian Detection & Tracking from a Moving Vehicle (PI) SAIC IR&D This project developed techniques to detect and track pedestrians from a moving vehicle. Our approach used simple image transforms to detect potential pedestrians in a scene. Christopher Urmson 1 of 7 Pose Estimation & Modeling from a Walking Robot DARPA RATPACK program This project developed algorithms to perform six degree-of-freedom pose estimation and height map building for environments without GPS.