Slideslive, and to Everyone Who Submitted, Reviewed, and Prepared Content! The 5 Minute History of AI and Cognitive Science Jessica Hamrick What Is Cognitive Science?

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Developmental Dynamics: Toward a Biologically Plausible Evolutionary Psychology

Psychological Bulletin, 2003, Vol. 129, No. 6, 819–835. Copyright 2003 by the American Psychological Association, Inc. 0033-2909/03/$12.00 DOI: 10.1037/0033-2909.129.6.819 Developmental Dynamics: Toward a Biologically Plausible Evolutionary Psychology. Robert Lickliter, Florida International University; Hunter Honeycutt, Indiana University Bloomington. There has been a conceptual revolution in the biological sciences over the past several decades. Evidence from genetics, embryology, and developmental biology has converged to offer a more epigenetic, contingent, and dynamic view of how organisms develop. Despite these advances, arguments for the heuristic value of a gene-centered, predeterministic approach to the study of human behavior and development have become increasingly evident in the psychological sciences during this time. In this article, the authors review recent advances in genetics, embryology, and developmental biology that have transformed contemporary developmental and evolutionary theory and explore how these advances challenge gene-centered explanations of human behavior that ignore the complex, highly coordinated system of regulatory dynamics involved in development and evolution. The prestige of success enjoyed by the gene theory might become a hindrance to the understanding of development by directing our attention solely to the genome.... Already we have theories that refer the processes of development to genic action and regard the whole performance as no more than the realization of the potencies of the genes. Such theories are altogether too one-sided. ... evolutionary psychology assert that applying insights from evolutionary theory to explanations of human behavior will stimulate more fruitful research programs and provide a powerful framework for discovering evolved psychological mechanisms thought ...

Outline of Michael Tomasello, A Natural History of Human Morality (Cambridge MA: Harvard University Press, 2016)

Outline of Michael Tomasello, A Natural History of Human Morality (Cambridge MA: Harvard University Press, 2016). John Protevi LSU HNRS 2030.2: “Evolution and Biology of Morality” I Chapter 1: The Interdependence Hypothesis A) Parallels of natural and moral cooperation 1) Natural cooperation (a) Altruistic helping (b) Mutualist collaboration 2) Human morality (a) Parallel human morality types: (i) Altruistic helping via compassion, concern, benevolence: ethic of the good / sympathy (ii) Mutualist collaboration via fairness: ethic of right / justice (b) Simplicity vs complexity (i) Sympathetic altruism is simpler and more basic: (i) Pure cooperation (ii) Proximate mechanisms: based in mammalian parental care / kin selection (ii) Fair collaboration is more complex: interactions of multiple individuals w/ different interests (i) “cooperativization of competition” (ii) Proximate mechanisms: moral emotions / judgments 1. Deservingness 2. Punishment: feelings of resentment / indignation toward wrong-doers 3. Accountability: judgments of responsibility, obligation, etc B) Goal of the book: evolutionary account of emergence of human morality of sympathy and fairness 1) Morality is “form of cooperation” (a) Emerging via human adaptation to new social forms required by new ecological / economic needs (b) Bringing with it “species-unique proximate mechanisms” or psychological processes (i) cognition (ii) social interaction (iii) self-regulation 2) Based on these assumptions, two goals: (a) Specify how human cooperation differs from other primates

Dynamic Behavior of Constrained Back-Propagation Networks

Dynamic Behavior of Constrained Back-Propagation Networks. Yves Chauvin¹, Thomson-CSF, Inc., 630 Hansen Way, Suite 250, Palo Alto, CA 94304. ABSTRACT The learning dynamics of the back-propagation algorithm are investigated when complexity constraints are added to the standard Least Mean Square (LMS) cost function. It is shown that loss of generalization performance due to overtraining can be avoided when using such complexity constraints. Furthermore, "energy," hidden representations and weight distributions are observed and compared during learning. An attempt is made at explaining the results in terms of linear and non-linear effects in relation to the gradient descent learning algorithm. 1 INTRODUCTION It is generally admitted that generalization performance of back-propagation networks (Rumelhart, Hinton & Williams, 1986) will depend on the relative size of the training data and of the trained network. By analogy to curve-fitting and for theoretical considerations, the generalization performance of the network should decrease as the size of the network and the associated number of degrees of freedom increase (Rumelhart, 1987; Denker et al., 1987; Hanson & Pratt, 1989). This paper examines the dynamics of the standard back-propagation algorithm (BP) and of a constrained back-propagation variation (CBP), designed to adapt the size of the network to the training data base. The performance, learning dynamics and the representations resulting from the two algorithms are compared. ¹ Also in the Psychology Department, Stanford University, Stanford, CA 94305. 2 GENERALIZATION PERFORMANCE 2.1 STANDARD BACK-PROPAGATION In Chauvin (In Press), the generalization performance of a back-propagation network was observed for a classification task from spectrograms into phonemic categories (single speaker.

ARCHITECTS OF INTELLIGENCE

MARTIN FORD ARCHITECTS OF INTELLIGENCE: THE TRUTH ABOUT AI FROM THE PEOPLE BUILDING IT For Xiaoxiao, Elaine, Colin, and Tristan Copyright © 2018 Packt Publishing All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the author, nor Packt Publishing or its dealers and distributors, will be held liable for any damages caused or alleged to have been caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information. Acquisition Editors: Ben Renow-Clarke Project Editor: Radhika Atitkar Content Development Editor: Alex Sorrentino Proofreader: Safis Editing Presentation Designer: Sandip Tadge Cover Designer: Clare Bowyer Production Editor: Amit Ramadas Marketing Manager: Rajveer Samra Editorial Director: Dominic Shakeshaft First published: November 2018 Production reference: 2201118 Published by Packt Publishing Ltd. Livery Place 35 Livery Street Birmingham B3 2PB, UK ISBN 978-1-78913-151-2 www.packt.com Contents: Introduction; A Brief Introduction to the Vocabulary of Artificial Intelligence; How AI Systems Learn; Yoshua Bengio; Stuart J.

Cogsci Little Red Book.Indd

OUTSTANDING QUESTIONS IN COGNITIVE SCIENCE: A SYMPOSIUM HONORING TEN YEARS OF THE DAVID E. RUMELHART PRIZE IN COGNITIVE SCIENCE August 14, 2010 Portland Oregon, USA David E. Rumelhart ABOUT THE RUMELHART PRIZE AND THE 10 YEAR SYMPOSIUM At the August 2000 meeting of the Cognitive Science Society, Drs. James L. McClelland and Robert J. Glushko presented the initial plan to honor the intellectual contributions of David E. Rumelhart to Cognitive Science. Rumelhart had retired from Stanford University in 1998, suffering from Pick’s disease, a degenerative neurological illness. The plan involved honoring Rumelhart through an annual prize of $100,000, funded by the Robert J. Glushko and Pamela Samuelson Foundation. McClelland was a close collaborator of Rumelhart, and, together, they had written numerous articles and books on parallel distributed processing. Glushko, who had been Rumelhart’s Ph.D. student in the late 1970s and a Silicon Valley entrepreneur in the 1990s, is currently an adjunct professor at the University of California – Berkeley. The David E. Rumelhart prize was conceived to honor outstanding research in formal approaches to human cognition. Rumelhart’s own seminal contributions to cognitive science included both connectionist and symbolic models, employing both computational and mathematical tools. These contributions progressed from his early work on analogies and story grammars to the development of backpropagation and the use of parallel distributed processing to model various cognitive abilities. Critically, Rumelhart believed that future progress in cognitive science would depend upon researchers being able to develop rigorous, formal theories of mental structures and processes.

Empathy and Altruism. The Hypothesis of Somasia

A Clinical Perspective on “Theory of Mind”, Empathy and Altruism: The Hypothesis of Somasia Jean-Michel Le Bot PhD, University Rennes 2, Rennes doi: 10.7358/rela-2014-001-lebo [email protected] ABSTRACT The article starts by recalling the results of recent experiments that have revealed that, to a certain extent, the “ability to simultaneously distinguish between different possible perspectives on the same situation” (Decety and Lamm 2007) exists in chimpanzees. It then describes a case study of spatial and temporal disorientation in a young man following a cerebral lesion in order to introduce the hypothesis that this ability is based on a specific process of somasia. By permitting self-other awareness, this process also provides subjects with anchor points in time and space from which they can perform the mental decentring that enables them to adopt various perspectives. This process seems to be shared by humans and certain animal species and appears to be subdivided into the processing of the identity of experienced situations, on the one hand, and of their unity on the other. The article concludes with a critique of overly reflexive and “representational” conceptions of theory of mind which do not distinguish adequately between the ability to “theorise” about the mental states of others and the self-other awareness ability (which is automatic and non-reflexive). Keywords: Social cognition, theory of mind, empathy, altruism, neuropsychology, somasia, humans, animals, mental states, decentring. 1. INTRODUCTION In their seminal paper published in 1978, Premack and Woodruff stated that “an individual has a theory of mind [ToM] if he imputes mental states to himself and others” (Premack and Woodruff 1978).

The 100 Most Eminent Psychologists of the 20th Century

Review of General Psychology, 2002, Vol. 6, No. 2, 139–152. Copyright 2002 by the Educational Publishing Foundation 1089-2680/02/$5.00 DOI: 10.1037//1089-2680.6.2.139 The 100 Most Eminent Psychologists of the 20th Century. Steven J. Haggbloom, Western Kentucky University; Renee Warnick, Jason E. Warnick, Vinessa K. Jones, Gary L. Yarbrough, Tenea M. Russell, Chris M. Borecky, Reagan McGahhey, John L. Powell III, Jamie Beavers, and Emmanuelle Monte, Arkansas State University. A rank-ordered list was constructed that reports the first 99 of the 100 most eminent psychologists of the 20th century. Eminence was measured by scores on 3 quantitative variables and 3 qualitative variables. The quantitative variables were journal citation frequency, introductory psychology textbook citation frequency, and survey response frequency. The qualitative variables were National Academy of Sciences membership, election as American Psychological Association (APA) president or receipt of the APA Distinguished Scientific Contributions Award, and surname used as an eponym. The qualitative variables were quantified and combined with the other 3 quantitative variables to produce a composite score that was then used to construct a rank-ordered list of the most eminent psychologists of the 20th century. The discipline of psychology underwent a remarkable transformation during the 20th century, a transformation that included a shift away from the European-influenced philosophical psychology of the late 19th century to the empirical, research-based, American-dominated psychology of today (Simonton, 1992). ... eve of the 21st century, the APA Monitor (“A Century of Psychology,” 1999) published brief biographical sketches of some of the more eminent contributors to that transformation. Milestones such as a new year, a new decade, or, in this case, a new century seem inevitably to ...

CNS 2014 Program

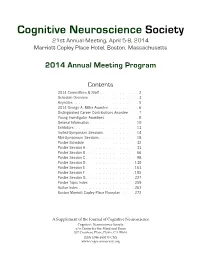

Cognitive Neuroscience Society 21st Annual Meeting, April 5-8, 2014, Marriott Copley Place Hotel, Boston, Massachusetts. 2014 Annual Meeting Program. Contents: 2014 Committees & Staff; Schedule Overview; Keynotes; 2014 George A. Miller Awardee; Distinguished Career Contributions Awardee; Young Investigator Awardees; General Information; Exhibitors; Invited-Symposium Sessions; Mini-Symposium Sessions; Poster Schedule; Poster Session A; Poster Session B; Poster Session C; Poster Session D; Poster Session E; Poster Session F; Poster Session G; Poster Topic Index; Author Index; Boston Marriott Copley Place Floorplan. A Supplement of the Journal of Cognitive Neuroscience. Cognitive Neuroscience Society, c/o Center for the Mind and Brain, 267 Cousteau Place, Davis, CA 95616. ISSN 1096-8857 © CNS www.cogneurosociety.org 2014 Committees & Staff. Governing Board: Roberto Cabeza, Ph.D., Duke University; Marta Kutas, Ph.D., University of California, San Diego; Helen Neville, Ph.D., University of Oregon; Daniel Schacter, Ph.D., Harvard University; Michael S. Gazzaniga, Ph.D., University of California, Santa Barbara (ex officio); George R. Mangun, Ph.D., University of California, ... Mini-Symposium Committee: David Badre, Ph.D., Brown University (Chair); Adam Aron, Ph.D., University of California, San Diego; Lila Davachi, Ph.D., New York University; Elizabeth Kensinger, Ph.D., Boston College; Gina Kuperberg, Ph.D., Harvard University; Thad Polk, Ph.D., University of Michigan

Introspection: Accelerating Neural Network Training by Learning Weight Evolution

Published as a conference paper at ICLR 2017. arXiv:1704.04959v1 [cs.LG] 17 Apr 2017. INTROSPECTION: ACCELERATING NEURAL NETWORK TRAINING BY LEARNING WEIGHT EVOLUTION. Abhishek Sinha∗, Department of Electronics and Electrical Comm. Engg., IIT Kharagpur, West Bengal, India, abhishek.sinha94 at gmail dot com; Mausoom Sarkar, Adobe Systems Inc, Noida, Uttar Pradesh, India, msarkar at adobe dot com; Aahitagni Mukherjee∗, Department of Computer Science, IIT Kanpur, Uttar Pradesh, India, ahitagnimukherjeeam at gmail dot com; Balaji Krishnamurthy, Adobe Systems Inc, Noida, Uttar Pradesh, India, kbalaji at adobe dot com. ABSTRACT Neural Networks are function approximators that have achieved state-of-the-art accuracy in numerous machine learning tasks. In spite of their great success in terms of accuracy, their large training time makes it difficult to use them for various tasks. In this paper, we explore the idea of learning weight evolution pattern from a simple network for accelerating training of novel neural networks. We use a neural network to learn the training pattern from MNIST classification and utilize it to accelerate training of neural networks used for CIFAR-10 and ImageNet classification. Our method has a low memory footprint and is computationally efficient. This method can also be used with other optimizers to give faster convergence. The results indicate a general trend in the weight evolution during training of neural networks. 1 INTRODUCTION Deep neural networks have been very successful in modeling high-level abstractions in data. However, training a deep neural network for any AI task is a time-consuming process. This is because a large number of parameters need to be learnt using training examples.

Tribal Social Instincts and the Cultural Evolution of Institutions to Solve Collective Action Problems

UC Riverside, Cliodynamics. Title: Tribal Social Instincts and the Cultural Evolution of Institutions to Solve Collective Action Problems. Permalink: https://escholarship.org/uc/item/981121t8 Journal: Cliodynamics, 3(1). Authors: Richerson, Peter; Henrich, Joe. Publication Date: 2012. DOI: 10.21237/C7clio3112453. Peer reviewed. eScholarship.org, Powered by the California Digital Library, University of California. Cliodynamics: the Journal of Theoretical and Mathematical History. Peter Richerson, University of California-Davis; Joseph Henrich, University of British Columbia. Human social life is uniquely complex and diverse. Much of that complexity and diversity arises from culturally transmitted ideas, values and skills that underpin the operation of social norms and institutions that structure our social life. Considerable theoretical and empirical work has been devoted to the role of cultural evolutionary processes in the evolution of social norms and institutions. The most persistent controversy has been over the role of cultural group selection and gene-culture coevolution in early human populations during Pleistocene. We argue that cultural group selection and related cultural evolutionary processes had an important role in shaping the innate components of our social psychology. By the Upper Paleolithic humans seem to have lived in societies structured by institutions, as do modern populations living in small-scale societies. The most ambitious attempts to test these ideas have been the use of experimental games in field settings to document human similarities and differences on theoretically interesting dimensions. These studies have documented a huge range of behavior across populations, although no societies so far examined follow the expectations of selfish rationality.

Michael Tomasello [March, 2020]

CURRICULUM VITAE MICHAEL TOMASELLO [MARCH, 2020] Department of Psychology & Neuroscience, Duke University; Durham, NC; 27708; USA; E-MAIL: [email protected] Max Planck Institute for Evolutionary Anthropology, Deutscher Platz 6; D-04103 Leipzig, GERMANY; E-MAIL: [email protected] EDUCATION: DUKE UNIVERSITY, B.A. Psychology, 1972; UNIVERSITY OF GEORGIA, Ph.D. Experimental Psychology, 1980; UNIVERSITY OF LEIPZIG, Doctorate, honoris causa, 2016. EMPLOYMENT: 1980 - 1998 Assistant-Associate-Full Professor of Psychology; Adjunct Professor of Anthropology, EMORY UNIVERSITY; 1982 - 1998 Affiliate Scientist, Psychobiology, YERKES PRIMATE CENTER; 1998 - 2018 Co-Director, MAX PLANCK INSTITUTE FOR EVOLUTIONARY ANTHROPOLOGY; 1999 - 2018 Honorary Professor, Dept of Psychology, University of Leipzig; 2001 - 2018 Co-Director, WOLFGANG KÖHLER PRIMATE RESEARCH CENTER; 2016 - James Bonk Professor of Psychology & Neuroscience, DUKE UNIVERSITY - Director of Developmental Psychology Program - Secondary App’ts: Philosophy, Evol. Anthropology, Linguistics; 2016 - Faculty of Center for Developmental Science, UNC. AD HOC: 1987 - 1988 Visiting Scholar, HARVARD UNIVERSITY; 1994 (summer) Instructor, INTERNATIONAL COGNITIVE SCIENCE INSTITUTE; 1994 (summer) Visiting Fellow, BRITISH PSYCHOLOGICAL SOCIETY; 1995 (spring) Visiting Professor, UNIVERSITY OF ROME; 1996 (spring) Visiting Professor, THE BRITISH ACADEMY; 1998 (spring) Visiting Scholar, MPI FOR PSYCHOLINGUISTICS; 1999 (summer) Instructor, INTERNATIONAL COGNITIVE SCIENCE INSTITUTE; 2001 (winter) Instructor, LOT (DUTCH GRADUATE

Mental Imagery: in Search of a Theory

BEHAVIORAL AND BRAIN SCIENCES (2002) 25, 157–238 Printed in the United States of America Mental imagery: In search of a theory Zenon W. Pylyshyn Rutgers Center for Cognitive Science, Rutgers University, Busch Campus, Piscataway, NJ 08854-8020. [email protected] http://ruccs.rutgers.edu/faculty/pylyshyn.html Abstract: It is generally accepted that there is something special about reasoning by using mental images. The question of how it is special, however, has never been satisfactorily spelled out, despite more than thirty years of research in the post-behaviorist tradition. This article considers some of the general motivation for the assumption that entertaining mental images involves inspecting a picture-like object. It sets out a distinction between phenomena attributable to the nature of mind to what is called the cognitive architecture, and ones that are attributable to tacit knowledge used to simulate what would happen in a visual situation. With this distinction in mind, the paper then considers in detail the widely held assumption that in some important sense images are spatially displayed or are depictive, and that examining images uses the same mechanisms that are deployed in visual perception. I argue that the assumption of the spatial or depictive nature of images is only explanatory if taken literally, as a claim about how images are physically instantiated in the brain, and that the literal view fails for a number of empirical reasons – for example, because of the cognitive penetrability of the phenomena cited in its favor. Similarly, while it is arguably the case that imagery and vision involve some of the same mechanisms, this tells us very little about the nature of mental imagery and does not support claims about the pictorial nature of mental images.