Understanding and Addressing the Disinformation Ecosystem



Recommended publications


Journalistic Ethics and the Right-Wing Media, Jason McCoy, University of Nebraska-Lincoln

University of Nebraska - Lincoln, DigitalCommons@University of Nebraska - Lincoln. Professional Projects from the College of Journalism and Mass Communications. Spring 4-18-2019. Journalistic Ethics and the Right-Wing Media. Jason McCoy, University of Nebraska-Lincoln. Follow this and additional works at: https://digitalcommons.unl.edu/journalismprojects Part of the Broadcast and Video Studies Commons, Communication Technology and New Media Commons, Critical and Cultural Studies Commons, Journalism Studies Commons, Mass Communication Commons, and the Other Communication Commons. McCoy, Jason, "Journalistic Ethics and the Right-Wing Media" (2019). Professional Projects from the College of Journalism and Mass Communications. 20. https://digitalcommons.unl.edu/journalismprojects/20 This Thesis is brought to you for free and open access by the College of Journalism and Mass Communications at DigitalCommons@University of Nebraska - Lincoln. It has been accepted for inclusion in Professional Projects from the College of Journalism and Mass Communications by an authorized administrator of DigitalCommons@University of Nebraska - Lincoln. This paper will examine the development of modern media ethics and will show that this set of guidelines can and perhaps should be revised and improved to match the challenges of an economic and political system that has taken advantage of guidelines such as “objective reporting” by creating too many false equivalencies. This paper will end by providing a few reforms that can create a better media environment and keep the public better informed.
As it was important for journalism to improve from partisan media to objective reporting in the past, it is important today that journalism improves its practices to address the right-wing media’s attack on journalism and avoid too many false equivalencies.

Coming to Grips with Life in the Disinformation Age

“The Information Age” is the term given to post-World War II contemporary society, particularly from the Sixties onward, when we were coming to the realization that our ability to publish information of all kinds, fact and fiction, was increasing at an exponential rate, so much so that librarians worried they would soon run out of storage space. Their storage worries were temporarily allayed by the advent of the common use of microform analog photography of printed materials, and eventually further by digital technology and nanotechnology. It is noted that in this era, as in past eras of great growth in communications technology such as written language, the printing press, analog recording, and telecommunication, dramatic changes also occurred in human thinking and social behavior. More ready archiving negatively impacted our disposition to remember things by rote, including, in recent times, our own phone numbers. Moreover, many opportunities for routine socializing were lost, particularly those involving public speech and readings, live music and theater, live visits to neighbors and friends, etc. The recognition that there had been a significant tradeoff from which there seemed to be no turning back evoked discussion of how to come to grips with “life in the Information Age”. Perhaps the new technology could offer new social and intellectual opportunities to replace the old ones lost. Today we are facing a new challenge, also brought on largely by new technology: that of coming to grips with life in the “Disinformation Age”.

Many People Think That an Argument Means an Emotional Or Angry Dispute with Raised Voices and Hot Tempers

How to Form a Good Argument. To begin with, it is important to define the word “argument.” Many people think that an argument means an emotional or angry dispute with raised voices and hot tempers. That is one definition of an argument, but, academically, an argument simply means “a reason or set of reasons presented with the aim of persuading others that an action or idea is right or wrong.” Hence, most arguments are merely a series of statements for or against something or a discussion in which people calmly express different opinions. A good argument can be very educational, enriching, and pleasant. Many people have bad experiences with the “disagreement” type of argument, but the academic version is actually enjoyed by many people, who don’t consider it uncomfortable or threatening at all. They consider it intellectually stimulating and a satisfying way to wrap their heads around interesting ideas, and they find it disappointing when other people become hotheaded during a rational discussion. An academic argument is a process of reasoning through which we present evidence and reach conclusions based on it. When it is approached as an intellectual exercise, there is no reason to get upset when another person has different ideas or opinions or produces different, valid evidence. When you understand the rules of logic, or become practiced in debating skills, your discomfort and emotional defensiveness will subside, and you will begin to relax and have more confidence in your ability to argue rationally and logically and to defend your own position. You might even begin to find that it’s fun! One of the best ways to learn to form a good argument is to become familiar with the rules of logic.

Learn to Discern #News

Learn to Discern. Media Literacy: Trainers Manual. IREX, 1275 K Street, NW, Suite 600, Washington, DC 20005. © 2020 IREX. All rights reserved. This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/4.0/ or send a letter to Creative Commons, PO Box 1866, Mountain View, CA 94042, USA. Table of contents: How-To Guide; Principles; Learn to Discern training principles; General training principles; Adult learner principles; Choosing lessons; Preparation; Gather supplies and create handouts; Unit 1: Understanding Media; Part A: Media Landscape; Lesson 1: Introduction; Lesson 2: Types of Content; Lesson 3: Information vs. persuasion; ... Part B. Checking Your Emotions; Lesson 1: Checking your emotions; Lesson 2: Understanding headlines; Lesson 3: Check Your Phone; Part C. Stereotypes; Lesson 1: Stereotypes; Lesson 2: Stereotypes and biased reporting in the media; Unit 3: Fighting Misinformation; Part A: Evaluating written content: Checking sources, citations, and evidence; Lesson 1: Overview: your trust gauge; Lesson 2: Go to the source; Lesson 3: Verifying Sources and Citations; Lesson 4: Verifying Evidence

Central Asia’s New School of Analytic Journalism

Ten-day course equips young journalists with specialist tools. IWPR has launched its comprehensive training course to develop analytic journalism among a new generation of journalists in Central Asia. Young writers from across the region spent ten days in the Kyrgyz capital Bishkek learning investigative techniques and analytical skills, as well as data visualisation and story design. The stories the 28 participants produce will be published in the media outlets they currently work for and also on IWPR’s analytical portal cabar.asia. This School of Analytic Journalism is the first stage of IWPR’s three-year project to train a fresh wave of journalists and experts. The project is funded by the Foreign Ministry of Norway, with the OSCE Academy in Bishkek as partner. (See IWPR Launches New Central Asia Programme). “We have become a big Central Asian family over these ten days. Every journalist has contributed something special to the school,” Bishkek-based journalist Bermet Ulanova said. “Now I know where to seek information, who to cooperate with for a joint story and who to talk to in general. I have learned many new things about neighbouring countries, their citizens, traditions and culture.” After finishing the course, which ran from 18-27 June, Khadisha Akayeva from Semey, eastern Kazakstan, also said, “I know what to write about, where to seek information, how to process it, and what to expect as a result.” Photo caption: Abakhon Sultonazarov (l) and Nadezhda Nam, a journalist from Tashkent, Uzbekistan.

Exploring the Ideological Nature of Journalists' Social Networks on Twitter and Associations with News Story Content

Exploring the Ideological Nature of Journalists’ Social Networks on Twitter and Associations with News Story Content. John Wihbey, School of Journalism, Northeastern University; Thalita Dias Coleman, Network Science Institute, Northeastern University; Kenneth Joseph, Network Science Institute, Northeastern University; David Lazer, Network Science Institute, Northeastern University (all at 177 Huntington Ave., Boston, MA). ABSTRACT: The present work proposes the use of social media as a tool for better understanding the relationship between a journalist’s social network and the content they produce. Specifically, we ask: what is the relationship between the ideological leaning of a journalist’s social network on Twitter and the news content he or she produces? Using a novel dataset linking over 500,000 news articles produced by 1,000 journalists at 25 different news outlets, we show a modest correlation between the ideologies of who a journalist follows on Twitter and the content he or she produces. 1 INTRODUCTION: Discussions of news media bias have dominated public and elite discourse in recent years, but analyses of various forms of journalistic bias and subjectivity (whether through framing, agenda-setting, or false balance, or stereotyping, sensationalism, and the exclusion of marginalized communities) have been conducted by communications and media scholars for generations [24]. In both research and popular discourse, an increasing area of focus has been ideological bias, and how partisan-tinged media may help explain other

S:\FULLCO~1\HEARIN~1\Committee Print 2018\Henry\Jan. 9 Report

Embargoed for Media Publication / Coverage until 6:00AM EST Wednesday, January 10. 115th Congress, 2d Session. Committee Print. S. Prt. 115–21. PUTIN’S ASYMMETRIC ASSAULT ON DEMOCRACY IN RUSSIA AND EUROPE: IMPLICATIONS FOR U.S. NATIONAL SECURITY. A Minority Staff Report Prepared for the Use of the Committee on Foreign Relations, United States Senate, One Hundred Fifteenth Congress, Second Session, January 10, 2018. Printed for the use of the Committee on Foreign Relations. Available via World Wide Web: http://www.gpoaccess.gov/congress/index.html U.S. Government Publishing Office, 28–110 PDF, Washington: 2018. For sale by the Superintendent of Documents, U.S. Government Publishing Office. Internet: bookstore.gpo.gov Phone: toll free (866) 512–1800; DC area (202) 512–1800 Fax: (202) 512–2104 Mail: Stop IDCC, Washington, DC 20402–0001. COMMITTEE ON FOREIGN RELATIONS: Bob Corker, Tennessee, Chairman; James E. Risch, Idaho; Marco Rubio, Florida; Ron Johnson, Wisconsin; Jeff Flake, Arizona; Cory Gardner, Colorado; Todd Young, Indiana; John Barrasso, Wyoming; Johnny Isakson, Georgia; Rob Portman, Ohio; Rand Paul, Kentucky; Benjamin L. Cardin, Maryland; Robert Menendez, New Jersey; Jeanne Shaheen, New Hampshire; Christopher A. Coons, Delaware; Tom Udall, New Mexico; Christopher Murphy, Connecticut; Tim Kaine, Virginia; Edward J. Markey, Massachusetts; Jeff Merkley, Oregon; Cory A. Booker, New Jersey. Todd Womack, Staff Director; Jessica Lewis, Democratic Staff Director; John Dutton, Chief Clerk.

The Prevalence and Rationale for Presenting an Opposing Viewpoint in Climate Change Reporting: Findings from a U.S. National Survey of TV Weathercasters

Timm et al., January 2020, page 103. The Prevalence and Rationale for Presenting an Opposing Viewpoint in Climate Change Reporting: Findings from a U.S. National Survey of TV Weathercasters. Kristin M. F. Timm and Edward W. Maibach, George Mason University, Fairfax, Virginia; Maxwell Boykoff, University of Colorado Boulder, Boulder, Colorado; Teresa A. Myers and Melissa A. Broeckelman-Post, George Mason University, Fairfax, Virginia. (Manuscript received 29 May 2019, in final form 23 October 2019) ABSTRACT: The journalistic norm of balance has been described as the practice of giving equal weight to different sides of a story; false balance is balanced reporting when the weight of evidence strongly favors one side over others, for example, the reality of human-caused climate change. False balance is problematic because it skews public perception of expert agreement. Through formative interviews and a survey of American weathercasters about climate change reporting, we found that objectivity and balance, topics that have frequently been studied with environmental journalists, are also relevant to understanding climate change reporting among weathercasters. Questions about the practice of and reasons for presenting an opposing viewpoint when reporting on climate change were included in a 2017 census survey of weathercasters working in the United States (N = 480; response rate = 22%). When reporting on climate change, 35% of weathercasters present an opposing viewpoint “always” or “most of the time.” Their rationale for reporting opposing viewpoints included the journalistic norms of objectivity and balanced reporting (53%), their perceived uncertainty of climate science (21%), to acknowledge differences of opinion (17%), to maintain credibility (14%), and to strengthen the story (7%).

Responding to False or Misleading Media Communications About HPV Vaccines

because we can envisage cervical cancer elimination, nº 73. Responding to false or misleading media communications about HPV vaccines. Talía Malagón, PhD, Division of Cancer Epidemiology, Faculty of Medicine, McGill University, Montréal, Canada; Juliet Guichon, SJD, Department of Community Health Sciences, University of Calgary, Calgary, Canada. Scientists have traditionally responded to vaccination opposition by providing reassuring safety and efficacy evidence from clinical trials and post-licensure surveillance systems. However, it is equally critical for scientists to communicate effectively the scientific evidence and the public health benefits of implemented vaccine programs. This is most challenging when the media focus on adverse events, whether real or perceived, and when non-scientific information about vaccination is presented as fact. Such attention has often been handled effectively (e.g. Australia, Canada, and UK). In some countries however, media coverage has negatively influenced public perception and HPV vaccine uptake because of the lack of a rapid, organized scientific response. ... confidence in vaccination and to support program resilience. We believe that scientists have a moral duty to participate in public life by sharing their knowledge when false or misleading media coverage threatens public health. This moral duty exists because: 1) misinformation can directly cause harm (e.g. by preventing the potentially life-saving benefits of vaccination from being achieved); and 2) scientists are able to explain the scientific evidence that can counter false and misleading claims. To increase our effectiveness in communicating such knowledge, it is essential to understand the fundamental differences between scientific and journalistic modes of communication.

Propaganda As Communication Strategy: Historic and Contemporary Perspective

Academy of Marketing Studies Journal, Volume 24, Issue 4, 2020. PROPAGANDA AS COMMUNICATION STRATEGY: HISTORIC AND CONTEMPORARY PERSPECTIVE. Mohit Malhan, FPM Scholar, Indian Institute of Management, Lucknow; Dr. Prem Prakash Dewani, Associate Professor, Indian Institute of Management, Lucknow. ABSTRACT: In a world where people were confined to their homes during the Covid-19 crisis, digital communication has taken centre stage in most people’s lives. Where before the pandemic we were facing a barrage of fake news, the digitally entrenched pandemic world has deeply exacerbated the problem. The purpose of choosing this topic is that the topic is new and challenging. In today’s context, individuals are bound to face propaganda designed by firms as a communication strategy. The study is exploratory in nature and uses secondary data from published sources. Through a comprehensive literature review, we study a particular type of communication strategy, propaganda, which employs questionable techniques. We try to understand the history and use of propaganda and how research on it developed from its nascent stages and connected with various communication theories. We then take a look at its contemporary usages and the tools employed. It is pertinent to study the impact of propaganda on the individual and on society. We explain how individuals, firms, and society can use propaganda to build a communication strategy. Further, we theorise and elaborate on the need for further research on this widely prevalent form of communication. Keywords: Propaganda, Internet, Communication, Persuasion, Politics, Social Network. INTRODUCTION: Propaganda has been in operation in the world for a long time now.

Logical Fallacies in an Argument

Logical Fallacies in an Argument: From the use of gross generalizations (e.g., “poor people are lazy”) to the reliance on self-evident logic (e.g., “hard work builds success”), there are many ways to make errors in reasoning when arguing. Scientists call these errors “logical fallacies.” Let’s examine some types of flawed reasoning below. As you read through these categories, you might be alarmed at how many you recognize from your own experiences with writing this semester. 1. False Equivalence: False Equivalence is a logical fallacy in which two opposing arguments appear to be logically equivalent or equally meritorious when in fact they are not. This occurs when an argument is positioned as a binary and one side, often unscientific, is set as the converse or rejection of a scientifically demonstrated principle. When this occurs, that secondary position is overly elevated in status. In simple English: we often assume that every issue has two equivalent sides. As an example, imagine you’re in a debate about the geometry of the earth. One side asserts “circular” and the other side asserts “flat.” Should we listen equally to both sides? If we do, we assign a False Equivalence to the flat camp. False equivalencies are often seen in the debate over global warming and other politicized issues. See: https://en.wikipedia.org/wiki/Global_warming_controversy In the case of your papers, many of you used the undefined variable of “healthy” or “unhealthy” to define GMOs. However, even when you define “unhealthy” as “reduced Vitamin A soy” or “high fat beef”, you create a false equivalence because you leave out all the potential good by negating “higher yield rates” and “lower costs for 3rd world nations”. So what, if any, is the technical formula for weighing “good” against “bad” factors? 2.

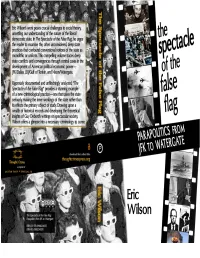

The Spectacle of the False-Flag

THE SPECTACLE OF THE FALSE-FLAG: PARAPOLITICS FROM JFK TO WATERGATE. Eric Wilson, Monash University, 2015. http://creativecommons.org/licenses/by-nc-nd/4.0/ This work is Open Access, which means that you are free to copy, distribute, display, and perform the work as long as you clearly attribute the work to the author, that you do not use this work for commercial gain in any form whatsoever, and that you in no way alter, transform, or build upon the work outside of its normal use in academic scholarship without express permission of the author and the publisher of this volume. For any reuse or distribution, you must make clear to others the license terms of this work. First published in 2015 by Thought | Crimes, an imprint of punctumbooks.com. ISBN-13: 978-0988234055. ISBN-10: 098823405X. The full book is available for download via our Open Monograph Press website (a Public Knowledge Project) at: www.thoughtcrimespress.org, a project of the Critical Criminology Working Group, publishers of the Open Access Journal: Radical Criminology: journal.radicalcriminology.org Contact: Jeff Shantz (Editor), Dept. of Criminology, KPU, 12666 72 Ave., Surrey, BC V3W 2M8 [ + design & open format publishing: pj lilley ] I dedicate this book to my Mother, who watched over me as I slept through the spectacle in Dallas on November 22, 1963 and who was there to celebrate my birthday with me during the spectacle at the Watergate Hotel on June 17, 1972. Contents: Editor's Preface