A Quality Attributes Guide for Space Flight Software Architects

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Jjmonl 1712.Pmd

Galactic Observer John J. McCarthy Observatory Volume 10, No. 12 December 2017 Holiday Theme Park See page 19 for more information The John J. McCarthy Observatory Galactic Observer New Milford High School Editorial Committee 388 Danbury Road Managing Editor New Milford, CT 06776 Bill Cloutier Phone/Voice: (860) 210-4117 Production & Design Phone/Fax: (860) 354-1595 www.mccarthyobservatory.org Allan Ostergren Website Development JJMO Staff Marc Polansky Technical Support It is through their efforts that the McCarthy Observatory Bob Lambert has established itself as a significant educational and recreational resource within the western Connecticut Dr. Parker Moreland community. Steve Barone Jim Johnstone Colin Campbell Carly KleinStern Dennis Cartolano Bob Lambert Route Mike Chiarella Roger Moore Jeff Chodak Parker Moreland, PhD Bill Cloutier Allan Ostergren Doug Delisle Marc Polansky Cecilia Detrich Joe Privitera Dirk Feather Monty Robson Randy Fender Don Ross Louise Gagnon Gene Schilling John Gebauer Katie Shusdock Elaine Green Paul Woodell Tina Hartzell Amy Ziffer In This Issue "OUT THE WINDOW ON YOUR LEFT"............................... 3 REFERENCES ON DISTANCES ................................................ 18 SINUS IRIDUM ................................................................ 4 INTERNATIONAL SPACE STATION/IRIDIUM SATELLITES ............. 18 EXTRAGALACTIC COSMIC RAYS ........................................ 5 SOLAR ACTIVITY ............................................................... 18 EQUATORIAL ICE ON MARS? ........................................... -

2001 Mars Odyssey 1/24 Scale Model Assembly Instructions

2001 Mars Odyssey 1/24 Scale Model Assembly Instructions This scale model of the 2001 Mars Odyssey spacecraft is designed for anyone interested, although it might be inappropriate for children younger than about ten years of age. Children should have adult supervision to assemble the model. Copyright (C) 2002 Jet Propulsion Laboratory, California Institute of Technology. All rights reserved. Permission for commercial reproduction other than for single-school in-classroom use must be obtained from JPL Commercial Programs Office. 1 SETUP 1.1 DOWNLOAD AND PRINT o You'll need Adobe Acrobat Reader software to read the Parts Sheet file. You'll find instructions for downloading the software free of charge from Adobe on the web page where you found this model. o Download the Parts file from the web page to your computer. It contains paper model parts on several pages of annotated graphics. o Print the Parts file with a black & white printer; a laser printer gives best results. It is highly recommended to print onto card stock (such as 110 pound cover paper). If you can't print onto card stock, regular paper will do, but assembly will be more difficult, and the model will be much more fragile. In any case, the card stock or paper should be white. The Parts file is designed for either 8.5x11-inch or A4 sheet sizes. o Check the "PRINTING CALIBRATION" on each Parts Sheet with a ruler, to be sure the cm or inch scale is full size. If it isn't, adjust the printout size in your printing software. -

The Isis3 Bundle Adjustment for Extraterrestrial Photogrammetry

ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Volume I-4, 2012 XXII ISPRS Congress, 25 August – 01 September 2012, Melbourne, Australia JIGSAW: THE ISIS3 BUNDLE ADJUSTMENT FOR EXTRATERRESTRIAL PHOTOGRAMMETRY K. L. Edmundson*, D. A. Cook, O. H. Thomas, B. A. Archinal, R. L. Kirk Astrogeology Science Center, U.S. Geological Survey, Flagstaff, AZ, USA, 86001 - [email protected] Commission IV, WG IV/7 KEY WORDS: Bundle Adjustment, Estimation, Extraterrestrial, Planetary, Space ABSTRACT: The Integrated Software for Imagers and Spectrometers (ISIS) package was developed by the Astrogeology Science Center of the U.S. Geological Survey in the late 1980s for the cartographic and scientific processing of planetary image data. Initial support was implemented for the Galileo NIMS instrument. Constantly evolving, ISIS has since added support for numerous missions, including most recently the Lunar Reconnaissance Orbiter, MESSENGER, and Dawn missions, plus support for the Metric Cameras flown onboard Apollo 15, 16, and 17. To address the challenges posed by extraterrestrial photogrammetry, the ISIS3 bundle adjustment module, jigsaw, is evolving as well. Here, we report on the current state of jigsaw including improvements such as the implementation of sparse matrix methods, parameter weighting, error propagation, and automated robust outlier detection. Details from the recent processing of Apollo Metric Camera images and from recent missions such as LRO and MESSENGER are given. Finally, we outline future plans for jigsaw, including the implementation of sequential estimation; free network adjustment; augmentation of the functional model of the bundle adjustment to solve for camera interior orientation parameters and target body parameters of shape, spin axis position, and spin rate; the modeling of jitter in line scan sensors; and the combined adjustment of images from a variety of platforms. -

Planetary Science

Mission Directorate: Science Theme: Planetary Science Theme Overview Planetary Science is a grand human enterprise that seeks to discover the nature and origin of the celestial bodies among which we live, and to explore whether life exists beyond Earth. The scientific imperative for Planetary Science, the quest to understand our origins, is universal. How did we get here? Are we alone? What does the future hold? These overarching questions lead to more focused, fundamental science questions about our solar system: How did the Sun's family of planets, satellites, and minor bodies originate and evolve? What are the characteristics of the solar system that lead to habitable environments? How and where could life begin and evolve in the solar system? What are the characteristics of small bodies and planetary environments and what potential hazards or resources do they hold? To address these science questions, NASA relies on various flight missions, research and analysis (R&A) and technology development. There are seven programs within the Planetary Science Theme: R&A, Lunar Quest, Discovery, New Frontiers, Mars Exploration, Outer Planets, and Technology. R&A supports two operating missions with international partners (Rosetta and Hayabusa), as well as sample curation, data archiving, dissemination and analysis, and Near Earth Object Observations. The Lunar Quest Program consists of small robotic spacecraft missions, Missions of Opportunity, Lunar Science Institute, and R&A. Discovery has two spacecraft in prime mission operations (MESSENGER and Dawn), an instrument operating on an ESA Mars Express mission (ASPERA-3), a mission in its development phase (GRAIL), three Missions of Opportunities (M3, Strofio, and LaRa), and three investigations using re-purposed spacecraft: EPOCh and DIXI hosted on the Deep Impact spacecraft and NExT hosted on the Stardust spacecraft. -

UNA Planetarium Newsletter Vol. 4. No. 2

UNA Planetarium Newsletter Vol. 4. No. 2 Feb, 2012 Image of the Month: This image was obtained by the Cassini spacecraft in orbit around Saturn. It shows the small moon Dione with the limb of the planet in the background. Dione is about 1123km across and the spacecraft was about 57000km from the moon when the image was taken. Dione, like many of Saturn’s moons, is probably mainly ice. The shadows of Saturn’s rings appear on the planet as the striping you see to the left of Dione. Image courtesy NASA. Astro Quote: “Across the sea of space, the stars are other suns.” Calendar for Feb/Mar 2012: Feb 14 Planetarium Public Night. I was asked by a student recently why NASA was being closed down. This came as a bit of a surprise but it is somewhat understandable. The retirement of the space shuttle fleet last year was the end of an era. The shuttle serviced the United States’ space program for more than twenty years and with no launcher yet ready to replace the shuttles it might appear as if NASA is done. This is in part due to poor long-term planning on the part of NASA. However, contrary to what some people think, the manned spaceflight program at NASA is alive and well. In fact NASA is currently taking applications for the next astronaut corps and will send spacefarers to the International Space Station to conduct research in orbit. They will ride on Russian rockets, but they will be American astronauts. The confusion over the fate of NASA also exposes the fact that many of the important missions and projects NASA is involved in do not have the high public profile of the Space Shuttles. -

The Evolving Launch Vehicle Market Supply and the Effect on Future NASA Missions

Presented at the 2007 ISPA/SCEA Joint Annual International Conference and Workshop - www.iceaaonline.com The Evolving Launch Vehicle Market Supply and the Effect on Future NASA Missions Presented at the 2007 ISPA/SCEA Joint International Conference & Workshop June 12-15, New Orleans, LA Bob Bitten, Debra Emmons, Claude Freaner Abstract • The upcoming retirement of the Delta II family of launch vehicles leaves a performance gap between small expendable launch vehicles, such as the Pegasus and Taurus, and large vehicles, such as the Delta IV and Atlas V families • This performance gap may lead to a variety of progressions including – large satellites that utilize the full capability of the larger launch vehicles, – medium size satellites that would require dual manifesting on the larger vehicles or – smaller satellites missions that would require a large number of smaller launch vehicles • This paper offers some comparative costs of co-manifesting single-instrument missions on a Delta IV/Atlas V, versus placing several instruments on a larger bus and using a Delta IV/Atlas V, as well as considering smaller, single instrument missions launched on a Minotaur or Taurus • This paper presents the results of a parametric study investigating the cost-effectiveness of different alternatives and their effect on future NASA missions that fall into the Small Explorer (SMEX), Medium Explorer (MIDEX), Earth System Science Pathfinder (ESSP), Discovery, -

Insight Spacecraft Launch for Mission to Interior of Mars

InSight Spacecraft Launch for Mission to Interior of Mars InSight is a robotic scientific explorer to investigate the deep interior of Mars set to launch May 5, 2018. It is scheduled to land on Mars November 26, 2018. It will allow us to better understand the origin of Mars. First Launch of Project Orion Project Orion took its first unmanned mission, Exploration Flight Test-1 (EFT-1), on December 5, 2014. It made two orbits in four hours before splashing down in the Pacific. The flight tested many subsystems, including its heat shield, electronics and parachutes. Orion will play an important role in NASA's journey to Mars. Orion will eventually carry astronauts to an asteroid and to Mars on the Space Launch System. Mars Rover Curiosity Lands After a nine-month trip, Curiosity landed on August 6, 2012. The rover carries the biggest, most advanced suite of instruments for scientific studies ever sent to the Martian surface. Curiosity analyzes samples scooped from the soil and drilled from rocks to study the record of the planet's climate and geology. Mars Reconnaissance Orbiter Begins Mission at Mars NASA's Mars Reconnaissance Orbiter launched from Cape Canaveral August 12, 2005, to find evidence that water persisted on the surface of Mars. The instruments zoom in for photography of the Martian surface, analyze minerals, look for subsurface water, trace how much dust and water are distributed in the atmosphere, and monitor daily global weather. Spirit and Opportunity Land on Mars In January 2004, NASA landed two Mars Exploration Rovers, Spirit and Opportunity, on opposite sides of Mars. -

P6.31 Long-Term Total Solar Irradiance (Tsi) Variability Trends: 1984-2004

P6.31 LONG-TERM TOTAL SOLAR IRRADIANCE (TSI) VARIABILITY TRENDS: 1984-2004 Robert Benjamin Lee III∗ NASA Langley Research Center, Atmospheric Sciences, Hampton, Virginia Robert S. Wilson and Susan Thomas Science Application International Corporation (SAIC), Hampton, Virginia ABSTRACT The incoming total solar irradiance (TSI), typically referred to as the “solar constant,” is being studied to identify long-term TSI changes, which may trigger global climate changes. The TSI is normalized to the mean earth-sun distance. Studies of spacecraft TSI data sets confirmed the existence of 0.1 %, long-term TSI variability component with a period of 10 years. The component varied directly with solar magnetic activity associated with recent 10-year sunspot cycles. The 0.1 % TSI variability component is clearly present in the spacecraft data sets from the 1984-2004, Earth Radiation Budget Experiment (ERBE) active cavity radiometer (ACR) solar monitor; 1978-1993, Nimbus-7 HF; 1980-1989, Solar Maximum Mission [SMM] ACRIM; 1991-2004, Upper [...] additional long-term TSI variability component, 0.05 %, with a period longer than a decade. Analyses of the ERBS/ERBE data set do not support the Wilson and Mordvinor analyses approach because it used the Nimbus-7 data set which exhibited a significant ACR response shift of 0.7 Wm-2 (Lee et al., 1995; Chapman et al., 1996). In our current paper, analyses of the 1984-2004, ERBS/ERBE measurements, along with the other spacecraft measurements, are presented as well as the shortcoming of the ACRIM study. Long-term, incoming total solar irradiance (TSI) measurement trends were validated using proxy TSI values, derived from indices of solar magnetic activity. Typically, three overlapping spacecraft data sets were used to validate long-term TSI variability trends. -

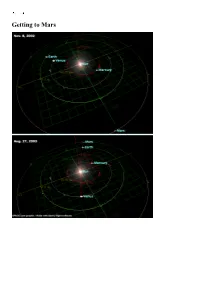

Getting to Mars How Close Is Mars?

Getting to Mars How close is Mars? Exploring Mars 1960-2004 Of 42 probes launched: 9 crashed on launch or failed to leave Earth orbit 4 failed en route to Mars 4 failed to stop at Mars 1 failed on entering Mars orbit 1 orbiter crashed on Mars 6 landers crashed on Mars 3 flyby missions succeeded 9 orbiters succeeded 4 landers succeeded 1 lander en route Score so far: Earthlings 16, Martians 25, 1 in play Mars Express Mars Exploration Rover Mars Exploration Rover Mars Exploration Rover 1: Meridiani (Opportunity) 2: Gusev (Spirit) 3: Isidis (Beagle-2) 4: Mars Polar Lander Launch Window 21: Jun-Jul 2003 Mars Express 2003 Jun 2 In Mars orbit Dec 25 Beagle 2 Lander 2003 Jun 2 Crashed at Isidis Dec 25 Spirit/ Rover A 2003 Jun 10 Landed at Gusev Jan 4 Opportunity/ Rover B 2003 Jul 8 Heading to Meridiani on Sunday Launch Window 1: Oct 1960 1M No. 1 1960 Oct 10 Rocket crashed in Siberia 1M No. 2 1960 Oct 14 Rocket crashed in Kazakhstan Launch Window 2: October-November 1962 2MV-4 No. 1 1962 Oct 24 Rocket blew up in parking orbit during Cuban Missile Crisis 2MV-4 No. 2 "Mars-1" 1962 Nov 1 Lost attitude control - Missed Mars by 200000 km 2MV-3 No. 1 1962 Nov 4 Rocket failed to restart in parking orbit The Mars-1 probe Launch Window 3: November 1964 Mariner 3 1964 Nov 5 Failed after launch, nose cone failed to separate Mariner 4 1964 Nov 28 SUCCESS, flyby in Jul 1965 3MV-4 No. -

EDITOR's in This Issue

THE EARTH OBSERVER May/June 2001 Vol. 13 No. 3 In this issue: SCIENCE MEETINGS: Joint Advanced Microwave Scanning Radiometer (AMSR) Science Team Meeting; Clouds and The Earth’s Radiant Energy System (CERES) Science Team Meeting; The ACRIMSAT/ACRIM3 Experiment: Extending the Precision, Long-Term Total Solar Irradiance Climate Database. SCIENCE ARTICLES: Summary of the International Workshop on LAI Product Validation; An Introduction to the Federation of Earth Science Information Partners; Tools and Systems for EOS Data; Status and Plans for HDF-EOS, NASA’s Format for EOS Standard Products. EDITOR’S Michael King, EOS Senior Project Scientist. I’m pleased to announce that NASA’s Earth Science Enterprise is sponsoring a problem for the Odyssey of the Mind competitions during the 2001-2002 school year. It is a technical problem involving an original performance about environmental preservation. The competitions will involve about 450,000 students from kindergarten through college worldwide, and will culminate in a World Finals next May in Boulder, CO where the “best of the best” will compete for top awards in the international competitions. It is estimated that we reach 1.5 to 2 million students, parents, teachers/administrators, coaches, etc. internationally through participation in this program. The Odyssey of the Mind Program fosters creative thinking and problem-solving skills among participating students from kindergarten through college. Students solve problems in a variety of areas from building mechanical devices such as spring-driven vehicles to giving their own interpretation of literary classics. Through solving problems, students learn lifelong skills such as working with others as a team, evaluating ideas, making decisions, -

Budget of the United States Government

FISCAL YEAR 2002 BUDGET BUDGET OF THE UNITED STATES GOVERNMENT THE BUDGET DOCUMENTS Budget of the United States Government, Fiscal Year 2002 contains the Budget Message of the President and information on the President's 2002 proposals by budget function. Analytical Perspectives, Budget of the United States Government, Fiscal Year 2002 contains analyses that are designed to highlight specified subject areas or provide other significant presentations of budget data that place the budget in perspective. The Analytical Perspectives volume includes economic and accounting analyses; information on Federal receipts and collections; analyses of Federal spending; detailed information on Federal borrowing and debt; the Budget Enforcement Act preview report; current services estimates; and other technical presentations. It also includes information on the budget system and concepts and a listing of the Federal programs by agency and account. Historical Tables, Budget of the United States Government, Fiscal Year 2002 provides data on budget receipts, outlays, surpluses or deficits, Federal debt, and Federal employment over an extended time period, generally from 1940 or earlier to 2006. A Citizen's Guide to the Federal Budget, Budget of the United States Government, Fiscal Year 2002 provides general information about the budget and the budget process. Budget System and Concepts, Fiscal Year 2002 contains an explanation of the system and concepts used to formulate the President's budget proposals. Budget Information for States, Fiscal Year 2002 is an Office of Management and Budget (OMB) publication that provides proposed State-by-State obligations for the major Federal formula grant programs to State and local governments. The allocations are based on the proposals in the President's budget. The report is released after the budget. AUTOMATED SOURCES OF BUDGET INFORMATION The information contained in these documents is available in -

Odyssey NASA’S Newest Mars Orbiter Is a Spacecraft Key Goals

NASA Facts National Aeronautics and Space Administration Jet Propulsion Laboratory California Institute of Technology Pasadena, CA 91109 2001 Mars Odyssey NASA’s newest Mars orbiter is a spacecraft designed to find out what the planet is made of, detect water and shallow buried ice and study the radiation environment. The surface of Mars has long been thought to consist of a mixture of rock, soil and icy material. However, the exact composition of these materials is unknown, except for the few specific locations where spacecraft have landed and taken measurements. Current observations from Odyssey and Mars Global Surveyor spacecraft are changing the way scientists think about Mars. Mission Overview On April 7, 2001, the 2001 Mars Odyssey launched on a Delta II launch vehicle from Cape Canaveral, Florida. On October 24, 2001, after firing its main engine, the spacecraft was captured by Mars’ gravity. Over the next 76 days, the spacecraft gradually edged closer to Mars, using the fric- [...] key goals. The orbiter will also provide information on the structure of the Martian surface and about the geological processes that may have caused it. Finally, the orbiter will take important measurements of the planet’s radiation environment that can be used to evaluate the potential health risks to future human explorers. Science Instruments The orbiter carries three science instruments: The Thermal Emission Imaging System (THEMIS), the Gamma Ray Spectrometer (GRS), and the Mars Radiation Environment Experiment (MARIE). Studying Minerals and Temperature The thermal emission imaging system will collect images that will be used to identify the minerals present in the soils and rocks at the surface.