Multiple Faces Tracking Using Feature Fusion and Neural Network in Video

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Time Series Deformation Monitoring Over Large Infrastructures Around Dongting Lake Using X-Band PSI with a Combined Thermal Expansion and Seasonal Model

Hindawi Journal of Sensors, Volume 2021, Article ID 6664933, 17 pages. https://doi.org/10.1155/2021/6664933 Research Article: Time Series Deformation Monitoring over Large Infrastructures around Dongting Lake Using X-Band PSI with a Combined Thermal Expansion and Seasonal Model. Liang Bao,1,2 Xuemin Xing,1,2 Lifu Chen,1,3 Zhihui Yuan,1,3 Bin Liu,1,2 Qing Xia,1,2 and Wei Peng1,2. 1 Laboratory of Radar Remote Sensing Applications, Changsha University of Science & Technology, Changsha 410014, China. 2 School of Traffic and Transportation Engineering, Changsha University of Science & Technology, Changsha 410014, China. 3 School of Electrical and Information Engineering, Changsha University of Science & Technology, Changsha 410014, China. Correspondence should be addressed to Xuemin Xing; [email protected]. Received 26 November 2020; Revised 5 March 2021; Accepted 10 March 2021; Published 31 March 2021. Academic Editor: Zhenxing Zhang. Copyright © 2021 Liang Bao et al. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. Long-term spatial-temporal deformation monitoring of densely distributed infrastructures near the lake area is of great significance for understanding urban health status and preventing potential traffic safety problems. In this paper, permanent scatterer interferometry (PSI) with TerraSAR-X imagery over the area around Dongting Lake was used to generate the long-term spatial-temporal deformation. Since X-band SAR interferometric phases are highly influenced by the thermal dilation of the observed objects, and the deformation of large infrastructures is highly related to external temperature, a combined deformation model considering thermal expansion and seasonal environmental factors was proposed to model the temporal variations of the deformation.

University of Leeds Chinese Accepted Institution List 2021

University of Leeds Chinese Accepted Institution List 2021. This list applies to courses in: all Engineering and Computing courses; School of Mathematics; School of Education; School of Politics and International Studies; School of Sociology and Social Policy. GPA requirements: 2:1 = 75-85%; 2:2 = 70-80%. Please visit https://courses.leeds.ac.uk to find out which courses require a 2:1 and which require a 2:2. Please note: this document is to be used as a guide only. Final decisions will be made by the University of Leeds admissions teams.

Title: Abstract: Scope and Topics: Program Committee Chairs

Title: Content Protection, Analysis, and Forensics for Big Text Data. Abstract: Modern big data increasingly appears in a variety of forms, and text is a commonly used medium of information storage and transmission. Examples include web pages, formatted texts, plain texts, financial texts, electronic bills, and short texts in social networks. These big text data have provided many opportunities. However, big text data also brings many text security challenges. This workshop aims to bring together researchers from different paradigms solving big problems under a unified platform for sharing their work and exchanging ideas. Scope and Topics: We are soliciting novel and original research contributions related to content protection, analysis, and forensics for big text data (algorithms, software systems, applications, best practices, performance). Significant work-in-progress papers are also encouraged. Papers can be from any of the following areas, including but not limited to: big data text automation; text big data mining and deep learning; text watermarking and steganography; text steganalysis and forensics; coverless covert communication based on text documents; text content security in big data environments; copyright protection for text documents; information tracking for text documents; electronic bill (ticket) security based on blockchain; financial information security; sentiment analysis for short texts; encrypted text retrieval. Program Committee Chairs: Yuling Liu, Hunan University, China, [email protected]. Yuling Liu is an associate professor at Hunan University. She obtained her Ph.D. degree from Hunan University. Her research interests include big text data, text steganography, natural language processing, and information security.

Impact of Road-Block on Peak-Load of Coupled Traffic and Energy

energies Article: Impact of Road-Block on Peak-Load of Coupled Traffic and Energy Transportation Networks. Xian Yang 1,2, Yong Li 1,*, Ye Cai 3, Yijia Cao 1, Kwang Y. Lee 4 and Zhijian Jia 5. 1 College of Electrical and Information Engineering, Hunan University, Changsha 410082, China; [email protected] (X.Y.); [email protected] (Y.C.) 2 School of Mechanical and Electrical Engineering, Hunan City University, Yiyang 413002, China. 3 College of Electrical and Information Engineering, Changsha University of Science & Technology, Changsha 410114, China; [email protected] 4 Department of Electrical and Computer Engineering, Baylor University, Waco, TX 76798-7356, USA; [email protected] 5 State Grid Yiyang Power Supply Company, State Grid, Yiyang 413000, China; [email protected] * Correspondence: [email protected]; Tel.: +86-152-1107-6213. Received: 19 May 2018; Accepted: 25 June 2018; Published: 6 July 2018. Abstract: With electric vehicles (EVs) pouring into infrastructure systems, coupled traffic and energy transportation networks (CTETNs) can be applied to capture the interactions between power grids and transportation networks. However, most research has focused solely on the impacts of EV penetration on either power grids or transportation networks, and a simulation model of the interactions between the two critical infrastructures had yet to be developed. In this paper, we build a framework with four domains and propose a new method to simulate the interactions and the feedback effects among CTETNs. To reflect a realistic situation more accurately, an origin-destination (OD) pair strategy, a charging strategy, and an attack strategy are modeled based on the vehicle flow and power flow.

Organizing Committee-Volume 1

Organizing Committee. Organizing Committee Chairs: Zhixiang Hou, Changsha University of Science and Technology, China; Junnian Wang, Hunan University of Science and Technology, China. Program Committee Chairs: Bin Xie, Carnegie Mellon University, USA; Zhixiong Huang, Central South University, China. Publication Chairs: Zhixiang Hou, Changsha University of Science and Technology, China; Weiming Zhou, University of Metz, France. Finance Chairs: Zhixiang Hou, Changsha University of Science and Technology, China; Yihu Wu, Changsha University of Science and Technology, China. Publicity Chair: P. Zhang, Victoria University, Australia. Program Committee: Bin Xie, Carnegie Mellon University, USA; Helen Shang, Laurentian University, Canada; Hua Deng, Central South University, China; Jianxun Liu, Hunan University of Science and Technology, China; Yucel Saygin, Sabanci University, Turkey; Zhixiong Huang, Central South University, China; Xiaojiao Tong, Changsha University of Science and Technology, China; Jonas Larsson, Linköping University, Sweden; Ming Fu, Changsha University of Science and Technology, China; Jiafu Jiang, Changsha University of Science and Technology, China; Ben K. M. Sim, Hong Kong Baptist University, Hong Kong; Xiaoxiong Weng, South China University of Technology, China; Sanjay Chawla, University of Sydney, Australia; Xichun Liu, Hunan Normal University, China; Jianhua Rong, Changsha University of Science and Technology, China; Sharma Chakravarthy, University of Texas at Arlington, USA; J. H. Rong, Changsha University of Science and Technology, China; W.

Title: Abstract: Scope and Topics: Program Committee

ICAIS/ICCCS2019 Workshop/Session Proposal. Title: Content Protection, Analysis, and Forensics for Big Text Data. Abstract: Modern big data increasingly appears in a variety of forms, and text is a commonly used medium of information storage and transmission. Examples include web pages, formatted texts, plain texts, financial texts, electronic bills, and short texts in social networks. These big text data have provided many opportunities. However, big text data also brings many text security challenges. This workshop aims to bring together researchers from different paradigms solving big problems under a unified platform for sharing their work and exchanging ideas. Scope and Topics: We are soliciting novel and original research contributions related to content protection, analysis, and forensics for big text data (algorithms, software systems, applications, best practices, performance). Significant work-in-progress papers are also encouraged. Papers can be from any of the following areas, including but not limited to: big data text automation; text big data mining and deep learning; text watermarking and steganography; text steganalysis and forensics; coverless covert communication based on text documents; text content security in big data environments; copyright protection for text documents; information tracking for text documents; electronic bill (ticket) security based on blockchain; financial information security; text data deduplication and storage security; sentiment analysis and opinion mining; encrypted text retrieval; text categorization and topic modeling; web, social media and computational social science; information retrieval; automatic text generation. Program Committee Chairs: Yuling Liu, Hunan University, China, [email protected]. Yuling Liu is an associate professor at Hunan University.

Synchronous Screening Multiplexed Biomarkers of Alzheimer’s

Electronic Supplementary Material (ESI) for Chemical Communications. This journal is © The Royal Society of Chemistry 2019. Electronic Supplementary Information (ESI): Synchronous Screening Multiplexed Biomarkers of Alzheimer’s Disease by Length-encoded Aerolysin Nanopore-Integrated Triple-helix Molecular Switch. Zhen Zou,a Hua Yang,a Qi Yan,a Peng Qi,a Zhihe Qing,a Jing Zheng,b Xuan Xu,c Lihua Zhang,d Feng Feng,d and Ronghua Yang a,b*. a School of Chemistry and Food Engineering, Changsha University of Science and Technology, Changsha, 410114, P. R. China. b State Key Laboratory of Chemo/Biosensing and Chemometrics, Hunan University, Changsha 410082, P. R. China. c Children's Medical Center, People's Hospital of Hunan Province, Changsha, Hunan 410002, P. R. China. d College of Chemistry and Environmental Engineering, Shanxi Datong University, Datong, Shanxi, 037009, P. R. China. * To whom correspondence should be addressed: E-mail: [email protected]; Fax: +86-731-88822523. EXPERIMENTAL SECTION. Chemicals. All deoxyribonucleic acids (DNAs) were synthesized by Sangon Biotech Company, Ltd. (Shanghai, China), and their detailed sequence information is shown in Table S1. The oligonucleotides were purified by high-performance liquid chromatography (HPLC) and dissolved in ultrapure water as stock solutions. Trypsin-EDTA, trypsin-agarose, decane (anhydrous, ≥99%), and protein biomarkers of AD, including alpha-1 antitrypsin (AAT), Tau protein (the Tau 381 isoform) and the purified human BACE1 extracellular domain, were purchased from Sigma-Aldrich Co., Ltd. (St. Louis, MO). Immunoglobulin G (IgG), human serum albumin (HSA), glucose oxidase (GOx), lysozyme, thrombin, and insulin were commercially obtained from Dingguo Biotechnology Co., Ltd. (Beijing, China). Proaerolysin was kindly provided by Prof.

ANTHEMS of DEFEAT Crackdown in Hunan

ANTHEMS OF DEFEAT: Crackdown in Hunan Province, 1989-92. May 1992. An Asia Watch Report. A Division of Human Rights Watch. 485 Fifth Avenue, New York, NY 10017; Tel: (212) 974-8400; Fax: (212) 972-0905. 1522 K Street, NW, Suite 910, Washington, DC 20005; Tel: (202) 371-6592; Tel: (202) 371-0124. © 1992 by Human Rights Watch. All rights reserved. Printed in the United States of America. ISBN 1-56432-074-X. Library of Congress Catalog No. 92-72352. Cover Design by Patti Lacobee. Photo: AP/Wide World Photos. The cover photograph shows the huge portrait of Mao Zedong which hangs above Tiananmen Gate at the north end of Tiananmen Square, just after it had been defaced on May 23, 1989 by three pro-democracy demonstrators from Hunan Province. The three men, Yu Zhijian, Yu Dongyue and Lu Decheng, threw ink and paint at the portrait as a protest against China's one-party dictatorship and the Maoist system. They later received sentences of between 16 years and life imprisonment. THE ASIA WATCH COMMITTEE: The Asia Watch Committee was established in 1985 to monitor and promote in Asia observance of internationally recognized human rights. The chair is Jack Greenberg and the vice-chairs are Harriet Rabb and Orville Schell. Sidney Jones is Executive Director. Mike Jendrzejczyk is Washington Representative. Patricia Gossman, Robin Munro, Dinah PoKempner and Therese Caouette are Research Associates. Jeannine Guthrie, Vicki Shu and Alisha Hill are Associates. Mickey Spiegel is a Consultant. Contents: Introduction. 1. The 1989 Democracy Movement in Hunan Province. Student activism: a Hunan tradition.

Gypenosides Alleviate Cone Cell Death in a Zebrafish Model

antioxidants Article: Gypenosides Alleviate Cone Cell Death in a Zebrafish Model of Retinitis Pigmentosa. Xing Li 1,†, Reem Hasaballah Alhasani 2,3,†, Yanqun Cao 1, Xinzhi Zhou 2, Zhiming He 1, Zhihong Zeng 4, Niall Strang 5 and Xinhua Shu 1,2,5,*. 1 School of Basic Medical Sciences, Shaoyang University, Shaoyang 422000, China; [email protected] (X.L.); [email protected] (Y.C.); [email protected] (Z.H.) 2 Department of Biological and Biomedical Sciences, Glasgow Caledonian University, Glasgow G4 0BA, UK; [email protected] (R.H.A.); [email protected] (X.Z.) 3 Department of Biology, Faculty of Applied Science, Umm Al-Qura University, Makkah 21961, Saudi Arabia. 4 College of Biological and Environmental Engineering, Changsha University, Changsha 410022, China; [email protected] 5 Department of Vision Science, Glasgow Caledonian University, Glasgow G4 0BA, UK; [email protected] * Correspondence: [email protected] † Joint first authors. Abstract: Retinitis pigmentosa (RP) is a group of visual disorders caused by mutations in over 70 genes. RP is characterized by initial degeneration of rod cells and late cone cell death, regardless of genetic abnormality. Rod cells are the main consumers of oxygen in the retina, and after the death of rod cells, the cone cells have to endure high levels of oxygen, which in turn leads to oxidative damage and cone degeneration. Gypenosides (Gyp) are major dammarane-type saponins of Gynostemma pentaphyllum that are known to reduce oxidative stress and inflammation. In this project we assessed the protective effect of Gyp against cone cell death in the rpgrip1 mutant zebrafish, which recapitulate the classical pathological features found in RP patients.

1 Please Read These Instructions Carefully

PLEASE READ THESE INSTRUCTIONS CAREFULLY. MISTAKES IN YOUR CSC APPLICATION COULD LEAD TO YOUR APPLICATION BEING REJECTED. Visit http://studyinchina.csc.edu.cn/#/login to CREATE AN ACCOUNT. • The online application works best with Firefox or Internet Explorer (11.0). Menu selection functions may not work with other browsers. • The online application is only available in Chinese and English. 1 • Please read this page carefully before clicking on the “Application online” tab to start your application. 2 • The Program Category is Type B. • The Agency No. matches the university you will be attending. See Appendix A for a list of the Chinese university agency numbers. • Use the + by each section to expand that section of the form. 3 • Fill out your personal information accurately. o Make sure to have a valid passport at the time of your application. o Use the name and date of birth that are on your passport. Use the name on your passport for all correspondence with the CLIC office or Chinese institutions. o List Canadian as your Nationality, even if you have dual citizenship. Only Canadian citizens are eligible for CLIC support. o Under Permanent Address, enter the mailing address where you want your admission documents to be sent. Leave Current Address blank. Contact your home or host university coordinator to find out when you will receive your admission documents. Contact information for your home university CLIC liaison can be found here: http://clicstudyinchina.com/contact-us/ 4 • Fill out your Education and Employment History accurately. o For Highest Education, enter your current degree studies.

4-Traffic Flow Data

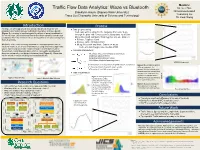

Traffic Flow Data Analytics: Waze vs Bluetooth. Brooklynn Hauck (Slippery Rock University), Tracy Liu (Changsha University of Science and Technology). Mentors: Dr. Lee Han, Nima Hoseinzadeh, Yuandong Liu, Dr. Kwai Wong. Introduction: Waze, a GPS app provided by Google that offers turn-by-turn navigation information and user-submitted travel times and route details (Figure 1), has been downloaded by millions of people worldwide in recent years. Universities and researchers have started to use the data provided by Waze for research without testing whether that data is accurate. Bluetooth, a wireless technology standard for exchanging data between fixed and mobile devices over short distances using short-wavelength radio waves, has been shown to be reliable in research done by others. Bluetooth data is collected by two Bluetooth detectors set up a fixed distance apart (Figure 2), so Waze data can be tested for reliability by comparing the two. Process: ● Data preprocessing: code was written in R to go through the data, remove unnecessary data, and merge the Bluetooth and Waze data into one data set (remove duplicate data; remove Waze historical data; merge Bluetooth and Waze data in 24 hours). The code was run through Comet on OpenDIEL. ● Calculate parameters: three error formulas were chosen as parameters: MAE (Mean Absolute Error), RMSE (Root Mean Square Error), and MAPE (Mean Absolute Percentage Error), where N is the number of Bluetooth/Waze speed samples in each hour and y is the value of each Bluetooth speed sample. [Figure 6: MAPE line plots of different segments (31 segments).]
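
The three error measures named in the poster excerpt above (MAE, RMSE, MAPE) can be sketched as follows. This is only an illustration, not the authors' code: the poster says its analysis was written in R, whereas this sketch uses Python, and the function name `speed_errors` and the choice of Bluetooth speeds as the reference values are assumptions made here. The excerpt is truncated before the formulas themselves, so the standard textbook definitions are used.

```python
import math


def speed_errors(bluetooth, waze):
    """Return (MAE, RMSE, MAPE in percent) of Waze speeds against Bluetooth speeds.

    Assumption: Bluetooth speeds are the reference (y) and Waze speeds are the
    values being tested; both lists hold the N paired samples for one hour.
    """
    if len(bluetooth) != len(waze) or not bluetooth:
        raise ValueError("need two non-empty samples of equal length")
    if any(b == 0 for b in bluetooth):
        # MAPE divides by the reference value, so it is undefined at zero speed.
        raise ValueError("MAPE is undefined for a reference speed of zero")

    n = len(bluetooth)
    diffs = [w - b for b, w in zip(bluetooth, waze)]

    mae = sum(abs(d) for d in diffs) / n                      # mean absolute error
    rmse = math.sqrt(sum(d * d for d in diffs) / n)           # root mean square error
    mape = 100.0 * sum(abs(d) / abs(b) for d, b in zip(diffs, bluetooth)) / n
    return mae, rmse, mape


if __name__ == "__main__":
    # Hypothetical speeds for one road segment during one hour.
    mae, rmse, mape = speed_errors([50.0, 40.0], [55.0, 36.0])
    print(f"MAE={mae:.2f}  RMSE={rmse:.2f}  MAPE={mape:.1f}%")
```

MAE and RMSE are in the same units as the speeds, while MAPE is scale-free, which is presumably why it suits comparisons across road segments with different typical speeds.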

Talent Cultivation in the Field of Infrastructure Construction and Professional Evaluation of Civil Engineering

2020 Conference on Social Science and Natural Science (SSNS2020). Talent Cultivation in the Field of Infrastructure Construction and Professional Evaluation of Civil Engineering. Mingyi Qi, Department of Engineering Cost, College of Construction Engineering, Yunnan Technology and Business University, Kunming City, Yunnan Province, China, [email protected]. Keywords: Neighborhood of Infrastructure Construction; Talent Training; Civil Engineering; Professional Evaluation. Abstract: Because of gaps in educational resources and teaching levels among colleges and universities, the level of graduates is uneven and social evaluations of them differ. Employers and the parents of candidates therefore have an urgent need for an objective and reasonable evaluation of the setup, running level and teaching quality of civil engineering majors in colleges and universities. Based on these requirements, this article takes the evaluation of professional school level as its research direction. It combines journal literature review and online literature survey with theoretical research and empirical analysis to discuss the professional evaluation method and index system, establishes a civil engineering professional evaluation system on the basis of the analytic hierarchy process, and carries out an empirical analysis and evaluation of the civil engineering majors in four colleges and universities in Hunan Province. The empirical analysis results show that the evaluation results of the civil engineering professional evaluation system established