City Research Online

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

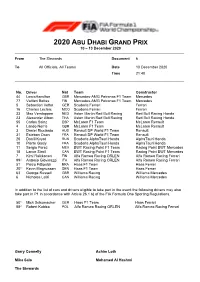

2020 ABU DHABI GRAND PRIX 10 – 13 December 2020

2020 ABU DHABI GRAND PRIX 10 – 13 December 2020

From: The Stewards; To: All Officials, All Teams; Document: 6; Date: 10 December 2020; Time: 21:40

| No. | Driver | Nat | Team | Constructor |
|---|---|---|---|---|
| 44 | Lewis Hamilton | GBR | Mercedes-AMG Petronas F1 Team | Mercedes |
| 77 | Valtteri Bottas | FIN | Mercedes-AMG Petronas F1 Team | Mercedes |
| 5 | Sebastian Vettel | GER | Scuderia Ferrari | Ferrari |
| 16 | Charles Leclerc | MCO | Scuderia Ferrari | Ferrari |
| 33 | Max Verstappen | NED | Aston Martin Red Bull Racing | Red Bull Racing Honda |
| 23 | Alexander Albon | THA | Aston Martin Red Bull Racing | Red Bull Racing Honda |
| 55 | Carlos Sainz | ESP | McLaren F1 Team | McLaren Renault |
| 4 | Lando Norris | GBR | McLaren F1 Team | McLaren Renault |
| 3 | Daniel Ricciardo | AUS | Renault DP World F1 Team | Renault |
| 31 | Esteban Ocon | FRA | Renault DP World F1 Team | Renault |
| 26 | Daniil Kvyat | RUS | Scuderia AlphaTauri Honda | AlphaTauri Honda |
| 10 | Pierre Gasly | FRA | Scuderia AlphaTauri Honda | AlphaTauri Honda |
| 11 | Sergio Perez | MEX | BWT Racing Point F1 Team | Racing Point BWT Mercedes |
| 18 | Lance Stroll | CAN | BWT Racing Point F1 Team | Racing Point BWT Mercedes |
| 7 | Kimi Raikkonen | FIN | Alfa Romeo Racing ORLEN | Alfa Romeo Racing Ferrari |
| 99² | Antonio Giovinazzi | ITA | Alfa Romeo Racing ORLEN | Alfa Romeo Racing Ferrari |
| 51 | Pietro Fittipaldi | BRA | Haas F1 Team | Haas Ferrari |
| 20¹ | Kevin Magnussen | DEN | Haas F1 Team | Haas Ferrari |
| 63 | George Russell | GBR | Williams Racing | Williams Mercedes |
| 6 | Nicholas Latifi | CAN | Williams Racing | Williams Mercedes |

In addition to the list of cars and drivers eligible to take part in the event the following drivers may also take part in P1 in accordance with Article 26.1 b) of the FIA Formula One Sporting Regulations. -

2013 Formula One Grand Prix

Cover Sheet. Event: 2013 Austin Formula One Grand Prix (F1 USGP); Date: November 15 – 17, 2013; Location: Austin, Texas; Report Date: September 15, 2014

Post Event Analysis: 2013 Austin Formula One Grand Prix (F1 USGP)

I. Summary of Conclusion. This office concludes that the initial estimate of direct, indirect, and induced tax impact of $25,024,710 is reasonable based on the tax increases that occurred in the market area during the period in which the 2012 Austin Formula One Grand Prix (F1 USGP) event occurred.

II. Introduction. This post-event analysis of the F1 USGP is intended to evaluate the short-term tax benefits to the state through review of tax revenue data to determine the level of incremental tax impact from the event. A primary purpose of this analysis is to determine the reasonableness of the initial incremental tax estimate made by the Comptroller’s office prior to the event, in support of a request for a Major Events Trust Fund (METF). The initial estimate is included in Appendix A. Long term tax benefits due to the construction and ongoing operations of the venue are not considered in this analysis, but are significant. Large events, particularly “premier events” such as this one, with heavy promotion, corporate sponsorship and spending, and “luxury” spending by visitors, will tend to create significant ripples in the local economy, and in fact, even the regional economy outside the immediate area considered in this report. The purpose of this report is to analyze the changes in tax collections during the period in which the event occurred. -

2017 Sustainability Report

Ferrari N.V. SUSTAINABILITY REPORT 2017 Ferrari N.V. Official Seat: Amsterdam, The Netherlands Dutch Trade Registration Number: 64060977 Administrative Offices: Via Abetone Inferiore 4 I- 41053, Maranello (MO) Italy Ferrari N.V. SUSTAINABILITY REPORT 2017 2 Table of contents Letter from the Chairman and Chief Executive Officer 05 A 70-year journey to sustainability 09 Ferrari Group 13 About Ferrari 13 Our DNA 14 Our Values 15 Our Strategy 15 Our Business 17 Sports and GT Cars 17 Formula 1 Activities 29 Brand Activities 30 Materiality Matrix of Ferrari Group 32 Stakeholder engagement 36 Our governance 39 Sustainability Risks 43 Product Responsibility 47 Research, Innovation and Technology 47 Client Relations 53 Vehicle Safety 60 Responsible Supply Chain 63 Production process 64 Conflict minerals 67 Our people 69 Working environment 69 Training and talent development 73 Occupational Health and Safety 77 Our employees in numbers 79 Our Environmental Responsibility 85 Plants and circuits 85 Vehicles environmental impact 94 Economic value generated and distributed 103 Ferrari contributes towards the community 105 Ferrari & universities 105 Ferrari Museum Maranello & Museo Enzo Ferrari (MEF) 106 Scuderia Ferrari Club 106 Ferrari Driver Academy 108 Methodology and scope 111 GRI content index 113 Independent Auditor’s Report 124 3 Letter from the Chairman and Chief Executive Officer Dear Shareholders, 2017 marked Ferrari’s 70th Anniversary. We were surprised and delighted by the enthusiasm and the extraordinary turnout, with tens of thousands of clients and fans participating in the yearlong tour of celebrations all over the world. Events were held in over 60 different countries, providing a truly vivid and unforgettable display of the brand’s power. -

Latest Offerings

ChicLuxury: Cruises, Resorts, Tours

Welcome to Roadtrips, the Ultimate in Sports Travel

Together with our partner Roadtrips, we are proud to offer completely customized luxury sports travel experiences to the world’s most sought after events. With over 28 years of experience behind them, Roadtrips has access to an extensive collection of upmarket accommodations, VIP viewing options, and plenty of extras you can choose from to personalize your ultimate sports travel experience. We’ll work closely with Roadtrips’ team of Sports Travel Experts and professional on-site hosts to ensure you receive high-touch service every step of the way.

2020 Masters, Nov 9-15, 2020, Augusta, Georgia. The ultimate bucket list experience for golf fans. Feature Hotel: Ritz-Carlton Reynolds, Lake Oconee (AAA Five Diamond Award).

2020 Summer Games, Tokyo, Japan | Jul 23 - Aug 8, 2021. Luxury travel packages to the highly anticipated Summer Games in Tokyo. Featuring some of Tokyo’s most sought after hotels: Four Seasons Otemachi, Tokyo’s newest luxury property, overlooking the Imperial Palace; Four Seasons Marunouchi, stylish yet cozy, an intimate anomaly among Tokyo’s expansive luxury properties; Park Hyatt Tokyo, one of Tokyo’s most loved luxury hotels, located just 1.5 miles from New National Stadium.

2021 Super Bowl, Feb 4-8, 2021, Tampa, Florida. Experience North America’s single biggest sporting event in sunny Florida. Contact us for current availability on luxury downtown hotel options.

Calendar of Sporting Events. Custom Sports Travel Packages Including Your Preferred: -

Download

No. 357, 26 July 2016. Ferrari, where are you going? A year after Vettel's victory in Hungary, the Rossa is off the podium, far behind Mercedes and under pressure from Red Bull. And there are no certainties about 2017 either. FORMULA 1: Testing in Barcelona.

Registered with the Civil Court of Bologna under number 4/06 of 30/04/2003. www.italiaracing.net Edited by: Massimo Costa, Stefano Semeraro, Marco Minghetti. Photographs: Photo4. Production: Inpagina srl, Via Giambologna, 2, 40138 Bologna, Tel. 051 6013841, Fax 051 5880321 [email protected] © All articles and images contained in the Italiaracing Magazine are understood to be subject to reserved reproduction rights under Art. 7 of R.D. 18 May 1942 no. 1369.

diBaffi: Il graffio (The scratch)

HUNGARIAN GP: The case. Ignored yellow flags that go unpunished, rules changed between one race and the next: F1 is increasingly incomprehensible. No longer only to the fans, but also to those who have to interpret it on track. When the rule breaks the rules.

Stefano Semeraro. Rules are needed, of course. Rules are rules, as the English say. But too many rules? And too many changes to the rules? And rules applied inconsistently? The Budapest weekend reopened the front of protest against the FIA, guilty according to many of having tangled the skein of the top series too much, a skein that has never been smooth and free of knots anyway, quite the opposite. This time, however, the front is broad: it includes a large share of spectators who struggle to keep up with the rules and the workings of the Circus, the journalists (whose job, fair enough, is to criticize, hopefully with good judgment) and also the drivers, who are tired of seeing codes and procedures changed under their noses. -

2014 Monaco Grand Prix

2014 MONACO GRAND PRIX

From: The FIA Formula One Technical Delegate; Document: 11; To: The FIA Stewards of the Meeting; Date: 22 May 2014; Time: 11:45; Title: Technical Delegate's Report; Description: New PU components used by drivers; Enclosed: 06 Monaco GP 14 TDR5.pdf

Jo Bauer, The FIA Formula One Technical Delegate

2014 MONACO GRAND PRIX

From: The FIA Formula One Technical Delegate; Date: 22 May 2014; To: The Stewards of the Meeting; Time: 11:45

Technical Delegate’s Report

The following drivers will start the sixth Event of the 2014 Formula One World Championship with a new control electronics DC-DC (CE DC-DC):

| Number | Car | Driver | Previously used CE |
|---|---|---|---|
| 99 | Sauber Ferrari | Adrian Sutil | 3 |

The control electronics DC-DC used by the above drivers is one of the five new control electronics DC-DC allowed for the 2014 Championship season and this is in conformity with Article 28.4a of the 2014 Formula One Sporting Regulations.

The following drivers will start the sixth Event of the 2014 Formula One World Championship with a new control electronics CUK (CE CUK):

| Number | Car | Driver | Previously used CE |
|---|---|---|---|
| 08 | Lotus Renault | Romain Grosjean | 1 |
| 13 | Lotus Renault | Pastor Maldonado | 2 |
| 10 | Caterham Renault | Kamui Kobayashi | 4 |

The control electronics CUK used by the above drivers is one of the five new control electronics CUK allowed for the 2014 Championship season and this is in conformity with Article 28.4a of the 2014 Formula One Sporting Regulations.

The following drivers will start the sixth Event of the 2014 Formula One World Championship with a new control electronics CUH (CE CUH):

| Number | Car | Driver | Previously used CE |
|---|---|---|---|
| 01 | Red Bull Racing Renault | Sebastian Vettel | 3 |
| 03 | Red Bull Racing Renault | Daniel Ricciardo | 2 |
| 08 | Lotus Renault | Romain Grosjean | 1 |
| 13 | Lotus Renault | Pastor Maldonado | 2 |

The control electronics CUH used by the above drivers is one of the five new control electronics CUH allowed for the 2014 Championship season and this is in conformity with Article 28.4a of the 2014 Formula One Sporting Regulations. -

Formula 1 Race Car Performance Improvement by Optimization of the Aerodynamic Relationship Between the Front and Rear Wings

The Pennsylvania State University The Graduate School College of Engineering FORMULA 1 RACE CAR PERFORMANCE IMPROVEMENT BY OPTIMIZATION OF THE AERODYNAMIC RELATIONSHIP BETWEEN THE FRONT AND REAR WINGS A Thesis in Aerospace Engineering by Unmukt Rajeev Bhatnagar © 2014 Unmukt Rajeev Bhatnagar Submitted in Partial Fulfillment of the Requirements for the Degree of Master of Science December 2014 The thesis of Unmukt R. Bhatnagar was reviewed and approved* by the following: Mark D. Maughmer Professor of Aerospace Engineering Thesis Adviser Sven Schmitz Assistant Professor of Aerospace Engineering George A. Lesieutre Professor of Aerospace Engineering Head of the Department of Aerospace Engineering *Signatures are on file in the Graduate School ii Abstract The sport of Formula 1 (F1) has been a proving ground for race fanatics and engineers for more than half a century. With every driver wanting to go faster and beat the previous best time, research and innovation in engineering of the car is really essential. Although higher speeds are the main criterion for determining the Formula 1 car’s aerodynamic setup, post the San Marino Grand Prix of 1994, the engineering research and development has also targeted for driver’s safety. The governing body of Formula 1, i.e. Fédération Internationale de l'Automobile (FIA) has made significant rule changes since this time, primarily targeting car safety and speed. Aerodynamic performance of a F1 car is currently one of the vital aspects of performance gain, as marginal gains are obtained due to engine and mechanical changes to the car. Thus, it has become the key to success in this sport, resulting in teams spending millions of dollars on research and development in this sector each year. -

FOR the FIRST TIME BUEMI and DA COSTA STORM to VICTORY 26-Page Special “Formula E Goes South America“

January 2015, 2nd volume, Number 002. eNews. FOR THE FIRST TIME BUEMI AND DA COSTA STORM TO VICTORY. 26-page special “Formula E goes South America”. The big Formula E Social Media Analysis. Pros and Cons: Minimum pit stop time. Energy Recovery in Formula E. Formula E testing in Uruguay. GET CHARGED! How Qualcomm revolutionises our understanding of battery charging.

EDITORIAL | 01. 365 BLANK PAGES - LET'S WRITE SOME GOOD ONES

Happy new year everyone! Half of January is already gone and 2015 does not feel very new to me anymore, yet I am still highly motivated. 2015 is going to be a great year, at least I am convinced of this. So you can see that my early year's optimism still hasn't passed. 2015 is the year when Formula E finally makes its way to Europe and to e-racing.net's and my hometown - Berlin. We seriously cannot wait to have the electrifying Formula E buzz over here and thanks to our high-running motivation we will present you with a content-packed “Europe Special” edition of eNews which will be released in time for the Monte Carlo ePrix. But the “European Special” is not the only surprise we have in store for you. When we are all still drying our tears about the first Formula E season of all time ending, we will give you the amazing opportunity to travel back in time and experience it all again - in our big “Formula E season review 2014-2015”.

The 2015 Berlin ePrix - we cannot wait!

eNews release dates 2015: 16-01-2015 eNews 002; 13-02-2015 eNews 003; 13-03-2015 eNews 004; 14-03-2015 Miami ePrix -

Drivers Hoping Barrichello Returns to F1 Next Year

14 Tuesday 29th November, 2011 Drivers hoping Barrichello returns to F1 next year Rameez Raja Restoring cricket ties with India most important: Rameez Karachi, Nov 27: “I think he (Zaka Pakistan’s former captain Ashraf) has made just the Rameez Raja feels restoring right start, since this (Indo- bilateral cricket ties with Pak) series benefits both India should be the top pri- countries and benefits ority of the PCB. cricket. There is no bigger “Our cricket has got a series than India-Pakistan Williams driver Rubens Barrichello, of Brazil, center, shares a moment with Ferrari driver Felipe Massa, also from Brazil, right, as Ferrari driver Fernando Alonso, few very important priori- and they should anoint it from Spain, stands next during a meeting to celebrate Massa’s 100th grand prix as a Ferrari driver. The Brazilian Formula One Grand Prix was held on Sunday. (AP ties which need to be as an ‘icon’ series, as Test looked at. First one obvi- cricket is already getting Photo/Victor R. Caivano) ously is how to relaunch ‘knockout punches’ from BY TALES AZZONI Brazilian GP on Sunday because would be in his longtime friend’s time I can do something like this.” cricket back in Pakistan. the limited overs formats,” Williams is yet to announce its best interests. Teammate Pastor Maldonado Number two is how to the cricketer turned com- SAO PAULO (AP) — If driver lineup. There have been rumors Massa said he advised will start 18th in Sunday’s season- improve our standing and mentator said. support counted, Rubens that Kimi Raikkonen may return Barrichello to retire to avoid hav- ending Brazilian GP. -

BRDC Bulletin

BULLETIN BULLETIN OF THE BRITISH RACING DRIVERS’ CLUB DRIVERS’ RACING BRITISH THE OF BULLETIN Volume 30 No 2 • SUMMER 2009 OF THE BRITISH RACING DRIVERS’ CLUB Volume 30 No 2 2 No 30 Volume • SUMMER 2009 SUMMER THE BRITISH RACING DRIVERS’ CLUB President in Chief HRH The Duke of Kent KG Volume 30 No 2 • SUMMER 2009 President Damon Hill OBE CONTENTS Chairman Robert Brooks 04 PRESIDENT’S LETTER 56 OBITUARIES Directors 10 Damon Hill Remembering deceased Members and friends Ross Hyett Jackie Oliver Stuart Rolt 09 NEWS FROM YOUR CIRCUIT 61 SECRETARY’S LETTER Ian Titchmarsh The latest news from Silverstone Circuits Ltd Stuart Pringle Derek Warwick Nick Whale Club Secretary 10 SEASON SO FAR 62 FROM THE ARCHIVE Stuart Pringle Tel: 01327 850926 Peter Windsor looks at the enthralling Formula 1 season The BRDC Archive has much to offer email: [email protected] PA to Club Secretary 16 GOING FOR GOLD 64 TELLING THE STORY Becky Simm Tel: 01327 850922 email: [email protected] An update on the BRDC Gold Star Ian Titchmarsh’s in-depth captions to accompany the archive images BRDC Bulletin Editorial Board 16 Ian Titchmarsh, Stuart Pringle, David Addison 18 SILVER STAR Editor The BRDC Silver Star is in full swing David Addison Photography 22 RACING MEMBERS LAT, Jakob Ebrey, Ferret Photographic Who has done what and where BRDC Silverstone Circuit Towcester 24 ON THE UP Northants Many of the BRDC Rising Stars have enjoyed a successful NN12 8TN start to 2009 66 MEMBER NEWS Sponsorship and advertising A round up of other events Adam Rogers Tel: 01423 851150 32 28 SUPERSTARS email: [email protected] The BRDC Superstars have kicked off their season 68 BETWEEN THE COVERS © 2009 The British Racing Drivers’ Club. -

List of Turbografx16 Games

List of TurboGrafx16 Games

1) 1941: Counter Attack
2) 1943: Kai
3) 21-Emon
4) Adventure Island
5) Aero Blasters
6) After Burner II
7) Air Zonk
8) Aldynes: The Mission Code for Rage Crisis
9) Alice in Wonderdream
10) Alien Crush
11) Andre Panza Kick Boxing
12) Ankoku Densetsu
13) Aoi Blink
14) Appare! Gateball
15) Artist Tool
16) Atomic Robo-Kid Special
17) Ballistix
18) Bari Bari Densetsu
19) Barunba
20) Batman
21) Battle Ace
22) Battle Lode Runner
23) Battle Royale
24) Be Ball
25) Benkei Gaiden
26) Bikkuri Man World
27) Blazing Lazers
28) Blodia
29) Bloody Wolf
30) Body Conquest II ~The Messiah~
31) Bomberman
32) Bomberman '93
33) Bomberman '93 Special
34) Bomberman '94
35) Bomberman: Users Battle
36) Bonk 3: Bonk's Big Adventure
37) Bonk's Adventure
38) Bonk's Revenge
39) Bouken Danshaku Don: The Lost Sunheart
40) Boxyboy
41) Bravoman
42) Break In
43) Bubblegum Crash!: Knight Sabers 2034
44) Bull Fight: Ring no Haja
45) Burning Angels
46) Cadash
47) Champion Wrestler
48) Champions Forever Boxing
49) Chase H.Q.
50) Chew Man Fu
51) China Warrior
52) Chozetsu Rinjin Berabo Man
53) Circus Lido
54) City Hunter
55) Columns
56) Coryoon
57) Cratermaze
58) Cross Wiber: Cyber-Combat-Police
59) Cyber Cross
60) Cyber Dodge
61) Cyber Knight
62) Cyber-Core
63) DOWNLOAD
64) Daichikun Crisis: Do Natural
65) Daisenpuu
66) Darius Alpha

84) Dragon's Curse
85) Drop Off
86) Drop Rock Hora Hora
87) Dungeon Explorer
88) Dungeons & Dragons: Order of the Griffon
89) Energy
90) F1 CIRCUS
91) F1 Circus '91
92) F1 Circus '92
93) F1 Dream
94) F1 Pilot
95) F1 Triple -

F1-2014-Equipos.Pdf

1 Infiniti RED BULL Racing 2 Scuderia FERRARI 3 MCLAREN Mercedes 4 Lotus F1 Team 5 MERCEDES AMG Petronas F16 SAUBER F1 Team ESCUDERIA RED BULL FERRARI MCLAREN LOTUS MERCEDES Sui SAUBER Chasis RB10 F14-T MP4-29 E22 F1 W05 C33 Oficinas Milton Keynes, ING Maranello, ITA Woking, ING Enstone, ING Brackley, ING Sui Hinwill, SUI Patrocinador 1 INFINITI MARLBORO Rus GENII PETRONAS 1 CLARO Motor Renault F1-2014 Ferrari Mercedes-Benz PU106A Renault F1-2014 Mercedes-Benz PU106A-H Ferrari Aceite, PILOTOSCombust TOTAL SHELL MOBIL TOTAL PETRONAS SHELL Piloto 1 14 Sebastian VETTEL 1 Fernando ALONSO 1 Jenson BUTTON Romain GROSJEAN 1 Lewis HAMILTON Adrian SUTIL Din Ven Mex PERSONALPiloto 2 13 Daniel RICCIARDO Fin Kimi RAIKKONEN Kevin MAGNUSSEN Pastor MALDONADO Nico ROSBERG Esteban GUTIERREZ Director EQUIPO Christian Horner Marco Mattiacci Eric Boulliier Rus Gerard Lopez Toto Wolff Aut Monisha Kaltenborn Técnico Adrian Newey Pat Fry Tim Goss Nick Chester Paddy Lowe Diseño Rob Marshall ECE Nikolas Tombazis Neil Oatley Tim Densham Christophe Mary Sui Christoph Zimmermann Aerodinámica GBr Peter Prodromou Nicolas Hennel Doug McKiernan Jon Tomlinson Loic Bigois Willem Toet Ing.1 Guillaume Rocquelin Ita Andrea Stella 1 Dave Robson Ciaron Pilbeam Jock Clear Francesco Nenci PRUEBAS:Ing.2 Simon Rennie GBr Rob Smedley 2 Andy Latham Mark Slade Tony Ross Sui Marco Schüpbach Reserva: Sui Sebastien Buemi ESP Pedro De la Rosa BEL Stofel Vandoorne Charles Pic Rus Sergei Sirotkin Pruebas: 2 Antonio Felix da Costa Giedo Van der Garde 3o: 7 Sahara FORCE INDIA F1 Team 8 WILLIAMS