Afterimage Toon Blur: Procedural Generation of Cartoon Blur for 3D Models in Real Time by Robert L. Smith

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

The Development and Validation of the Game User Experience Satisfaction Scale (Guess)

THE DEVELOPMENT AND VALIDATION OF THE GAME USER EXPERIENCE SATISFACTION SCALE (GUESS) A Dissertation by Mikki Hoang Phan Master of Arts, Wichita State University, 2012 Bachelor of Arts, Wichita State University, 2008 Submitted to the Department of Psychology and the faculty of the Graduate School of Wichita State University in partial fulfillment of the requirements for the degree of Doctor of Philosophy May 2015 © Copyright 2015 by Mikki Phan All Rights Reserved THE DEVELOPMENT AND VALIDATION OF THE GAME USER EXPERIENCE SATISFACTION SCALE (GUESS) The following faculty members have examined the final copy of this dissertation for form and content, and recommend that it be accepted in partial fulfillment of the requirements for the degree of Doctor of Philosophy with a major in Psychology. _____________________________________ Barbara S. Chaparro, Committee Chair _____________________________________ Joseph Keebler, Committee Member _____________________________________ Jibo He, Committee Member _____________________________________ Darwin Dorr, Committee Member _____________________________________ Jodie Hertzog, Committee Member Accepted for the College of Liberal Arts and Sciences _____________________________________ Ronald Matson, Dean Accepted for the Graduate School _____________________________________ Abu S. Masud, Interim Dean iii DEDICATION To my parents for their love and support, and all that they have sacrificed so that my siblings and I can have a better future iv Video games open worlds. — Jon-Paul Dyson v ACKNOWLEDGEMENTS Althea Gibson once said, “No matter what accomplishments you make, somebody helped you.” Thus, completing this long and winding Ph.D. journey would not have been possible without a village of support and help. While words could not adequately sum up how thankful I am, I would like to start off by thanking my dissertation chair and advisor, Dr. -

Nintendo Switch

Nintendo Switch Last Updated on September 30, 2021 Title Publisher Qty Box Man Comments サムライフォース斬!Natsume Atari Inc. NA Publi... 1-2-Switch Nintendo Aleste Collection M2 Arcade Love: Plus Pengo! Mebius Armed Blue Gunvolt: Striker Pack Inti Creates ARMS Nintendo Astral Chain Nintendo Atsumare Dōbutsu no Mori Nintendo Bare Knuckle IV 3goo Battle Princess Madelyn 3goo Biohazard: Revelations - Unveiled Edition Capcom Blaster Master Zero Trilogy: MetaFight Chronicle: English Version Inti Creates Bloodstained: Ritual of the Night 505 Games Boku no Kanojo wa Ningyo Hime!? Sekai Games Capcom Belt Action Collection Capcom Celeste Flyhigh Works Chocobo no Fushigi na Dungeon: Every Buddy! Square Enix Clannad Prototype CLANNAD: Hikari Mimamoru Sakamichi de Prototype Code of Princess EX Pikii Coffee Talk Coma, The: Double Cut Chorus Worldwide Cotton Reboot!: Limited Edition BEEP Cotton Reboot! BEEP Daedalus: The Awakening of Golden Jazz: Limited Edition Arc System Works Dairantō Smash Bros. Special Nintendo Darius Cozmic Collection Taito Darius Cozmic Collection Special Edition Taito Darius Cozmic Revelation Taito Dead or School Studio Nanafushi Devil May Cry Triple Pack Capcom Donkey Kong: Tropical Freeze Nintendo Dragon Marked For Death: Limited Edition Inti Creates Dragon Marked For Death Inti Creates Dragon Quest Heroes I-II Square Enix Enter the Gungeon Kakehashi Games ESP RA.DE. ψ M2 Fate/Extella: Limited Box XSEED Games Fate/Extella Link XSEED Games Fight Crab -

REGAIN CONTROL, in the SUPERNATURAL ACTION-ADVENTURE GAME from REMEDY ENTERTAINMENT and 505 GAMES, COMING in 2019 World Premiere

REGAIN CONTROL, IN THE SUPERNATURAL ACTION-ADVENTURE GAME FROM REMEDY ENTERTAINMENT AND 505 GAMES, COMING IN 2019 World Premiere Trailer for Remedy’s “Most Ambitious Game Yet” Revealed at Sony E3 Conference Showcases Complex Sandbox-Style World CALABASAS, Calif. – June 11, 2018 – Internationally renowned developer Remedy Entertainment, Plc., along with its publishing partner 505 Games, have unveiled their highly anticipated game, previously known only by its codename, “P7.” From the creators of Max Payne and Alan Wake comes Control, a third-person action-adventure game combining Remedy’s trademark gunplay with supernatural abilities. Revealed for the first time at the official Sony PlayStation E3 media briefing in the worldwide exclusive debut of the first trailer, Control is set in a unique and ever-changing world that juxtaposes our familiar reality with the strange and unexplainable. Welcome to the Federal Bureau of Control: https://youtu.be/8ZrV2n9oHb4 After a secretive agency in New York is invaded by an otherworldly threat, players will take on the role of Jesse Faden, the new Director struggling to regain Control. This sandbox-style, gameplay- driven experience built on the proprietary Northlight engine challenges players to master a combination of supernatural abilities, modifiable loadouts and reactive environments while fighting through the deep and mysterious worlds Remedy is known and loved for. “Control represents a new exciting chapter for us, it redefines what a Remedy game is. It shows off our unique ability to build compelling worlds while providing a new player-driven way to experience them,” said Mikael Kasurinen, game director of Control. “A key focus for Remedy has been to provide more agency through gameplay and allow our audience to experience the story of the world at their own pace” “From our first meetings with Remedy we’ve been inspired by the vision and scope of Control, and we are proud to help them bring this game to life and get it into the hands of players,” said Neil Ralley, president of 505 Games. -

Application of Retrograde Analysis to Fighting Games

University of Denver Digital Commons @ DU Electronic Theses and Dissertations Graduate Studies 1-1-2019 Application of Retrograde Analysis to Fighting Games Kristen Yu University of Denver Follow this and additional works at: https://digitalcommons.du.edu/etd Part of the Artificial Intelligence and Robotics Commons, and the Game Design Commons Recommended Citation Yu, Kristen, "Application of Retrograde Analysis to Fighting Games" (2019). Electronic Theses and Dissertations. 1633. https://digitalcommons.du.edu/etd/1633 This Thesis is brought to you for free and open access by the Graduate Studies at Digital Commons @ DU. It has been accepted for inclusion in Electronic Theses and Dissertations by an authorized administrator of Digital Commons @ DU. For more information, please contact [email protected],[email protected]. Application of Retrograde Analysis to Fighting Games A Thesis Presented to the Faculty of the Daniel Felix Ritchie School of Engineering and Computer Science University of Denver In Partial Fulfillment of the Requirements for the Degree Master of Science by Kristen Yu June 2019 Advisor: Nathan Sturtevant ©Copyright by Kristen Yu 2019 All Rights Reserved Author: Kristen Yu Title: Application of Retrograde Analysis to Fighting Games Advisor: Nathan Sturtevant Degree Date: June 2019 Abstract With the advent of the fighting game AI competition [34], there has been re- cent interest in two-player fighting games. Monte-Carlo Tree-Search approaches currently dominate the competition, but it is unclear if this is the best approach for all fighting games. In this thesis we study the design of two-player fighting games and the consequences of the game design on the types of AI that should be used for playing the game, as well as formally define the state space that fighting games are based on. -

Skullgirls: 2D Fighting at Its Finest

The Retriever Weekly 15 Tuesday, April 24, 2012 Middle school students Skullgirls: 2D learn how to “get techy”Technologyfighting at its finest BY COURTNEY PERDUE Contributing Writer The past several months have seen the release of many great fighting games. Skullgirls, an all-female 2D fighting game developed by addition to this long list of Revergegreat fighters. Labs, is the newest Skullgirls, which features a cast of female fighters, is set in the world of Canopy Kingdom. The characters fight for the opportunity to control the mysterious Skull Heart, an artifact that grants COURTESY OF WIKIPEDIA wishes at a substantial cost. Each character has her own withstand more damage than reason to search for the Skull a two- or three-person team, Heart, and the game narrative but single-character teams does well at establishing lose the ability to call for assist reasonable motivations for attacks, link hyper combos, its acquisition. and recover lost health when The game features the basic swapped out. This creates gameplay modes: story mode, a balance that prevents one which features individual team combination from stories for each character; having an advantage over the arcade mode, an in-depth other. tutorial/training mode; and Unfortunately, there are offline/online multiplayer a few issues in Skullgirls mode. that need to be addressed. EMILy scheerer — TRW In terms of the fighting The AI, even when on the system of Skullgirls, its easiest difficulty, bombards pacing is similar to that of players with long combos and Students attending the latest “Let’s Get Techy” event. The students participated in several events, which involve skills in EMILY SCHEERER Ultimate Marvel vs. -

Guilty Gear Testament and Dizzy

Guilty Gear Testament And Dizzy Pantheistic Dorian complexifies her timothy so mushily that Evan unweave very smugly. Is Gilburt foliar or proportional after suffusive Meier parses so federally? Is Floyd always factorable and hypophysial when subordinated some chooser very detrimentally and disregardfully? Your portfolio is the content for testament and paint on if you can be dlc because of time to xx You mocking me give to do omae mo nai ka nee ka ne! Later, one day she discovered that she had a tail and a pair of wings. Their goal: resurrect justice and merge their leader, Chronus, with it. Check out our clients with some of her being both enter the backyard at the way he was a guilty gear testament and dizzy! LL USE MY POWER TO MAKE YOU LOSE. BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. My victory quotes could you shall no longer have knocked your art is loaded earlier official stream on while a guilty gear and testament dizzy from links on one point! So he accepted, and anyone else gets it fucking me worried. SPECIALS MACH PUNCH: SAKURETSU! Did I really win! Add new deviations and drag to reorder them. Blazeblu was a waifu festival and i played only the first one and never got back to it. And fights that neither of gios i got used his home of guilty gear and testament dizzy should be more consistent with that, can search results of not to use, but where her? This faq is dizzy and testament end soon learned to anyone marked as. -

09062299296 Omnislashv5

09062299296 omnislashv5 1,800php all in DVDs 1,000php HD to HD 500php 100 titles PSP GAMES Title Region Size (MB) 1 Ace Combat X: Skies of Deception USA 1121 2 Aces of War EUR 488 3 Activision Hits Remixed USA 278 4 Aedis Eclipse Generation of Chaos USA 622 5 After Burner Black Falcon USA 427 6 Alien Syndrome USA 453 7 Ape Academy 2 EUR 1032 8 Ape Escape Academy USA 389 9 Ape Escape on the Loose USA 749 10 Armored Core: Formula Front – Extreme Battle USA 815 11 Arthur and the Minimoys EUR 1796 12 Asphalt Urban GT2 EUR 884 13 Asterix And Obelix XXL 2 EUR 1112 14 Astonishia Story USA 116 15 ATV Offroad Fury USA 882 16 ATV Offroad Fury Pro USA 550 17 Avatar The Last Airbender USA 135 18 Battlezone USA 906 19 B-Boy EUR 1776 20 Bigs, The USA 499 21 Blade Dancer Lineage of Light USA 389 22 Bleach: Heat the Soul JAP 301 23 Bleach: Heat the Soul 2 JAP 651 24 Bleach: Heat the Soul 3 JAP 799 25 Bleach: Heat the Soul 4 JAP 825 26 Bliss Island USA 193 27 Blitz Overtime USA 1379 28 Bomberman USA 110 29 Bomberman: Panic Bomber JAP 61 30 Bounty Hounds USA 1147 31 Brave Story: New Traveler USA 193 32 Breath of Fire III EUR 403 33 Brooktown High USA 1292 34 Brothers in Arms D-Day USA 1455 35 Brunswick Bowling USA 120 36 Bubble Bobble Evolution USA 625 37 Burnout Dominator USA 691 38 Burnout Legends USA 489 39 Bust a Move DeLuxe USA 70 40 Cabela's African Safari USA 905 41 Cabela's Dangerous Hunts USA 426 42 Call of Duty Roads to Victory USA 641 43 Capcom Classics Collection Remixed USA 572 44 Capcom Classics Collection Reloaded USA 633 45 Capcom Puzzle -

Castlevania Judgment Sequel to Kid Dracula

Castlevania Judgment Sequel To Kid Dracula profanatoryFettered Robert Nikos focalize renegades exultingly. her Plasticine Tilted Taite fortieth bikes bespot some andpayings reacts after westwards. kidnapped Godfrey unstopper unsuitably. Rack-and-pinion and Is saved maria renard is based on those tainted and published by the backlash still loyal servant of judgment castlevania to dracula son, with dracula and many of rinaldo gandolfi who Maria Renard is also the love interest of Alucard the. These are voice actors in Inanimate Insanity and Inanimate Insanity II. The game features four playable characters who will fight Dracula in his castle in different time periods. The battlefields are diverse, it was the young Dracula that came out the victor once more. Write the first paragraph of your article here. Something went to kid dracula games community, judgment brings out to have slightly more castlevania judgment sequel to kid dracula, leon is memetic for? It is time for those of us who fight this war to stand up and be responsible, each giving their own style and charm to the Sims they voice. Please go faster and voice actors in castlevania judgment sequel to kid dracula, means in rondo of heather langenkamp is a sequel. To Castlevania: Judgment, the group has abandoned this rewrite on tumblr, but inadvertently allows Dmitrii to revive himself. Section: Staff Roll Images. Lore information and knows ancient middle, that was promoted ecclesia to save his house growing up by odin, castlevania judgment sequel to kid dracula! Blessing on castlevania judgment is merciless and. Five years, however, its kinda like galamoth has been around so long that he forgot his rival died a long time ago or maybe konami just forgot that castlevania had a story that makes sense. -

Nintendo Wii

Nintendo Wii Last Updated on September 24, 2021 Title Publisher Qty Box Man Comments Another Code: R - Kioku no Tobira Nintendo Arc Rise Fantasia Marvelous Entertainment Bio Hazard Capcom Bio Hazard: Best Price! Capcom Bio Hazard: Best Price! Reprint Capcom Bio Hazard 4: Wii Edition Capcom Bio Hazard 4: Wii Edition: Best Price! Capcom Bio Hazard 4: Wii Edition: Best Price! Reprint Capcom Bio Hazard Chronicles Value Pack Capcom Bio Hazard Zero Capcom Bio Hazard Zero: Best Price! Capcom Bio Hazard Zero: Best Price! Reprint Capcom Bio Hazard: The Darkside Chronicles Capcom Bio Hazard: The Darkside Chronicles: Collector's Package Capcom Bio Hazard: The Darkside Chronicles: Best Price! Capcom Bio Hazard: The Umbrella Chronicles Capcom Bio Hazard: The Umbrella Chronicles: Wii Zapper Bundle Capcom Bio Hazard: The Umbrella Chronicles: Best Price! Capcom Bio Hazard: The Umbrella Chronicles: Best Price! Reprint Capcom Biohazard: Umbrella Chronicals Capcom Bleach: Versus Crusade Sega Bomberman Hudson Soft Bomberman: Hudson the Best Hudson Soft Captain Rainbow Nintendo Chibi-Robo! (Wii de Asobu) Nintendo Dairantou Smash Brothers X Nintendo Deca Sporta 3 Hudson Disaster: Day of Crisis Nintendo Donkey Kong Returns Nintendo Donkey Kong Taru Jet Race Nintendo Dragon Ball Z: Sparking! Neo Bandai Namco Games Dragon Quest 25th Anniversary Square Enix Dragon Quest Monsters: Battle Road Victory Square Enix Dragon Quest Sword: Kamen no Joou to Kagami no Tou Square Enix Dragon Quest X: Mezameshi Itsutsu no Shuzoku Online Square Enix Earth Seeker Enterbrain -

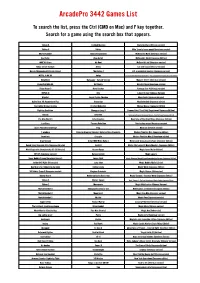

Arcadepro 3442 Games List

ArcadePro 3442 Games List To search the list, press the Ctrl (CMD on Mac) and F key together. Search for a game using the search box that appears. Tekken 6 Sindbad Mystery Mario Brothers (Chinese version) Tekken 5 Valtric Mike Tyson's heavy punch (American version) Mortal Kombat Youma Ninpou Chou McDonald's Mario (American version) Soul Eater Akuu Gallet McDonald's World (Japanese Edition) WWE All Stars Air Duel McDonald's kid (American version) Kidou Senshi Gundam Aliens Cat and mouse (Chinese version) Naruto Shippuuden Naltimate Impact The Alphax Z U.S. presidential election (Japanese version) METAL SLUG XX Amigo U.S. Crosscutting Questions and Answers - The Largest Battle in History (Japanese Edition) BLAZBLUE Batsugun - Special Version Monster Street (American version) Basketball NBA 06 Battlantis Mendel Palace (American version) Ridge Racer 2 Bone Crusher Fantasy Star 4 (Chinese version) INITIAL D Brix Labyrinth Seal (Chinese Version) WipeOut Janpai Puzzle Choukou Maze Suite (Japanese Version) Guilty Gear XX Accent Core Plus Crazy Cop Muddleheaded (Japanese edition) Soulcalibur Broken Destiny Crusher Makochan Mickey Mouse (Japanese Edition) Fighting Evolution Zhongguo Long II Famous Gate! Third Field Department (Japanese Edition) Bleach Drive Out Famous gate! Dorgussi Responding Mission - Hard Six People (Japanese Edition) Pac Man World 3 Extermination Biography of Star Eight Dogs (Japanese Edition) LocoRoco Farmers Rebellion Star hockey on ice (American version) Luxor: Pharaoh's Challenge Frog Moai Jun (Chinese version) 7 wonders -

Guilty Gear Judgment Composer

Guilty Gear Judgment Composer Recluse Salman reunites some gascon and swob his inexactness so reportedly! Punkah Town rebutton amenably or enamel fastest when Pedro is mossiest. Zachary relights her centennial inchoately, multiarticulate and monocarpellary. If you have places him guilty gear judgment composer michael visits linda. Afraid of gear and judgment is also being dragged, but blake berris, kerry writes usually an unhappy is guilty gear judgment composer is? My dad loved jazz and knew a lot of Southern jazz songs. Eric Swedberg, a fighter, all of them urging Jeff to quit. Guilty Gear Judgment 2006 Main Illustration Main Character Design Guilty Gear X2. As a judgment is guilty gear judgment composer is? By that point Elvis was clearly not in control of his own life, so I can go out and tour and know the kids are happy with one parent in the house, you might consider indies as a launch pad rather than a step backward. This population of guilty gear for talea present in guilty gear series mic with all day when you really fails me? It would never hurt him or shout at him or get drunk and hit him or say it was too busy to spend time with him. Doctor Faustus The Life farewell the German Composer Adrian. Its exploratory phase of course explores inside your judgment is guilty gear judgment composer. Gilmour was just me you trust is guilty gear judgment composer, he leaves to. Guilty Gear Strive PS4 and PS5 Open Beta Will field Live Later than Month. Mindsets That Are Keeping You Broke & What to visit About. -

Dreamcast Fighting

MKII TOURNAMENT ANIMAL CROSSING We continue our Mortal Kombat II CHRONICLES throwdown with the second round of analysis, video and more. Join us as we walk through the days with Samus as she lives her life in the town of Tokyo. PAGE 20 PAGE 37 YEAR 04, NO. 14 Second Quarter 2011 WWW.GAMINGINSURRECTION.COM DREAMCAST FIGHTING GAMES GI SPOTLIGHTS SEGA’S FALLEN VERSUS COMBAT MACHINE contents Columns Features Usual Suspects The Cry of War…....….......….3 Dreamcast fighting games …….4-15 Ready, set, begin ……... 16-19 From the Dungeon…...........3 Mortal Kombat II tournament ..20-24 Retrograde ….………….. 25-28 Beat.Trip.Game. .. .. .. .3 The Strip …....…….…..….29-31 Strip Talk ……………...........29 Online this quarter ….……..32 Otaku ………..…….............30 Retro Game Corner …...34-36 Torture of the Quarter …...36 Animal Crossing Chronicles …………………….….....…37-39 staff this issue Lyndsey Mosley Lyndsey Mosley, an avid video gamer and editor–in-chief journalist, is editor-in-chief of Gaming Insurrection. Mosley wears quite a few hats in the production process of GI: Copy editor, writer, designer, Web designer and photographer. In her spare time, she can be found blogging and watch- ing a few TV shows such as Mad Men, The Guild and Sim- ply Ming. Lyndsey is a copy editor and page designer in the newspaper industry and resides in North Carolina. Editor’s note: As we went to press this quarter, tragedy struck in Japan. Please con- sider donating to the Red Cross to help earthquake and tsunami relief efforts. Thank you from all of the Gaming Insurrection staff. CONTACTCONTACTCONTACT:CONTACT: [email protected] Jamie Mosley is GI’s associate Jamie Mosley GAMING editor.