Improving Input Prediction in Online Fighting Games

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The Development and Validation of the Game User Experience Satisfaction Scale (GUESS)

THE DEVELOPMENT AND VALIDATION OF THE GAME USER EXPERIENCE SATISFACTION SCALE (GUESS). A Dissertation by Mikki Hoang Phan. Master of Arts, Wichita State University, 2012. Bachelor of Arts, Wichita State University, 2008. Submitted to the Department of Psychology and the faculty of the Graduate School of Wichita State University in partial fulfillment of the requirements for the degree of Doctor of Philosophy, May 2015. © Copyright 2015 by Mikki Phan. All Rights Reserved.

The following faculty members have examined the final copy of this dissertation for form and content, and recommend that it be accepted in partial fulfillment of the requirements for the degree of Doctor of Philosophy with a major in Psychology: Barbara S. Chaparro, Committee Chair; Joseph Keebler, Committee Member; Jibo He, Committee Member; Darwin Dorr, Committee Member; Jodie Hertzog, Committee Member. Accepted for the College of Liberal Arts and Sciences: Ronald Matson, Dean. Accepted for the Graduate School: Abu S. Masud, Interim Dean.

DEDICATION: To my parents for their love and support, and all that they have sacrificed so that my siblings and I can have a better future.

"Video games open worlds." (Jon-Paul Dyson)

ACKNOWLEDGEMENTS: Althea Gibson once said, “No matter what accomplishments you make, somebody helped you.” Thus, completing this long and winding Ph.D. journey would not have been possible without a village of support and help. While words could not adequately sum up how thankful I am, I would like to start off by thanking my dissertation chair and advisor, Dr. -

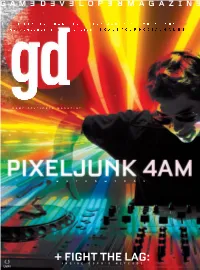

Game Developers Agree: Lag Kills Online Multiplayer, Especially When You’re Trying to Make Products That Rely on Timing-Based Skill, Such as Fighting Games

THE LEADING GAME INDUSTRY MAGAZINE. VOLUME 19, NUMBER 9, SEPTEMBER 2012. INSIDE: SCALE YOUR SOCIAL GAMES. CONTENTS.0912

Postmortem: PIXELJUNK 4AM. How do you invent a new musical instrument? PIXELJUNK 4AM turned PS3s everywhere into music-making machines and let players stream their performances worldwide. In this postmortem, lead designer Rowan Parker walks us through the ups (Move controls, online streaming), the downs (lack of early direction, game/instrument duality), and why you need to have guts when you’re reinventing interactive music. By Rowan Parker

Features: FIGHT THE LAG! Nine out of ten game developers agree: Lag kills online multiplayer, especially when you’re trying to make products that rely on timing-based skill, such as fighting games. Fighting game community organizer Tony Cannon explains how he built his GGPO netcode to “hide” network latency and make online multiplayer appetizing for even the most picky players. By Tony Cannon

SCALE YOUR ONLINE GAME. Mobile and social games typically rely on a robust server-side backend, and when your game goes viral, a properly-architected backend is the difference between scaling gracefully and being DDOSed by your own players. Here’s how to avoid being a victim of your own success without blowing up your server bill. By Joel Poloney

LEVEL UP YOUR STUDIO. Fix your studio’s weakest facet, and it will contribute more to your studio’s overall success than its strongest facet. Production consultant Keith Fuller explains why it’s so important to find and address your studio’s weaknesses in the results of his latest game production survey. -

The Impact of the Coronavirus Pandemic on Electronic Sports (Koronaviruspandemian vaikutus elektroniseen urheiluun)

The Impact of the Coronavirus Pandemic on Electronic Sports. Rami Waly and Tuomo Tammela, 2021. Laurea University of Applied Sciences (Laurea-ammattikorkeakoulu). Business Information Technology, Bachelor's thesis, April 2021.

Abstract. Degree programme in Business Information Technology, Bachelor of Business Administration (Tradenomi, AMK). Rami Waly, Tuomo Tammela. The Impact of the Coronavirus Pandemic on Electronic Sports. Year: 2021. Number of pages: 48.

The aim of this thesis is to determine how the coronavirus pandemic has affected electronic sports. The thesis reviews the measures that esports organizations and event operators have taken to move their activities from LAN play to online play. It also shows how the running of esports events has been safeguarded during the pandemic. The thesis additionally examined the different genres of competitive video games and esports organizations.

As demand for esports and the competitive spirit of teams grow, followers are left wondering how the effects of the pandemic will change esports in the coming years. Esports events have generally been held on site, but tournaments have also been held online before. The current coronavirus pandemic forced all parties involved in esports to adapt quickly to the new situation and to reassess all possible safety measures for the sake of spectators, players and staff. The thesis also examines latency -

Game Interface Design for the Elderly

Game Interface Design for the Elderly. Jens Cherukad. Master's thesis (Masterarbeit) submitted to the University of Applied Sciences master's programme Universal Computing in Hagenberg, January 2018.

© Copyright 2018 Jens Cherukad. This work is published under the conditions of the Creative Commons License Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0), see https://creativecommons.org/licenses/by-nc-nd/4.0/.

Declaration: I hereby declare and confirm that this thesis is entirely the result of my own original work. Where other sources of information have been used, they have been indicated as such and properly acknowledged. I further declare that this or similar work has not been submitted for credit elsewhere. Hagenberg, January 25, 2018. Jens Cherukad

Contents
Declaration
Abstract
Kurzfassung
1 Introduction
  1.1 Defining the Elderly and their Needs
  1.2 Structure
2 Concepts of Interface Design
  2.1 Feedback
  2.2 Control
    2.2.1 Computers
    2.2.2 Consoles
    2.2.3 Mobile
    2.2.4 Virtual Reality
  2.3 Supporting Gameplay
    2.3.1 Classification of Elements
    2.3.2 Gameplay Genres
3 Interface Design for Elderly People
  3.1 Heuristics and Studies for Interface Design for Elderly
    3.1.1 The ALTAC-Project
    3.1.2 Touch based User Interfaces
    3.1.3 Tangible Interfaces
  3.2 Analysis of Game-Interfaces for Elderly
    3.2.1 Tangible Gaming
  3.3 Outcome of the Research
    3.3.1 Output
    3.3.2 Input -

The Art of War: Fighting Games, Performativity, and Social Game Play

The Art of War: Fighting Games, Performativity, and Social Game Play. A dissertation presented to the faculty of the Scripps College of Communication of Ohio University in partial fulfillment of the requirements for the degree Doctor of Philosophy. Todd L. Harper, November 2010. © 2010 Todd L. Harper. All Rights Reserved.

This dissertation titled The Art of War: Fighting Games, Performativity, and Social Game Play by TODD L. HARPER has been approved for the School of Media Arts and Studies and the Scripps College of Communication by Mia L. Consalvo, Associate Professor of Media Arts and Studies, and Gregory J. Shepherd, Dean, Scripps College of Communication.

ABSTRACT. HARPER, TODD L., Ph.D., November 2010, Mass Communications. The Art of War: Fighting Games, Performativity, and Social Game Play (244 pp.). Director of Dissertation: Mia L. Consalvo.

This dissertation draws on feminist theory – specifically, performance and performativity – to explore how digital game players construct the game experience and social play. Scholarship in game studies has established the formal aspects of a game as being a combination of its rules and the fiction or narrative that contextualizes those rules. The question remains, how do the ways people play games influence what makes up a game, and how those players understand themselves as players and as social actors through the gaming experience? Taking a qualitative approach, this study explored players of fighting games: competitive games of one-on-one combat. Specifically, it combined observations at the Evolution fighting game tournament in July, 2009 and in-depth interviews with fighting game enthusiasts. In addition, three groups of college students with varying histories and experiences with games were observed playing both competitive and cooperative games together. -

Regain Control, in the Supernatural Action-Adventure Game from Remedy Entertainment and 505 Games, Coming in 2019

REGAIN CONTROL, IN THE SUPERNATURAL ACTION-ADVENTURE GAME FROM REMEDY ENTERTAINMENT AND 505 GAMES, COMING IN 2019. World Premiere Trailer for Remedy’s “Most Ambitious Game Yet” Revealed at Sony E3 Conference Showcases Complex Sandbox-Style World.

CALABASAS, Calif. – June 11, 2018 – Internationally renowned developer Remedy Entertainment, Plc., along with its publishing partner 505 Games, have unveiled their highly anticipated game, previously known only by its codename, “P7.” From the creators of Max Payne and Alan Wake comes Control, a third-person action-adventure game combining Remedy’s trademark gunplay with supernatural abilities. Revealed for the first time at the official Sony PlayStation E3 media briefing in the worldwide exclusive debut of the first trailer, Control is set in a unique and ever-changing world that juxtaposes our familiar reality with the strange and unexplainable. Welcome to the Federal Bureau of Control: https://youtu.be/8ZrV2n9oHb4

After a secretive agency in New York is invaded by an otherworldly threat, players will take on the role of Jesse Faden, the new Director struggling to regain Control. This sandbox-style, gameplay-driven experience built on the proprietary Northlight engine challenges players to master a combination of supernatural abilities, modifiable loadouts and reactive environments while fighting through the deep and mysterious worlds Remedy is known and loved for.

“Control represents a new exciting chapter for us, it redefines what a Remedy game is. It shows off our unique ability to build compelling worlds while providing a new player-driven way to experience them,” said Mikael Kasurinen, game director of Control. “A key focus for Remedy has been to provide more agency through gameplay and allow our audience to experience the story of the world at their own pace.”

“From our first meetings with Remedy we’ve been inspired by the vision and scope of Control, and we are proud to help them bring this game to life and get it into the hands of players,” said Neil Ralley, president of 505 Games. -

Application of Retrograde Analysis to Fighting Games

University of Denver, Digital Commons @ DU. Electronic Theses and Dissertations, Graduate Studies, 1-1-2019. Application of Retrograde Analysis to Fighting Games. Kristen Yu, University of Denver. Follow this and additional works at: https://digitalcommons.du.edu/etd. Part of the Artificial Intelligence and Robotics Commons, and the Game Design Commons.

Recommended Citation: Yu, Kristen, "Application of Retrograde Analysis to Fighting Games" (2019). Electronic Theses and Dissertations. 1633. https://digitalcommons.du.edu/etd/1633

This Thesis is brought to you for free and open access by the Graduate Studies at Digital Commons @ DU. It has been accepted for inclusion in Electronic Theses and Dissertations by an authorized administrator of Digital Commons @ DU. For more information, please contact [email protected],[email protected].

Application of Retrograde Analysis to Fighting Games. A Thesis Presented to the Faculty of the Daniel Felix Ritchie School of Engineering and Computer Science, University of Denver, in Partial Fulfillment of the Requirements for the Degree Master of Science, by Kristen Yu, June 2019. Advisor: Nathan Sturtevant. ©Copyright by Kristen Yu 2019. All Rights Reserved.

Author: Kristen Yu. Title: Application of Retrograde Analysis to Fighting Games. Advisor: Nathan Sturtevant. Degree Date: June 2019.

Abstract. With the advent of the fighting game AI competition [34], there has been recent interest in two-player fighting games. Monte-Carlo Tree-Search approaches currently dominate the competition, but it is unclear if this is the best approach for all fighting games. In this thesis we study the design of two-player fighting games and the consequences of the game design on the types of AI that should be used for playing the game, as well as formally define the state space that fighting games are based on. -

Skullgirls: 2D Fighting at Its Finest

The Retriever Weekly, Tuesday, April 24, 2012, page 15. Technology.

Skullgirls: 2D fighting at its finest. BY COURTNEY PERDUE, Contributing Writer

The past several months have seen the release of many great fighting games. Skullgirls, an all-female 2D fighting game developed by Reverge Labs, is the newest addition to this long list of great fighters. Skullgirls, which features a cast of female fighters, is set in the world of Canopy Kingdom. The characters fight for the opportunity to control the mysterious Skull Heart, an artifact that grants wishes at a substantial cost. Each character has her own reason to search for the Skull Heart, and the game narrative does well at establishing reasonable motivations for its acquisition.

The game features the basic gameplay modes: story mode, which features individual stories for each character; arcade mode, an in-depth tutorial/training mode; and offline/online multiplayer mode. In terms of the fighting system of Skullgirls, its pacing is similar to that of Ultimate Marvel vs. [...]

[...] withstand more damage than a two- or three-person team, but single-character teams lose the ability to call for assist attacks, link hyper combos, and recover lost health when swapped out. This creates a balance that prevents one team combination from having an advantage over the other.

Unfortunately, there are a few issues in Skullgirls that need to be addressed. The AI, even when on the easiest difficulty, bombards players with long combos and -

On the same page: Middle school students learn how to “get techy”. Photo caption: Students attending the latest “Let’s Get Techy” event. The students participated in several events, which involve skills in [...] (Photo: Emily Scheerer, TRW)

Black Characters in the Street Fighter Series | TheZonegamer

Black characters in the Street Fighter series | TheZonegamer. Tuesday, 16 February 2016.

Capcom's first Street Fighter game, which was created by Takashi Nishiyama and Hiroshi Matsumoto, made its way into arcades around late August of 1987. It would be the first game in which Capcom's golden boy Ryu would make his debut, but he wouldn't be the only Street Fighter.

The story was centred around a martial arts tournament featuring fighters from all over the world (five countries to be exact) and had 10 contenders in total, all of whom were non-playable. Each character had unique and authentic fighting styles but, in typical fashion, the game's first black character was an African American heavyweight boxer. -

The Authentic EGO Arcade Stick (GAPCCAINBL00)

The Authentic EGO Arcade Stick (GAPCCAINBL00). Reorder Number: GAPCCAINBL00. Pack Size: 1 ea. Brand: Mad Catz.

Bring the arcade to you with the Authentic EGO Arcade Stick. Featuring Sanwa Denshi components, this arcade stick is tournament ready, delivering reliable arcade grade timing and performance. The ball-top 8-way joystick makes fighting a breeze and can be easily configured to replicate the left or right thumbstick or D-pad on a traditional controller. And 8 customizable action buttons in a Vewlix layout make this fight stick look and feel like a classic but with modern performance. This arcade stick features multiple additional buttons for even more customization and flexibility, including a home button, share view button, L3/R3 buttons for custom commands and a format switch to easily change button configurations. Designed to reduce fatigue, the Turbo function adds rapid fire to an action button, letting you press the button once and register 10 rapid-fire reactions. With a mod-friendly design, you can easily swap out the top panel decal, internal components and bottom to customize this fight stick to fit your needs. And with a strong metal base and non-slip foam, this arcade stick is built to last, whether you’re playing in tournaments or with your friends. Universally compatible with the PS4™, Xbox One, Nintendo Switch™ and PC, the EGO Arcade Stick is ready to play.

Arcade fighting stick with genuine arcade feel – ideal for fighting games
Ball-top 8-way joystick – easily configure to replicate left or right thumbstick or D-pad -

Revised BOP-Publication 24H DUBAI 2021

Balance of Performance Publication, Revision 1. Date: 11.01.2020. HANKOOK 24H DUBAI 2021. To Sporting & Technical Regulations 24H SERIES powered by Hankook 2021 (Version 26 November 2020 with KNAF permit No.: 0314.21.002).

NOTE: This Balance of Performance Publication is valid with immediate effect and replaces the previous publication dated 07.12.2020. With this Balance of Performance publication, the refuelling flow restrictor plate is introduced. This is a mandatory BOP measure that must be implemented by all cars. Each car receives a sticker indicating the restrictor plate colour to be used. This sticker will be placed by the scrutineers above the fuel inlet. The following flow rate applies to each colour:
- BLUE: 100%
- YELLOW: 95%
- RED: 92,5%
On each refuelling nozzle, a set of restrictors is attached. The correct coloured restrictor must be inserted into the nozzle handle before refuelling.

Dear Teams and Drivers, these Balance of Performance and other figures are in force with immediate application and replace the figures of appendix 11 of the Sporting & Technical regulations and any previously published BOP publications.

Notes on boost control: Control of Pboost strategy as per document attached (Appendix: Control of Pboost strategy is updated), for all cars of which Pboost max is specified, unless explicitly specified otherwise. Approved: Creventic

Class TC: Touring Cars Max Cylinder Minimum FUEL Brand & Type Turbo Refuelling Remarks capacity Weight FLOW amount According -

Company Profile President's Message

COMPANY PROFILE

A leading company in the amusement industry, Capcom develops, publishes and distributes a variety of software games for both arcade machines and home video consoles. We also operate amusement facilities at 47 locations in Japan. Since the foundation of the company in May 1979, we have taken a leading role in the entertainment software industry and have continued to respond to the demand and expectations of customers. In March 1991, we achieved the top position in the arcade game industry with the [...]

[...] have succeeded in producing one hit after another, and the release of Resident Evil in March 1996 established a new genre, "Survival Horror", which is unrivaled by our competitors. Popular all over the world, the outstanding Resident Evil series has contributed enormously to Capcom's growth. In addition to our existing overseas subsidiaries in the United States and Asia, we established Capcom Eurosoft in London, England in July 1998, as a main sales base for the European home video game market.

PRESIDENT’S MESSAGE

[...] advertising and incentive-based consumer promotions such as our innovative Fighters Edge program.

With the new millennium on the horizon, the business world is on the brink of change. We are entering an age where consumers will have the power of choice. Bidding farewell to mass consumption, we will see the development of an information-centered society which contributes to a higher level of customer satisfaction as well as increased environmental awareness. And in the years to come, the world will continue to change at a faster rate than ever.