Symposium on Telescope Science



Recommended publications


Asteroid Regolith Weathering: a Large-Scale Observational Investigation

University of Tennessee, Knoxville TRACE: Tennessee Research and Creative Exchange Doctoral Dissertations Graduate School 5-2019 Asteroid Regolith Weathering: A Large-Scale Observational Investigation Eric Michael MacLennan University of Tennessee, [email protected] Follow this and additional works at: https://trace.tennessee.edu/utk_graddiss Recommended Citation MacLennan, Eric Michael, "Asteroid Regolith Weathering: A Large-Scale Observational Investigation." PhD diss., University of Tennessee, 2019. https://trace.tennessee.edu/utk_graddiss/5467 This Dissertation is brought to you for free and open access by the Graduate School at TRACE: Tennessee Research and Creative Exchange. It has been accepted for inclusion in Doctoral Dissertations by an authorized administrator of TRACE: Tennessee Research and Creative Exchange. For more information, please contact [email protected]. To the Graduate Council: I am submitting herewith a dissertation written by Eric Michael MacLennan entitled "Asteroid Regolith Weathering: A Large-Scale Observational Investigation." I have examined the final electronic copy of this dissertation for form and content and recommend that it be accepted in partial fulfillment of the requirements for the degree of Doctor of Philosophy, with a major in Geology. Joshua P. Emery, Major Professor We have read this dissertation and recommend its acceptance: Jeffrey E. Moersch, Harry Y. McSween Jr., Liem T. Tran Accepted for the Council: Dixie L. Thompson Vice Provost and Dean of the Graduate School (Original signatures are on file with official student records.) Asteroid Regolith Weathering: A Large-Scale Observational Investigation A Dissertation Presented for the Doctor of Philosophy Degree The University of Tennessee, Knoxville Eric Michael MacLennan May 2019 © by Eric Michael MacLennan, 2019 All Rights Reserved.

Aqueous Alteration on Main Belt Primitive Asteroids: Results from Visible Spectroscopy

Aqueous alteration on main belt primitive asteroids: results from visible spectroscopy1 S. Fornasier1,2, C. Lantz1,2, M.A. Barucci1, M. Lazzarin3 1 LESIA, Observatoire de Paris, CNRS, UPMC Univ Paris 06, Univ. Paris Diderot, 5 Place J. Janssen, 92195 Meudon Principal Cedex, France 2 Univ. Paris Diderot, Sorbonne Paris Cité, 4 rue Elsa Morante, 75205 Paris Cedex 13 3 Department of Physics and Astronomy of the University of Padova, Via Marzolo 8 35131 Padova, Italy Submitted to Icarus: November 2013, accepted on 28 January 2014 e-mail: [email protected]; fax: +33145077144; phone: +33145077746 Manuscript pages: 38; Figures: 13 ; Tables: 5 Running head: Aqueous alteration on primitive asteroids Send correspondence to: Sonia Fornasier LESIA-Observatoire de Paris arXiv:1402.0175v1 [astro-ph.EP] 2 Feb 2014 Batiment 17 5, Place Jules Janssen 92195 Meudon Cedex France e-mail: [email protected] 1Based on observations carried out at the European Southern Observatory (ESO), La Silla, Chile, ESO proposals 062.S-0173 and 064.S-0205 (PI M. Lazzarin) Preprint submitted to Elsevier September 27, 2018 fax: +33145077144 phone: +33145077746 2 Aqueous alteration on main belt primitive asteroids: results from visible spectroscopy1 S. Fornasier1,2, C. Lantz1,2, M.A. Barucci1, M. Lazzarin3 Abstract This work focuses on the study of the aqueous alteration process which acted in the main belt and produced hydrated minerals on the altered asteroids. Hydrated minerals have been found mainly on the surface of Mars, on main belt primitive asteroids and possibly also on a few TNOs. These materials have been produced by hydration of pristine anhydrous silicates during the aqueous alteration process, which, to be active, needed the presence of liquid water under low temperature conditions (below 320 K) to chemically alter the minerals.

The Minor Planet Bulletin 37 (2010) 45 Classification for 244 Sita

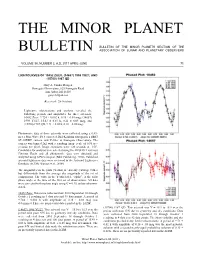

THE MINOR PLANET BULLETIN: BULLETIN OF THE MINOR PLANETS SECTION OF THE ASSOCIATION OF LUNAR AND PLANETARY OBSERVERS. VOLUME 37, NUMBER 2, A.D. 2010 APRIL-JUNE 41. LIGHTCURVE AND PHASE CURVE OF 1130 SKULD. Robert K. Buchheim, Altimira Observatory, 18 Altimira, Coto de Caza, CA 92679 (USA), [email protected] (Received: 29 December) The lightcurve period of asteroid 1130 Skuld is confirmed to be P = 4.807 ± 0.002 h. Its phase curve is well-matched by a slope parameter G = 0.25 ± 0.01. The 2009 October-November apparition of asteroid 1130 Skuld presented an excellent opportunity to measure its phase curve to very small solar phase angles. I devoted 13 nights over a two-month period to gathering photometric data on the object, over which time the solar phase angle ranged from α = 0.3 deg to α = 17.6 deg. All observations used Altimira Observatory’s 0.28-m Schmidt-Cassegrain telescope (SCT) working at f/6.3, SBIG ST-8XE NABG CCD camera, and photometric V- and R-band filters. Exposure durations were 3 or 4 minutes with the SNR > 100 in all images, which were reduced with flat and dark frames. ... Robinson (2009) from his data taken in 2002. There is no evidence of any change of (V-R) color with asteroid rotation. As a result of the relatively short period of this lightcurve, every night provided at least one minimum and maximum of the lightcurve. The phase curve was determined by polling both the maximum and minimum points of each night’s lightcurve. Since
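The Skuld excerpt above quotes a slope parameter G and a phase-angle range of 0.3 to 17.6 degrees. As a rough illustration of what G controls, here is a minimal sketch of the H-G phase relation. It assumes the commonly used two-term exponential approximation to the phase functions; the constants and the function names `hg_phase_dimming` and `reduced_magnitude` are illustrative assumptions and do not come from the excerpt.

```python
import math

# Constants of the two-term exponential approximation to the H-G phase
# functions (assumed here; the excerpt does not give them).
_A1, _B1 = 3.33, 0.63
_A2, _B2 = 1.87, 1.22


def hg_phase_dimming(alpha_deg: float, g: float) -> float:
    """Fading in magnitudes at solar phase angle alpha, relative to alpha = 0."""
    t = math.tan(math.radians(alpha_deg) / 2.0)
    phi1 = math.exp(-_A1 * t ** _B1)
    phi2 = math.exp(-_A2 * t ** _B2)
    return -2.5 * math.log10((1.0 - g) * phi1 + g * phi2)


def reduced_magnitude(h: float, alpha_deg: float, g: float) -> float:
    """Reduced magnitude: absolute magnitude H plus the phase dimming."""
    return h + hg_phase_dimming(alpha_deg, g)


if __name__ == "__main__":
    # Phase-angle range and G quoted in the excerpt; H is not given there,
    # so only the dimming term is printed.
    for alpha in (0.3, 17.6):
        print(alpha, round(hg_phase_dimming(alpha, 0.25), 3))
```

In this approximation a larger G gives a shallower phase curve, so an object with G = 0.25 fades less between opposition and 17.6 degrees than one with the default G = 0.15.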

The Minor Planet Bulletin

THE MINOR PLANET BULLETIN: BULLETIN OF THE MINOR PLANETS SECTION OF THE ASSOCIATION OF LUNAR AND PLANETARY OBSERVERS. VOLUME 38, NUMBER 2, A.D. 2011 APRIL-JUNE 71. LIGHTCURVES OF 10452 ZUEV, (14657) 1998 YU27, AND (15700) 1987 QD. Gary A. Vander Haagen, Stonegate Observatory, 825 Stonegate Road, Ann Arbor, MI 48103, [email protected] (Received: 28 October) Lightcurve observations and analysis revealed the following periods and amplitudes for three asteroids: 10452 Zuev, 9.724 ± 0.002 h, 0.38 ± 0.03 mag; (14657) 1998 YU27, 15.43 ± 0.03 h, 0.21 ± 0.05 mag; and (15700) 1987 QD, 9.71 ± 0.02 h, 0.16 ± 0.05 mag. Photometric data of three asteroids were collected using a 0.43-meter PlaneWave f/6.8 corrected Dall-Kirkham astrograph, a SBIG ST-10XME camera, and V-filter at Stonegate Observatory. The camera was binned 2x2 with a resulting image scale of 0.95 arcseconds per pixel. Image exposures were 120 seconds at –15 °C. Candidates for analysis were selected using the MPO2011 Asteroid Viewing Guide and all photometric data were obtained and analyzed using MPO Canopus (Bdw Publishing, 2010). Published asteroid lightcurve data were reviewed in the Asteroid Lightcurve Database (LCDB; Warner et al., 2009). The magnitudes in the plots (Y-axis) are not sky (catalog) values but differentials from the average sky magnitude of the set of comparisons. The value in the Y-axis label, “alpha”, is the solar phase angle at the time of the first set of observations. All data were corrected to this phase angle using G = 0.15, unless otherwise stated.

RASNZ Occultation Section Circular CN2009/1 April 2013 NOTICES

ISSN 11765038 (Print), ISSN 23241853 (Online). RASNZ OCCULTATION SECTION CIRCULAR CN2009/1, April 2013. Lunar limb profile produced by Dave Herald's Occult program showing 63 events for the lunar graze of a bright, multiple star ZC2349 (aka Al Niyat, sigma Scorpii) on 31 July 2009 by two teams of observers from Wellington and Christchurch. The lunar profile is drawn using data from the Kaguya lunar surveyor, which became available after this event. The path the star followed across the lunar landscape is shown for one set of observers (Murray Forbes and Frank Andrews) by the trail of white circles. There are several instances where a stepped event was seen, due to the two brightest components disappearing or reappearing. See page 61 for more details. Visit the Occultation Section website at http://www.occultations.org.nz/ Newsletter of the Occultation Section of the Royal Astronomical Society of New Zealand. Table of Contents: From the Director (2); Notices (3); Seventh TransTasman Symposium on Occultations (3); Important Notice re Report File Naming (4); Observing Occultations using Video: A Beginner's Guide

The Minor Planet Bulletin, It Is a Pleasure to Announce the Appointment of Brian D

THE MINOR PLANET BULLETIN: BULLETIN OF THE MINOR PLANETS SECTION OF THE ASSOCIATION OF LUNAR AND PLANETARY OBSERVERS. VOLUME 33, NUMBER 1, A.D. 2006 JANUARY-MARCH 1. LIGHTCURVE AND ROTATION PERIOD DETERMINATION FOR MINOR PLANET 4006 SANDLER. Matthew T. Vonk, Daniel J. Kopchinski, Amanda R. Pittman, Stephen Taubel, Department of Physics, University of Wisconsin – River Falls, 410 South Third Street, River Falls, WI 54022, [email protected] (Received: 25 July) Minor planet 4006 Sandler was observed during January and February of 2005. The synodic period was measured and determined to be 3.40 ± 0.01 hours with an amplitude of 0.16 magnitude. Minor planet 4006 Sandler was discovered by the Russian astronomer Tamara Mikhailovna Smirnova in 1972 (Schmadel, 1999). It orbits the sun with an orbit that varies between 2.058 AU and 2.975 AU which locates it in the heart of the main asteroid belt. ... Observatory (Observatory code 926) near Nogales, Arizona. The observatory is located at an altitude of 1312 meters and features a 0.81 m F7 Ritchey-Chrétien telescope and a SITe 1024 x 1024 x 24 micron CCD. Observations were conducted on (UT dates) January 29, February 7, 8, 2005. A total of 37 unfiltered images with exposure times of 120 seconds were analyzed using Canopus. The lightcurve, shown in the figure below, indicates a period of 3.40 ± 0.01 hours and an amplitude of 0.16 magnitude. Acknowledgements: Thanks to Michael Schwartz and Paulo Halvorcem for their great work at Tenagra Observatory. References: Schmadel, L. D. (1999). Dictionary of Minor Planet Names. Springer: Berlin, Germany. 4th Edition. Warner, B. D. and Alan Harris, A. (2004) “Potential Lightcurve Targets 2005 January – March”, www.minorplanetobserver.com/astlc/targets_1q_2005.htm

The Minor Planet Bulletin 36, 188-190

THE MINOR PLANET BULLETIN: BULLETIN OF THE MINOR PLANETS SECTION OF THE ASSOCIATION OF LUNAR AND PLANETARY OBSERVERS. VOLUME 37, NUMBER 3, A.D. 2010 JULY-SEPTEMBER 81. ROTATION PERIOD AND H-G PARAMETERS DETERMINATION FOR 1700 ZVEZDARA: A COLLABORATIVE PHOTOMETRY PROJECT. Ronald E. Baker, Indian Hill Observatory (H75), PO Box 11, Chagrin Falls, OH 44022 USA, [email protected]; Vladimir Benishek, Belgrade Astronomical Observatory, Volgina 7, 11060 Belgrade 38 SERBIA; Frederick Pilcher, 4438 Organ Mesa Loop, Las Cruces, NM 88011 USA; David Higgins, Hunter Hill Observatory, 7 Mawalan Street, Ngunnawal ACT 2913 ... telescope (SCT) working at f/4 and an SBIG ST-8E CCD. Baker independently initiated observations on 2009 September 18 at Indian Hill Observatory using a 0.3-m SCT reduced to f/6.2 coupled with an SBIG ST-402ME CCD and Johnson V filter. Benishek from the Belgrade Astronomical Observatory joined the collaboration on 2009 September 24 employing a 0.4-m SCT operating at f/10 with an unguided SBIG ST-10 XME CCD. Pilcher at Organ Mesa Observatory carried out observations on 2009 September 30 over more than seven hours using a 0.35-m f/10 SCT and an unguided SBIG STL-1001E CCD. As a result of the collaborative effort, a total of 17 time series sessions was obtained from 2009 August 20 until October 19. All observations were unfiltered with the exception of those recorded on September 18. MPO Canopus software (BDW Publishing, 2009a), employing differential aperture photometry, was used by all authors for photometric data reduction. The period analysis was performed using the same program. The data were merged by adjusting instrumental magnitudes and overlapping characteristic features of the individual lightcurves.

The Minor Planet Bulletin

THE MINOR PLANET BULLETIN: BULLETIN OF THE MINOR PLANETS SECTION OF THE ASSOCIATION OF LUNAR AND PLANETARY OBSERVERS. VOLUME 41, NUMBER 4, A.D. 2014 OCTOBER-DECEMBER 203. LIGHTCURVE ANALYSIS FOR 4167 RIEMANN. Amy Zhao, Ashok Aggarwal, and Caroline Odden, Phillips Academy Observatory (I12), 180 Main Street, Andover, MA 01810 USA, [email protected] (Received: 10 June) Photometric observations of 4167 Riemann were made over six nights in 2014 April. A synodic period of P = 4.060 ± 0.001 hours was derived from the data. 4167 Riemann is a main-belt asteroid discovered in 1978 by L. V. Zhuraveya. Observations of the asteroid were conducted at the Phillips Academy Observatory, which is equipped with a 0.4-m f/8 reflecting telescope by DFM Engineering. Images were taken with an SBIG 1301-E CCD camera that has a 1280x1024 array of 16-micron pixels. The resulting image scale was 1.0 arcsecond per pixel. Exposures were 300 seconds and taken primarily at –35°C. All images were guided, unbinned, and unfiltered. Images were dark and flat-field corrected with Maxim DL. ... Period analysis was carried out by the authors using MPO Canopus and its Fourier analysis feature developed by Harris (Harris et al., 1989). The resulting lightcurve consists of 288 data points. The period spectrum strongly favors the bimodal solution. The resulting lightcurve has synodic period P = 4.060 ± 0.001 hours and amplitude 0.17 mag. Dips in the period spectrum were also noted at 8.1200 hours (2P) and at 6.0984 hours (3/2P). A search of the Asteroid Lightcurve Database (Warner et al., 2009) and other

The Planetary and Lunar Ephemeris DE 421

IPN Progress Report 42-178 • August 15, 2009 The Planetary and Lunar Ephemeris DE 421 William M. Folkner,* James G. Williams,† and Dale H. Boggs† The planetary and lunar ephemeris DE 421 represents updated estimates of the orbits of the Moon and planets. The lunar orbit is known to submeter accuracy through fitting lunar laser ranging data. The orbits of Venus, Earth, and Mars are known to subkilometer accuracy. Because of perturbations of the orbit of Mars by asteroids, frequent updates are needed to maintain the current accuracy into the future decade. Mercury’s orbit is determined to an accuracy of several kilometers by radar ranging. The orbits of Jupiter and Saturn are determined to accuracies of tens of kilometers as a result of spacecraft tracking and modern ground-based astrometry. The orbits of Uranus, Neptune, and Pluto are not as well determined. Reprocessing of historical observations is expected to lead to improvements in their orbits in the next several years. I. Introduction The planetary and lunar ephemeris DE 421 is a significant advance over earlier ephemerides. Compared with DE 418, released in July 2007, the DE 421 ephemeris includes additional data, especially range and very long baseline interferometry (VLBI) measurements of Mars spacecraft; range measurements to the European Space Agency’s Venus Express spacecraft; and use of current best estimates of planetary masses in the integration process. The lunar orbit is more robust due to an expanded set of lunar geophysical solution parameters, seven additional months of laser ranging data, and complete convergence.

The British Astronomical Association Handbook 2014

THE HANDBOOK OF THE BRITISH ASTRONOMICAL ASSOCIATION 2015. 2014 October. ISSN 0068–130–X. CONTENTS: CALENDAR 2015 (2); PREFACE (3); HIGHLIGHTS FOR 2015 (4); SKY DIARY (5); VISIBILITY OF PLANETS (6); RISING AND SETTING OF THE PLANETS IN LATITUDES 52°N AND 35°S (7-8); ECLIPSES (9-14); TIME (15-16); EARTH AND SUN (17-19); LUNAR LIBRATION (20); MOON (21); MOONRISE AND MOONSET (21-25); SUN’S SELENOGRAPHIC COLONGITUDE (26); LUNAR OCCULTATIONS (27-33); GRAZING LUNAR OCCULTATIONS (34-35); APPEARANCE OF PLANETS (36); MERCURY (37-38); VENUS (39); MARS (40-41); ASTEROIDS (42); ASTEROID EPHEMERIDES (43-47); ASTEROID OCCULTATIONS (48-50); NEO CLOSE APPROACHES TO EARTH (51); ASTEROIDS: FAVOURABLE OBSERVING OPPORTUNITIES (52-54); JUPITER (55-59); SATELLITES OF JUPITER (59-63); JUPITER ECLIPSES, OCCULTATIONS AND TRANSITS (64-73); SATURN (74-77); SATELLITES OF SATURN (78-81); URANUS (82); NEPTUNE (83); TRANS–NEPTUNIAN & SCATTERED DISK OBJECTS (84); DWARF PLANETS (85-88); COMETS (89-96); METEOR DIARY (97-99); VARIABLE STARS (RZ Cassiopeiae; Algol; λ Tauri) (100-101); MIRA STARS (102); VARIABLE STAR OF THE YEAR (V Bootis) (103-105); EPHEMERIDES OF DOUBLE STARS (106-107); BRIGHT STARS (108); ACTIVE GALAXIES (109); PLANETS – EXPLANATION OF TABLES (110); ELEMENTS OF PLANETARY ORBITS (111); ASTRONOMICAL AND PHYSICAL CONSTANTS (111-112); INTERNET RESOURCES (113-114); GREEK ALPHABET (115); ACKNOWLEDGEMENTS (116); ERRATA (116). Front Cover: The Moon at perigee and apogee, highlighting the clear size difference when the Moon is closest and farthest away from the Earth. Perigee on 2009/11/08 at 23:24UT, distance

The Minor Planet Bulletin Lost a Friend on Agreement with That Reported by Ivanova Et Al

THE MINOR PLANET BULLETIN: BULLETIN OF THE MINOR PLANETS SECTION OF THE ASSOCIATION OF LUNAR AND PLANETARY OBSERVERS. VOLUME 33, NUMBER 3, A.D. 2006 JULY-SEPTEMBER 49. LIGHTCURVE ANALYSIS FOR 19848 YEUNGCHUCHIU. Kwong W. Yeung, Desert Eagle Observatory, P.O. Box 105, Benson, AZ 85602, [email protected] (Received: 19 Feb) The lightcurve for asteroid 19848 Yeungchuchiu was measured using images taken in November 2005. The lightcurve was found to have a synodic period of 3.450 ± 0.002 h and amplitude of 0.70 ± 0.03 mag. Asteroid 19848 Yeungchuchiu was discovered in 2000 Oct. by the author at Desert Beaver Observatory, AZ, while it was about one degree away from Jupiter. It is named in honor of my father, Yeung Chu Chiu, who is a businessman in Hong Kong. I hoped to learn the art of photometry by studying the lightcurve of 19848 as my first solo project. Using a remote 0.46m f/2.8 reflector and Apogee AP9E CCD camera located in New Mexico Skies (MPC code H07), images of the asteroid were obtained on the nights of 2005 Nov. 20 and 21. Exposures were 240 seconds. ... The amplitude of 0.7 magnitude indicates that the long axis is about 2 times that of the shorter axis, as seen from the line of sight at that particular moment. Since both the maxima and minima have similar “height”, it’s likely that the rotational axis was almost perpendicular to the line of sight. Many amateurs may have the misconception that photometry is a very difficult science. After this learning exercise I found that, at
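The excerpt above infers an axis ratio of about 2 from a lightcurve amplitude of 0.7 magnitude. A minimal sketch of that arithmetic follows. It assumes a simple elongated ellipsoid viewed equator-on, with brightness proportional to projected area, so that the amplitude A satisfies A = 2.5 log10(a/b); the function name `min_axis_ratio` is hypothetical and the excerpt does not state which model its author used.

```python
def min_axis_ratio(amplitude_mag: float) -> float:
    """Lower bound on the long-to-short axis ratio a/b implied by an amplitude.

    Inverts A = 2.5 * log10(a / b), which holds for an equator-on ellipsoid
    whose brightness scales with projected area (an assumed model).
    """
    return 10.0 ** (0.4 * amplitude_mag)


if __name__ == "__main__":
    # Amplitude quoted in the excerpt for 19848 Yeungchuchiu.
    print(round(min_axis_ratio(0.70), 2))
```

For the quoted 0.70 mag this gives a ratio of roughly 1.9, in line with the "about 2 times" in the excerpt. Any other viewing geometry reduces the observed amplitude, so the value is best read as a lower limit on the true elongation.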

Cumulative Index to Volumes 1-45

The Minor Planet Bulletin Cumulative Index. Table of Contents: Index to Volume 1 (1974), 1; Index to Volume 2 (1975), 1; Index to Volume 3 (1976), 1; Index to Volume 4 (1977), 2; Index to Volume 5 (1978), 2; Index to Volume 6 (1979), 3; Index to Volume 7 (1980), 3; Index to Volume 8 (1981), 3; Index to Volume 9 (1982), 4; Index to Volume 10 (1983), 4; Index to Volume 11 (1984), 4; Index to Volume 12 (1985), 4; Comprehensive Index to Volumes 1-12, 5; Index to Volume 13 (1986), 5; Index to Volume 14 (1987), 5; Index to Volume 15 (1988), 6; Index to Volume 16 (1989), 6; Index to Volume 17 (1990), 6; Index to Volume 18 (1991) ... Index to Volume 1 (1974): Chapman, C. R. “The Impossibility of Observing Asteroid Surfaces” 17. Dunham, D. W. (Letter regarding 1 Ceres occultation) 35. Hodgson, R. G. “Useful Work on Minor Planets” 1-4. Hodgson, R. G. “Implications of Recent Diameter and Mass Determinations of Ceres” 24-28. Hodgson, R. G. “Minor Planet Work for Smaller Observatories” 30-35. Hodgson, R. G. “Observations of 887 Alinda” 36-37. ... Tedesco, E. F. “Determination of the Absolute Magnitude and Phase Coefficient of Minor Planet 887 Alinda” 25-27. Tedesco, E. F. “On the Brightnesses of Asteroids” 3-9. Wallentine, D. and Porter, A. “Opportunities for Visual Photometry of Selected Minor Planets, April - June 1975” 31-33. Welch, D., Binzel, R., and Patterson, J. “The Rotation Period of 18 Melpomene” 20-21. Index to Volume 3 (1976): Chapman, C. R. “Close Approach ...