Determinants

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

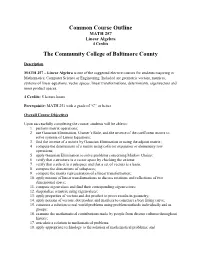

Common Course Outline MATH 257 Linear Algebra 4 Credits

The Community College of Baltimore County

Description

MATH 257 – Linear Algebra is one of the suggested elective courses for students majoring in Mathematics, Computer Science or Engineering. Included are geometric vectors, matrices, systems of linear equations, vector spaces, linear transformations, determinants, eigenvectors and inner product spaces.

4 Credits: 5 lecture hours

Prerequisite: MATH 251 with a grade of “C” or better

Overall Course Objectives

Upon successfully completing the course, students will be able to:

1. perform matrix operations;
2. use Gaussian Elimination, Cramer’s Rule, and the inverse of the coefficient matrix to solve systems of Linear Equations;
3. find the inverse of a matrix by Gaussian Elimination or using the adjoint matrix;
4. compute the determinant of a matrix using cofactor expansion or elementary row operations;
5. apply Gaussian Elimination to solve problems concerning Markov Chains;
6. verify that a structure is a vector space by checking the axioms;
7. verify that a subset is a subspace and that a set of vectors is a basis;
8. compute the dimensions of subspaces;
9. compute the matrix representation of a linear transformation;
10. apply notions of linear transformations to discuss rotations and reflections of two dimensional space;
11. compute eigenvalues and find their corresponding eigenvectors;
12. diagonalize a matrix using eigenvalues;
13. apply properties of vectors and dot product to prove results in geometry;
14. apply notions of vectors, dot product and matrices to construct a best fitting curve;
15. construct a solution to real world problems using problem methods individually and in groups;
16. -

21. Orthonormal Bases

The canonical/standard basis

$$e_1 = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix}, \quad e_2 = \begin{pmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{pmatrix}, \quad \ldots, \quad e_n = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix}$$

has many useful properties.

• Each of the standard basis vectors has unit length:

$$\|e_i\| = \sqrt{e_i \cdot e_i} = \sqrt{e_i^T e_i} = 1.$$

• The standard basis vectors are orthogonal (in other words, at right angles or perpendicular):

$$e_i \cdot e_j = e_i^T e_j = 0 \quad \text{when } i \neq j.$$

This is summarized by

$$e_i^T e_j = \delta_{ij} = \begin{cases} 1 & i = j \\ 0 & i \neq j, \end{cases}$$

where $\delta_{ij}$ is the Kronecker delta. Notice that the Kronecker delta gives the entries of the identity matrix.

Given column vectors $v$ and $w$, we have seen that the dot product $v \cdot w$ is the same as the matrix multiplication $v^T w$. This is the inner product on $\mathbb{R}^n$. We can also form the outer product $v w^T$, which gives a square matrix.

The outer product on the standard basis vectors is interesting. Set

$$\Pi_1 = e_1 e_1^T = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix} \begin{pmatrix} 1 & 0 & \cdots & 0 \end{pmatrix} = \begin{pmatrix} 1 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 0 \end{pmatrix}$$

$$\vdots$$

$$\Pi_n = e_n e_n^T = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix} \begin{pmatrix} 0 & 0 & \cdots & 1 \end{pmatrix} = \begin{pmatrix} 0 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 1 \end{pmatrix}$$

In short, $\Pi_i$ is the diagonal square matrix with a 1 in the $i$th diagonal position and zeros everywhere else.

Determinant Notes

UNIT FIVE DETERMINANTS

5.1 INTRODUCTION

In unit one the determinant of a $2 \times 2$ matrix was introduced and used in the evaluation of a cross product. In this chapter we extend the definition of a determinant to any size square matrix. The determinant has a variety of applications. The value of the determinant of a square matrix $A$ can be used to determine whether $A$ is invertible or noninvertible. An explicit formula for $A^{-1}$ exists that involves the determinant of $A$. Some systems of linear equations have solutions that can be expressed in terms of determinants.

5.2 DEFINITION OF THE DETERMINANT

Recall that in chapter one the determinant of the $2 \times 2$ matrix

$$A = \begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix}$$

was defined to be the number $a_{11} a_{22} - a_{12} a_{21}$ and that the notation $\det(A)$ or $|A|$ was used to represent the determinant of $A$. For any given $n \times n$ matrix $A = [a_{ij}]_{n \times n}$, the notation $A_{ij}$ will be used to denote the $(n-1) \times (n-1)$ submatrix obtained from $A$ by deleting the $i$th row and the $j$th column of $A$. The determinant of any size square matrix $A = [a_{ij}]_{n \times n}$ is defined recursively as follows.

Definition of the Determinant

Let $A = [a_{ij}]_{n \times n}$ be an $n \times n$ matrix.

(1) If $n = 1$, that is $A = [a_{11}]$, then we define $\det(A) = a_{11}$.

(2) If $n > 1$, we define

$$\det(A) = \sum_{k=1}^{n} (-1)^{1+k}\, a_{1k} \det(A_{1k}).$$

Example

If $A = [5]$, then by part (1) of the definition of the determinant, $\det(A) = 5$.
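The recursive definition above translates almost line for line into code. The following is a minimal sketch, not taken from the notes themselves: the function names `minor` and `det` are illustrative, matrices are assumed to be plain lists of rows, and indices are 0-based, so the sign $(-1)^{1+k}$ of the 1-based formula becomes `(-1) ** k`.

```python
def minor(matrix, i, j):
    """Return the submatrix A_ij: `matrix` with row i and column j deleted (0-based)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(matrix) if r != i]


def det(matrix):
    """Determinant by cofactor expansion along the first row."""
    n = len(matrix)
    if n == 0 or any(len(row) != n for row in matrix):
        raise ValueError("expected a non-empty square matrix")
    if n == 1:
        # Part (1) of the definition: det([a11]) = a11.
        return matrix[0][0]
    # Part (2): sum over the first row; with 0-based k the sign is (-1)**k.
    return sum(
        (-1) ** k * matrix[0][k] * det(minor(matrix, 0, k))
        for k in range(n)
    )


print(det([[5]]))                               # 1 x 1 case from the example
print(det([[1, 2], [3, 4]]))                    # a11*a22 - a12*a21
print(det([[1, 2, 3], [4, 5, 6], [7, 8, 10]]))  # 3 x 3 case
```

This version performs on the order of $n!$ multiplications, so it is suited to checking small examples by hand rather than to large matrices.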

Multivector Differentiation and Linear Algebra 17th Santaló

17th Santaló Summer School 2016, Santander

Joan Lasenby, Signal Processing Group, Engineering Department, Cambridge, UK and Trinity College Cambridge

[email protected], www-sigproc.eng.cam.ac.uk/ s jl

23 August 2016

Overview

- The Multivector Derivative.
- Examples of differentiation wrt multivectors.
- Linear Algebra: matrices and tensors as linear functions mapping between elements of the algebra.
- Functional Differentiation: very briefly...
- Summary

The Multivector Derivative

Recall our definition of the directional derivative in the $a$ direction:

$$a \cdot \nabla F(x) = \lim_{\epsilon \to 0} \frac{F(x + \epsilon a) - F(x)}{\epsilon}$$

We now want to generalise this idea to enable us to find the derivative of $F(X)$ in the $A$ ‘direction’, where $X$ is a general mixed grade multivector (so $F(X)$ is a general multivector valued function of $X$).

Let us use $\ast$ to denote taking the scalar part, i.e. $P \ast Q \equiv \langle PQ \rangle$. Then, provided $A$ has the same grades as $X$, it makes sense to define:

$$A \ast \partial_X F(X) = \lim_{t \to 0} \frac{F(X + tA) - F(X)}{t}$$

Exercise 1 Advanced SDPs Matrices and Vectors

Matrices and vectors: All these are very important facts that we will use repeatedly, and should be internalized.

• Inner product and outer products. Let $u = (u_1, \ldots, u_n)$ and $v = (v_1, \ldots, v_n)$ be vectors in $\mathbb{R}^n$. Then $u^T v = \langle u, v \rangle = \sum_{i=1}^{n} u_i v_i$ is the inner product of $u$ and $v$.

The outer product $uv^T$ is an $n \times n$ rank 1 matrix $B$ with entries $B_{ij} = u_i v_j$. The matrix $B$ is a very useful operator. Suppose $v$ is a unit vector. Then $B$ sends $v$ to $u$, i.e. $Bv = uv^T v = u$, but $Bw = 0$ for all $w \in v^\perp$.

• Matrix Product. For any two matrices $A \in \mathbb{R}^{m \times n}$ and $B \in \mathbb{R}^{n \times p}$, the standard matrix product $C = AB$ is the $m \times p$ matrix with entries $c_{ij} = \sum_{k=1}^{n} a_{ik} b_{kj}$. Here are two very useful ways to view this.

Inner products: Let $r_i$ be the $i$-th row of $A$, or equivalently the $i$-th column of $A^T$, the transpose of $A$. Let $b_j$ denote the $j$-th column of $B$. Then $c_{ij} = r_i^T b_j$ is the dot product of the $i$-th column of $A^T$ and the $j$-th column of $B$.

Sums of outer products: $C$ can also be expressed as outer products of columns of $A$ and rows of $B$.

Exercise: Show that $C = \sum_{k=1}^{n} a_k b_k^T$ where $a_k$ is the $k$-th column of $A$ and $b_k$ is the $k$-th row of $B$ (or equivalently the $k$-th column of $B^T$).
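A small numerical check can make the two views of the matrix product concrete before attempting the proof. The sketch below is illustrative only: the helper names `outer`, `matmul` and `matmul_by_outer_products` and the sample matrices are assumptions, not part of the exercise sheet. It uses plain Python lists and checks one instance; it does not replace the proof.

```python
def outer(u, v):
    """Outer product u v^T: the matrix with entries u_i * v_j."""
    return [[ui * vj for vj in v] for ui in u]


def matmul(A, B):
    """Standard product: c_ij = sum_k a_ik * b_kj (row of A dotted with column of B)."""
    n, p = len(B), len(B[0])
    return [[sum(row[k] * B[k][j] for k in range(n)) for j in range(p)] for row in A]


def matmul_by_outer_products(A, B):
    """Same product, built as a sum over k of (k-th column of A)(k-th row of B)."""
    m, n, p = len(A), len(B), len(B[0])
    C = [[0] * p for _ in range(m)]
    for k in range(n):
        a_k = [A[i][k] for i in range(m)]  # k-th column of A
        b_k = B[k]                         # k-th row of B
        term = outer(a_k, b_k)
        for i in range(m):
            for j in range(p):
                C[i][j] += term[i][j]
    return C


A = [[1, 2], [3, 4], [5, 6]]   # 3 x 2
B = [[1, 0, 2], [0, 1, 3]]     # 2 x 3
print(matmul(A, B))
print(matmul_by_outer_products(A, B))

# Rank-1 operator: with v a unit vector, (u v^T) v = u and (u v^T) w = 0 for w orthogonal to v.
u, v, w = [2, 3], [1, 0], [0, 1]
P = outer(u, v)
print(matmul(P, [[x] for x in v]))  # column vector equal to u
print(matmul(P, [[x] for x in w]))  # zero column vector
```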

Things You Need to Know About Linear Algebra Math 131 Multivariate

Things you need to know about linear algebra

Math 131 Multivariate Calculus

D Joyce, Spring 2014

The relation between linear algebra and multivariate calculus. We'll spend the first few meetings reviewing linear algebra. We'll look at just about everything in chapter 1.

Linear algebra is the study of linear transformations (also called linear functions) from $n$-dimensional space to $m$-dimensional space, where $m$ is usually equal to $n$, and that's often 2 or 3. A linear transformation $f : \mathbb{R}^n \to \mathbb{R}^m$ is one that preserves addition and scalar multiplication, that is, $f(a + b) = f(a) + f(b)$, and $f(ca) = cf(a)$. We'll generally use bold face for vectors and for vector- [...]

[...] the standard $(x, y, z)$ coordinate three-space. Notations for vectors. Geometric interpretation of vectors as displacements as well as vectors as points. Vector addition, the zero vector $0$, vector subtraction, scalar multiplication, their properties, and their geometric interpretation.

We start using these concepts right away. In chapter 2, we'll begin our study of vector-valued functions, and we'll use the coordinates, vector notation, and the rest of the topics reviewed in this section.

Section 1.2. Vectors and equations in $\mathbb{R}^3$. Standard basis vectors $i, j, k$ in $\mathbb{R}^3$. Parametric equations for lines. Symmetric form equations for a line in $\mathbb{R}^3$. Parametric equations for curves $x : \mathbb{R} \to \mathbb{R}^2$ in the plane, where $x(t) = (x(t), y(t))$.

We'll use standard basis vectors throughout the course, beginning with chapter 2. We'll study tangent lines of curves and tangent planes of surfaces in that chapter and throughout the course.

Schaum's Outline of Linear Algebra (4th Edition)

SCHAUM’S outlines

Linear Algebra, Fourth Edition

Seymour Lipschutz, Ph.D., Temple University

Marc Lars Lipson, Ph.D., University of Virginia

Schaum’s Outline Series

New York Chicago San Francisco Lisbon London Madrid Mexico City Milan New Delhi San Juan Seoul Singapore Sydney Toronto

Copyright © 2009, 2001, 1991, 1968 by The McGraw-Hill Companies, Inc. All rights reserved. Except as permitted under the United States Copyright Act of 1976, no part of this publication may be reproduced or distributed in any form or by any means, or stored in a database or retrieval system, without the prior written permission of the publisher.

ISBN: 978-0-07-154353-8 MHID: 0-07-154353-8

The material in this eBook also appears in the print version of this title: ISBN: 978-0-07-154352-1, MHID: 0-07-154352-X.

All trademarks are trademarks of their respective owners. Rather than put a trademark symbol after every occurrence of a trademarked name, we use names in an editorial fashion only, and to the benefit of the trademark owner, with no intention of infringement of the trademark. Where such designations appear in this book, they have been printed with initial caps.

McGraw-Hill eBooks are available at special quantity discounts to use as premiums and sales promotions, or for use in corporate training programs. To contact a representative please e-mail us at [email protected].

TERMS OF USE

This is a copyrighted work and The McGraw-Hill Companies, Inc. (“McGraw-Hill”) and its licensors reserve all rights in and to the work.

Math 217: Multilinearity of Determinants Professor Karen Smith (C)2015 UM Math Dept Licensed Under a Creative Commons By-NC-SA 4.0 International License

A. Let $T : V \to V$ be a linear transformation where $V$ has dimension $n$.

1. What is meant by the determinant of $T$? Why is this well-defined?

Solution note: The determinant of $T$ is the determinant of the $B$-matrix of $T$, for any basis $B$ of $V$. Since all $B$-matrices of $T$ are similar, and similar matrices have the same determinant, this is well-defined: it doesn't depend on which basis we pick.

2. Define the rank of $T$.

Solution note: The rank of $T$ is the dimension of the image.

3. Explain why $T$ is an isomorphism if and only if $\det T$ is not zero.

Solution note: $T$ is an isomorphism if and only if $[T]_B$ is invertible (for any choice of basis $B$), which happens if and only if $\det T \neq 0$.

4. Now let $V = \mathbb{R}^3$ and let $T$ be rotation around the axis $L$ (a line through the origin) by an angle $\theta$. Find a basis for $\mathbb{R}^3$ in which the matrix of $\rho$ is

$$\begin{bmatrix} 1 & 0 & 0 \\ 0 & \cos\theta & -\sin\theta \\ 0 & \sin\theta & \cos\theta \end{bmatrix}.$$

Use this to compute the determinant of $T$. Is $T$ orthogonal?

Solution note: Let $v$ be any vector spanning $L$ and let $u_1, u_2$ be an orthonormal basis for $V = L^\perp$. Rotation fixes $\vec{v}$, which means the $B$-matrix in the basis $(v, u_1, u_2)$ has first column $\begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}$.

Determinants Math 122 Calculus III D Joyce, Fall 2012

What they are. A determinant is a value associated to a square array of numbers, that square array being called a square matrix. For example, here are determinants of a general $2 \times 2$ matrix and a general $3 \times 3$ matrix.

$$\begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc.$$

$$\begin{vmatrix} a & b & c \\ d & e & f \\ g & h & i \end{vmatrix} = aei + bfg + cdh - ceg - afh - bdi.$$

The determinant of a matrix $A$ is usually denoted $|A|$ or $\det(A)$.

You can think of the rows of the determinant as being vectors. For the $3 \times 3$ matrix above, the vectors are $u = (a, b, c)$, $v = (d, e, f)$, and $w = (g, h, i)$. Then the determinant is a value associated to $n$ vectors in $\mathbb{R}^n$.

There's a general definition for $n \times n$ determinants. It's a particular signed sum of products of $n$ entries in the matrix where each product is of one entry in each row and column. The two ways you can choose one entry in each row and column of the $2 \times 2$ matrix give you the two products $ad$ and $bc$. There are six ways of choosing one entry in each row and column in a $3 \times 3$ matrix, and generally, there are $n!$ ways in an $n \times n$ matrix. Thus, the determinant of a $4 \times 4$ matrix is the signed sum of 24, which is $4!$, terms. In this general definition, half the terms are taken positively and half negatively. In class, we briefly saw how the signs are determined by permutations.
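The signed-sum description can be sketched in code. The example below assumes the standard sign rule, which the excerpt mentions but does not spell out: a term is taken positively when the permutation of column indices has an even number of inversions and negatively when it has an odd number. The names `sign` and `det_by_permutations` are illustrative.

```python
from itertools import permutations


def sign(perm):
    """+1 for an even permutation, -1 for an odd one, by counting inversions."""
    inversions = sum(
        1
        for i in range(len(perm))
        for j in range(i + 1, len(perm))
        if perm[i] > perm[j]
    )
    return -1 if inversions % 2 else 1


def det_by_permutations(matrix):
    """Signed sum over all ways of picking one entry from each row and column."""
    n = len(matrix)
    total = 0
    for perm in permutations(range(n)):
        product = 1
        for row, col in enumerate(perm):
            product *= matrix[row][col]  # entry chosen from this row
        total += sign(perm) * product
    return total


print(det_by_permutations([[1, 2], [3, 4]]))                    # ad - bc
print(det_by_permutations([[1, 2, 3], [4, 5, 6], [7, 8, 10]]))  # six signed terms
print(len(list(permutations(range(4)))))                        # number of terms for 4 x 4
```

For a $4 \times 4$ matrix the loop visits 24 permutations, and the signs split evenly between positive and negative, matching the count given in the text.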

Matrices and Tensors

APPENDIX: MATRICES AND TENSORS

A.1. INTRODUCTION AND RATIONALE

The purpose of this appendix is to present the notation and most of the mathematical techniques that are used in the body of the text. The audience is assumed to have been through several years of college-level mathematics, which included the differential and integral calculus, differential equations, functions of several variables, partial derivatives, and an introduction to linear algebra. Matrices are reviewed briefly, and determinants, vectors, and tensors of order two are described. The application of this linear algebra to material that appears in undergraduate engineering courses on mechanics is illustrated by discussions of concepts like the area and mass moments of inertia, Mohr’s circles, and the vector cross and triple scalar products. The notation, as far as possible, will be a matrix notation that is easily entered into existing symbolic computational programs like Maple, Mathematica, Matlab, and Mathcad. The desire to represent the components of three-dimensional fourth-order tensors that appear in anisotropic elasticity as the components of six-dimensional second-order tensors and thus represent these components in matrices of tensor components in six dimensions leads to the nontraditional part of this appendix. This is also one of the nontraditional aspects in the text of the book, but a minor one. This is described in §A.11, along with the rationale for this approach.

A.2. DEFINITION OF SQUARE, COLUMN, AND ROW MATRICES

An r-by-c matrix, M, is a rectangular array of numbers consisting of r rows and c columns:

28. Exterior Powers

28.1 Desiderata
28.2 Definitions, uniqueness, existence
28.3 Some elementary facts
28.4 Exterior powers $\bigwedge^i f$ of maps
28.5 Exterior powers of free modules
28.6 Determinants revisited
28.7 Minors of matrices
28.8 Uniqueness in the structure theorem
28.9 Cartan's lemma
28.10 Cayley-Hamilton Theorem
28.11 Worked examples

While many of the arguments here have analogues for tensor products, it is worthwhile to repeat these arguments with the relevant variations, both for practice, and to be sensitive to the differences.

1. Desiderata

Again, we review missing items in our development of linear algebra.

We are missing a development of determinants of matrices whose entries may be in commutative rings, rather than fields. We would like an intrinsic definition of determinants of endomorphisms, rather than one that depends upon a choice of coordinates, even if we eventually prove that the determinant is independent of the coordinates. We anticipate that Artin's axiomatization of determinants of matrices should be mirrored in much of what we do here.

We want a direct and natural proof of the Cayley-Hamilton theorem. Linear algebra over fields is insufficient, since the introduction of the indeterminate $x$ in the definition of the characteristic polynomial takes us outside the class of vector spaces over fields.

We want to give a conceptual proof for the uniqueness part of the structure theorem for finitely-generated modules over principal ideal domains. Multi-linear algebra over fields is surely insufficient for this.

2. Definitions, uniqueness, existence

Let $R$ be a commutative ring with 1.

Università degli Studi di Trieste: A Gentle Introduction to Clifford Algebra

Università degli Studi di Trieste

Department of Physics, Degree Programme in Physics

Bachelor's Thesis

A Gentle Introduction to Clifford Algebra

Candidate: Daniele Ceravolo

Supervisor: prof. Marco Budinich

Academic Year 2015–2016

Contents

1 Introduction
  1.1 Brief Historical Sketch
2 Heuristic Development of Clifford Algebra
  2.1 Geometric Product
  2.2 Bivectors
  2.3 Grading and Blade
  2.4 Multivector Algebra
    2.4.1 Pseudoscalar and Hodge Duality
    2.4.2 Basis and Reciprocal Frames
  2.5 Clifford Algebra of the Plane
    2.5.1 Relation with Complex Numbers
  2.6 Clifford Algebra of Space
    2.6.1 Pauli algebra
    2.6.2 Relation with Quaternions
  2.7 Reflections
    2.7.1 Cross Product
  2.8 Rotations
  2.9 Differentiation
    2.9.1 Multivectorial Derivative
    2.9.2 Spacetime Derivative
3 Spacetime Algebra
  3.1 Spacetime Bivectors and Pseudoscalar
  3.2 Spacetime Frames
  3.3 Relative Vectors
  3.4 Even Subalgebra
  3.5 Relative Velocity
  3.6 Momentum and Wave Vectors
  3.7 Lorentz Transformations
    3.7.1 Addition of Velocities
    3.7.2 The Lorentz Group
  3.8 Relativistic Visualization
4 Electromagnetism in Clifford Algebra
  4.1 The Vector Potential
  4.2 Electromagnetic Field Strength
  4.3 Free Fields
5 Conclusions
  5.1 Acknowledgements

Chapter 1

Introduction

The aim of this thesis is to show how an approach to classical and relativistic physics based on Clifford algebras can shed light on some hidden geometric meanings in our models.