Experimental Epistemology James R. Beebe (University at Buffalo) Forthcoming in Companion to Epistemology, Edited by Andrew Cullison (Continuum) Word Count: 8,351

Total Page:16



Recommended publications

-

STILL STUCK on the BACKWARD CLOCK: a REJOINDER to ADAMS, BARKER and CLARKE John N

STILL STUCK ON THE BACKWARD CLOCK: A REJOINDER TO ADAMS, BARKER AND CLARKE John N. WILLIAMS ABSTRACT: Neil Sinhababu and I presented Backward Clock, an original counterexample to Robert Nozick’s truth-tracking analysis of propositional knowledge. In their latest defence of the truth-tracking theories, “Methods Matter: Beating the Backward Clock,” Fred Adams, John A. Barker and Murray Clarke try again to defend Nozick’s and Fred Dretske’s early analysis of propositional knowledge against Backward Clock. They allege failure of truth-adherence, mistakes on my part about methods, and appeal to charity, ‘equivocation,’ reliable methods and unfair internalism. I argue that these objections all fail. They are still stuck with the fact that the tracking theories fall to Backward Clock, an even more useful test case for other analyses of knowledge than might have first appeared. KEYWORDS: sensitive belief, extra-sensitive belief, sensitive methods, truth-adherence, reliable methods, Backward Clock In a seminal paper, “Resurrecting the Tracking Theories,” Fred Adams and Murray Clarke argued persuasively and ingeniously that a number of well-known examples that appear clearly fatal to Robert Nozick and Fred Dretske’s truth-tracking analyses of knowledge are not really counterexamples at all.1 These include Ray Martin’s Racetrack, George Pappas and Marshall Swain’s Generator, and Laurence Bonjour’s Clairvoyant,2 as well as Saul Kripke’s Red Barn, his Deceased Dictator, and his Sloppy Scientist.3 So taken was I with this defence that I assumed that the truth-tracking analyses were impregnable. Until that is, Neil 1 Fred Adams and Murray Clarke, “Resurrecting the Tracking Theories,” Australasian Journal of Philosophy 83, 2 (2005): 207-221. -

The Philosophical Underpinnings of Educational Research

The Philosophical Underpinnings of Educational Research Lindsay Mack Abstract This article traces the underlying theoretical framework of educational research. It outlines the definitions of epistemology, ontology and paradigm and the origins, main tenets, and key thinkers of the 3 paradigms: positivist, interpretivist and critical. By closely analyzing each paradigm, the literature review focuses on the ontological and epistemological assumptions of each paradigm. Finally the author analyzes not only the paradigm’s weakness but also the author’s own construct of reality and knowledge which align with the critical paradigm. Key terms: Paradigm, Ontology, Epistemology, Positivism, Interpretivism The English Language Teaching (ELT) field has moved from an ad hoc field with amateurish research to a much more serious enterprise of professionalism. More teachers are conducting research to not only inform their teaching in the classroom but also to bridge the gap between the external researcher dictating policy and the teacher negotiating that policy with the practical demands of their classroom. I was a layperson, not an educational researcher. Determined to emancipate myself from my layperson identity, I began to analyze the different philosophical underpinnings of each paradigm, reading about the great thinkers’ theories and the evolution of social science research. Through this process I began to examine how I view the world, thus realizing my own construction of knowledge and social reality, which is actually quite loose and chaotic. Most importantly, I realized that I identify most with the critical paradigm assumptions and that my future desired role as an educational researcher is to affect change and challenge dominant social and political discourses in ELT. -

Forthcoming in Oxford Studies in Experimental Philosophy Causal Reasoning

Forthcoming in Oxford Studies in Experimental Philosophy Causal Reasoning: Philosophy and Experiment* James Woodward History and Philosophy of Science University of Pittsburgh 1. Introduction The past few decades have seen much interesting work, both within and outside philosophy, on causation and allied issues. Some of the philosophy is understood by its practitioners as metaphysics: it asks such questions as what causation “is”, whether there can be causation by absences, and so on. Other work reflects the interests of philosophers in the role that causation and causal reasoning play in science. Still other work, more computational in nature, focuses on problems of causal inference and learning, typically with substantial use of such formal apparatuses as Bayes nets (e.g., Pearl, 2000, Spirtes et al., 2001) or hierarchical Bayesian models (e.g., Griffiths and Tenenbaum, 2009). All this work, the philosophical and the computational, might be described as “theoretical” and it often has a strong “normative” component in the sense that it attempts to describe how we ought to learn, reason, and judge about causal relationships. Alongside this normative/theoretical work, there has also been a great deal of empirical work (hereafter Empirical Research on Causation or ERC) which focuses on how various subjects learn causal relationships, reason and judge causally. Much of this work has been carried out by psychologists, but it also includes contributions by primatologists, researchers in animal learning, and neurobiologists, and more rarely, philosophers. In my view, the interactions between these two groups, the normative/theoretical and the empirical, have been extremely fruitful. In what follows, I describe some ideas about causal reasoning that have emerged from this research, focusing on the “interventionist” ideas that have figured in my own work. -

Introduction to Philosophy. Social Studies--Language Arts: 6414.16. INSTITUTION Dade County Public Schools, Miami, Fla

DOCUMENT RESUME ED 086 604 SO 006 822 AUTHOR Norris, Jack A., Jr. TITLE Introduction to Philosophy. Social Studies--Language Arts: 6414.16. INSTITUTION Dade County Public Schools, Miami, Fla. PUB DATE 72 NOTE 20p.; Authorized Course of Instruction for the Quinmester Program EDRS PRICE MF-$0.65 HC-$3.29 DESCRIPTORS Course Objectives; Curriculum Guides; Grade 10; Grade 11; Grade 12; *Language Arts; Learning Activities; *Logic; Non Western Civilization; *Philosophy; Resource Guides; Secondary Grades; *Social Studies; *Social Studies Units; Western Civilization IDENTIFIERS *Quinmester Program ABSTRACT Western and non-Western philosophers and their ideas are introduced to 10th through 12th grade students in this general social studies Quinmester course designed to be used as a preparation for in-depth study of the various schools of philosophical thought. By acquainting students with the questions and categories of philosophy, a point of departure for further study is developed. Through suggested learning activities the meaning of philosophy is defined. The Socratic, deductive, inductive, intuitive and eclectic approaches to philosophical thought are examined, as are three general areas of philosophy: metaphysics, epistemology, and axiology. Logical reasoning is applied to major philosophical questions. This course is arranged, as are other quinmester courses, with sections on broad goals, course content, activities, and materials. A related document is ED 071 937. (KSM) FILMED FROM BEST AVAILABLE COPY U S DEPARTMENT EDUCATION OF HEALTH. NATIONAL -

Experimental Philosophy, Robert Kane, and the Concept of Free Will

Journal of Cognition and Neuroethics Experimental Philosophy, Robert Kane, and the Concept of Free Will J. Neil Otte University at Buffalo, The State University of New York Biography J. Neil Otte is presently in the Ph.D. program at the University at Buffalo (SUNY) and works in moral psychology, cognitive science, and the history of philosophy. For six years, he was an adjunct lecturer in philosophy at John Jay College of Criminal Justice in New York City. In the last three years, he has been an organizer of the Buffalo Annual Experimental Philosophy Conference, an organizer for a conference on sentiment and reason in early modern philosophy, and has worked as an ontologist for the research foundation, CUBRC. Acknowledgements The author wishes to thank Gunnar Björnsson, Gregg Caruso, and Oisín Deery for their supportive comments and conversation during the Free Will Conference at the Insight Institute of Neurosurgery and Neuroscience in Fall of 2014, as well as conference organizer Jami Anderson. Publication Details Journal of Cognition and Neuroethics (ISSN: 2166-5087). March, 2015. Volume 3, Issue 1. Citation Otte, J. Neil. 2015. “Experimental Philosophy, Robert Kane, and the Concept of Free Will.” Journal of Cognition and Neuroethics 3 (1): 281–296. Experimental Philosophy, Robert Kane, and the Concept of Free Will J. Neil Otte Abstract Trends in experimental philosophy have provided new and compelling results that are cause for re-evaluations in contemporary discussions of free will. In this paper, I argue for one such re-evaluation by criticizing Robert Kane’s well-known views on free will. I argue that Kane’s claims about pre-theoretical intuitions are not supported by empirical findings on two accounts. -

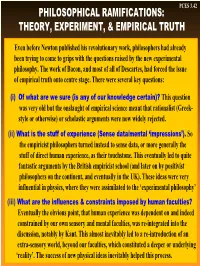

Philosophical Ramifications: Theory, Experiment, & Empirical Truth

PCES 3.42 PHILOSOPHICAL RAMIFICATIONS: THEORY, EXPERIMENT, & EMPIRICAL TRUTH Even before Newton published his revolutionary work, philosophers had already been trying to come to grips with the questions raised by the new experimental philosophy. The work of Bacon, and most of all of Descartes, had forced the issue of empirical truth onto centre stage. There were several key questions: (i) Of what are we sure (is any of our knowledge certain)? This question was very old but the onslaught of empirical science meant that rationalist (Greek-style or otherwise) or scholastic arguments were now widely rejected. (ii) What is the stuff of experience (sense data/mental ‘impressions’)? So the empiricist philosophers turned instead to sense data, or more generally the stuff of direct human experience, as their touchstone. This eventually led to quite fantastic arguments by the British empiricist school (and later on by positivist philosophers on the continent, and eventually in the UK). These ideas were very influential in physics, where they were assimilated to the ‘experimental philosophy’. (iii) What are the influences & constraints imposed by human faculties? Eventually the obvious point, that human experience was dependent on and indeed constrained by our own sensory and mental faculties, was re-integrated into the discussion, notably by Kant. This almost inevitably led to a re-introduction of an extra-sensory world, beyond our faculties, which constituted a deeper or underlying ‘reality’. The success of new physical ideas inevitably helped this process. PCES 3.43 BRITISH EMPIRICISM I: Locke & “Sensations” Locke was the first British philosopher of note after Bacon; his work is a reaction to the European rationalists, and continues to elaborate ‘experimental philosophy’. -

Challenging Conventional Wisdom: Development Implications of Trade in Services Liberalization

UNITED NATIONS CONFERENCE ON TRADE AND DEVELOPMENT TRADE, POVERTY AND CROSS-CUTTING DEVELOPMENT ISSUES STUDY SERIES NO. 2 Challenging Conventional Wisdom: Development Implications of Trade in Services Liberalization Luis Abugattas Majluf and Simonetta Zarrilli Division on International Trade in Goods and Services, and Commodities UNCTAD UNITED NATIONS New York and Geneva, 2007 NOTE Luis Abugattas Majluf and Simonetta Zarrilli are staff members of the Division on International Trade in Goods and Services, and Commodities of the UNCTAD secretariat. Part of this study draws on policy-relevant research undertaken at UNCTAD on the assessment of trade in services liberalization and its development implications. The views expressed in this study are those of the authors and do not necessarily reflect the views of the United Nations. The designations employed and the presentation of the material do not imply the expression of any opinion whatsoever on the part of the United Nations Secretariat concerning the legal status of any country, territory, city or area, or of its authorities, or concerning the delimitation of its frontiers or boundaries. Material in this publication may be freely quoted or reprinted, but acknowledgement is requested, together with a reference to the document number. 
It would be appreciated if a copy of the publication containing the quotation or reprint were sent to the UNCTAD secretariat: Chief Trade Analysis Branch Division on International Trade in Goods and Services, and Commodities United Nations Conference on Trade and Development Palais des Nations CH-1211 Geneva Series Editor: Khalilur Rahman Chief, Trade Analysis Branch DITC/UNCTAD UNCTAD/DITC/TAB/POV/2006/1 UNITED NATIONS PUBLICATION ISSN 1815-7742 © Copyright United Nations 2007 All rights reserved ii ABSTRACT The implications of trade in services liberalization on poverty alleviation, on welfare and on the overall development prospects of developing countries remain at the heart of the debate on the interlinkages between trade and development. -

Survey Experiments

IU Workshop in Methods – 2019 Survey Experiments: Testing Causality in Diverse Samples Trenton D. Mize Department of Sociology & Advanced Methodologies (AMAP) Purdue University Contents: INTRODUCTION (Overview; What is a survey experiment?; What is an experiment?; Independent and dependent variables; Experimental Conditions); WHY CONDUCT A SURVEY EXPERIMENT? (Internal, external, and construct validity) -

Experimental Philosophy Jordan Kiper, Stephen Stich, H. Clark Barrett

Experimental Philosophy Jordan Kiper, Stephen Stich, H. Clark Barrett, & Edouard Machery Kiper, J., Stich, S., Barrett, H.C., & Machery, E. (in press). Experimental philosophy. In D. Ludwig, I. Koskinen, Z. Mncube, L. Poliseli, & L. Reyes-Garcia (Eds.), Global Epistemologies and Philosophies of Science. New York, NY: Routledge. Acknowledgments: This publication was made possible through the support of a grant from the John Templeton Foundation. The opinions expressed in this publication are those of the author(s) and do not necessarily reflect the views of the John Templeton Foundation. Abstract Philosophers have argued that some philosophical concepts are universal, but it is increasingly clear that the question of philosophical universals is far from settled, and that far more cross-cultural research is necessary. In this chapter, we illustrate the relevance of investigating philosophical concepts and describe results from recent cross-cultural studies in experimental philosophy. We focus on the concept of knowledge and outline an ongoing project, the Geography of Philosophy Project, which empirically investigates the concepts of knowledge, understanding, and wisdom across cultures, languages, religions, and socioeconomic groups. We outline questions for future research on philosophical concepts across cultures, with an aim towards resolving questions about universals and variation in philosophical concepts. 1 Introduction For centuries, thinkers have urged that fundamental philosophical concepts, such as the concepts of knowledge or right and wrong, are universal or at least shared by all rational people (e.g., Plato 1892/375 BCE; Kant, 1998/1781; Foot, 2003). Yet many social scientists, in particular cultural anthropologists (e.g., Boas, 1940), but also continental philosophers such as Foucault (1969) have remained skeptical of these claims. -

Lab Experiments Are a Major Source of Knowledge in the Social Sciences

IZA DP No. 4540 Lab Experiments Are a Major Source of Knowledge in the Social Sciences Armin Falk James J. Heckman October 2009 DISCUSSION PAPER SERIES Forschungsinstitut zur Zukunft der Arbeit Institute for the Study of Labor Lab Experiments Are a Major Source of Knowledge in the Social Sciences Armin Falk University of Bonn, CEPR, CESifo and IZA James J. Heckman University of Chicago, University College Dublin, Yale University, American Bar Foundation and IZA Discussion Paper No. 4540 October 2009 IZA P.O. Box 7240 53072 Bonn Germany Phone: +49-228-3894-0 Fax: +49-228-3894-180 E-mail: [email protected] Any opinions expressed here are those of the author(s) and not those of IZA. Research published in this series may include views on policy, but the institute itself takes no institutional policy positions. The Institute for the Study of Labor (IZA) in Bonn is a local and virtual international research center and a place of communication between science, politics and business. IZA is an independent nonprofit organization supported by Deutsche Post Foundation. The center is associated with the University of Bonn and offers a stimulating research environment through its international network, workshops and conferences, data service, project support, research visits and doctoral program. IZA engages in (i) original and internationally competitive research in all fields of labor economics, (ii) development of policy concepts, and (iii) dissemination of research results and concepts to the interested public. IZA Discussion Papers often represent preliminary work and are circulated to encourage discussion. Citation of such a paper should account for its provisional character. -

The Wisdom of the Few a Collaborative Filtering Approach Based on Expert Opinions from the Web

The Wisdom of the Few: A Collaborative Filtering Approach Based on Expert Opinions from the Web Xavier Amatriain (Telefonica Research, Via Augusta, 177, Barcelona 08021, Spain, [email protected]); Neal Lathia (Dept. of Computer Science, University College of London, Gower Street, London WC1E 6BT, UK, [email protected]); Josep M. Pujol (Telefonica Research, Via Augusta, 177, Barcelona 08021, Spain, [email protected]); Haewoon Kwak (KAIST, Computer Science Dept., Kuseong-dong, Yuseong-gu, Daejeon 305-701, Korea, [email protected]); Nuria Oliver (Telefonica Research, Via Augusta, 177, Barcelona 08021, Spain, [email protected]) ABSTRACT Nearest-neighbor collaborative filtering provides a successful means of generating recommendations for web users. However, this approach suffers from several shortcomings, including data sparsity and noise, the cold-start problem, and scalability. In this work, we present a novel method for recommending items to users based on expert opinions. Our method is a variation of traditional collaborative filtering: rather than applying a nearest neighbor algorithm to the user-rating data, predictions are computed using a set of expert neighbors from an independent dataset, whose opinions 1. INTRODUCTION Collaborative filtering (CF) is the current mainstream approach used to build web-based recommender systems [1]. CF algorithms assume that in order to recommend items to users, information can be drawn from what other similar users liked in the past. The Nearest Neighbor algorithm, for instance, does so by finding, for each user, a number of similar users whose profiles can then be used to predict recommendations. However, defining similarity between users is not an easy task: it is limited by the sparsity and noise in the data and is computationally expensive. -

Legal Theory Workshop UCLA School of Law Samuele Chilovi

Legal Theory Workshop UCLA School of Law Samuele Chilovi Postdoctoral Fellow Pompeu Fabra University “GROUNDING, EXPLANATION, AND LEGAL POSITIVISM” Thursday, October 15, 2020, 12:15-1:15 pm Via Zoom Draft, October 6, 2020. For UCLA Workshop. Please Don’t Cite Or Quote Without Permission. Grounding, Explanation, and Legal Positivism Samuele Chilovi & George Pavlakos 1. Introduction On recent prominent accounts, legal positivism and physicalism about the mind are viewed as making parallel claims about the metaphysical determinants or grounds of legal and mental facts respectively.1 On a first approximation, while physicalists claim that facts about consciousness, and mental phenomena more generally, are fully grounded in physical facts, positivists similarly maintain that facts about the content of the law (in a legal system, at a time) are fully grounded in descriptive social facts.2 Explanatory gap arguments have long played a central role in evaluating physicalist theories in the philosophy of mind, and as such have been widely discussed in the literature.3 Arguments of this kind typically move from a claim that an epistemic gap between physical and phenomenal facts implies a corresponding metaphysical gap, together with the claim that there is an epistemic gap between facts of these kinds, to the negation of physicalism. Such is the structure of, for instance, Chalmers’ (1996) conceivability argument, Levine’s (1983) intelligibility argument, and Jackson’s (1986) knowledge argument. Though less prominently than in the philosophy of mind, explanatory gap arguments have also played some role in legal philosophy. In this area, the most incisive and compelling use of an argument of this kind is constituted by Greenberg’s (2004, 2006a, 2006b) powerful attack on positivism.