Tests of Achievement, Nancy Mather • Barbara J

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The Delightful Book That Answers the Questions

The delightful book that answers the questions...

- Why is my home being invaded by bats? And how can I make them find somewhere else to live?
- Why is the great horned owl one of the few predators to regularly dine on skunk?
- What are the three reasons you may have weasels around your house but never see them?
- What event in the middle of winter will bring possums out in full force?
- What common Michigan animal has been dubbed "the most feared mammal on the North American continent"?
- Why would we be wise to shun the cute little mouse and welcome a big black snake?
- Why should you be very careful where you stack the firewood?

NATURE FROM YOUR BACK DOOR

MSU is an Affirmative-Action Equal Opportunity Institution. Cooperative Extension Service programs are open to all without regard to race, color, national origin, sex or handicap. Issued in furtherance of Cooperative Extension work in agriculture and home economics, acts of May 8 and June 30, 1914, in cooperation with the U.S. Department of Agriculture, Gail L. Imig, director, Cooperative Extension Service, Michigan State University, E. Lansing, MI 48824. Produced by Outreach Communications, MICHIGAN STATE UNIVERSITY. Extension Bulletin E-2323. ISBN 1-56525-000-1. ©1991 Cooperative Extension Service, Michigan State University. Illustrations ©1991 Brenda Shear.

NATURE from YOUR BACK DOOR, by Glenn R. Dudderar, Extension Wildlife Specialist, Michigan State University, and Leslie Johnson, Outreach Communications, Michigan State University. Illustrations by Brenda Shear. FIRST EDITION, AUTUMN 1991.

NATURE FROM YOUR BACK DOOR: INTRODUCTION

WHEN I CAME TO MICHIGAN IN THE MID-1970S, I WAS SURPRISED at the prevailing attitude that nature and wildlife were things to see and enjoy if you went "up north".

Apple Cider Jelly Excellent Cider Jelly Is Easily Made by Cooking Tart Apples in Hard Or Sweet Cider for 10 Minutes, Then Straining the Pulp Through Cheesecloth

Cider: MAKING, USING & ENJOYING SWEET & HARD CIDER, Third Edition, by ANNIE PROULX & LEW NICHOLS. DEDICATED TO CIDER APPLES AND AMATEUR CIDERMAKERS EVERYWHERE. The mission of Storey Publishing is to serve our customers by publishing practical information that encourages personal independence in harmony with the environment. Edited by Mary Grace Butler and Pamela Lappies. Cover design by Karen Schober, Unleashed Books. Cover illustration by Cyclone Design. Text design by Cindy McFarland. Text production by Eugenie Seidenberg Delaney. Line drawings on pages 5, 6, 17, 140, 141 (top drawing) by Beverly Duncan, and by Judy Elaison on page 141 (bottom). Indexed by Susan Olason, Indexes and Knowledge Maps. Professional assistance by John Vittori, Furnace Brook Winery. Third Edition © 2003 by Storey Publishing, LLC. Originally published in 1980 by Garden Way Publishing. All rights reserved. No part of this book may be reproduced without written permission from the publisher, except by a reviewer who may quote brief passages or reproduce illustrations in a review with appropriate credits; nor may any part of this book be reproduced, stored in a retrieval system, or transmitted in any form or by any means (electronic, mechanical, photocopying, recording, or other) without written permission from the publisher. The information in this book is true and complete to the best of our knowledge. All recommendations are made without guarantee on the part of the author or Storey Publishing. The author and publisher disclaim any liability in connection with the use of this information. For additional information please contact Storey Publishing, 210 MASS MoCA Way, North Adams, MA 01247.

01 Bull Henry Graves, Hogg Robert

https://pomiferous.com/applebystart/F?page=6 - 01 - Fruit variety list: Bull, Henry Graves; Hogg, Robert: "The Herefordshire Pomona – Apples and Pears" (London, Volume II, 1876-1885; descriptions and colour illustrations)

Apples (No. and variety name):

- 001 Alfriston
- 002 Allens Dauerapfel, Allens Everlasting
- 003 American Crab
- 004 Amerikanischer Melonenapfel, Melonapple
- 005 Annie Elizabeth
- 006 Aromatic Russet
- 007 Ashmeads Sämling, Ashmeads Kernel
- 008 Barchards Seedling
- 009 Baumanns Renette, Reinette Baumann
- 010 Belle Bonne
- 011 Benoni
- 012 Bess Pool
- 013 Betty Geeson
- 014 Bramleys Sämling, Bramleys Seedling
- 015 Bran Rose
- 016 Braunroter Winterkalvill, Calville Malingre
- 017 Breitling
- 018 Bringewood Pepping, Bringewood Pippin
- 019 Broad End
- 020 Brownlees’s graue Renette, Brownlees Russet
- 021 Carrion
- 022 Catshead
- 023 Cherry Apple, Siberian Crab
- 024 Cherry Pearmain
- 025 Gloucestershire
- 026 Cockpit
- 027 Coccagee
- 028 Coes rotgefleckter Apfel, Coes Golden Drop
- 029 College Apple
- 030 Cornish Aromatic
- 031 Cornwalliser Nelkenapfel, Cornish Gilliflower
- 032 Cowarne Queening
- 033 Cowarne Red
- 034 Crimson Crab
- 035 Crimson Queening, Herefordshire Queening
- 036 Crofton Scarlet, Scarlet Crofton
- 037 Cummy
- 038 Dauerapfel aus Hambledon, Hambledon Deux Ans
- 039 Dutchess Favorite
- 040 Early Harvest
- 041 Early Julien
- 042 Englisch Codlin, English Codlin
- 043 Englischer Goldpepping, Golden Pippin
- 044 Englischer Winterquittenapfel, Lemon Pippin
- 045 Fearns Pepping, Fearns Pippin
- 046 Forest Styre
- 047 Franklins Golden Pippin
- 048 French Codlin
- 049 Fruchtbarer aus Frogmore, Frogmore Prolific

The Woodcock Volume 4

The Woodcock, Volume 4, Fall 2019, Antioch Bird Club

Table of Contents

- Editor’s Note - A Call to Action
- ABC News
- Event Recaps
  - Coastal Trip
  - River Run Farm Bird Walk
  - Meet the Mountain Day
  - Birds on the Farm: Workshop & Guided Bird Walk
  - Game Night
  - Cemetery Prowl
  - Motus Winterizing
  - Bagels and Birds
  - Library Scavenger Hunt
- Hot Spot
- Bird of the Month
  - November: Red-Bellied Woodpecker
  - December: Pine Siskin
- Audubon’s Climate Change Actions
- The Monadnock Region Birding Cup
- Student Showcases
  - Raptors: A Lifelong Love (Eric Campisi)
  - Oscar the Barred Owlet (Jennie Healy)
  - Chasing Nighthawks (Kim Snyder)
- Upcoming Events
  - Keene & Brattleboro Christmas Bird Count
  - Coastal Field Trip: Cape Ann and Plum Island
- 2020 Global Big Year
- A New Silent Spring (Audrey Boraski)

*Cover page photo is by Greg & Company, Springbok

Editor’s Note - A Call to Action

In September of this year, the New York Times published a bombshell article based on a paper from ornithologists across North America called Decline of the North American Avifauna. Within this report was the realization that 1 in 4 birds, some 3 billion individuals, have been lost since 1970. The losses stretched across various families, from sparrows and warblers to blackbirds and finches, all common visitors to backyards here in New England. This kind of revelation can feel crushing, especially for something we see every day that is slowly fading from existence. Birds are being lost to multiple threats: pesticides, loss of habitat, window strikes... So what do we do? Something. Anything. Because if we do nothing, they will fade so gradually that we become numb to the loss.

CONTEXTS OF THE CADAVER TOMB IN FIFTEENTH CENTURY ENGLAND (2 Volumes), Volume I: Text

CONTEXTS OF THE CADAVER TOMB IN FIFTEENTH CENTURY ENGLAND (2 Volumes), Volume I: Text. PAMELA MARGARET KING, D.Phil., UNIVERSITY OF YORK, CENTRE FOR MEDIEVAL STUDIES, October 1987.

TABLE OF CONTENTS

Volume I

- Abstract (p. 1)
- List of Abbreviations (p. 2)
- Introduction (p. 3)
- I. The Cadaver Tomb in Fifteenth Century England: The Problem Stated (p. 7)
- II. The Cadaver Tomb in Fifteenth Century England: The Surviving Evidence (p. 57)
- III. The Cadaver Tomb in Fifteenth Century England: Theological and Literary Background (p. 152)
- IV. The Cadaver Tomb in England to 1460: The Clergy and the Laity (p. 198)
- V. The Cadaver Tomb in England 1460-1480: The Clergy and the Laity (p. 301)
- VI. The Cadaver Tomb in England 1480-1500: The Clergy and the Laity (p. 372)
- VII. The Cadaver Tomb in Late Medieval England: Problems of Interpretation (p. 427)
- Conclusion (p. 484)
- Appendix 1: Cadaver Tombs Elsewhere in the British Isles (p. 488)
- Appendix 2: The Identity of the Cadaver Tomb in York Minster (p. 494)
- Bibliography: i. Primary Sources: Unpublished (p. 499); ii. Primary Sources: Published (p. 501); iii. Secondary Sources (p. 506)

Volume II: Illustrations

TABLE OF ILLUSTRATIONS

Plates 2, 3, 6 and 23d are reproduced by permission of the National Monuments Record; Plates 28a and b and Plate 50, by permission of the British Library; Plates 51, 52, 53, a and b, by permission of Trinity College, Cambridge. Plate 54 is taken from a copy of an engraving in the possession of the office of the Clerk of Works at Salisbury Cathedral. I am grateful to Kate Harris for Plates 19 and 45, to Peter Fairweather for Plate 36a, to Judith Prendergast for Plate 46, to David O'Connor for Plate 49, and to the late John Denmead for Plate 37b.

The Adulteration of Food: Conferences by the Institute of Chemistry

International Health Exhibition, LONDON, 1884. ADULTERATION OF FOOD. CONFERENCES BY THE INSTITUTE OF CHEMISTRY ON MONDAY and TUESDAY, JULY 14th and 15th. FOOD ADULTERATION AND ANALYSIS. PRINTED AND PUBLISHED FOR THE Executive Council of the International Health Exhibition, and for the Council of the Society of Arts, BY WILLIAM CLOWES AND SONS, Limited, INTERNATIONAL HEALTH EXHIBITION, AND 13, CHARING CROSS, S.W. 1884. LONDON: PRINTED BY WILLIAM CLOWES AND SONS, STAMFORD STREET AND CHARING CROSS.

International Health Exhibition, LONDON, 1884. INSTITUTE OF CHEMISTRY. Conference on Monday, July 14, 1884. Professor W. Odling, M.A., F.R.S., President of the Institute, in the Chair.

The Chairman said the Executive Council of the Exhibition had invited the Institute of Chemistry to hold a conference on the very important subject of the adulteration of food, and the modes of analysing it. He hoped that, with a subject before them to which so much attention had been paid by so many eminently qualified men, a discussion of considerable interest must arise, and that some good, in the way of increased knowledge and increased agreement as to the points desirable to attain in future, must be arrived at.

FOOD ADULTERATION AND ANALYSIS. By Dr. JAMES BELL, F.R.S.

Adulteration, in its widest sense, may be described as the act of debasing articles for pecuniary profit by intentionally adding thereto an inferior substance, or by taking therefrom some valuable constituent; and it may also be said to include the falsification of inferior articles by im-

School, Administrator and Address Listing

ALBANY COUNTY

| District/School Name | Administrator | Address | City | State | Zip Code | Telephone |
|---|---|---|---|---|---|---|
| ALBANY CITY SD | Dr. Marguerite Vanden Wyngaard | Academy Park | Albany | NY | 12207 | (518)475-6010 |
| ALBANY HIGH SCHOOL | Ms. Cecily Wilson | 700 Washington Ave | Albany | NY | 12203 | (518)475-6200 |
| ALBANY SCHOOL OF HUMANITIES | Mr. C Fred Engelhardt | 108 Whitehall Rd | Albany | NY | 12209 | (518)462-7258 |
| ARBOR HILL ELEMENTARY SCHOOL | Ms. Rosalind Gaines-Harrell | 1 Arbor Dr | Albany | NY | 12207 | (518)475-6625 |
| DELAWARE COMMUNITY SCHOOL | Mr. Thomas Giglio | 43 Bertha St | Albany | NY | 12209 | (518)475-6750 |
| EAGLE POINT ELEMENTARY SCHOOL | Ms. Kendra Chaires | 1044 Western Ave | Albany | NY | 12203 | (518)475-6825 |
| GIFFEN MEMORIAL ELEMENTARY SCHOOL | Ms. Jasmine Brown | 274 S Pearl St | Albany | NY | 12202 | (518)475-6650 |
| MONTESSORI MAGNET SCHOOL | Mr. Ken Lein | 65 Tremont St | Albany | NY | 12206 | (518)475-6675 |
| MYERS MIDDLE SCHOOL | Ms. Kimberly Wilkins | 100 Elbel Ct | Albany | NY | 12209 | (518)475-6425 |
| NEW SCOTLAND ELEMENTARY SCHOOL | Mr. Gregory Jones | 369 New Scotland Ave | Albany | NY | 12208 | (518)475-6775 |
| NORTH ALBANY ACADEMY | Ms. Lesley Buff | 570 N Pearl St | Albany | NY | 12204 | (518)475-6800 |
| P J SCHUYLER ACHIEVEMENT ACADEMY | Ms. Jalinda Soto | 676 Clinton Ave | Albany | NY | 12206 | (518)475-6700 |
| PINE HILLS ELEMENTARY SCHOOL | Ms. Vibetta Sanders | 41 N Allen St | Albany | NY | 12203 | (518)475-6725 |
| SHERIDAN PREP ACADEMY | Ms. Zuleika Sanchez-Gayle | 400 Sheridan Ave | Albany | NY | 12206 | (518)475-6850 |
| THOMAS S O'BRIEN ACAD OF SCI & TECH | Mr. Timothy Fowler | 94 Delaware Ave | Albany | NY | 12202 | (518)475-6875 |
| WILLIAM S HACKETT MIDDLE SCHOOL | Mr. | | | | | |

The Apple in Oregon

6000. Bulletin No. 81, July 1904. Department of Horticulture, OREGON AGRICULTURAL EXPERIMENT STATION, CORVALLIS, OREGON. PYRUS RIVULARIS, OREGON CRAB APPLE. THE APPLE IN OREGON, BY E. R. LAKE. THE BULLETINS OF THIS STATION ARE SENT FREE TO ALL RESIDENTS OF OREGON WHO REQUEST THEM.

Board of Regents of the Oregon Agricultural College and Experiment Station

- Hon. J. K. Weatherford, President, Albany, Oregon.
- Hon. John D. Daly, Secretary, Portland, Oregon.
- Hon. B. F. Irvine, Treasurer, Corvallis, Oregon.
- Hon. Geo. E. Chamberlain, Governor, Salem, Oregon.
- Hon. F. I. Dunbar, Secretary of State, Salem, Oregon.
- Hon. J. H. Ackerman, State Supt. of Pub. Instruction, Salem, Oregon.
- Hon. B. G. Leedy, Master of State Grange, Tigardville, Oregon.
- Hon. William W. Cotton, Portland, Oregon.
- Hon. Jonas M. Church, La Grande, Oregon.
- Hon. J. D. Olwell, Central Point, Oregon.
- Hon. William li. Yates, Corvallis, Oregon.
- Hon. J. T. Apperson, Park Place, Oregon.
- Hon. W. P. Keady, Portland, Oregon.

OFFICERS OF THE STATION. STATION STAFF.

- Thos. M. Gatch, A.M., Ph.D., President.
- James Withycombe, M.Agr., Director and Agriculturist.
- A. L. Knisely, M.S., Chemist.
- A. B. Cordley, M.S., Entomologist.
- E. R. Lake, M.S., Horticulturist and Botanist.
- E. F. Pernot, Bacteriologist.
- George Coote, Florist.
- L. Kent, B.S., Dairying.
- C. M. McKellips, Ph.C., M.S., Chemistry.
- P. K. Edwards, B.M.K., Chemistry.
- H. O. Gibbs, B.S., Chemistry.

THE APPLE IN OREGON. PART I. Topics Discussed: Early History. Earliest Varieties. Later Plantings. The Problem of Planting: Site as to Soil. Site as to Aspect. Selection of Trees. Planting.

United States Patent 6,139,819, Unger et al.

US006139819A

(19) United States Patent, Unger et al.

- (11) Patent Number: 6,139,819
- (45) Date of Patent: *Oct. 31, 2000
- (54) TARGETED CONTRAST AGENTS FOR DIAGNOSTIC AND THERAPEUTIC USE
- (75) Inventors: Evan C. Unger; Thomas A. Fritz, both of Tucson; Edward W. Gertz, Paradise Valley, all of Ariz.
- (73) Assignee: Imarx Pharmaceutical Corp., Tucson, Ariz.
- (*) Notice: This patent issued on a continued prosecution application filed under 37 CFR 1.53(d), and is subject to the twenty year patent term provisions of 35 U.S.C. 154(a)(2).
- (21) Appl. No.: 08/932,273
- (22) Filed: Sep. 17, 1997
- Related U.S. Application Data: (63) Continuation-in-part of application No. 08/660,032, Jun. 6, 1996, abandoned, which is a continuation-in-part of application No.

References cited:

- U.S. patent documents: 4,342,826, 8/1982, Cole, 435/7 (list continued on next page)
- Foreign patent documents: 641363, 3/1990, Australia; 30351/89, 3/1993, Australia; 0 052 575, 5/1982, European Pat. Off.; 0 107 559, 5/1984, European Pat. Off.; 0 077 752 B1, 3/1986, European Pat. Off.; 0 243 947, 4/1987, European Pat. Off.; 0 231 091, 8/1987, European Pat. Off.; 0 272 091, 6/1988, European Pat. Off.; 0 320 433 A2, 12/1988, European Pat. Off.; 0 324 938, 7/1989, European Pat. Off.; 0 338 971, 10/1989, European Pat. Off.; 357163 A1, 3/1990, European Pat. Off.; 0 361 894, 4/1990, European Pat. Off.; 0 368 486 A2, 5/1990, European Pat. Off. (list continued on next page)
- Other publications: Desir et al., "Assessment of regional myocardial perfusion with myocardial contrast echocardiography in a canine

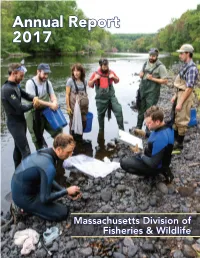

Annual Report 2017

Annual Report 2017, Massachusetts Division of Fisheries & Wildlife

- Jack Buckley, Director
- Susan Sacco, Assistant to the Director
- Mark S. Tisa, Ph.D., Deputy Director, Administration
- Michael Huguenin, Assistant Director, Field Operations
- Jim Burnham, Administrative Assistant to the Deputy Director, Administration
- Debbie McGrath, Administrative Assistant to the Deputy Director, Field Operations

Table of Contents

- The Board Reports (p. 2)
- Fisheries (p. 6)
- Wildlife (p. 43)
- Natural Heritage & Endangered Species Program (p. 67)
- Information & Education (p. 78)
- Hunter Education (p. 92)
- District Reports (p. 94)
- Wildlife Lands (p. 113)
- Federal Aid (p. 123)
- Personnel Report (p. 125)
- Financial Report (p. 128)
- Appendix A (p. 132)

About the Cover: Interagency Cooperation. MassWildlife Biologists and Environmental Analysts from MassDOT evaluate rare and common freshwater mussels prior to a bridge replacement project. Staff from both agencies relocated more than 1,000 mussels out of the construction area to avoid impacts to the resource. Through the Linking Landscapes partnership, both agencies work together to promote conservation of fish, wildlife, plants and the habitats they depend on. Photo by Troy Gipps/MassWildlife

Back Cover: A Monarch butterfly on Common Milkweed in Grafton. Photo © Troy Gipps

Printed on Recycled Paper.

The Board Reports

Joseph S. Larson, Ph.D., Chairperson

Overview

The Massachusetts Fisheries and Wildlife Board consists of seven persons appointed by the Governor to 5-year terms. By law, the individuals appointed to the Board are volunteers, receiving no remuneration for their service to […] ing chair until the Board held its elections and chose a permanent chair, which it did the following month. Over the course of the fiscal year, the Board examined the details of a Timber Rattlesnake Restoration Project that had been first introduced in January 2014.

TBI2016 Beermenu

12 Gates Doublegate, DIPA, 8.5% ABV, 78 IBUs

Doublegate has a clean malt body that lets the blend of Amarillo and Mosaic hops shine through, with flavors and aromas of berries, tropical citrus, hints of pine, and the complex signature of Mosaic hops that tends to change with each drinker's palate. All this, with the slight undertone of alcohol to be expected in a double IPA, makes this beer worthy of a second pour.

42 North Beer du Brut, Bière de Champagne, 15.5% ABV

42 North's take on a rarely brewed style originally produced, much like Champagne, in small parts of France and Belgium. Brewed with pilsner and wheat malt combined with a light touch of spices. Fermented warm with a Champagne yeast and highly carbonated to create a unique hybrid beer somewhere between a Belgian Tripel, cider, and white wine. Only 15 gallons made.

42 North Farmhouse Sour, American Sour, 4.6% ABV

This American sour ale is fermented entirely with a blended wild culture over 3 months and then aged a minimum of 1 additional month in the bottle for an extremely tart and complex but crisp experience. Intense yet balanced flavors of sour fruit, tart citrus, white grape, and white wine.

Big Ditch Chocolate Gose, Gose

Like chocolate-covered sourdough pretzels? Big Ditch started with a complex malt bill including wheat, oats, chocolate, debittered roasted malt, and caramel wheat. Next, the beer was kettle soured with a lactobacillus blend. We then added a touch of salt to finish the beer.

HARTLEY WINTNEY COMMUNITY ORCHARD ~ a Brief History ~

Published by Hartley Wintney Parish Council

Throughout horticultural and agricultural history there has been a place for the orchard. It is believed that apple trees were taken from the wild along the silk routes of China, Russia & Kazakhstan and replanted nearer settlements for domestic use as long ago as 6000 BC; Almaty in Kazakhstan means ‘the place of apples’. For the Greeks, the cultivation and enjoyment of fruit orchards became an essential part of daily life, and the Romans, who brought apples to England from other continents, introduced grafting techniques. In medieval times, ‘flowery meads’ (small meadows) and orchards played their part at a time when people felt the need to be ‘enclosed’ within their own territory; they were also a vital part of self-sufficient monastic life. Orchards survived through the long Renaissance period and continued through the Landscape movement, both times of great change in horticulture. In essence, the beauty and practical use of the orchard, as a source of food and timber or a shady place for contemplation, have preserved its existence. In the past forty years, however, two-thirds of the traditional orchards have disappeared.

The concept of a Community Orchard in Hartley Wintney was first mooted on May 21st 1994, following a Hampshire Wildlife Trust training day on the subject in Shedfield, but it was five years before an opportunity arose to pursue the idea. When, like many local authorities, Hartley Wintney Parish Council was seeking an original, worthwhile and sustainable way to commemorate the new Millennium, it chose to provide a Community Orchard in an attempt to recapture some of the orchard’s traditional functions.