Real-Time Embedded Operating Systems: Standards and Perspectives

Recommended publications

What We Know About Testing Embedded Software

Vahid Garousi, Hacettepe University and University of Luxembourg; Michael Felderer, University of Innsbruck; Çağrı Murat Karapıçak, KUASOFT A.Ş.; Uğur Yılmaz, ASELSAN A.Ş.

Abstract. Embedded systems have overwhelming penetration around the world. Innovations are increasingly triggered by software embedded in automotive, transportation, medical-equipment, communication, energy, and many other types of systems. To test embedded software in a cost-effective manner, a large number of test techniques, approaches, tools and frameworks have been proposed by both practitioners and researchers in the last several decades. However, reviewing and getting an overview of the entire state of the art and state of the practice in this area is challenging for a practitioner or a (new) researcher. Unfortunately, we also often see that some companies reinvent the wheel (by designing a test approach new to them, but existing in the domain) because they lack an adequate overview of what already exists in this area. To address this need, we conducted a systematic literature review (SLR) in the form of a systematic mapping (classification) in this area. After compiling an initial pool of 560 papers, a systematic voting was conducted among the authors, and our final pool included 272 technical papers. The review covers the types of testing topics studied, types of testing activity, types of test artifacts generated (e.g., test inputs or test code), and the types of industries on which studies have focused, e.g., automotive and home appliances. Our article aims to benefit the readers (both practitioners and researchers) by serving as an “index” to the vast body of knowledge in this important and fast-growing area.

Embedded Sensors System Applied to Wearable Motion Analysis in Sports

Aurélien Valade (1), Antony Costes (2), Anthony Bouillod (1, 3), Morgane Mangin (4), P. Acco (1), Georges Soto-Romero (1, 4), Jean-Yves Fourniols (1) and Frederic Grappe (3)

(1) LAAS-CNRS, N2IS, 7, Av. du Colonel Roche 31077, Toulouse, France
(2) University of Toulouse, UPS, PRiSSMH, Toulouse, France
(3) EA4660, C3S - Université de Franche Comté, 25000 Besançon, France
(4) ISIFC – Génie Biomédical - Université de Franche Comté, 23 Rue Alain Savary, 25000 Besançon, France

Keywords: IMU, FPGA, Motion Analysis, Sports, Wearable.

Abstract: This paper presents two different wearable motion capture systems for motion analysis in sports, based on inertial measurement units (IMU). One system, called centralized processing, is based on FPGA + microcontroller architecture while the other, called distributed processing, is based on multiple microcontrollers + wireless communication architecture. These architectures are designed to target multi-sports capabilities, beginning with tri-athlete equipment and thus have to be non-invasive and integrated in sportswear, be waterproofed and autonomous in energy. To characterize them, the systems are compared to lab quality references.

1 INTRODUCTION

Electronics in sports monitoring has been a growing field of studies for the last decade. From the heart rate monitors to the power meters, sportsmen ...

... electronics (smartphones, game controllers...), in an autonomous embedded system to monitor the sportive activity, even in field conditions. Our IMU based monitoring system allows ...

Embedded Systems Supporting by Different Operating Systems

A Survey: Embedded Systems Supporting By Different Operating Systems

Qamar Jabeen, Fazlullah Khan, Muhammad Tahir, Shahzad Khan, Syed Roohullah Jan, Department of Computer Science, Abdul Wali Khan University Mardan

Abstract: Embedded systems today play an important role in many fields and applications, such as networked embedded systems; real-time embedded systems, which support mission-critical domains and mostly have time constraints; and stand-alone systems, which include network routers. Processors are being widely deployed to meet the demanding needs of users. Large-scale deployment also occurs in sensor networks to provide advanced facilities. To handle such embedded systems, a specific operating system must be provided. This paper presents some software infrastructures that are able to support such types of embedded systems.

1. Introduction: Embedded systems are computer systems designed for a specific purpose, to increase functionality and reliability in achieving a specific task; unlike a general-purpose computer system, an embedded system is not used for multiple tasks.

... used in industrial and commercial system areas. For example, mobile phones and different types of network bridges are embedded systems used by telecommunication systems to better meet their users' requirements. Digital cameras, MP3 players, and DVD players are examples of embedded consumer electronics. In daily life they provide efficiency, flexibility, and many features, as in microwave ovens, washing machines, and dishwashers. Embedded systems are also used in the medical, transportation, and wireless sensor network areas, respectively in medical imaging and vital signs, automobiles and electric vehicles, and Wi-Fi modules.

Figure 1: Taxonomy of Embedded Software

New Approach for Testing and Providing Security Mechanism for Embedded Systems

Procedia Computer Science 78 (2016) 851–858. International Conference on Information Security & Privacy (ICISP2015), 11-12 December 2015, Nagpur, India.

Swapnili P. Karmore, Research Scholar, Department of Computer Science and Engineering, G. H. Raisoni College of Engineering, Nagpur, India; Anjali R. Mahajan, Head, Department of Information Technology, Government Polytechnic, Nagpur, India.

Abstract: Assuring the quality of embedded systems poses a big challenge for software testers around the globe. Testing of one embedded system differs widely from another. The approach presented in the paper is used for testing the safety-critical features of an embedded system. The inputs and outputs are trained, validated, and tested via an ANN. Security is provided by skipping invalid and critical classes and by embedding a secret key in the RAM of the embedded device. The work contributes a simulation environment in which the cost and time required for testing embedded systems are minimized, which removes a drawback of traditional approaches.

Keywords: Embedded system testing; Black box testing; Safety critical embedded systems; Artificial neural network.

1. Introduction: Testing is the most common process used to determine the quality of, and provide security for, embedded systems. In the embedded world, testing is an immense challenge. The test plan must reflect the distinguishing characteristics of embedded systems, as these are application specific and give each embedded system its exclusive and distinct flavor. Real-time systems have to meet the challenge of assuring the correct implementation of an application, which depends not only on its logical accuracy but also on its ability to meet timing constraints.

What Is Embedded Computing?

EMBEDDED COMPUTING

Wayne Wolf, Princeton University

Evolving from a craft to an engineering discipline over the past decade, embedded computing continues to mature.

For those who think a lot about embedded computing, as well as the uninitiated, it’s important to define exactly what the term means. In brief, an embedded system is any computer that is a component in a larger system and that relies on its own microprocessor.

But is embedded computing a field or just a fad? The purpose of this new bimonthly column is to give researchers as well as practitioners an opportunity to demonstrate that embedded computing is an established engineering discipline with principles and knowledge at its core.

A LONG HISTORY

People have been building embedded ...

... difficult to implement in mechanical controllers. Laser and inkjet printers also emerged in the 1980s. Print engines require computational support for both typesetting and real-time control. First, users generate characters and lines that a computation must convert into pixels.

... more stringent cost and power constraints. PDA design requires careful attention to both hardware and software. In the next decade, some microprocessors, largely invisible to users, will be used for signal processing and control, for example to enable home networking across noisy, low-quality media such as power lines. Others will be used to create advanced user interfaces, for example for the entire cluster of home entertainment devices. Microprocessors have also enabled new categories of portable devices that will assume roles and perform functions yet to be determined. The cell phone and PDA combinations that hit the market in 2001 are a ...

Embedded Hardware

Lecture #2: Embedded Hardware. 18-348 Embedded System Engineering, Philip Koopman, Friday, 15-Jan-2016. Electrical & Computer Engineering. © Copyright 2006-2016, Philip Koopman, All Rights Reserved

Announcements
Many posted materials are accessible only from a CMU IP address
• Look for this on course web page: If you can't access a file due to access restrictions, you need to get a campus IP address for your web browsing requests. Use Cisco VPN Anyconnect...
Course web page has schedules, assignments, other important info
• http://www.ece.cmu.ecu/~ece348
• Blackboard will have grades, announcements, sample tests
• Look at Blackboard announcements before sending e-mail to course staff
Lab board handouts in progress
• See Blackboard/admin page for TA office hours
• OK to go to any scheduled lab section (but give priority to scheduled students)
• For Friday prelab, give a good faith attempt to get things working by the deadline. If you hit a showstopper, get it fixed on Tuesday so you can do Prelab 2 on time.

Design Example: Rack-Mount Power Supply
Power supply for server
• AC to DC conversion (750-1000 W)
• Coordinates 2 redundant supplies to maximize uptime
• Safeguard against power problems (under/over-voltage; over-current; over-temp)
Typical approach:
• General microcontroller for AC, alarms, housekeeping
• DSP runs control loop on DC side at > 10 kHz to provide stable DC power
Key requirement: “Doesn’t emit smoke”
[Figure: Dell 870W Power Supply for PowerEdge R710 Server, http://accessories.us.dell.com]

Apple USB Power Adapter Teardown
iPhone

Embedded Smart Health Monitoring System-Wearable Devices

Volume 4, Issue 5, May – 2019. International Journal of Innovative Science and Research Technology. ISSN No:-2456-2165

A Sushmitha, Anusha N S, Anusha S Raj (Students, Dept of ECE, Dr. Ambedkar Institute of Technology, Bangalore, India) and Vidyashree. C (Assistant Professor, Dept of ECE, Dr. Ambedkar Institute of Technology, Bangalore, India)

Abstract:- This paper gives an insight into current trends and methodologies employed in wearable device technology. In the present era it is essential to design a cost-effective healthcare system, so that smart health systems can be made available to people all around the world. In this project we have developed a healthcare monitoring system which keeps track of a patient’s exercising postures, breathing patterns, and glucose level, along with the implementation of Peltier. With the advancement of technology and the usage of Cloud computing, it becomes easy to update these measured data. This automatic updating of data prevents the errors which can be caused by manually entering the data.

Keywords:- Cloud, Wearable Device, Embedded

The objective of developing monitoring systems is to reduce health care costs by reducing physician office visits, hospitalizations, and diagnostic testing procedures. Doctors can continuously monitor the health of their patients from any location.

II. OVERVIEW

Wearable health monitoring systems are beneficial to common people as well as to health care systems, in maintaining the patient's activeness or fitness level and also for self-health tracking. The usage of embedded systems along with recent technologies such as IoT and Cloud Computing in healthcare systems has widened the scope of assessing an individual patient's health conditions.

Prototyping Wearables: a Code-First Approach to the Design of Embedded Systems

W&M ScholarWorks, Arts & Sciences Articles, Arts and Sciences, 10-2016

Recommended Citation: Graham, Daniel and Zhou, Gang, Prototyping Wearables: A Code-First Approach to the Design of Embedded Systems (2016). 10.1109/JIOT.2016.2537148

IEEE Internet of Things Journal, vol. 3, no. 5, October 2016, p. 806

Daniel Graham and Gang Zhou, Senior Member, IEEE (College of William and Mary)

Abstract: As wearable devices become ubiquitous, there will be an increased demand for platforms that allow engineers and researchers to quickly prototype and evaluate new wearable devices. However, many of these platforms require that the hardware be configured before the code is written, thereby limiting the programmer to the limitations of the hardware. In this paper, we present a platform that allows researchers and engineers to quickly prototype new wearable devices using a code-first ...

... by analyzing the code they are required to run, thereby allowing software developers with limited hardware experience to develop their own prototypes. The second context is that of hardware/software co-design, where the experienced hardware engineer is interested in optimizing a hardware design utilizing information from the code that the platform is required to run.

Testing & Validation Strategy for Industrial

The need for a bespoke Testing and Validation strategy for Industrial Automation and Embedded Systems. www.happiestminds.com

Introduction

The last few years have seen tectonic shifts in technology, and part of that narrative has been the evolution of Industry 4.0 and the emergence of a software-driven approach. Traditionally, the bulk of investment in the industrial world would have been allocated to hardware; however, that is changing rapidly with the advent of advanced software platforms. In fact, industrial software is now witnessing a blending of Information Technology (IT) and Operational Technology (OT), leading to the creation of new platforms that combine data generated at the corporate level with data received from the field. The Industrial Automation segment has been bolstered by the rapid adoption of IoT, which has resulted in reduced costs, flatter and open AI architectures, tighter integration of IT and OT, and the ubiquity of edge devices and sensors.

As Industrial Automation continues to evolve, end users and IA suppliers are at a crossroads where they must walk the fine line between risk and reward. The risk is in being overly enthusiastic and adopting new technologies before they have been proven at scale. Alternatively, not adopting these technologies until the point of stability can result in other risk-savvy competitors forging ahead to snare a large portion of the market. A couple of years ago, we saw the emergence of open industrial system architectures with themes such as computing at the edge and multivendor interoperable open systems, to name a few. Today, prominent industry initiatives like Industry 4.0 and Open Process Automation Forums are leading the transformation.

A Multithreading Embedded Architecture

Proceedings of the 7th WSEAS International Conference on DATA NETWORKS, COMMUNICATIONS, COMPUTERS (DNCOCO '08)

Lan Dong, Xiufeng Sui, Computer Engineering Department, Beijing Jiaotong University, Shang yuan village No.3, Beijing, China

Abstract: More embedded microprocessors are emerging, and more multithreaded tasks need to be executed simultaneously on embedded devices. This paper proposes a multithreaded architecture for embedded processors. It improves system performance to a large degree while also reducing energy dissipation and improving cache utilization within a limited size.

Key-Words: multithreading technology, thread, cache, fetch policy, decoder, hybrid partition

1 Introduction

Embedded computers have grown into the fastest-growing part of the computer market. They are spread from cell phones and video games to most family machines. Embedded systems often deal with events under real-time constraints, so the performance of the embedded system must be considered with these changes. In the embedded world, power and size are the important constraints for embedded processors [1, 2]. The techniques to reduce system energy dissipation and improve resource utilization must be considered with these variations. A multithreading embedded architecture is ...

2.2 Architecture

The basis for this effort on introducing the multithreading embedded architecture is an ARM-derived instruction set superscalar processor [3, 4]. Only the changes necessary to enable simultaneous multithreading are added to the basic superscalar architecture. The additions necessary to support simultaneous multithreading are mainly a program counter and return stack for each thread, a threaded register bank, etc. In embedded systems, cache size is usually limited. It is important to redesign the cache partition mechanism to improve the hit rate in a multi-task environment with a fixed cache size.

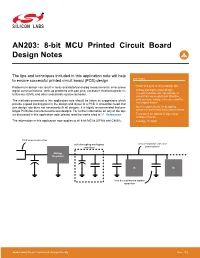

AN203: 8-Bit MCU Printed Circuit Board Design Notes

The tips and techniques included in this application note will help to ensure successful printed circuit board (PCB) design. Problems in design can result in noisy and distorted analog measurements, error-prone digital communications, latch-up problems with port pins, excessive electromagnetic interference (EMI), and other undesirable system behavior.

The methods presented in this application note should be taken as suggestions which provide a good starting point in the design and layout of a PCB. It should be noted that one design rule does not necessarily fit all designs. It is highly recommended that prototype PCBs be manufactured to test designs. For further information on any of the topics discussed in this application note, please read the works cited in 11. References.

The information in this application note applies to all 8-bit MCUs (EFM8 and C8051).

KEY POINTS
• Power and ground circuit design tips.
• Analog and digital signal design recommendations with special tips for traces that require particular attention, such as clock, voltage reference, and the reset signal traces.
• Special requirements for designing systems in electrically noisy environments.
• Techniques for optimal design using multilayer boards.
• A design checklist.

[Figure: PCB power connection, voltage regulator, bulk decoupling and bypass capacitors, circuit conductors (traces or power planes), and ICs with local decoupling and bypass capacitors]

silabs.com | Rev. 0.3

1. Power and Ground

All embedded system designs have a power supply and ground circuit loop that is shared by components on the PCB.

UNIT-I - OVERVIEW of EMBEDDED SYSTEMS Embedded System

Embedded System

An embedded system can be thought of as a computer hardware system having software embedded in it. An embedded system can be an independent system or it can be a part of a larger system. An embedded system is a microcontroller- or microprocessor-based system which is designed to perform a specific task. For example, a fire alarm is an embedded system; it senses only smoke.

An embedded system has three components −
It has hardware.
It has application software.
It has a Real-Time Operating System (RTOS) that supervises the application software and provides a mechanism to let the processor run a process according to a schedule, following a plan to control latencies. The RTOS defines the way the system works. It sets the rules during the execution of the application program. A small-scale embedded system may not have an RTOS.

So we can define an embedded system as a microcontroller-based, software-driven, reliable, real-time control system.

Characteristics of an Embedded System

Single-functioned − An embedded system usually performs a specialized operation and does the same repeatedly. For example, a pager always functions as a pager.

Tightly constrained − All computing systems have constraints on design metrics, but those on an embedded system can be especially tight. A design metric is a measure of an implementation's features, such as its cost, size, power, and performance. An embedded system must be of a size to fit on a single chip, must perform fast enough to process data in real time, and must consume minimum power to extend battery life.