Computer Performance Evaluation Users Group (CPEUG)

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Railroadc Lassi.Fication Yard Technology Manual

'PB82126806 1111111111111111111111111111111 u.s. Department of Transportation RailroadC lassi.fication Federal Railroad Administration Yard Technology Manual Office of Research and Development Volume II: Yard Computer Washington, D.C. 20590 Systems . " FRA/ORD-81/20.11 Neal P. Savage Document is available to August 1981 Paul L. Tuan the U.S. public through Final Report Linda C. Gill the National Technical Hazel T. Ellis Information Service, Peter J. Wong Springfield, Virginia 22161 ------, • R£PROOUC£D BY i I, NATIONAL TECHNICAL ! I INFORMATION SERVICE 1 : u.s. D£PAIITM£HT OF COMM£RC£ ISPRIIIGfIUD. VA 22161 . -'-' ~-..:...--.----.~ ------- NOTICE This document is disseminated under the sponsorship of the U.S. Department of Transportation in the interest of informa· tion exchange. The United States Govern ment assumes no liability for the contents or use thereof. NOTICE The United States Government does not endorse products or manufacturers. Trade or manufacturers' names appear herein solely because they are considered essential to the object of this report. Technical Report Doculllentatlon Pale 3. R.clplent·. Catalog No. FRA/ORD-8l/20.II 5. Repor' O.te August 1981 Railroad Classification Yard Technology Hanual- Volume II: Yard Computer Systems 1--::--_~:-:-_________________________-18. Perfor",!ng O'gonilOtion Rep.r, No. 7. Author'.) N. P. Savage, P. L. Tuan, L. C. Gill, SRI Project 6364 H. T. Ellis, P. J. Wong 9. Pe,fo""lng O,,,.,llolion Nome and Addre .. 10. Work Unit No .. (TRAIS) SRI International * 333 kavenswood Avenue 11. C.ntract or Gr.nt No. Henle Park, CA 94025 DOT-TSC-1337 13. Type.f Report .nd Peri.d Co"e,ed ~------------------~--------------------------~12. -

MTS on Wikipedia Snapshot Taken 9 January 2011

MTS on Wikipedia Snapshot taken 9 January 2011 PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sun, 09 Jan 2011 13:08:01 UTC Contents Articles Michigan Terminal System 1 MTS system architecture 17 IBM System/360 Model 67 40 MAD programming language 46 UBC PLUS 55 Micro DBMS 57 Bruce Arden 58 Bernard Galler 59 TSS/360 60 References Article Sources and Contributors 64 Image Sources, Licenses and Contributors 65 Article Licenses License 66 Michigan Terminal System 1 Michigan Terminal System The MTS welcome screen as seen through a 3270 terminal emulator. Company / developer University of Michigan and 7 other universities in the U.S., Canada, and the UK Programmed in various languages, mostly 360/370 Assembler Working state Historic Initial release 1967 Latest stable release 6.0 / 1988 (final) Available language(s) English Available programming Assembler, FORTRAN, PL/I, PLUS, ALGOL W, Pascal, C, LISP, SNOBOL4, COBOL, PL360, languages(s) MAD/I, GOM (Good Old Mad), APL, and many more Supported platforms IBM S/360-67, IBM S/370 and successors History of IBM mainframe operating systems On early mainframe computers: • GM OS & GM-NAA I/O 1955 • BESYS 1957 • UMES 1958 • SOS 1959 • IBSYS 1960 • CTSS 1961 On S/360 and successors: • BOS/360 1965 • TOS/360 1965 • TSS/360 1967 • MTS 1967 • ORVYL 1967 • MUSIC 1972 • MUSIC/SP 1985 • DOS/360 and successors 1966 • DOS/VS 1972 • DOS/VSE 1980s • VSE/SP late 1980s • VSE/ESA 1991 • z/VSE 2005 Michigan Terminal System 2 • OS/360 and successors -

MTS Volume 1, for Example, Introduces the User to MTS and Describes in General the MTS Operating System, While MTS Volume 10 Deals Exclusively with BASIC

M T S The Michigan Terminal System The Michigan Terminal System Volume 1 Reference R1001 November 1991 University of Michigan Information Technology Division Consulting and Support Services DISCLAIMER The MTS manuals are intended to represent the current state of the Michigan Terminal System (MTS), but because the system is constantly being developed, extended, and refined, sections of this volume will become obsolete. The user should refer to the Information Technology Digest and other ITD documentation for the latest information about changes to MTS. Copyright 1991 by the Regents of the University of Michigan. Copying is permitted for nonprofit, educational use provided that (1) each reproduction is done without alteration and (2) the volume reference and date of publication are included. 2 CONTENTS Preface ........................................................................................................................................................ 9 Preface to Volume 1 .................................................................................................................................. 11 A Brief Overview of MTS .......................................................................................................................... 13 History .................................................................................................................................................. 13 Access to the System ........................................................................................................................... -

Sperry Univac 1100/60 System

70C-877-12a Computers Sperry Univac 1100/60 System MANAGEMENT SUMMARY The 1100/60 is a family of medium- to large scale computer systems that feature a The Sperry Univac 1100/60 System repre~ents th.e small.est multiple-microprocessor implementation of member of the currently active 1100 Senes famlly, whlch the 1100 Series architecture. Both uni also includes the lIOO/SO (report 70C-S77-14) and the processor and multiprocessor configurations 1100/90 (Report 70C-S77-16). are available. The 1100/60 System was the first mainframe. to ma~e use of multi-microprocessor architecture. The anthmetIc and MODELS: 1100/61 B1, C1, C2, E1, E2, H1, and H2; 1100/62 E1 MP, E2MP, H1 MP, and logic portions of the 1100/60 employ sets of nine ~otorola IOSOO microprocessors (4-bit slice) combined WIth ECL H2MP; 1100/63 H1MP and H2MP; and circuitry and multilayer packaging. Sperry Univac terms 1100/64H1MP and H2MP. CONFIGURATION: From 1 to 4 CPUs, these sets microexecution units, which concurrently 512K to 8192K words of main memory, execute parts of the same microinstructions for improved throughput. 1 to 4 IOUs, and 1 to 7 consoles. COMPETITION: Burroughs B 5900 and B 6900, Control Data Cyber 170 Series, A fundamental consideration in the 1100/60 system design Digital Equipment DECsystem 10, Honey was the provision of high availability, reliability, and maintainability (ARM). Sperry Univac has implemented well DPS8, and IBM 303X, 4331, and ARM through such techniques as duplicate micro 4341. execution units, and duplicates of the shifter, logic function, PRICE: Purchase prices for basic Proc and control store address generator. -

CS 1550: Introduction to Operating Systems

Operating Systems Lecture1 - Introduction Golestan University Hossein Momeni [email protected] Outline What is an operating system? Operating systems history Operating system concepts System calls Operating system structure User interface to the operating system Anatomy of a system call 2 Operating Systems Course- By: Hossein Momeni Samples of Operating Systems IBSYS (IBM 7090) MACH OS/360 (IBM 360) Apollo DOMAIN TSS/360 (360 mod 67) Unix (System V & BSD) Michigan Terminal System Apple Mac (v. 1– v. 9) CP/CMS & VM 370 MS-DOS MULTICS (GE 645) Windows NT, 2000, XP, 7 Alto (Xerox PARC) Novell Netware Pilot (Xerox STAR) CP/M Linux IRIX FreeBSD Solaris PalmOS MVS PocketPC VxWorks VxWorks 3 Operating Systems Course- By: Hossein Momeni Samples of Operating Systems (continue…) 4 Operating Systems Course- By: Hossein Momeni What is an Operating System? An Operating System is a program that acts as an intermediary/interface between a user of a computer and the computer hardware. It is an extended machine Hides the messy details which must be performed Presents user with a virtual machine, easier to use It is a resource manager Each program gets time with the resource Each program gets space on the resource 5 Operating Systems Course- By: Hossein Momeni Static View of System Components User 1 User 2 User 3 User 4 … User n Compiler Editor Database Calculator WP Operating System Hardware 6 Operating Systems Course- By: Hossein Momeni Dynamic View of System Components 7 Operating Systems Course- By: Hossein Momeni -

Computer Performance Evaluation Users Group

I ICOMPUTER SCIENCE & TECHNOLOGY: COMPUTER PERFORMANCE EVALUATION USERS GROUP CPEUG 16th Meeting »' >' c. NBS Special Publication 500-65 U.S. DEPARTMENT OF COMMERCE 500-65 National Bureau of Standards NATIONAL BOmm OF SlAMOAl^DS The National Bureau of Standards' was eiiaDlished by an act o!' Congress on March 3, 1901. The Bureau's overall goal is to strengthen and advance the Nation's science and technology and facilitate their effective application for public benefit. To this end, the Bureau conducts research and provides: (!) a basis for the Nation's physical measurement system, (2) scientific and technological services lor industry and government, (3) a technical basis for equity in trade, and (4) technical services to promote public safety. The Bureau's technical work is per- formed by the National Measurement Laboratory, the National Engineering Laboratory, and the Institute for Computer Sciences and Technology. THE NATIONAL MEASUREMENT LABORATORY provides the national system of physical and chemical and materials measurement; coordinates the system with measurement systems of other nations and furnishes essential services leading to accurate and uniform physical and chemical measurement throughout the Nation's scientific community, industry, and commerce; conducts materials research leading to improved methods of measurement, standards, and data on the properties of materials needed by industry, commerce, educational institutions, and Government; provides advisory and research services to other Government agencies; develops, produces, -

History and Evolution of IBM Mainframes Over the 60 Years Of

History and Evolution of IBM Mainframes over SHARE’s 60 Years Jim Elliott – z Systems Consultant, zChampion, Linux Ambassador IBM Systems, IBM Canada ltd. 1 2015-08-12 © 2015 IBM Corporation Reports of the death of the mainframe were premature . “I predict that the last mainframe will be unplugged on March 15, 1996.” – Stewart Alsop, March 1991 . “It’s clear that corporate customers still like to have centrally controlled, very predictable, reliable computing systems – exactly the kind of systems that IBM specializes in.” – Stewart Alsop, February 2002 Source: IBM Annual Report 2001 2 2015-08-12 History and Evolution of IBM Mainframes over SHARE's 60 Years © 2015 IBM Corporation In the Beginning The First Two Generations © 2015 IBM Corporation Well, maybe a little before … . The Computing-Tabulating-Recording Company in 1911 – Tabulating Machine Company – International Time Recording Company – Computing Scale Company of America – Bundy Manufacturing Company . Tom Watson, Sr. joined in 1915 . International Business Machines – 1917 – International Business Machines Co. Limited in Toronto, Canada – 1924 – International Business Machines Corporation in NY, NY Source: IBM Archives 5 2015-08-12 History and Evolution of IBM Mainframes over SHARE's 60 Years © 2015 IBM Corporation The family tree – 1952 to 1964 . Plotting the family tree of IBM’s “mainframe” computers might not be as complicated or vast a task as charting the multi-century evolution of families but it nevertheless requires far more than a simple linear diagram . Back around 1964, in what were still the formative years of computers, an IBM artist attempted to draw such a chart, beginning with the IBM 701 of 1952 and its follow-ons, for just a 12-year period . -

CS-3013 — Operating Systems

Worcester PolytechnicCarnegie Institute Mellon CS-3013 — Operating Systems Professor Hugh C. Lauer CS-3013 — Operating Systems Slides include copyright materials Modern Operating Systems, 3rd ed., by Andrew Tanenbaum and from Operating System Concepts, 7th and 8th ed., by Silbershatz, Galvin, & Gagne CS-3013, C-Term 2014 Introduction 1 Worcester PolytechnicCarnegie Institute Mellon Outline for Today Details and logistics of this course Discussion . What is an Operating System? . What every student should know about them Project Assignment . Virtual Machines Introduction to Concurrency CS-3013, C-Term 2014 Introduction 2 Worcester PolytechnicCarnegie Institute Mellon This Course Two 2-hour classes per week . 8:00 – 10:00 AM, Tuesdays and Fridays . January 17 – March 4, 2014 Very similar to first half of CS-502 . First graduate course in Operating Systems Concentrated reading and project work CS-3013, C-Term 2014 Introduction 3 Worcester PolytechnicCarnegie Institute Mellon Concentrated reading and project work A number of students report that this is their hardest CS course at WPI (so far). The programming is demanding (even though not many lines of code) . C language is unforgiving (costing you many hours of frustration) . If you wait till a day or two before an assignment is due, you have very little chance to complete it CS-3013, C-Term 2014 Introduction 4 Worcester PolytechnicCarnegie Institute Mellon This Course Two 2-hour classes per week . 8:00 – 10:00 AM, Tuesdays and Fridays . January 17 – March 4, 2014 Very similar to first half of CS-502 . First graduate course in Operating Systems Concentrated reading and project work Course web site:– . -

Celebrating 50 Years of Campus-Wide Computing

Celebrating 50 Years of Campus-wide Computing The IBM System/360-67 and the Michigan Terminal System 13:30–17:00 Thursday 13 June 2019 Event Space, Urban Sciences Building, Newcastle Helix Jointly organised by the School of Computing and the IT Service (NUIT) School of Computing and IT Service The History of Computing at Newcastle University Thursday 13th June 2019, 13:30 Event Space, Urban Sciences Building, Newcastle Helix, NE4 5TG Souvenir Collection Celebrating 50 Years of Campus-wide Computing – 2019 NUMAC and the IBM System/360-67 – 2019 NUMAC Inauguration – 1968 IBM System/360 Model 67 (Wikipedia) The Michigan Terminal System (Wikipedia) Program and Addressing Structure in a Time-Sharing Environment et al – 1966 Virtual Memory (Wikipedia) Extracts from the Directors’ Reports – 1965-1989 The Personal Computer Revolution – 2019 Roger Broughton – 2017 Newcastle University Celebrating 50 Years of Campus-wide Computing The IBM System/360-67 and the Michigan Terminal System 13:30–17:00 Thursday 13 June 2019 Event Space, Urban Sciences Building, Newcastle Helix Jointly organised by the School of Computing, and the IT Service Two of the most significant developments in the 65 year history of computing at Newcastle University were the acquisition of a giant IBM System/360-67 mainframe computer in 1967, and subsequently the adoption of the Michigan Terminal System. MTS was the operating system which enabled the full potential of the Model 67 – a variant member of the System/360 Series, the first to be equipped with the sort of memory paging facilities that years later were incorporated in all IBM’s computers – to be realized. -

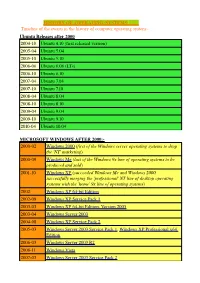

HISTORY of OPERATING SYSTEMS Timeline of The

HISTORY OF OPERATING SYSTEMS Timeline of the events in the history of computer operating system:- Ubuntu Releases after 2000 2004-10 Ubuntu 4.10 (first released version) 2005-04 Ubuntu 5.04 2005-10 Ubuntu 5.10 2006-06 Ubuntu 6.06 (LTs) 2006-10 Ubuntu 6.10 2007-04 Ubuntu 7.04 2007-10 Ubuntu 7.10 2008-04 Ubuntu 8.04 2008-10 Ubuntu 8.10 2009-04 Ubuntu 9.04 2009-10 Ubuntu 9.10 2010-04 Ubuntu 10.04 MICROSOFT WINDOWS AFTER 2000:- 2000-02 Windows 2000 (first of the Windows server operating systems to drop the ©NT© marketing) 2000-09 Windows Me (last of the Windows 9x line of operating systems to be produced and sold) 2001-10 Windows XP (succeeded Windows Me and Windows 2000, successfully merging the ©professional© NT line of desktop operating systems with the ©home© 9x line of operating systems) 2002 Windows XP 64-bit Edition 2002-09 Windows XP Service Pack 1 2003-03 Windows XP 64-bit Edition, Version 2003 2003-04 Windows Server 2003 2004-08 Windows XP Service Pack 2 2005-03 Windows Server 2003 Service Pack 1, Windows XP Professional x64 Edition 2006-03 Windows Server 2003 R2 2006-11 Windows Vista 2007-03 Windows Server 2003 Service Pack 2 2007-11 Windows Home Server 2008-02 Windows Vista Service Pack 1, Windows Server 2008 2008-04 Windows XP Service Pack 3 2009-05 Windows Vista Service Pack 2 2009-10 Windows 7(22 occtober 2009), Windows Server 2008 R2 EVENT IN HISTORY OF OS SINCE 1954:- 1950s 1954 MIT©s operating system made for UNIVAC 1103 1955 General Motors Operating System made for IBM 701 1956 GM-NAA I/O for IBM 704, based on General Motors -

Software Exchange Directory for University Research Administration 05753

#f©r©nct NBS Pubii - cations NBS TECHNICAL NOTE 916 °*l-AU Of NATIONAL BUREAU OF STANDARDS 1 The National Bureau of Standards was established by an act of Congress March 3, 1901. The Bureau's overall goal is to strengthen and advance the Nation's science and technology and facilitate their effective application for public benefit. To this end, the Bureau conducts research and provides: (1) a basis for the Nation's physical measurement system, (2) scientific and technological services for industry and government, (3) a technical basis for equity in trade, and (4) technical services to promote public safety. The Bureau consists of the Institute for Basic Standards, the Institute for Materials Research, the Institute for Applied Technology, the Institute for Computer Sciences and Technology, and the Office for Information Programs. THE INSTITUTE FOR BASIC STANDARDS provides the central basis within the United States of a complete and consistent system of physical measurement; coordinates that system with measurement systems of other nations; and furnishes essential services leading to accurate and uniform physical measurements throughout the Nation's scientific community, industry, and commerce. The Institute consists of the Office of Measurement Services, the Office of Radiation Measurement and the following Center and divisions: Applied Mathematics — Electricity — Mechanics — Heat — Optical Physics — Center for Radiation Research: Nuclear Sciences; Applied Radiation — Laboratory Astrophysics 2 — Cryogenics 2 — Electromagnetics 2 — Time and Frequency ". THE INSTITUTE FOR MATERIALS RESEARCH conducts materials research leading to improved methods of measurement, standards, and data on the properties of well-characterized materials needed by industry, commerce, educational institutions, and Government; provides advisory and research services to other Government agencies; and develops, produces, and distributes standard reference materials. -

Functional.Description Manual

CRAY Y-MP C90 Functional.Description Manual HR-04028 Cray Research, Inc. CRAY Y-MP C90 Functional Description Manual HR-04028 Cray Research, Inc. Copyright © 1992 by Cray Research, Inc. This manual or parts thereof may not be reproduced in any form unless permitted by contract or by written permission of Cray Research, Inc. Autotasking, CRAY, CRAY-1, Cray Ada, CRAYY-MP, HSX, SSD, UniChem, UNICOS, and X-MP EA are federally registered trademarks and CCI, CF77, CFT, CFT2, CFT77, COS, CRAY X-MP, CRAY XMS, CRAY-2, Cray/REELlibrarian, CRlnform, CRI/TurboKiva, CSIM, CVT, Delivering the power ..., Docview, lOS, MPGS, OLNET, RQS, SEGLDR, SMARTE, SUPERCLUSTER, SUPERLINK, Trusted UNICOS, Y-MP, and Y-MP C90 are trademarks of Cray Research, Inc. AEGIS and Apollo are trademarks of Apollo Computer Inc. Amdahl is a trademark of Amdahl Corporation. AOS is a trademark of Data General Corporation. Apollo and Domain are trademarks of Apollo Computer Inc. CDC is a trademark and NOS, NOS/BE, and NOSNE are products of Control Data Corporation. DEC, DECnet, PDP, VAX, VAXcluster, and VMS are trademarks of Digital Equipment Corporation. ECLIPSE is a trademark of Data General Corporation. Ethernet is a trademark of Xerox Corporation. Fluorinert Liquid is a trademark of 3M. Honeywell is a trademark of Honeywell, Inc. HYPERchannel and NSC are trademarks of Network Systems Corporation. IBM is a trademark and SNA is a product of International Business Machines Corporation. LANlord is a trademark of Computer Network Technology Corporation. Delta Series is a trademark of Motorola, Inc. Siemens is a trademark of Siemens Aktiengesellschaft of Berlin and Munich, Germany.