Yūbinkyoku, a Photorealistic Path Tracer: CS488 A5 Project Report

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications

-

Green Belt Slowly Coming Together

Kemano, Kemano, Kemano She loved the outdoors 1 I Bountiful harvest Reaction to the death of the ~ Vicki Kryklywyj, the heart and soul / /Northern B.C, Winter Games project keeps rolling in by,fax, mail of local hikers, is / / athletes cleaned up in Williams,, and personal delivery/NEWS A5 : remembered/COMMUNITY B1 j /Lake this year/SPORTS Cl I • i! ':i % WEDNESDAY FEBROARY 151 1995 I'ANDAR. /iiii ¸ :¸!ilii if!// 0 re n d a t i p of u n kn own ice b e rg" LIKE ALL deals in the world of tion Corp. effective April 30, the That title is still held by original "They've phoned us and have and the majority of its services, lulose for its Prince Rupert pulp high finance, the proposed amal- transfer of Orenda's forest licorice owners Avenor Inc. of Montreal requested a meeting but there's induding steam and effluent mill for the next two years. gamation of Orenda Forest Pro- must be approved by the provin- and a group of American newspa- been no confirmation of a time treaUnent, are tied to the latter. Even should all of the Orenda duels with a mostly American cial government. per companies. for that meeting," said Archer Avenor also owns and controls pulp fibre end up at Gold River, it company is more complicated There's growing opposition to The partuership built the mill in official Norman Lord from docking facilities and a chipper at still will fall short of the 5130,000 than it first seems. the move to take wood from the the late 1980s but dosed it in the Montreal last week. -

Catalog INTERNATIONAL

اﻟﻤﺆﺗﻤﺮ اﻟﻌﺎﻟﻤﻲ اﻟﻌﺸﺮون ﻟﺪﻋﻢ اﻻﺑﺘﻜﺎر ﻓﻲ ﻣﺠﺎل اﻟﻔﻨﻮن واﻟﺘﻜﻨﻮﻟﻮﺟﻴﺎ The 20th International Symposium on Electronic Art Ras al-Khaimah 25.7833° North 55.9500° East Umm al-Quwain 25.9864° North 55.9400° East Ajman 25.4167° North 55.5000° East Sharjah 25.4333 ° North 55.3833 ° East Fujairah 25.2667° North 56.3333° East Dubai 24.9500° North 55.3333° East Abu Dhabi 24.4667° North 54.3667° East SE ISEA2014 Catalog INTERNATIONAL Under the Patronage of H.E. Sheikha Lubna Bint Khalid Al Qasimi Minister of International Cooperation and Development, President of Zayed University 30 October — 8 November, 2014 SE INTERNATIONAL ISEA2014, where Art, Science, and Technology Come Together vi Richard Wheeler - Dubai On land and in the sea, our forefathers lived and survived in this environment. They were able to do so only because they recognized the need to conserve it, to take from it only what they needed to live, and to preserve it for succeeding generations. Late Sheikh Zayed bin Sultan Al Nahyan viii ZAYED UNIVERSITY Ed unt optur, tet pla dessi dis molore optatiist vendae pro eaqui que doluptae. Num am dis magnimus deliti od estem quam qui si di re aut qui offic tem facca- tiur alicatia veliqui conet labo. Andae expeliam ima doluptatem. Estis sandaepti dolor a quodite mporempe doluptatus. Ustiis et ium haritatur ad quaectaes autemoluptas reiundae endae explaboriae at. Simenis elliquide repe nestotae sincipitat etur sum niminctur molupta tisimpor mossusa piendem ulparch illupicat fugiaep edipsam, conecum eos dio corese- qui sitat et, autatum enimolu ptatur aut autenecus eaqui aut volupiet quas quid qui sandaeptatem sum il in cum sitam re dolupti onsent raeceperion re dolorum inis si consequ assequi quiatur sa nos natat etusam fuga. -

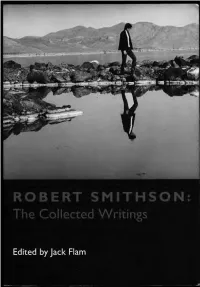

Collected Writings

THE DOCUMENTS O F TWENTIETH CENTURY ART General Editor, Jack Flam Founding Editor, Robert Motherwell Other titl es in the series available from University of California Press: Flight Out of Tillie: A Dada Diary by Hugo Ball John Elderfield Art as Art: The Selected Writings of Ad Reinhardt Barbara Rose Memo irs of a Dada Dnnnmer by Richard Huelsenbeck Hans J. Kl ein sc hmidt German Expressionism: Dowments jro111 the End of th e Wilhelmine Empire to th e Rise of National Socialis111 Rose-Carol Washton Long Matisse on Art, Revised Edition Jack Flam Pop Art: A Critical History Steven Henry Madoff Co llected Writings of Robert Mothen/le/1 Stephanie Terenzio Conversations with Cezanne Michael Doran ROBERT SMITHSON: THE COLLECTED WRITINGS EDITED BY JACK FLAM UNIVERSITY OF CALIFORNIA PRESS Berkeley Los Angeles Londo n University of Cali fornia Press Berkeley and Los Angeles, California University of California Press, Ltd. London, England © 1996 by the Estate of Robert Smithson Introduction © 1996 by Jack Flam Library of Congress Cataloging-in-Publication Data Smithson, Robert. Robert Smithson, the collected writings I edited, with an Introduction by Jack Flam. p. em.- (The documents of twentieth century art) Originally published: The writings of Robert Smithson. New York: New York University Press, 1979. Includes bibliographical references and index. ISBN 0-520-20385-2 (pbk.: alk. paper) r. Art. I. Title. II. Series. N7445.2.S62A3 5 1996 700-dc20 95-34773 C IP Printed in the United States of Am erica o8 07 o6 9 8 7 6 T he paper used in this publication meets the minimum requirements of ANSII NISO Z39·48-1992 (R 1997) (Per111anmce of Paper) . -

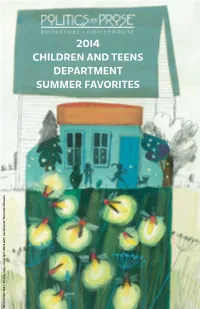

2014 Children and Teens Department Summer Favorites

2014 CHILDREN AND TEENS DEPARTMENT SUMMER FAVORITES , copyright 2014 with permission from Candlewick from with permission 2014 , copyright Firefly July Firefly Illustration from from Illustration 1 PICTURE BOOKS Never turn the page too quickly. Never assume you know what happens next. These are the rules for reading Rules of Summer (Arthur A. Levine, $18.99). In this tale of two brothers’ summertime adventures and mistakes, Shaun Tan creates a world in which meteors are caught like fireflies, robots populate parades, Kazuno Kohara animates the sleeping hours in and a giant rabbit menaces those who leave The Midnight Library (Roaring Brook, $16.99), red socks on the clothesline. Tan’s sweeping a charming tale of the nocturnal life of a library surreal paintings pair with sparse text to create and the librarian who shepherds its animal a world of commonplace marvels that invite patrons. Characters will inspire giggles in young the full participation of the reader. His work readers and ring true to daytime librarians: never confines a reader to a single, easily the loud squirrel musicians, the tortoise who interpretable narrative. Its appeal is part wonder, has plodded only halfway through his tome by part adventure, and part lurking menace. Wildly closing (sunrise). The linocut illustrations’ bold inventive and richly layered, this meditation outlines and minimal color palette add a layer on friendship, childhood, and imagination will of nighttime fantasy and heighten the book’s engage a wide range of readers. The book’s an magic. A sweet homage to librarians, the book adventure in itself, the kind to which one returns also works as a bedtime read, closing with a again and again. -

Newsletter Fall2006 Gen.Pmd

PIMS Magazine Vol. 10 Fall 2006 Alberta Government contributes $1.147- million to PIMS for 2006-2009 The Pacific Institute for the Mathematical Sciences (PIMS) has received a renewal of support from the Alberta Innovation and Science (AIS) for PIMS mathematical activities in Alberta. PIMS will receive $1.147-million over three years from the Alberta government agency. AIS focused on PIMS’ established record in mathematical research and education in Western Canada as the reason to renew and increase funding to $1.147-million between 2006 and 2009. With the initial AIS funding in 2004, PIMS developed activities towards mathematical education and outreach in Alberta, demonstrating PIMS’ dedication to developing inclusive programmes for Alberta’s mathematical commu- nity. With the new funding, PIMS plans to increase its commitment to education and outreach in Alberta, and will participate in the AIS’ three major research priorities: life sciences, energy research, and information and communication technologies. Interview with Ingrid Institutional Autonomy mittee and the SAB. The requirement to have a Canadian and American organizer for each five- Daubechies Consecrates BIRS’s day workshop has been removed, and the The Princeton pro- Role as World competition is now open to all. fessor speaks with Resource The new organizational structure of BIRS PIMS about math- ensures a transparent and uniform governance The Banff International ematics, research in structure that will enable BIRS to attract the industry, and being a Research Station (BIRS) — best proposals from all over the world. PIMS, woman in a male- which was launched in September, 2001, and dominated field. MSRI and MITACS congratulate BIRS on its inaugurated in March, 2003 — was the Interview on Page renewal and on the new era of scientific excel- fulfillment of a remarkable international effort 15 lence upon which it is about to embark. -

W E S T C O a S T a R T S a N D C U L T U

free SWEST COAST ARTS AND CULTUREFAQ Jay DeFeo Jens Hoffmann Kathan Brown Bay Area Latino Arts Part 1: Enrique Chagoya - MENA Report Part 1: Istanbul Biennial with Jens Hoffmann - Scales Fall From My Eyes: Bay Area Beat Generation Visual Art as told to Paul Karlstrom - Peter Selz - Crown Point Press: Kathan Brown SFAQ Artist Spread - Paulson Bott Press - Collectors Corner: Peter Kirkeby Flop Box Zine Reviews - Bay Area Event Calendar: August, September, October West Coast Residency Listing SAN FRANCISCO ARTS QUARTERLY ISSUE.6 ROBERT BECHTLE A NEW SOFT GROUND ETCHING Brochure available Three Houses on Pennsylvania Avenue, 2011. 30½ x 39", edition 40. CROWN POINT PRESS 20 Hawthorne Street San Francisco, CA 94105 www.crownpoint.com 415.974.6273 3IGNUPFOROURE NEWSLETTERATWWWFLAXARTCOM ,IKEUSON&ACEBOOK &OLLOWUSON4WITTER 3IGNUPFOROURE NEWSLETTERATWWWFLAXARTCOM ,IKEUSON&ACEBOOK &OLLOWUSON4WITTER berman_sf_quarterly_final.pdf The Sixth Los Angeles International Contemporary Art Fair September 30 - October 2, 2011 J.W. Marriott Ritz Carlton www.artla.net \ 323.965.1000 Bruce of L.A. B. Elliott, 1954 Collection of John Sonsini Ceramics Annual of America 2011 October 7-9, 2011 ART FAIR SAN FRANCISCO FORT MASON | FESTIVAL PAVILION DECEMBER 1 - 4, 2011 1530 Collins Avenue (south of Lincoln Road), Miami Beach $48$$570,$0,D&20 VIP Preview Opening November 30, 2011 For more information contact: Public Hours December 1- 4, 2011 [email protected] 1.877.459.9CAA www.ceramicsannual.org “The best hotel art fair in the world.” DECEMBER 1 - 4, 2011 1530 Collins Avenue (south of Lincoln Road), Miami Beach $48$$570,$0,D&20 VIP Preview Opening November 30, 2011 Public Hours December 1- 4, 2011 “The best hotel art fair in the world.” Lucas Soi ìWe Bought The Seagram Buildingî October 6th-27th For all your art supply needs, pick Blick. -

Science Fiction

Science Fiction Location Author Title J AND Anderson, M.T. He Laughed With His Other Mouths Boy Technonaut Jasper Dash enlists the help of his friends to find his missing father in this science fiction adventure chock-full of aliens, spaceships, friendship, and plenty of death rays. J AND Anderson, M. T. Whales on Stilts Racing against the clock, shy middle-school student Lily and her best friends, Katie and Jasper, must foil the plot of her father's conniving boss to conquer the world using an army of whales. J ANG Angleberger, Tom Fuzzy When Max (Maxine Zealster) befriends her new robot classmate Fuzzy, she helps him navigate Vanguard Middle School and together they reveal the truth behind the Robot Integration Program. SERIES Applegate, Katherine The Hork-Bajir Chronicles ANIMORPHS Before the Animorphs, on another world, the fight began. Prior to their invasion of Earth, the Yeerks attacked a gentle and docile species known as the Hork-Bajir. This is the story of a special Hork-Bajir, his Andalite friend, and their future enemy, Visser Three. J APP Appleton II, Victor Tom Swift and His Airship, or, The Stirring Cruise of the Red Cloud Originally published in 1910. In this classic series of adventures, the young inventor builds an airship, makes a trial trip, and experiences a smash-up in midair. J ASC Asch, Frank Star Jumper : Journal of a Cardboard Genius Determined to get far away from his evil little brother, Alex works to design a spaceship that will take him so far away from Earth that not even the NASA experts can find him, yet when his brother catches on to his plan, Alex fears that his nearly finished project may be ruined before he gets his chance to take-off. -

Why Math Works So Well

Fractals, such as this stack of spheres created using 3-D modeling software, are one of the mathematical structures that were invent- ed for abstract reasons yet manage to capture reality. 80 Scientific American, August 2011 © 2011 Scientific American Mario Livio is a theoretical astrophysicist at the Space Telescope Science Institute in Baltimore. He has studied a wide range of cosmic phenomena, ranging from dark energy and super nova explosions to extrasolar planets and accretion onto white dwarfs, neutron stars and black holes. PHILOSOPHY OF SCIENCE Why Math Wo r k s Is math invented or discovered? A leading astrophysicist suggests that the answer to the millennia-old question is both By Mario Livio ost of us take it for granted German physicist Heinrich Hertz detected them. Very that math works—that sci few languages are as effective, able to articulate vol entists can devise formulas umes’ worth of material so succinctly and with such to describe subatomic events precision. Albert Einstein pondered, “How is it possi or that engineers can calcu ble that mathematics, a product of human thought late paths for spacecraft. We that is independent of experience, fits so excellently accept the view, initially es the objects of physical reality?” poused by Galileo, that mathematics is the language of As a working theoretical astrophysicist, I encoun Mscience and expect that its grammar explains experi ter the seemingly “unreasonable effectiveness of math mental results and even predicts novel phenomena. ematics,” as Nobel laureate physicist Eugene Wigner The power of mathematics, though, is nothing short of called it in 1960, in every step of my job. -

Bibliography

Bibliography A. Aaboe, Episodes from the Early History of Mathematics (Random House, New York, 1964) A.D. Aczel, Fermat’s Last Theorem: Unlocking the Secret of an Ancient Mathematical Problem (Four Walls Eight Windows, New York, 1996) D. Adamson, Blaise Pascal: Mathematician, Physicist, and Thinker About God (St. Martin’s Press, New York, 1995) R.P. Agarwal, H. Agarwal, S.K. Sen, Birth, Growth and Computation of Pi to ten trillion digits. Adv. Differ. Equat. 2013, 100 (2013) A.A. Al-Daffa’, The Muslim Contribution to Mathematics (Humanities Press, Atlantic Highlands, 1977) A.A. Al-Daffa’, J.J. Stroyls, Studies in the Exact Sciences in Medieval Islam (Wiley, New York, 1984) E.J. Aiton, Leibniz: A Biography (A. Hilger, Bristol, Boston, 1984) R.E. Allen, Greek Philosophy: Thales to Aristotle (The Free Press, New York, 1966) G.J. Allman, Greek Geometry from Thales to Euclid (Arno Press, New York, 1976) E.N. da C. Andrade, Sir Issac Newton, His Life and Work (Doubleday & Co., New York, 1954) W.S. Anglin, Mathematics: A Concise History and Philosophy (Springer, New York, 1994) W.S. Anglin, The Queen of Mathematics (Kluwer, Dordrecht, 1995) H.D. Anthony, Sir Isaac Newton (Abelard-Schuman, New York, 1960) H.G. Apostle, Aristotle’s Philosophy of Mathematics (The University of Chicago Press, Chicago, 1952) R.C. Archibald, Outline of the history of mathematics.Am. Math. Monthly 56 (1949) B. Artmann, Euclid: The Creation of Mathematics (Springer, New York, 1999) C.N. Srinivasa Ayyangar, The History of Ancient Indian Mathematics (World Press Private Ltd., Calcutta, 1967) A.K. Bag, Mathematics in Ancient and Medieval India (Chaukhambha Orientalia, Varanasi, 1979) W.W.R. -

Lewis Jeremy Sykes the Augmented Tonoscope

Lewis Jeremy Sykes The Augmented Tonoscope Towards a Deeper Understanding of the Interplay between Sound and Image in Visual Music A thesis submitted in partial fulfilment of the requirements of the Manchester Metropolitan University for the degree of Doctor of Philosophy Manchester Institute for Research and Innovation in Art & Design (MIRIAD), Manchester School of Art February 2015 Abstract This thesis presents the theoretical, technical and aesthetic concerns in realising a harmonic complementarity and more intimate perceptual connection between music and moving image. It explores the inspirations and various processes involved in creating a series of artistic works - attached as a portfolio and produced as the research. This includes the Cymatic Adufe (v1.1) - a sound-responsive, audiovisual installation; Stravinsky Rose (v2.0) - an audiovisual short in Dome format; and the live performance works of Whitney Triptych (v1.2), Moiré Modes (v1.1) and Stravinsky Rose (v3.0). The thesis outlines an approach towards realising a deeper understanding of the interplay between sound and image in Visual Music - through applying: the Differential Dynamics of pioneering, computer-aided, experimental animator John Whitney Sr.; alternate musical tunings based on harmonic consonance and the Pythagorean laws of harmony; and sound’s ability to induce physical form and flow via Cymatics - the study of wave phenomena and vibration - a term coined by Dr. Hans Jenny for his seminal research into these effects in the 1960s and 70s, using a device of his own design - the ʻtonoscopeʼ. The thesis discusses the key method for this artistic investigation through the design, fabrication and crafting of a hybrid analogue/digital audiovisual instrument - a contemporary version of Jenny’s sound visualisation tool - The Augmented Tonoscope. -

Contemporary American Painting and Sculpture

ILLINOIS LIBRARY AT URBANA-CHAMPAIGN ARCHITECTURE MUSk^ UBWRY /W»CMITK1t«t mvimu OF aiwos NOTICE: Return or renew all Library Matarialtl The Minimum F*« tor each Loat Book it $50.00. The person charging this material is responsible for its return to the library from which it was withdrawn on or before the Latest Date stamped below. Theft, mutilation, and underlining of books are reasons for discipli* nary action and may result in dismissal from the University. To renew call Telephone Center, 333-6400 UNIVERSITY OF ILLINOIS LIBRARY AT URBANA-CHAMPAIGN L161—O-1096 IKilir^ >UIBRICiil^ I^Aievri^^' cnsify n^ liliiMttiK, llrSMiiRj tiHil \' $4.50 Catalogue and cover design: RAYMOND PERLMAN / W^'^H UtSifv,t- >*r..v,~— •... 3 f ^-^ ':' :iiNTi:A\roiriiitY iiAii<:irii:AN I'iiiKTiNrp A\n kciilp i iikb I!k>7 UNIVERSITY OF ILLINOIS MAR G 1967 LIBRARY University of Illinois Press, Urbana, Chicago, and London, 1967 IIINTILUIMHtAirY ilAllilltlCAK I'AINTIKi; AKII KCIILI* I llltK l!H»7 Introduction by Allen S. Weller ex/ubition nth College of Fine and Applied Arts, University of Illinois, Urbana «:«Nli:A\l>«ltilKY AAIKIMCAN PAINTINIp A\» StAlLVTUKK DAVID DODDS HENRY President of the University ALLEN S. WELLER Dean, College of Fine and Applied Arts Director, Krannert Art Museum Ctiairmon, Festivol of Contemporory Arts JURY OF SELECTION Allen S. Weller, Choirman James D. Hogan James R. Shipley MUSEUM STAFF Allen S. Weller, Director Muriel B. Ctiristison, Associate Director Deborah A. Jones, Assistant Curator James O. Sowers, Preporotor Jane Powell, Secretary Frieda V. Frillmon, Secretary H. Dixon Bennett, Assistant K. E. -

Good Design Awards 2017 Page 1 of 77 December 1, 2017 © 2017 the Chicago Athenaeum - Use of This Website As Stated in Our Legal Statement

GOOD DESIGN 2017 AWARDED PRODUCT DESIGNS AND GRAPHICS AND PACKAGING THE CHICAGO ATHENAEUM: MUSEUM OF ARCHITECTURE AND DESIGN THE EUROPEAN CENTRE FOR ARCHITECTURE ART DESIGN AND URBAN STUDIES ELECTRONICS 2017 HP PageWide Pro 700-Series 2015-2017 Designers: Danny Han, HP Global Experience Design, HP Inc., Vancouver, Washington, USA Manufacturer: HP Inc., Vancouver, Washington, USA Dolby Multichannel Amplifier 2016-2017 Designers: Grayson Byrd, Peter Michaelian, Cody Proksa, Marcelo Traverso, and Vince Voron, Dolby Design, Dolby Laboratories, Inc., San Francisco, California, USA Manufacturer: Dolby Laboratories, Inc., San Francisco, California, USA TP-Link Quarter Camera 2016 Designers: Dan Harden, Brian Leach, Mark Hearn, and Hiro Teranishi, Whipsaw, Inc., San Jose, California, USA Manufacturer: TP-Link Technologies Co., Ltd., Nanshan, Schenzhen, P.R. China Hive Connected Home 2016-2017 Designers: Philip Shade and Matt Plested, Philip Shade Alloy Ltd., Farnham, Surrey, United Kingdom Manufacturer: Centrica Connected Home Ltd., London, United Kingdom Martin Logan Impression ESL 11A 2015-2017 Designers: KEM STUDIO, Kansas City, Missouri, USA Manufacturer: Martin Logan, Lawrence, Kansas, USA ST7000 Small Tetra Radio 2016 Designers: Innovation Design Team, Motorola Solutions, Plantation, Florida, USA Manufacturer: Motorola Solutions, Plantation, Florida, USA T600 H2O Outdoor Activity Radio 2016 Designers: Innovation Design Team, Motorola Solutions, Plantation, Florida, USA Manufacturer: Motorola Solutions, Plantation, Florida, USA Blackmagic