Understanding Distributions of Chess Performances

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Download the Booklet of Chessbase Magazine #199

THE Magazine for Professional Chess JANUARY / FEBRUARY 2021 | NO. 199 | 19,95 Euro S R V ON U I O D H E O 4 R N U A N DVD H N I T NG RE TIME: MO 17 years old, second place at the Altibox Norway Chess: Alireza Firouzja is already among the Top 20 TOP GRANDmasTERS ANNOTATE: ALL IN ONE: SEMI-TaRRasCH Duda, Edouard, Firouzja, Igor Stohl condenses a Giri, Nielsen, et al. trendy opening AVRO TOURNAMENT 1938 – LONDON SYSTEM – no reST FOR THE Bf4 CLash OF THE GENERATIONS Alexey Kuzmin hits with the Retrospective + 18 newly annotated active 5...Nh5!? Keres games THE MODERN BENONI UNDER FIRE! Patrick Zelbel presents a pointed repertoire with 6.Nf3/7.Bg5 THE MAGAZINE FOR PROFESSIONAL CHESS JANU 17 years old, secondARY / placeFEB at the RU Altibox Norway Chess: AlirezaAR FirouzjaY 2021 is already among the Top 20 NO . 199 1 2 0 2 G R U B M A H , H B M G E S DVD with first class training material for A B S S E club players and professionals! H C © EDITORIAL The new chess stars: Alireza Firouzja and Moscow in order to measure herself against Beth Harmon the world champion in a tournament. She is accompanied by a US official who warns Now the world and also the world of chess her about the Soviets and advises her not to has been hit by the long-feared “second speak with anyone. And what happens? She wave” of the Covid-19 pandemic. Many is welcomed with enthusiasm by the popu- tournaments have been cancelled. -

A New Look at the Tayler by David Kane

A New Look at the Tayler by David kane I: Introduction The Tayler Variation (aka the Tayler Opening) is a line that has been unjustly neglected, in my view. The line is of surprisingly recent vintage though it is often confused with the Inverted Hungarian (or Inverted Hanham Defense), a line which shares the same opening moves: 1. e4 e5 2. Nf3 Nc6 3. Be2: The Inverted Hungarian is an old opening, dating back to the 1860ʼs, at least. Tartakower played it a few times in the 1920ʼs with mixed results, using the continuation, 3...Nf6 4. d3: a rather unenterprising setup for White. In 1981 British player, John Tayler (see biographical note), published an article in the British publication Chess (vol. 46) on a line he had developed stemming from the sharp 4. d4!?. This is a move which apparently no one had thought to play before, and one that transforms the sedate Inverted Hungarian into something else altogether. Technically, it is really the Tayler Variation to the Inverted Hungarian Defense rather than the Tayler Opening, though through usage, the terms are interchangeable for all practical purposes. As has been so often the case when it comes to unorthodox lines, I first heard of this opening via Mike Basman when he published a cassette on it back in the early 80ʼs (still available through audiochess.com). Tayler 2 The line stirred some interest at the time but gradually seems to have been forgotten. The final nail in the coffin was probably some light analysis published by Eric Schiller in Gambit Chess Openings (and elsewhere) where he dismisses the line primarily due to his loss in the game Schiller-Martinovsky, Chicago 1986. -

Here Should That It Was Not a Cheap Check Or Spite Check Be Better Collaboration Amongst the Federations to Gain Seconds

Chess Contents Founding Editor: B.H. Wood, OBE. M.Sc † Executive Editor: Malcolm Pein Editorial....................................................................................................................4 Editors: Richard Palliser, Matt Read Malcolm Pein on the latest developments in the game Associate Editor: John Saunders Subscriptions Manager: Paul Harrington 60 Seconds with...Chris Ross.........................................................................7 Twitter: @CHESS_Magazine We catch up with Britain’s leading visually-impaired player Twitter: @TelegraphChess - Malcolm Pein The King of Wijk...................................................................................................8 Website: www.chess.co.uk Yochanan Afek watched Magnus Carlsen win Wijk for a sixth time Subscription Rates: United Kingdom A Good Start......................................................................................................18 1 year (12 issues) £49.95 Gawain Jones was pleased to begin well at Wijk aan Zee 2 year (24 issues) £89.95 3 year (36 issues) £125 How Good is Your Chess?..............................................................................20 Europe Daniel King pays tribute to the legendary Viswanathan Anand 1 year (12 issues) £60 All Tied Up on the Rock..................................................................................24 2 year (24 issues) £112.50 3 year (36 issues) £165 John Saunders had to work hard, but once again enjoyed Gibraltar USA & Canada Rocking the Rock ..............................................................................................26 -

Draft – Not for Circulation

A Gross Miscarriage of Justice in Computer Chess by Dr. Søren Riis Introduction In June 2011 it was widely reported in the global media that the International Computer Games Association (ICGA) had found chess programmer International Master Vasik Rajlich in breach of the ICGA‟s annual World Computer Chess Championship (WCCC) tournament rule related to program originality. In the ICGA‟s accompanying report it was asserted that Rajlich‟s chess program Rybka contained “plagiarized” code from Fruit, a program authored by Fabien Letouzey of France. Some of the headlines reporting the charges and ruling in the media were “Computer Chess Champion Caught Injecting Performance-Enhancing Code”, “Computer Chess Reels from Biggest Sporting Scandal Since Ben Johnson” and “Czech Mate, Mr. Cheat”, accompanied by a photo of Rajlich and his wife at their wedding. In response, Rajlich claimed complete innocence and made it clear that he found the ICGA‟s investigatory process and conclusions to be biased and unprofessional, and the charges baseless and unworthy. He refused to be drawn into a protracted dispute with his accusers or mount a comprehensive defense. This article re-examines the case. With the support of an extensive technical report by Ed Schröder, author of chess program Rebel (World Computer Chess champion in 1991 and 1992) as well as support in the form of unpublished notes from chess programmer Sven Schüle, I argue that the ICGA‟s findings were misleading and its ruling lacked any sense of proportion. The purpose of this paper is to defend the reputation of Vasik Rajlich, whose innovative and influential program Rybka was in the vanguard of a mid-decade paradigm change within the computer chess community. -

The USCF Elo Rating System by Steven Craig Miller

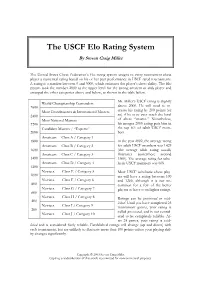

The USCF Elo Rating System By Steven Craig Miller The United States Chess Federation’s Elo rating system assigns to every tournament chess player a numerical rating based on his or her past performance in USCF rated tournaments. A rating is a number between 0 and 3000, which estimates the player’s chess ability. The Elo system took the number 2000 as the upper level for the strong amateur or club player and arranged the other categories above and below, as shown in the table below. Mr. Miller’s USCF rating is slightly World Championship Contenders 2600 above 2000. He will need to in- Most Grandmasters & International Masters crease his rating by 200 points (or 2400 so) if he is to ever reach the level Most National Masters of chess “master.” Nonetheless, 2200 his meager 2000 rating puts him in Candidate Masters / “Experts” the top 6% of adult USCF mem- 2000 bers. Amateurs Class A / Category 1 1800 In the year 2002, the average rating Amateurs Class B / Category 2 for adult USCF members was 1429 1600 (the average adult rating usually Amateurs Class C / Category 3 fluctuates somewhere around 1400 1500). The average rating for scho- Amateurs Class D / Category 4 lastic USCF members was 608. 1200 Novices Class E / Category 5 Most USCF scholastic chess play- 1000 ers will have a rating between 100 Novices Class F / Category 6 and 1200, although it is not un- 800 common for a few of the better Novices Class G / Category 7 players to have even higher ratings. 600 Novices Class H / Category 8 Ratings can be provisional or estab- 400 lished. -

Elo World, a Framework for Benchmarking Weak Chess Engines

Elo World, a framework for with a rating of 2000). If the true outcome (of e.g. a benchmarking weak chess tournament) doesn’t match the expected outcome, then both player’s scores are adjusted towards values engines that would have produced the expected result. Over time, scores thus become a more accurate reflection DR. TOM MURPHY VII PH.D. of players’ skill, while also allowing for players to change skill level. This system is carefully described CCS Concepts: • Evaluation methodologies → Tour- elsewhere, so we can just leave it at that. naments; • Chess → Being bad at it; The players need not be human, and in fact this can facilitate running many games and thereby getting Additional Key Words and Phrases: pawn, horse, bishop, arbitrarily accurate ratings. castle, queen, king The problem this paper addresses is that basically ACH Reference Format: all chess tournaments (whether with humans or com- Dr. Tom Murphy VII Ph.D.. 2019. Elo World, a framework puters or both) are between players who know how for benchmarking weak chess engines. 1, 1 (March 2019), to play chess, are interested in winning their games, 13 pages. https://doi.org/10.1145/nnnnnnn.nnnnnnn and have some reasonable level of skill. This makes 1 INTRODUCTION it hard to give a rating to weak players: They just lose every single game and so tend towards a rating Fiddly bits aside, it is a solved problem to maintain of −∞.1 Even if other comparatively weak players a numeric skill rating of players for some game (for existed to participate in the tournament and occasion- example chess, but also sports, e-sports, probably ally lose to the player under study, it may still be dif- also z-sports if that’s a thing). -

Chessbase.Com - Chess News - Grandmasters and Global Growth

ChessBase.com - Chess News - Grandmasters and Global Growth Grandmasters and Global Growth 07.01.2010 Professor Kenneth Rogoff is a strong chess grandmaster, who also happens to be one of the world's leading economists. In a Project Syndicate article that appeared this week Ken sees the new decade as one in which "artificial intelligence hits escape velocity," with an economic impact on par with the emergence of India and China. He uses computer chess to illustrated the point. Grandmasters and Global Growth By Kenneth Rogoff As the global economy limps out of the last decade and enters a new one in 2010, what will be the next big driver of global growth? Here’s betting that the “teens” is a decade in which artificial intelligence hits escape velocity, and starts to have an economic impact on par with the emergence of India and China. Admittedly, my perspective is heavily colored by events in the world of chess, a game I once played at a professional level and still follow from a distance. Though special, computer chess nevertheless offers both a window into silicon evolution and a barometer of how people might adapt to it. A little bit of history might help. In 1996 and 1997, World Chess Champion Gary Kasparov played a pair of matches against an IBM computer named “Deep Blue.” At the time, Kasparov dominated world chess, in the same way that Tiger Woods – at least until recently – has dominated golf. In the 1996 match, Deep Blue stunned the champion by beating him in the first game. -

March 2020 Uschess.Org the United States’ Largest Chess Specialty Retailer

March 2020 USChess.org The United States’ Largest Chess Specialty Retailer 888.51.CHESS (512.4377) www.USCFSales.com ƩĂĐŬŝŶŐǁŝƚŚŐϮͲŐϰ The 100 Endgames You Must Know Workbook The Modern Way to Get the Upper Hand in Chess WƌĂĐƟĐĂůŶĚŐĂŵĞƐdžĞƌĐŝƐĞƐĨŽƌǀĞƌLJŚĞƐƐWůĂLJĞƌ Dmitry Kryavkin 288 pages - $24.95 Jesus de la Villa 288 pages - $24.95 dŚĞƉĂǁŶƚŚƌƵƐƚŐϮͲŐϰŝƐĂƉĞƌĨĞĐƚǁĂLJƚŽĐŽŶĨƵƐĞLJŽƵƌ ͞/ůŽǀĞƚŚŝƐŬ͊/ŶŽƌĚĞƌƚŽŵĂƐƚĞƌĞŶĚŐĂŵĞƉƌŝŶĐŝƉůĞƐLJŽƵ ŽƉƉŽŶĞŶƚƐĂŶĚĚŝƐƌƵƉƚƚŚĞŝƌƉŽƐŝƟŽŶ͘tŝƚŚůŽƚƐŽĨŝŶƐƚƌƵĐƟǀĞ ǁŝůůŶĞĞĚƚŽƉƌĂĐƟĐĞƚŚĞŵ͘͟ʹNM Han Schut, Chess.com ĞdžĂŵƉůĞƐ'D<ƌLJĂŬǀŝŶƐŚŽǁƐŚŽǁŝƚĐĂŶďĞƵƐĞĚƚŽĚĞĨĞĂƚ ͞dŚĞƉĞƌĨĞĐƚƐƵƉƉůĞŵĞŶƚƚŽĞůĂsŝůůĂ͛ƐŵĂŶƵĂů͘dŽŐĂŝŶ ůĂĐŬŝŶƚŚĞƵƚĐŚ͕ƚŚĞYƵĞĞŶ͛Ɛ'Ăŵďŝƚ͕ƚŚĞEŝŵnjŽͲ/ŶĚŝĂŶ͕ ƐƵĸĐŝĞŶƚŬŶŽǁůĞĚŐĞŽĨƚŚĞŽƌĞƟĐĂůĞŶĚŐĂŵĞƐLJŽƵƌĞĂůůLJŽŶůLJ ƚŚĞ<ŝŶŐ͛Ɛ/ŶĚŝĂŶ͕ƚŚĞ^ůĂǀĂŶĚƚŚĞŶŐůŝƐŚKƉĞŶŝŶŐ͘>ĞĂƌŶ ŶĞĞĚƚǁŽďŽŽŬƐ͘͟ʹIM Herman Grooten, Schaaksite NEW! ƚŚĞƚLJƉŝĐĂůǁĂLJƐƚŽŐĂŝŶƚĞŵƉŝ͕ŬĞĞƉƚŚĞŵŽŵĞŶƚƵŵĂŶĚ ŵĂdžŝŵŝnjĞLJŽƵƌŽƉƉŽŶĞŶƚ͛ƐƉƌŽďůĞŵƐ͘ Strategic Chess Exercises Keep It Simple 1.d4 Find the Right Way to Outplay Your Opponent ^ŽůŝĚĂŶĚ^ƚƌĂŝŐŚƞŽƌǁĂƌĚŚĞƐƐKƉĞŶŝŶŐZĞƉĞƌƚŽŝƌĞĨŽƌtŚŝƚĞ Emmanuel Bricard 224 pages - $24.95 Christof Sielecki 432 pages - $29.95 &ŝŶĂůůLJĂŶĞdžĞƌĐŝƐĞƐŬƚŚĂƚŝƐŶŽƚĂďŽƵƚƚĂĐƟĐƐ͊ ^ŝĞůĞĐŬŝ͛ƐƌĞƉĞƌƚŽŝƌĞǁŝƚŚϭ͘ĚϰŵĂLJďĞĞǀĞŶĞĂƐŝĞƌƚŽ ͞ƌŝĐĂƌĚŝƐĐůĞĂƌůLJĂǀĞƌLJŐŝŌĞĚƚƌĂŝŶĞƌ͘,ĞƐĞůĞĐƚĞĚĂƐƵƉĞƌď ŵĂƐƚĞƌƚŚĂŶŚŝƐϭ͘ĞϰƌĞĐŽŵŵĞŶĚĂƟŽŶƐ͕ďĞĐĂƵƐĞŝƚŝƐƐƵĐŚĂ ƌĂŶŐĞŽĨƉŽƐŝƟŽŶƐĂŶĚĞdžƉůĂŝŶƐƚŚĞƐŽůƵƟŽŶƐĞdžƚƌĞŵĞůLJ ĐŽŚĞƌĞŶƚƐLJƐƚĞŵ͗ƚŚĞŵĂŝŶĐŽŶĐĞƉƚŝƐĨŽƌtŚŝƚĞƚŽƉůĂLJϭ͘Ěϰ͕ ǁĞůů͘͟ʹGM Daniel King Ϯ͘EĨϯ͕ϯ͘Őϯ͕ϰ͘ŐϮ͕ϱ͘ϬͲϬĂŶĚŝŶŵŽƐƚĐĂƐĞƐϲ͘Đϰ͘ ͞&ŽƌĐŚĞƐƐĐŽĂĐŚĞƐƚŚŝƐŬŝƐŶŽƚŚŝŶŐƐŚŽƌƚŽĨƉŚĞŶŽŵĞŶĂů͘͟ ͞Ɛ/ƚŚŝŶŬƚŚĂƚ/ƐŚŽƵůĚŬĞĞƉŵLJĂĚǀŝĐĞ͚ƐŝŵƉůĞ͕͛/ǁŽƵůĚƐĂLJ -

Distributional Differences Between Human and Computer Play at Chess

Multidisciplinary Workshop on Advances in Preference Handling: Papers from the AAAI-14 Workshop Human and Computer Preferences at Chess Kenneth W. Regan Tamal Biswas Jason Zhou Department of CSE Department of CSE The Nichols School University at Buffalo University at Buffalo Buffalo, NY 14216 USA Amherst, NY 14260 USA Amherst, NY 14260 USA [email protected] [email protected] Abstract In our case the third parties are computer chess programs Distributional analysis of large data-sets of chess games analyzing the position and the played move, and the error played by humans and those played by computers shows the is the difference in analyzed value from its preferred move following differences in preferences and performance: when the two differ. We have run the computer analysis (1) The average error per move scales uniformly higher the to sufficient depth estimated to have strength at least equal more advantage is enjoyed by either side, with the effect to the top human players in our samples, depth significantly much sharper for humans than computers; greater than used in previous studies. We have replicated our (2) For almost any degree of advantage or disadvantage, a main human data set of 726,120 positions from tournaments human player has a significant 2–3% lower scoring expecta- played in 2010–2012 on each of four different programs: tion if it is his/her turn to move, than when the opponent is to Komodo 6, Stockfish DD (or 5), Houdini 4, and Rybka 3. move; the effect is nearly absent for computers. The first three finished 1-2-3 in the most recent Thoresen (3) Humans prefer to drive games into positions with fewer Chess Engine Competition, while Rybka 3 (to version 4.1) reasonable options and earlier resolutions, even when playing was the top program from 2008 to 2011. -

A Survey of Monte Carlo Tree Search Methods

IEEE TRANSACTIONS ON COMPUTATIONAL INTELLIGENCE AND AI IN GAMES, VOL. 4, NO. 1, MARCH 2012 1 A Survey of Monte Carlo Tree Search Methods Cameron Browne, Member, IEEE, Edward Powley, Member, IEEE, Daniel Whitehouse, Member, IEEE, Simon Lucas, Senior Member, IEEE, Peter I. Cowling, Member, IEEE, Philipp Rohlfshagen, Stephen Tavener, Diego Perez, Spyridon Samothrakis and Simon Colton Abstract—Monte Carlo Tree Search (MCTS) is a recently proposed search method that combines the precision of tree search with the generality of random sampling. It has received considerable interest due to its spectacular success in the difficult problem of computer Go, but has also proved beneficial in a range of other domains. This paper is a survey of the literature to date, intended to provide a snapshot of the state of the art after the first five years of MCTS research. We outline the core algorithm’s derivation, impart some structure on the many variations and enhancements that have been proposed, and summarise the results from the key game and non-game domains to which MCTS methods have been applied. A number of open research questions indicate that the field is ripe for future work. Index Terms—Monte Carlo Tree Search (MCTS), Upper Confidence Bounds (UCB), Upper Confidence Bounds for Trees (UCT), Bandit-based methods, Artificial Intelligence (AI), Game search, Computer Go. F 1 INTRODUCTION ONTE Carlo Tree Search (MCTS) is a method for M finding optimal decisions in a given domain by taking random samples in the decision space and build- ing a search tree according to the results. It has already had a profound impact on Artificial Intelligence (AI) approaches for domains that can be represented as trees of sequential decisions, particularly games and planning problems. -

Elo and Glicko Standardised Rating Systems by Nicolás Wiedersheim Sendagorta Introduction “Manchester United Is a Great Team” Argues My Friend

Elo and Glicko Standardised Rating Systems by Nicolás Wiedersheim Sendagorta Introduction “Manchester United is a great team” argues my friend. “But it will never be better than Manchester City”. People rate everything, from films and fashion to parks and politicians. It’s usually an effortless task. A pool of judges quantify somethings value, and an average is determined. However, what if it’s value is unknown. In this scenario, economists turn to auction theory or statistical rating systems. “Skill” is one of these cases. The Elo and Glicko rating systems tend to be the go-to when quantifying skill in Boolean games. Elo Rating System Figure 1: Arpad Elo As I started playing competitive chess, I came across the Figure 2: Top Elo Ratings for 2014 FIFA World Cup Elo rating system. It is a method of calculating a player’s relative skill in zero-sum games. Quantifying ability is helpful to determine how much better some players are, as well as for skill-based matchmaking. It was devised in 1960 by Arpad Elo, a professor of Physics and chess master. The International Chess Federation would soon adopt this rating system to divide the skill of its players. The algorithm’s success in categorising people on ability would start to gain traction in other win-lose games; Association football, NBA, and “esports” being just some of the many examples. Esports are highly competitive videogames. The Elo rating system consists of a formula that can determine your quantified skill based on the outcome of your games. The “skill” trend follows a gaussian bell curve. -

Extensions of the Elo Rating System for Margin of Victory

Extensions of the Elo Rating System for Margin of Victory MathSport International 2019 - Athens, Greece Stephanie Kovalchik 1 / 46 2 / 46 3 / 46 4 / 46 5 / 46 6 / 46 7 / 46 How Do We Make Match Forecasts? 8 / 46 It Starts with Player Ratings Assume the the ith player has some true ability θi. Models of player abilities assume game outcomes are a function of the difference in abilities Prob(Wij = 1) = F(θi − θj) 9 / 46 Paired Comparison Models Bradley-Terry models are a general class of paired comparison models of latent abilities with a logistic function for win probabilities. 1 F(θi − θj) = 1 + α−(θi−θj) With BT, player abilities are treated as Hxed in time which is unrealistic in most cases. 10 / 46 Bobby Fischer 11 / 46 Fischer's Meteoric Rise 12 / 46 Arpad Elo 13 / 46 In His Own Words 14 / 46 Ability is a Moving Target 15 / 46 Standard Elo Can be broken down into two steps: 1. Estimate (E-Step) 2. Update (U-Step) 16 / 46 Standard Elo E-Step For tth match of player i against player j, the chance that player i wins is estimated as, 1 W^ ijt = 1 + 10−(Rit−Rjt)/σ 17 / 46 Elo Derivation Elo supposed that the ratings of any two competitors were independent and normal with shared standard deviation δ. Given this, he likened the chance of a win to the chance of observing a difference in ratings for ratings drawn from the same distribution, 2 Rit − Rjt ∼ N(0, 2δ ) which leads to, Rit − Rjt P(Rit − Rjt > 0) = Φ( ) √2δ and ≈ 1/(1 + 10−(Rit−Rjt)/2δ) Elo's formula was just a hack for the cumulative normal density function.