Elo World, a Framework for Benchmarking Weak Chess Engines

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

The 12th Top Chess Engine Championship

TCEC12: the 12th Top Chess Engine Championship. Article, Accepted Version. Haworth, G. and Hernandez, N. (2019) TCEC12: the 12th Top Chess Engine Championship. ICGA Journal, 41 (1), pp. 24-30. ISSN 1389-6911 (doi: https://doi.org/10.3233/ICG-190090 ; available at http://centaur.reading.ac.uk/76985/ ). It is advisable to refer to the publisher's version if you intend to cite from the work. Publisher: The International Computer Games Association. All outputs in CentAUR are protected by Intellectual Property Rights law, including copyright law. Copyright and IPR is retained by the creators or other copyright holders. TCEC12: the 12th Top Chess Engine Championship. Guy Haworth and Nelson Hernandez, Reading, UK and Maryland, USA. After the successes of TCEC Season 11 (Haworth and Hernandez, 2018a; TCEC, 2018), the Top Chess Engine Championship moved straight on to Season 12, starting April 18th 2018 with the same, if somewhat evolved, divisional structure. Five divisions, each of eight engines, played two or more 'DRR' double round robin phases each, with promotions and relegations following. Classic tempi gradually lengthened and the Premier division's top two engines played a 100-game match to determine the Grand Champion. The strategy for the selection of mandated openings was finessed from division to division. -

Here Should That It Was Not a Cheap Check Or Spite Check Be Better Collaboration Amongst the Federations to Gain Seconds

Chess. Founding Editor: B.H. Wood, OBE, M.Sc †. Executive Editor: Malcolm Pein. Editors: Richard Palliser, Matt Read. Associate Editor: John Saunders. Subscriptions Manager: Paul Harrington. Twitter: @CHESS_Magazine; @TelegraphChess (Malcolm Pein). Website: www.chess.co.uk. Subscription Rates: United Kingdom: 1 year (12 issues) £49.95, 2 year (24 issues) £89.95, 3 year (36 issues) £125. Europe: 1 year (12 issues) £60, 2 year (24 issues) £112.50, 3 year (36 issues) £165. USA & Canada: ... Contents: Editorial: Malcolm Pein on the latest developments in the game. 60 Seconds with... Chris Ross: We catch up with Britain's leading visually-impaired player. The King of Wijk: Yochanan Afek watched Magnus Carlsen win Wijk for a sixth time. A Good Start: Gawain Jones was pleased to begin well at Wijk aan Zee. How Good is Your Chess?: Daniel King pays tribute to the legendary Viswanathan Anand. All Tied Up on the Rock: John Saunders had to work hard, but once again enjoyed Gibraltar. Rocking the Rock -

Chess Engine Using Deep Reinforcement Learning Kamil Klosowski

Chess Engine Using Deep Reinforcement Learning. Kamil Klosowski, 916847, May 2019. Abstract: Reinforcement learning is one of the most rapidly developing areas of Artificial Intelligence. The goal of this project is to analyse, implement and try to improve on the AlphaZero architecture presented by the Google DeepMind team. To achieve this I explore different architectures and methods of training neural networks as well as techniques used for the development of chess engines. Project Dissertation submitted to Swansea University in Partial Fulfilment for the Degree of Bachelor of Science, Department of Computer Science, Swansea University. Declaration: This work has not previously been accepted in substance for any degree and is not being currently submitted for any degree. May 13, 2019. Signed: Statement 1: This dissertation is being submitted in partial fulfilment of the requirements for the degree of a BSc in Computer Science. May 13, 2019. Signed: Statement 2: This dissertation is the result of my own independent work/investigation, except where otherwise stated. Other sources are specifically acknowledged by clear cross referencing to author, work, and pages using the bibliography/references. I understand that failure to do this amounts to plagiarism and will be considered grounds for failure of this dissertation and the degree examination as a whole. May 13, 2019. Signed: Statement 3: I hereby give consent for my dissertation to be available for photocopying and for inter-library loan, and for the title and summary to be made available to outside organisations. May 13, 2019. Signed: Acknowledgment: I would like to express my sincere gratitude to Dr Benjamin Mora for supervising this project and his respect and understanding of my preference for largely unsupervised work. -

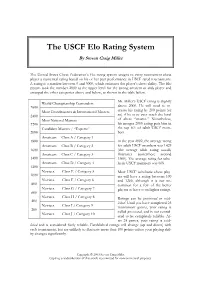

The USCF Elo Rating System by Steven Craig Miller

The United States Chess Federation's Elo rating system assigns to every tournament chess player a numerical rating based on his or her past performance in USCF rated tournaments. A rating is a number between 0 and 3000, which estimates the player's chess ability. The Elo system took the number 2000 as the upper level for the strong amateur or club player and arranged the other categories above and below, as shown in the table below.

| Category | Rating floor |
|---|---|
| World Championship Contenders | 2600 |
| Most Grandmasters & International Masters | 2400 |
| Most National Masters | 2200 |
| Candidate Masters / "Experts" | 2000 |
| Amateurs Class A / Category 1 | 1800 |
| Amateurs Class B / Category 2 | 1600 |
| Amateurs Class C / Category 3 | 1400 |
| Amateurs Class D / Category 4 | 1200 |
| Novices Class E / Category 5 | 1000 |
| Novices Class F / Category 6 | 800 |
| Novices Class G / Category 7 | 600 |
| Novices Class H / Category 8 | 400 |

Mr. Miller's USCF rating is slightly above 2000. He will need to increase his rating by 200 points (or so) if he is to ever reach the level of chess "master." Nonetheless, his meager 2000 rating puts him in the top 6% of adult USCF members. In the year 2002, the average rating for adult USCF members was 1429 (the average adult rating usually fluctuates somewhere around 1500). The average rating for scholastic USCF members was 608. Most USCF scholastic chess players will have a rating between 100 and 1200, although it is not uncommon for a few of the better players to have even higher ratings. Ratings can be provisional or established. -
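The category boundaries in this snippet amount to a simple lookup from a rating to a class. A minimal Python sketch follows; it assumes each listed number is the lower bound (floor) of the category named just before it, and the function name `uscf_category` and the "Unclassified" fallback label are hypothetical, not part of the USCF text.

```python
from bisect import bisect_right

# Rating floors and category names from the snippet, lowest first.
# Assumption: each number is the floor of its category.
FLOORS = [400, 600, 800, 1000, 1200, 1400, 1600, 1800, 2000, 2200, 2400, 2600]
NAMES = [
    "Novices Class H / Category 8",
    "Novices Class G / Category 7",
    "Novices Class F / Category 6",
    "Novices Class E / Category 5",
    "Amateurs Class D / Category 4",
    "Amateurs Class C / Category 3",
    "Amateurs Class B / Category 2",
    "Amateurs Class A / Category 1",
    'Candidate Masters / "Experts"',
    "Most National Masters",
    "Most Grandmasters & International Masters",
    "World Championship Contenders",
]


def uscf_category(rating):
    """Return the category whose floor is the highest one not above `rating`."""
    idx = bisect_right(FLOORS, rating) - 1
    if idx < 0:
        # The snippet names no category below 400; this label is a placeholder.
        return "Unclassified (below 400)"
    return NAMES[idx]


if __name__ == "__main__":
    for r in (608, 1429, 2000):
        print(r, "->", uscf_category(r))
```

Under that reading, the 2002 adult average of 1429 falls in Class C and the scholastic average of 608 falls in Class G.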

The Software Toolworks® Catalog: Software for Fun & Information

THE SOFTWARE TOOLWORKS® CATALOG SOFTWARE FOR FUN & INFORMATION ORDER TOLL FREE 1•800•234•3088 monitors each lesson and builds a series of personalized exercises just for you. And by THE MIRACLE PAGE 2 borrowing the fun of video games, it Expand your repertoire! IT'S A MIRACLE! makes kids (even grown-up kids) want to The Miracle Song PAGE 4 NINTENDO THE MIRACLE PIANO TEACHING SYSTEM learn. It doesn't matter whether you're 6 Collection: Volume I or 96 - The Miracle brings the joy of music adds 40 popular ENTERTAINMENT PAGE/ to everyone. titles to your The Miracle Piano PRODUCTIVITY PAGE 10 Teaching System, including: "La Bamba," by Richie Valens; INFORMATION PAGElJ "Sara," by Stevie Nicks; and "This Masquerade," by Leon Russell. Volume II VALUE PACKS PAGE 16 adds 40 MORE titles, including: "Eleanor Rigby," by John Lennon and Paul McCartney; "Faith," by George Michael; Learn at your own pace, and at "The Girl Is Mine," by Michael Jackson; your own level. THESE SYMBOLS INDICATE and "People Get Ready," by Curtis FORMAT AVAILABILITY: As a stand-alone instrument, The Miracle Mayfield. Each song includes two levels of play - and learn - at your own rivals the music industry's most sophis playing difficulty and is fully arranged pace, at your own level, NINTENDO ticated MIDI consoles, with over 128 with complete accompaniments for a truly ENTERTAINMENT SYSTEM whenever you want. -

Super Human Chess Engine

SUPER HUMAN CHESS ENGINE. FIDE Master / FIDE Trainer Charles Storey PGCE. WORLD TOUR Young Masters Training Program. Contents: Introduction; Power Principles; Human Opening Book; 'The Core' Super Human Chess Engine 2020; Acronym Algorithms that make The Storey Human Chess Engine; 4Ps: Prioritise Poorly Placed Pieces; CCTV: Checks / Captures / Threats / Vulnerabilities; CCTV 2.0: Checks / Checkmate Threats / Captures / Threats / Vulnerabilities; DAFiii: Attack / Features / Initiative / I for tactics / Ideas (crazy); The Fruit Tree analysis process -

Project: Chess Engine

P. Thiemann, A. Bieniusa, P. Heidegger Winter term 2010/11 Lecture: Concurrency Theory and Practise Project: Chess engine http://proglang.informatik.uni-freiburg.de/teaching/concurrency/2010ws/ Project: Chess engine 1 Introduction From the Wikipedia article on Chess: Chess is a two-player board game played on a chessboard, a square-checkered board with 64 squares arranged in an eight-by-eight grid. Each player begins the game with sixteen pieces: one king, one queen, two rooks, two knights, two bishops, and eight pawns. The object of the game is to checkmate the opponent’s king, whereby the king is under immediate attack (in “check”) and there is no way to remove or defend it from attack on the next move. The game’s present form emerged in Europe during the second half of the 15th century, an evolution of an older Indian game, Shatranj. Theoreticians have developed extensive chess strategies and tactics since the game’s inception. Computers have been used for many years to create chess-playing programs, and their abilities and insights have contributed significantly to modern chess theory. One, Deep Blue, was the first machine to beat a reigning World Chess Champion when it defeated Garry Kasparov in 1997. Do not worry if you are not familiar with the rules of chess! You are not asked to change the methods which calculate the moves, nor the internal representation of boards or moves, nor the scoring of boards. 2 Peachess Peachess is a chess engine written in Java. The implementation consists of several components. 2.1 The chess board The chess board representation stores the actual state of the game. -

Newagearcade.Com 5000 in One Arcade Game List!

Newagearcade.com 5,000 In One arcade game list! 1. AAE|Armor Attack 2. AAE|Asteroids Deluxe 3. AAE|Asteroids 4. AAE|Barrier 5. AAE|Boxing Bugs 6. AAE|Black Widow 7. AAE|Battle Zone 8. AAE|Demon 9. AAE|Eliminator 10. AAE|Gravitar 11. AAE|Lunar Lander 12. AAE|Lunar Battle 13. AAE|Meteorites 14. AAE|Major Havoc 15. AAE|Omega Race 16. AAE|Quantum 17. AAE|Red Baron 18. AAE|Ripoff 19. AAE|Solar Quest 20. AAE|Space Duel 21. AAE|Space Wars 22. AAE|Space Fury 23. AAE|Speed Freak 24. AAE|Star Castle 25. AAE|Star Hawk 26. AAE|Star Trek 27. AAE|Star Wars 28. AAE|Sundance 29. AAE|Tac/Scan 30. AAE|Tailgunner 31. AAE|Tempest 32. AAE|Warrior 33. AAE|Vector Breakout 34. AAE|Vortex 35. AAE|War of the Worlds 36. AAE|Zektor 37. Classic Arcades|'88 Games 38. Classic Arcades|1 on 1 Government (Japan) 39. Classic Arcades|10-Yard Fight (World, set 1) 40. Classic Arcades|1000 Miglia: Great 1000 Miles Rally (94/07/18) 41. Classic Arcades|18 Holes Pro Golf (set 1) 42. Classic Arcades|1941: Counter Attack (World 900227) 43. Classic Arcades|1942 (Revision B) 44. Classic Arcades|1943 Kai: Midway Kaisen (Japan) 45. Classic Arcades|1943: The Battle of Midway (Euro) 46. Classic Arcades|1944: The Loop Master (USA 000620) 47. Classic Arcades|1945k III 48. Classic Arcades|19XX: The War Against Destiny (USA 951207) 49. Classic Arcades|2 On 2 Open Ice Challenge (rev 1.21) 50. Classic Arcades|2020 Super Baseball (set 1) 51. -

Elo and Glicko Standardised Rating Systems by Nicolás Wiedersheim Sendagorta

Elo and Glicko Standardised Rating Systems by Nicolás Wiedersheim Sendagorta. Introduction: "Manchester United is a great team," argues my friend. "But it will never be better than Manchester City." People rate everything, from films and fashion to parks and politicians. It's usually an effortless task: a pool of judges quantifies something's value, and an average is determined. However, what if its value is unknown? In this scenario, economists turn to auction theory or statistical rating systems. "Skill" is one of these cases. The Elo and Glicko rating systems tend to be the go-to when quantifying skill in Boolean games. Elo Rating System: As I started playing competitive chess, I came across the Elo rating system. It is a method of calculating a player's relative skill in zero-sum games. Quantifying ability is helpful to determine how much better some players are, as well as for skill-based matchmaking. It was devised in 1960 by Arpad Elo, a professor of Physics and chess master. The International Chess Federation would soon adopt this rating system to divide the skill of its players. The algorithm's success in categorising people on ability would start to gain traction in other win-lose games; Association football, NBA, and "esports" being just some of the many examples. Esports are highly competitive videogames. The Elo rating system consists of a formula that can determine your quantified skill based on the outcome of your games. The "skill" trend follows a Gaussian bell curve. [Figure 1: Arpad Elo] [Figure 2: Top Elo Ratings for 2014 FIFA World Cup] -
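The snippet mentions a formula that turns game outcomes into a rating but does not state it. A minimal Python sketch of the conventional Elo update follows. The logistic curve with a 400-point scale and the K-factor of 20 are assumptions taken from common chess practice, not from the snippet, and the function names are hypothetical.

```python
def expected_score(rating_a, rating_b, scale=400.0):
    """Expected score of A against B (assumed logistic curve, base 10)."""
    return 1.0 / (1.0 + 10.0 ** (-(rating_a - rating_b) / scale))


def update(rating_a, rating_b, score_a, k=20.0):
    """Return both new ratings after one game.

    score_a is 1 for a win by A, 0.5 for a draw, 0 for a loss.
    k (assumed value) controls how far a single result moves the ratings.
    """
    delta = k * (score_a - expected_score(rating_a, rating_b))
    # Whatever A gains, B loses, so the rating pool stays zero-sum.
    return rating_a + delta, rating_b - delta


if __name__ == "__main__":
    print(update(1500.0, 1500.0, 1.0))  # equal players, A wins
    print(update(1800.0, 1500.0, 0.0))  # favourite loses, larger swing
```

An upset moves the ratings more than an expected result does, because the update is proportional to the gap between the actual and the expected score.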

Extensions of the Elo Rating System for Margin of Victory

Extensions of the Elo Rating System for Margin of Victory. MathSport International 2019, Athens, Greece. Stephanie Kovalchik. How Do We Make Match Forecasts? It Starts with Player Ratings: Assume the $i$th player has some true ability $\theta_i$. Models of player abilities assume game outcomes are a function of the difference in abilities: $\mathrm{Prob}(W_{ij} = 1) = F(\theta_i - \theta_j)$. Paired Comparison Models: Bradley-Terry models are a general class of paired comparison models of latent abilities with a logistic function for win probabilities: $F(\theta_i - \theta_j) = \frac{1}{1 + \alpha^{-(\theta_i - \theta_j)}}$. With BT, player abilities are treated as fixed in time, which is unrealistic in most cases. Bobby Fischer. Fischer's Meteoric Rise. Arpad Elo. In His Own Words. Ability is a Moving Target. Standard Elo can be broken down into two steps: 1. Estimate (E-Step); 2. Update (U-Step). Standard Elo E-Step: For the $t$th match of player $i$ against player $j$, the chance that player $i$ wins is estimated as $\hat{W}_{ijt} = \frac{1}{1 + 10^{-(R_{it} - R_{jt})/\sigma}}$. Elo Derivation: Elo supposed that the ratings of any two competitors were independent and normal with shared standard deviation $\delta$. Given this, he likened the chance of a win to the chance of observing a difference in ratings for ratings drawn from the same distribution, $R_{it} - R_{jt} \sim N(0, 2\delta^2)$, which leads to $P(R_{it} - R_{jt} > 0) = \Phi\left(\frac{R_{it} - R_{jt}}{\sqrt{2}\delta}\right)$ and $\approx 1/(1 + 10^{-(R_{it} - R_{jt})/2\delta})$. Elo's formula was just a hack for the cumulative normal density function. -
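The closing claim of this snippet, that the base-10 logistic expression approximates the normal cumulative distribution, can be checked numerically. The Python sketch below evaluates both expressions from the slide over a range of rating differences. The value δ = 200 is an assumption chosen so that 2δ equals the familiar 400-point scale; the function names are hypothetical.

```python
import math


def win_prob_normal(diff, delta=200.0):
    """Phi(diff / (sqrt(2) * delta)), the normal form from the slide."""
    z = diff / (math.sqrt(2.0) * delta)
    # Standard normal CDF written with the error function.
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def win_prob_logistic(diff, delta=200.0):
    """1 / (1 + 10^(-diff / (2 * delta))), the logistic approximation."""
    return 1.0 / (1.0 + 10.0 ** (-diff / (2.0 * delta)))


if __name__ == "__main__":
    for diff in range(0, 801, 200):
        n = win_prob_normal(diff)
        l = win_prob_logistic(diff)
        print(f"diff={diff:4d}  normal={n:.4f}  logistic={l:.4f}  gap={abs(n - l):.4f}")
```

With this choice of δ the two curves agree exactly at a zero rating difference and stay within about two percentage points of each other across the range shown, with the logistic curve having the heavier tails.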

Portable Game Notation Specification and Implementation Guide

11.10.2020 Standard: Portable Game Notation Specification and Implementation Guide. Source: www.saremba.de/chessgml/standards/pgn/pgn-complete.htm. Authors: Interested readers of the Internet newsgroup rec.games.chess. Coordinator: Steven J. Edwards (send comments to [email protected]). Table of Contents: Colophon; 0: Preface; 1: Introduction; 2: Chess data representation; 2.1: Data interchange incompatibility; 2.2: Specification goals; 2.3: A sample PGN game; 3: Formats: import and export; 3.1: Import format allows for manually prepared data; 3.2: Export format used for program generated output; 3.2.1: Byte equivalence; 3.2.2: Archival storage and the newline character; 3.2.3: Speed of processing; 3.2.4: Reduced export format; 4: Lexicographical issues; 4.1: Character codes; 4.2: Tab characters; 4.3: Line lengths; 5: Commentary; 6: Escape mechanism; 7: Tokens; 8: Parsing games; 8.1: Tag pair section; 8.1.1: Seven Tag Roster; 8.2: Movetext section; 8.2.1: Movetext line justification; 8.2.2: Movetext move number indications; 8.2.3: Movetext SAN (Standard Algebraic Notation); 8.2.4: Movetext NAG (Numeric Annotation Glyph); 8.2.5: Movetext RAV (Recursive Annotation Variation); 8.2.6: Game Termination Markers; 9: Supplemental tag names; 9.1: Player related information; 9.1.1: Tags: WhiteTitle, BlackTitle; 9.1.2: Tags: WhiteElo, BlackElo; 9.1.3: Tags: WhiteUSCF, BlackUSCF; 9.1.4: Tags: WhiteNA, BlackNA; 9.1.5: Tags: WhiteType, BlackType -

Package 'PlayerRatings'

Package 'PlayerRatings', March 1, 2020. Version: 1.1-0. Date: 2020-02-28. Title: Dynamic Updating Methods for Player Ratings Estimation. Author: Alec Stephenson and Jeff Sonas. Maintainer: Alec Stephenson <[email protected]>. Depends: R (>= 3.5.0). Description: Implements schemes for estimating player or team skill based on dynamic updating. Implemented methods include Elo, Glicko, Glicko-2 and Stephenson. Contains pdf documentation of a reproducible analysis using approximately two million chess matches. Also contains an Elo based method for multi-player games where the result is a placing or a score. This includes zero-sum games such as poker and mahjong. LazyData: yes. License: GPL-3. NeedsCompilation: yes. Repository: CRAN. Date/Publication: 2020-03-01 15:50:06 UTC. R topics documented: aflodds, elo, elom, fide, glicko, glicko2, hist.rating, kfide, kgames, krating, kriichi, metrics, plot.rating, predict.rating, riichi, steph. aflodds: Australian Football Game Results and Odds. Description: The aflodds data frame has 675 rows and 9 variables. It shows the results and betting odds for 675 Australian football games played by 18 teams from 26th March 2009 until 24th June 2012. Usage: aflodds. Format: This data frame contains the following columns. Date: a date object showing the date of the game. Week: the number of weeks since 25th March 2009. HomeTeam: the home team name. AwayTeam: the away team name. HomeScore: the home team score. AwayScore: the away team score. Score: a numeric vector giving the value one, zero or one half for a home win, an away win or a draw respectively.