Physical Layer Identification: Methodology, Security, and Origin of Variation
Ryan Gerdes, Iowa State University

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications


VSC8489-10 and VSC8489-13

VSC8489-10 and VSC8489-13 Dual Channel WAN/LAN/Backplane RXAUI/XAUI to SFP+/KR 10 GbE SerDes PHY
Highlights
• IEEE 1588v2 compliant with VeriTime™
• Failover switching and lane ordering
• Simultaneous LAN and WAN support
• RXAUI/XAUI support
• SFP+ I/O with KR support
• 1 GbE support
Applications
• Multiple-port RXAUI/XAUI to SFI/SFP+ line cards or NICs
• 10GBASE-KR compliant backplane transceivers
• Carrier Ethernet networks requiring IEEE 1588v2 timing
• Secure data center to data center interconnects
• 10 GbE switch cards and router cards
Vitesse’s dual channel SerDes PHY provides fully IEEE 1588v2-compliant devices and hardware-based KR support for timing-critical applications, including all industry-standard protocol encapsulations. VeriTime™ is Vitesse’s patent-pending distributed timing technology that delivers the industry’s most accurate IEEE 1588v2 timing implementation. IEEE 1588v2 timing integrated in the PHY is the quickest, lowest cost method of implementing the timing accuracy that is critical to maintaining existing timing-critical capabilities during the migration from TDM to packet-based architectures.
The VSC8489-10 and VSC8489-13 devices support 1-step and 2-step PTP frames for ordinary clock, boundary clock, and transparent clock applications, along with complete Y.1731 OAM performance monitoring capabilities. The devices meet the SFP+ SR/LR/ER/220MMF host requirements in accordance with the SFF-8431 specifications. They also compensate for optical impairments in SFP+ applications, along with degradations of the PCB. The devices provide full KR support, including KR state machine, for autonegotiation and link optimization. The transmit path incorporates a multitap output driver to provide flexibility to meet the demanding 10GBASE-KR (IEEE 802.3ap) Tx output launch requirements.

IEEE 802.3 Working Group November 2006 Plenary Week

IEEE 802.3 Working Group November 2006 Plenary Week
Robert M. Grow, Chair, IEEE 802.3 Working Group, [email protected]
Web site: www.ieee802.org/3
13 November 2006, IEEE 802 November Plenary
Current IEEE 802.3 activities
• P802.3ap, Backplane Ethernet
• P802.3aq, 10GBASE-LRM (Published)
• P802.3ar, Congestion Management
• P802.3as, Frame Format Extensions (Approved)
• P802.3at, DTE Power Enhancements
• P802.3av, 10 Gb/s EPON
• Higher Speed Study Group (New)
P802.3ap Backplane Ethernet
• Define Ethernet operation over electrical backplanes
  - 1 Gb/s serial
  - 10 Gb/s serial
  - 10 Gb/s XAUI-based 4-lane
  - Autonegotiation
• In Sponsor ballot
• Meeting plan
  - Complete resolution of comments on P802.3ap/D3.1, 1st recirculation Sponsor ballot
  - Possibly request conditional approval for submittal to RevCom
P802.3aq 10GBASE-LRM
• Extends Ethernet capabilities at 10 Gb/s
  - New physical layer to run under 802.3ae specified XGMII
  - Operation over FDDI-grade multi-mode fiber
• Approved by Standards Board at September meeting
• Published 16 October 2006
• No meeting
  - Final report to 802.3
P802.3ar Congestion Management
• Proposed modified project documents failed to gain consensus support in July
• Motion to withdraw the project was postponed to this meeting
• Current draft advancement to WG ballot was not considered in July
• Meeting plan
  - Determine future of the project
  - Reevaluate

Customer Issues and the Installed Base of Cabling

Customer and Market Issues: 10 Gbps Ethernet on Category 5 or Better Cabling
Bruce Tolley, Cisco Systems, Inc, [email protected]
IEEE 802.3 Interim January 2003
GbE Switch Ports: Fiber vs Copper
[Chart: ports (000s) per year, 1997 to 2002, for Total, Fiber, and Copper; annotations: 802.3z STD 6/98, 802.3ab STD 6/99. Source: Dell’Oro 2002]
Success of GbE on Copper
• It is 10/100/1000 Mbps
• It runs Cat5, 5e and 6
• It does not obsolete the installed base
• It does not require both ends of the link to be upgraded at the same time
10 GbE Layer Diagram
• Media Access Control (MAC), Full Duplex
• 10 Gigabit Media Independent Interface (XGMII) or 10 Gigabit Attachment Unit Interface (XAUI)
• CWDM LAN PHY (8B/10B): CWDM PMD 1310 nm (-LX4)
• Serial LAN PHY (64B/66B): Serial PMD 850 nm (-SR), 1310 nm (-LR), 1550 nm (-ER)
• Serial WAN PHY (64B/66B + WIS): Serial PMD 850 nm (-SW), 1310 nm (-LW), 1550 nm (-EW)
Source: Cisco Systems
Pluggable 10 GbE Modules: The Surfeit of SKUs
10GBASE PMDs by module type (XENPAK with XAUI, X2/XPAK with XAUI, XFP): -SR X X; -LR X X X; -ER X X; -LX4 X X; -CX4 X X; -T X X X; CWDM, DWDM X X X
Please: No new pluggable for 10GBASE-T!
Cumulative World-wide Shipments
[Chart: cumulative world-wide shipments by cabling category (Cat 5, Cat 5e, Cat 6, Cat 7); labels: Cat5 59%, Cat5 51%]

SGI® IRIS® Release 2 Dual-Port Gigabit Ethernet Board User's Guide

SGI® IRIS® Release 2 Dual-Port Gigabit Ethernet Board User’s Guide 007-4324-001 CONTRIBUTORS Written by Matt Hoy and updated by Terry Schultz Illustrated by Dan Young and Chrystie Danzer Production by Karen Jacobson Engineering contributions by Jim Hunter and Steve Modica COPYRIGHT © 2002, 2003, Silicon Graphics, Inc. All rights reserved; provided portions may be copyright in third parties, as indicated elsewhere herein. No permission is granted to copy, distribute, or create derivative works from the contents of this electronic documentation in any manner, in whole or in part, without the prior written permission of Silicon Graphics, Inc. LIMITED RIGHTS LEGEND The electronic (software) version of this document was developed at private expense; if acquired under an agreement with the US government or any contractor thereto, it is acquired as “commercial computer software” subject to the provisions of its applicable license agreement, as specified in (a) 48 CFR 12.212 of the FAR; or, if acquired for Department of Defense units, (b) 48 CFR 227-7202 of the DoD FAR Supplement; or sections succeeding thereto. Contractor/manufacturer is Silicon Graphics, Inc., 1600 Amphitheatre Pkwy 2E, Mountain View, CA 94043-1351. TRADEMARKS AND ATTRIBUTIONS Silicon Graphics, SGI, the SGI logo, IRIS, IRIX, Octane, Onyx, Onyx2, and Origin are registered trademarks, and Octane2, Silicon Graphics Fuel, and Silicon Graphics Tezro are trademarks of Silicon Graphics, Inc., in the United States and/or other countries worldwide. FCC WARNING This equipment has been tested and found compliant with the limits for a Class A digital device, pursuant to Part 15 of the FCC rules. -

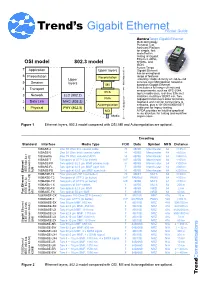

Gigabit Ethernet Pocket Guide

Trend’s Gigabit Ethernet Pocket Guide
Keywords: Carrier Class Ethernet, Metro Ethernet tester, Metro Ethernet testing, Metro Ethernet installation, Metro Ethernet maintenance, Metro Ethernet commissioning, Carrier Class Ethernet tester, Carrier Class Ethernet testing, Carrier Class Ethernet installation, Carrier Class Ethernet maintenance, Gigabit Ethernet tester, Gigabit Ethernet testing, Gigabit Ethernet installation, Gigabit Ethernet maintenance, Gigabit Ethernet commissioning, Gigabit Ethernet protocols, 1000BASE-T tester, 1000BASE-LX test, 1000BASE-SX test, 1000BASE-T testing, 1000BASE-LX testing
AuroraTango Gigabit Ethernet: Multi-technology Personal Test Assistant. Platform for simple, fast and effective testing of Gigabit Ethernet, ADSL, SHDSL, and ISDN.
Aurora Tango Gigabit Ethernet has an exceptional range of features ensuring reliable delivery of end-to-end services over Metropolitan networks based on Gigabit Ethernet. It includes a full range of tests and measurements, such as RFC-2544, top ten addresses, real-time Ethernet statistics, multilayer BERT, etc. Two Gigaport transceivers allow terminate, loopback and monitor connections to networks, plus a 10/100/1000BASE-T cable port for legacy testing. A PDA provides an intuitive graphical menu
[Figure: OSI model (7 Application, 6 Presentation, 5 Session, 4 Transport, 3 Network, 2 Data Link, 1 Physical) alongside the 802.3 model (Upper layers, LLC (802.2), MAC (802.3), Reconciliation, MII, PCS, PMA, Autonegotiation, MDI, Media; PHY (802.3) split into media independent and media dependent parts)]

Cisco Presentation Guide

Ethernet
Petr Grygárek
© 2005 Petr Grygarek, Advanced Computer Networks Technologies
Ethernet History
• Research background: AlohaNet
• University of Hawaii, 1970: common (radio) channel sharing methods, the basis for CSMA/CD
• 1980: DIX published Ethernet standard (Metcalfe)
• 1985: IEEE 802.3 (MAC and LLC layers)
• 10Base5, 10Base2, 10BaseT
• 1995 IEEE 802.3u (Fast Ethernet)
• 1998 IEEE 802.3z (Gigabit Ethernet)
• 2002 IEEE 802.3ae (10Gb Ethernet)
• ...
Ethernet Naming Rules (IEEE Standard)
Mbps [Base|Broad] [segment_length_m | -medium]
-T - Twisted Pair, -F - Fiber optic, ...
e.g. 10Base5, 10BaseT, 100BaseF
Name of each particular Ethernet technology defined in individual supplements of 802.3 standard
• e.g. 802.3u, 802.3ab, 802.3z
Half-duplex and Full-duplex Ethernet
• Half duplex: collision environment

AH1721 SJA1105SMBEVM User Manual Rev

AH1721 SJA1105SMBEVM User Manual, Rev. 1.00, 16 July 2018
NXP Semiconductors
Keywords: SJA1105PQRS, SJA1105S, SJA1105S Evaluation Board, SJA1105SMBEVM, Cascading, Ethernet, Wakeup, Sleep
Abstract: The SJA1105SMBEVM Evaluation Board is described in this document.
Revision history: Rev 1.00, 20180716, Release
Contact information: For more information, please visit: http://www.nxp.com For sales office addresses, please send an email to: mailto:[email protected]
All information provided in this document is subject to legal disclaimers. © NXP Semiconductors N.V. 2018. All rights reserved.
1. Introduction
The SJA1105SMBEVM (SJA1105S Mother Board Evaluation Module, see Fig 1) is designed for evaluating the capabilities of the SJA1105P/Q/R/S Automotive Ethernet switch family and the TJA1102 Automotive Ethernet PHYs, by developing and running customer software. Therefore, the board features the MPC5748G MCU with a rich set of peripherals for communication and automotive applications. It is designed to allow early adaptation of applications, such as an X-to-Ethernet gateway, communication hub, etc.
Fig 1. SJA1105SMBEVM board
This user manual describes the board hardware and the software development environment so that the user can quickly bring up a working system and then move towards their own customized software and, ultimately, an all-custom system. After the HW description, the manual walks the user through the process of creating the factory firmware, starting from the supplied example project, and describes the recommended SW development environment, S32 Design Studio for Power, as far as it is needed for the example project.

8-Port Gigabit Switch

GSW-0809 Version: 3
8-Port Gigabit Switch
The LevelOne GSW-0809 Gigabit Ethernet Switch is designed for reliable high-performance networking. With its non-blocking switching fabric in full-duplex mode, this switch can deliver up to 2000Mbps per port, while the Store-and-Forward service brings low latency and error-free packet delivery. This high-performance Gigabit Ethernet switch is perfect for fulfilling the demands of online gaming and multimedia streaming. Moreover, this switch also supports IEEE 802.3az Energy Efficient Ethernet, which helps reduce power consumption.
Power Saving Features
The GSW-0809 supports IEEE 802.3az Energy Efficient Ethernet to provide power-saving benefits without compromising performance. One way it does this is by automatically detecting the length of connected cables and reducing power usage on shorter cable connections.
Internal Power
With a built-in power supply, the GSW-0809 is simple to install and use. No bulky adapters will hang on the wall and get in the way of your other electronic devices. Keep your desktop and computer area free from the clutter of cords!
Easy to Use
The GSW-0809 features plug-and-play for easy installation. No configuration is required. Autonegotiation on each port senses the link speed of a network device and adjusts for compatibility and optimal performance.
Key Features
- 8 Gigabit Ethernet ports
- 10/100/1000Mbps wire speed transmission and reception
- 9K jumbo frames to increase data transfer rates
- Supports 8K MAC address auto-learning and auto-aging

Ethernet at New Speeds, Deterministic Networking, and Power Over Everything!

Ethernet Evolving: Ethernet at New Speeds, Deterministic Networking, and Power over Everything! Dave Zacks Distinguished System Engineer @DaveZacks Peter Jones Principal Engineer @petergjones #NBASET BRKCRS-3900 #HighBitrate Ethernet Evolving: Ethernet at New Speeds, Deterministic Networking, and Power over Everything! BRKCRS-3900 – Session Overview We have a great session for you today … so sit back, buckle in, and let’s get started! Come to this session to get geared up on the latest developments in Ethernet for Campus and Branch networks. The Ethernet we all know and love is evolving at a rapid pace. With the release of MultiGigabit Ethernet on Cisco's latest Catalyst access switching platforms, you can connect new devices that support MultiGigabit connections at 2.5Gb/s and 5Gb/s over Cat5e/6 copper. No longer are 1Gbps and 10Gbps the only connectivity options – intermediate speeds are now possible. Cisco’s Multigigabit Technology is a key enabler for upcoming 802.11ac Wave 2 wireless deployments, where the Ethernet bandwidth required per AP exceeds 1Gb/s. Multigigabit switches also support other high-bandwidth devices in the Ethernet access layer without requiring expensive and time-consuming re-cabling for 10GBASE-T. We will talk about how Cisco MultiGigabit switches work, and how they will change your network. As well, we will discuss work being done in (and out of) the standards bodies to broaden the footprint for Ethernet, and enable the Internet of Everything. This includes additional Ethernet speeds, capabilities for deterministic networking functionality over an Ethernet infrastructure (precision timing, pre-emption, time scheduling, etc), and of course we need to provide more Power over Ethernet to more devices, in more places, than ever. -

P802.3Ah Draft 2.0 Comments

P802.3ah Draft 2.0 Comments

Cl 00 SC P L # 952
Thompson, Geoff, Nortel
Comment Type TR, Comment Status R
What is being proposed in many places throughout this draft is not a peer network. To introduce such a foreign concept into a document where the implicit and explicit notion of peer relationships is so thoroughly infused throughout the existing document is likely to cause (a) significant confusion and (b) significant errors.
SuggestedRemedy: Move non-peer proposals to a new and separate document that can thoroughly, explicitly and unambiguously embrace the concept of Ethernet Services over asymmetrical infrastructure.
Proposed Response, Response Status U: REJECT. The suggested remedy is ambiguous. What are "the non-peer proposals"? What is the "new and separate document"? The draft in its current form satisfies the PAR and 5 Criteria for the project, which call for an amendment to IEEE Std 802.3, formatted as a set of clauses.

Cl 00 SC P L # 1248
Lee Sendelbach, IBM
Comment Type E, Comment Status A
Fix all the references with *ref*. Like 60.9.4, 60.8.13.2.1, 60.8.13.1 60.8.11 60.1 I don't understand what is going on with the *refs. Also fix #CrossRef# in 64.1
SuggestedRemedy: Fix it.
Proposed Response, Response Status C: ACCEPT IN PRINCIPLE. These references are intended for the use of the editors to search for cross references. All these will be removed at time of publication as indicated in the editor's note boxes

Cl 00 SC P L # 951
Thompson, Geoff, Nortel
Comment Type TR, Comment Status A (reassigned)
I have a problem with the use of the term "loopback" for the diagnostic return path being

Ethernet PHY Transceiver Characterization ESA AO/1-8074/14/NL/LF

Ethernet PHY Transceiver Characterization ESA AO/1-8074/14/NL/LF
C. Plettner, L. Buttelmann, Airbus DS
F. Guettache, G. Magistrati, ESA/ESTEC
H. Kettunen, A. Virtanen, University of Jyväskylä
10 May 2017
Confidential: This document and its content is the property of Astrium [Ltd/SAS/GmbH] and is strictly confidential. It shall not be communicated to any third party without the written consent of Astrium [Ltd/SAS/GmbH].
Overview
• International context and ESA/Airbus DS vision
• Project layout
• Developments: electronics (PCB motherboards) and software (data acquisition)
• Environmental and radiation test results
• Conclusion and Outlook
International Context as Motivation
• Time Triggered Ethernet (TTECH) technology gained worldwide momentum in the automotive and (aero)space industry.
• NASA and Honeywell promote TTECH as baseline for the on board data bus system. Honeywell technology is rad-hard and ITAR protected.
• Large scale space projects deploying TTECH:
  - NASA/ESA Orion lunar mission to the Moon
  - ESA next generation launcher Ariane 6
• Strategically important to safeguard and adapt commercial ITAR free technology with respect to one of the building blocks of TTECH which is PHY transceiver.
Objectives
• Investigate the possibility to use commercial off the shelf Ethernet transceivers components for space use to circumvent the costly radiation-hardened development
• Perform a trade off and choose three-best transceiver manufacturers in a defined metric
• Run a full space qualification campaign on the parts

Siemens SIMATIC NET Industrial Ethernet Brochure

Industrial Ethernet Switching
Brochure · June 2010
SIMATIC NET
Answers for industry.
© Siemens AG 2010
Industrial Ethernet Networking
With Totally Integrated Automation, Siemens is the only manufacturer to offer an integrated range of products and systems for automation in all sectors, from incoming goods and the production process to outgoing goods, from the field level through the production control level, to connection with the corporate management level. SIMATIC NET offers all the components for industrial communication: from industrial communications processors right up to network components, even wireless if required.
The ever expanding spread of Ethernet in the industrial environment makes it increasingly important to structure the resulting Industrial Ethernet/PROFINET networks.
To achieve maximum uniformity of the networks and seamless integration of the industrial plants, SIMATIC NET offers different Industrial Ethernet switching components: active network components for use direct at the SIMATIC system, as stand-alone devices or as plug-in communications processors with integral switch for PCs and SIMATIC.
They are used for the structured networking of machines and plants as well as for integrating them into the overall corporate network. A graded portfolio of switches (SCALANCE X) and communications processors with integral switches enables optimum solutions for all types of switching tasks, not only in harsh industrial environments.
To assist in selecting the right Industrial Ethernet switches as well as configuration of modular variants, the Switch Selection Tool is available as a free download at: www.siemens.de/switchselection