Classifying Paintings by Artistic Genre: an Analysis of Features & Classifiers

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The Pennsylvania State University the Graduate School College Of

The Pennsylvania State University The Graduate School College of Arts and Architecture CUT AND PASTE ABSTRACTION: POLITICS, FORM, AND IDENTITY IN ABSTRACT EXPRESSIONIST COLLAGE A Dissertation in Art History by Daniel Louis Haxall © 2009 Daniel Louis Haxall Submitted in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy August 2009 The dissertation of Daniel Haxall has been reviewed and approved* by the following: Sarah K. Rich Associate Professor of Art History Dissertation Advisor Chair of Committee Leo G. Mazow Curator of American Art, Palmer Museum of Art Affiliate Associate Professor of Art History Joyce Henri Robinson Curator, Palmer Museum of Art Affiliate Associate Professor of Art History Adam Rome Associate Professor of History Craig Zabel Associate Professor of Art History Head of the Department of Art History * Signatures are on file in the Graduate School ii ABSTRACT In 1943, Peggy Guggenheim’s Art of This Century gallery staged the first large-scale exhibition of collage in the United States. This show was notable for acquainting the New York School with the medium as its artists would go on to embrace collage, creating objects that ranged from small compositions of handmade paper to mural-sized works of torn and reassembled canvas. Despite the significance of this development, art historians consistently overlook collage during the era of Abstract Expressionism. This project examines four artists who based significant portions of their oeuvre on papier collé during this period (i.e. the late 1940s and early 1950s): Lee Krasner, Robert Motherwell, Anne Ryan, and Esteban Vicente. Working primarily with fine art materials in an abstract manner, these artists challenged many of the characteristics that supposedly typified collage: its appropriative tactics, disjointed aesthetics, and abandonment of “high” culture. -

Unification of Art Theories (UAT) - A Long Manifesto

UNIFICATION OF ART THEORIES (UAT) - A LONG MANIFESTO - by FLORENTIN SMARANDACHE ABSTRACT. “Outer-Art” is a movement set up as a protest against, or to ridicule, the random modern art which states that everything is… art! It was initiated by Florentin Smarandache, in the 1990s, who ironically called for an upside-down artwork: to do art in a way it is not supposed to be done, i.e. to make art as ugly, as silly, as wrong as possible, and generally as impossible as possible! He published three such (outer-)albums, the second one called “oUTER-aRT, the Worst Possible Art in the World!” (2002). FLORENTIN SMARANDACHE Excerpts from his (outer-)art theory: <The way of how not to write, which is an emblem of paradoxism, was later on extended to the way of how not to paint, how not to design, how to not sculpture, until the way of how not to act, or how not to sing, or how not to perform on the stage – thus: all reversed. Only negative adjectives are cumulated in the outer-art: utterly awful and uninteresting art; disgusting, execrable, failure art; garbage paintings: from crumpled, dirty, smeared, torn, ragged paper; using anti-colors and a-colors; naturalist paintings: from wick, spit, urine, feces, any waste matter; misjudged art; self-discredited, ignored, lousy, stinky, hooted, chaotic, vain, lazy, inadequate art (I had once misspelled 'rat' instead of 'art'); obscure, unremarkable, syncopal art; para-art; deriding art expressing inanity and emptiness; strange, stupid, nerd art, in-deterministic, incoherent, dull, uneven art... as made by any monkey!… the worse the better!> 2 A MANIFESTO AND ANTI-MANIFESTO FOR OUTER-ART I was curious to learn new ideas/ schools /styles/ techniques/ movements in arts and letters. -

Women of Abstract Expressionism

WOMEN OF ABSTRACT EXPRESSIONISM EDITED BY JOAN MARTER INTRODUCTION BY GWEN F. CHANZIT, EXHIBITION CURATOR DENVER ART MUSEUM IN ASSOCIATION WITH YALE UNIVERSITY PRESS NEW HAVEN AND LONDON
Published on the occasion of the exhibition Women of Abstract Expressionism, organized by the Denver Art Museum. Denver Art Museum, June-September 2016; Mint Museum, Charlotte, North Carolina, October-January 2017; Palm Springs Art Museum, February-May 2017; Whitechapel Gallery, London, June-September 2017.
Women of Abstract Expressionism is organized by the Denver Art Museum. It is generously funded by Merle Chambers; the Henry Luce Foundation; the National Endowment for the Arts; the Ponzio family; DAM U.S. Bank; Christie's; Barbara Bridges; Contemporaries, a support group of the Denver Art Museum; the Joan Mitchell Foundation; the Dedalus Foundation; Bette MacDonald; the Deborah Remington Charitable Trust for the Visual Arts; the donors to the Annual Fund Leadership Campaign; and the citizens who support the Scientific and Cultural Facilities District (SCFD). We regret the omission of sponsors confirmed after February 15, 2016.
Denver Art Museum: Director of Publications: Laura Caruso; Curatorial Assistant: Renee B. Miller. Yale University Press: Publisher, Art and Architecture: Patricia Fidler; Editor, Art and Architecture: Katherine Boller; Production Manager: Mary Mayer. Designed by Rita Jules, Miko McGinty Inc. Set in Benton Sans type by Tina Henderson. Printed in China through Oceanic Graphic International, Inc.
Library of Congress Control Number: 2015948690. ISBN 978-0-300-20842-9 (hardcover); 978-0914738-62-6 (paperback). A catalogue record for this book is available from the British Library. This paper meets the requirements of ANSI/NISO Z39.48-1992 (Permanence of Paper). -

Download the Publication

LOST LOOSE AND LOVED FOREIGN ARTISTS IN PARIS 1944-1968 The exhibition Lost, Loose, and Loved: Foreign Artists in Paris, 1944–1968 concludes the year 2018 at the Museo Nacional Centro de Arte Reina Sofía with a broad investigation of the varied Parisian art scene in the decades after World War II. The exhibition focuses on the complex situation in France, which was striving to recuperate its cultural hegemony and recompose its national identity and influence in the newly emerging postwar geopolitical order of competing blocs. It also places a particular focus on the work of foreign artists who were drawn to the city and contributed to creating a stimulating, productive climate in which intense discussion and multiple proposals prevailed. Cultural production in a diverse, continuously transforming postwar Paris has often been crowded out by the New York art world, owing both to a skillful exercise of American propaganda that had spellbound much of the criticism, market, and institutions, as well as later to the work of canonical art history with its celebration of great names and specific moments. Dismissed as secondary, minor, or derivative, art practices in those years, such as those of the German artist Wols, the Dutch artist Bram van Velde, or the Portuguese artist Maria Helena Vieira da Silva, to name just a few, lacked the single cohesive image that the New York School offered with Abstract Expressionism and its standard bearer, Jackson Pollock. In contrast, in Paris there existed a multitude of artistic languages and positions coexisting -

History of Art FJ Display

Expressive Art Timeline
Neo-Classicism, c.1765 - 1830. A return to the purity of Rome and Ancient Greece. Sharp colours and bold contrast. Subject: Ancient Greece, mythology. Jacques-Louis David: Oath of the Horatii, 1784; Jean Auguste Dominique Ingres: Roger and Angelique, 1819.
Romanticism, c.1780 - 1840. Some human experiences are beyond logic. Reaction against the rationalization of nature and the Age of Reason. Sharp colours and bold contrast, similar to Neo-Classicism. Subject: awesome power of nature (e.g. storms), patriotism. Eugène Delacroix: Liberty Leading the People, 1830; Théodore Géricault: The Raft of the Medusa, 1819.
Realism, c.1800 - 1899. Realistic works of modern everyday life. Reaction against traditional art methods and techniques; the first to work outdoors. Muted, more natural colours. Subject: country life, peasants, landscapes. Jean-François Millet: The Gleaners, 1857; Honoré Daumier: Le Wagon de Troisième Classe, 1864.
Impressionism, c.1860 - 1890. Capturing the flickering light. Reaction against all academic styles of painting. Visible brushstrokes, emphasis of light, shadows painted blue, short thick strokes of paint. Subject: landscapes, ordinary subjects from unusual angles. Claude Monet: Water Lilies (The Clouds), 1903; Pierre-Auguste Renoir: Dance at Le Moulin de la Galette, 1876.
Post-Impressionism, 1886 - 1892. Artistic experimentation, new styles are born. Retained the vivid colours and thick application of paint but preferred more formal compositions to that of the Impressionists. Subject: landscapes, portraits. Gauguin, Cézanne, Van Gogh. Vincent van Gogh: Starry Night, 1889; Paul Gauguin: Gardanne, 1886.
Pointillism, 1886 - 1892. Using dots to form an image. Influenced by Impressionism, using dots of colour. Subject: landscapes, portraits. Georges Seurat, Paul Signac. Georges Seurat: A Sunday Afternoon on the Island of La Grande Jatte, 1886; detail from Seurat's La Parade de Cirque (1889).
Fauvism, 1905 - 1915. The paintings of wild beasts. Celebration of spontaneity. Simplified lines and brilliant but random colours. -

Unification of Art Theories (UAT)

UNIFICATION OF ART THEORIES (UAT) Mail oUTER-aRT 2007 FLORENTIN $MARANDACHE, Ph. D. Associate Professor Math & Science Department Chair University of New Mexico 200 College Road Gallup, NM 87301, USA [email protected] http://www.gallup.unm.edu/~smarandache/a/oUTER-aRT.htm Unification of Art Theories (UAT) =composed, found, changed, modified, altered, graffiti, polyvalent art= Unification of Art Theories (UAT) considers that every artist should employ - in producing an artwork – ideas, theories, styles, techniques and procedures of making art borrowed from various artists, teachers, schools of art, movements throughout history, but combined with new ones invented, or adopted from any knowledge field (science in special, literature, etc.), by the artist himself. The artist can use a multi-structure and multi-space. The distinction between Eclecticism and Unification of Art Theories (UAT) is that Eclecticism is supposed to select among the previous schools and teachers and procedures - while UAT requires not only selecting but also inventing, or adopting from other (non-artistic) fields, new procedures. In this way UAT pushes forward the art development. Also, UAT now has a larger artistic database to choose from than the 16th century Eclecticism, since new movements, art schools, styles, ideas, procedures of making art have been accumulated in the meantime. Like a guide, the UAT database should periodically be updated, changed, enlarged with newly invented or adopted-from-any-field ideas, styles, art schools, movements, experimentation techniques, artists. It is an open increasing essay to include everything that has been done throughout history. This book presents a short panorama of commented art theories, together with experimental digital images using adopted techniques from various fields, in order to inspire the actual artists to choose from, and also to invent or adopt new procedures in producing their artworks. -

Complete Dissertation

Table of Contents Page Title page 2 Abstract 3 Acknowledgements 4 Introduction 5 Chapter 1 A brief biography of E J Power 8 Chapter 2 Post-war collectors of contemporary art. 16 Chapter 3 A Voyage of Discovery – Dublin, London, Paris, Brussels, Copenhagen. 31 Chapter 4 A further voyage to American and British Abstraction and Pop Art. 57 Conclusion 90 List of figures 92 Appendices 93 Bibliography 116 JUDGEMENT BY EYE THE ART COLLECTING LIFE OF E. J. POWER 1950 to 1990 Ian S McIntyre A thesis submitted in partial fulfilment of the requirements for the degree of MASTER OF ARTS THE UNIVERSITY OF EAST ANGLIA September 2008 Abstract Ian S McIntyre 2008 JUDGEMENT BY EYE The art collecting life of E J Power The thesis examines the pattern of art collecting of E J Power, the leading British patron of contemporary painting and sculpture in the period after the Second World War from 1950 to the 1970s. The dissertation draws attention to Power’s unusual method of collecting which was characterised by his buying of work in quantity, considering it in depth and at leisure in his own home, and only then deciding on what to keep or discard. Because of the auto-didactic nature of his education in contemporary art, Power acquired work from a wide cross section of artists and sculptors in order to interrogate the paintings in his own mind. He paid particular attention to the works of Nicolas de Stael, Jean Dubuffet, Asger Jorn, Sam Francis, Barnett Newman, Ellsworth Kelly, Francis Picabia, William Turnbull and Howard Hodgkin. -

Walter Plate

Walter Plate East End Abstractions Pollock-Krasner House and Study Center 1 August – 31 October 2019 Organized by Helen A. Harrison Eugene V. and Clare E. Thaw Director Pollock-Krasner House and Study Center Catalog essay by Marc Plate The exhibition and catalog are supported by the Pollock-Krasner Endowment and the Research Foundation, Stony Brook University, designated by Drs. Bobbi and Barry Coller On the front cover: Georgica Beach – The Hamptons, 1971 Oil on canvas, 60 x 66 inches Catalog number 9 Photograph courtesy of Levis Fine Art, New York On the back cover: Walter Plate in his studio, Woodstock, ca. 1960 Photograph courtesy of Marc Plate Foreword Helen A. Harrison More than three decades ago, when I was the curator of Guild Hall Museum in East Hampton, I was contacted by a local man named William Plate. He asked if I could advise him about some paintings he owned, so I visited his home, where he showed me some works by his late brother, Walter. They needed conservation, and I gave him a couple of recommendations. I asked if he and Walter were related to Robert Plate, whom I had met in Woodstock in the mid 1970s, and he said yes, Robert was their elder brother. Looking back, it seems odd that I hadn’t encountered Plate’s work while I was the research assistant for the 1977 Vassar College exhibition celebrating the seventy-fifth anniversary of the Woodstock art colony. Considering Plate’s prominence in Woodstock—his longstanding residence, his role in the Art Students League summer school, and his family still living there—how had he escaped my notice? Perhaps the fact that he had died a few years earlier accounted for his work’s lack of visibility at that moment. -

A Curator Finds Inspiration in Abstract...The American Fine Arts

5/9/2019 A Curator Finds Inspiration In Abstract Expressionism at The American Fine Arts Society Gallery A CURATOR FINDS INSPIRATION IN ABSTRACT EXPRESSIONISM AT THE AMERICAN FINE ARTS SOCIETY GALLERY By Gary Du | April 3, 2019 | Culture As an artist, James Little found inspiration in creating his own abstract impressionism paintings. Now he seeks to bring light to others in the movement as a curator with an exhibition entitled, "New York - Centric," now open through May 1. "I picked these artists because of the way they bridged the second generation of abstract expressionism," says Little of his contemporaries Ronnie Landfield, Dan Christensen, Margaret Neill and Robert Swain, among others, whose art appears alongside his in the gallery. "Despite other movements, they stuck with 20th-century modernism and painting... and I looked for artists who developed some sort of relationship with those ideas and the medium of paint." The pieces span the latter half of the 20th century and offer a vision of art in the onset of the 21st century, giving the viewer a sense of history as they walk through the multi-room exhibit. The striking thing is the space's versatility, with each piece complementing another as though brother and sister. The family resemblance is clear. Margaret Neill poses beside her painting, PILOT, at the "New York - Centric exhibit" at The American Fine Arts Society Gallery. -

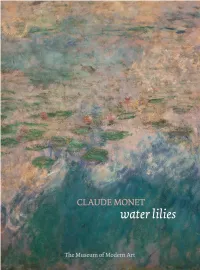

Water Lilies

Ann Temkin and Nora Lawrence CLAUDE MONET water lilies The Museum of Modern Art NEW YORK Historical Note Claude Monet rented a house in Giverny, France, in 1883, purchasing its property in 1890. In 1893 he bought an additional plot of land, across a road and a set of railroad tracks from his house, and there embarked on plans to transform an existing small pond into a magnificent water garden, filled with imported lilies and spanned by a Japanese-style wooden bridge (fig. 1). This garden setting may well signify “nature,” but it was not a purely natural site. Monet lavished an extraordinary amount of time and money on the upkeep and eventual expansion of the pond and the surrounding grounds, ultimately employing six gardeners. Itself a cherished work of art, the garden was the subject of many easel-size paintings Monet made at the turn of the new century and during its first decade. Beginning in 1903 he began to concentrate on works that dispensed with the conventional structure of landscape painting—omitting the horizon line, the sky, and the ground—and focused directly on the surface of the pond and its reflections, sometimes including a hint of the pond’s edge to situate the viewer in space (see fig. 2). Compared with later depictions of the pond (see fig. -
fig. 1 HENRI CARTIER-BRESSON Untitled (Monet’s water lily pond in Giverny), c. 1952 Gelatin-silver print
fig. 2 CLAUDE MONET Water Lily Pond, 1904 Oil on canvas, 35 3/8 x 36 1/4" (90 x 92 cm)

Abstract Expressionism

Abstract expressionism was an American post–World War II art movement. It was the first specifically American movement to achieve worldwide influence and put New York City at the center of the western art world, a role formerly filled by Paris. Although the term "abstract expressionism" was first applied to American art in 1946 by the art critic Robert Coates, it had been first used in Germany in 1919 in the magazine Der Sturm, regarding German Expressionism. In the USA, Alfred Barr was the first to use this term in 1929 in relation to works by Wassily Kandinsky.[1] The movement's name is derived from the combination of the emotional intensity and self-denial of the German Expressionists with the anti-figurative aesthetic of the European abstract schools such as Futurism, the Bauhaus and Synthetic Cubism. Additionally, it has an image of being rebellious, anarchic, highly idiosyncratic and, some feel, nihilistic.[2]
Jackson Pollock, No. 5, 1948, oil on fiberboard, 244 x 122 cm. (96 x 48 in.), private collection.
Style
Technically, an important predecessor is surrealism, with its emphasis on spontaneous, automatic or subconscious creation. Jackson Pollock's dripping paint onto a canvas laid on the floor is a technique that has its roots in the work of André Masson, Max Ernst and David Alfaro Siqueiros. Another important early manifestation of what came to be abstract expressionism is the work of American Northwest artist Mark Tobey, especially his "white writing" canvases, which, though generally not large in scale, anticipate the "all-over" look of Pollock's drip paintings. -

A Hidden Reserve: Painting from 1958 to 1965 - Artforum International

A HIDDEN RESERVE: PAINTING FROM 1958 TO 1965 IN THE LATE 1950s, painting celebrated some of the greatest triumphs in its history, grandly ordained as a universal language of subjective and historical experience in major shows and touring exhibitions. But only a short while later, its very right to exist was fundamentally questioned. Already substantially weakened by the rise of Happenings and Pop art, painting was shoved aside by art critics during the embattled ascendancy of Minimalism in the mid-’60s. Since then, painters and their champions alike have tirelessly pondered the reasons for their chosen medium’s downfall, its abandonment by advanced theoretical discourse. It is not coincidental that “Painting: The Task of Mourning” is the title of Yve-Alain Bois’s seminal 1986 essay (republished in his 1990 book Painting as Model), which remains the last ambitious attempt to outline a history of modern painting and its endgames.1 For Bois, painting will doubtless survive beyond its postulated conclusions. But for the past several decades, we nevertheless seem to have been presented with only two possible outcomes: the unrelenting yet joyful endgame, a celebration of past painterly devices; and its complement, “bad painting,” the cynical appropriation and parody of the medium’s former claims (as when Andy Warhol, Albert Oehlen, and Merlin Carpenter employ avant-garde strategies of montage or monochrome as farce or pose). But how was painting forced to