Take A Way: Exploring the Security Implications of AMD's Cache Way Predictors

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Effective Virtual CPU Configuration with QEMU and Libvirt

Effective Virtual CPU Configuration with QEMU and libvirt. Kashyap Chamarthy, Open Source Summit Edinburgh, 2018. Timeline of recent CPU flaws, 2018 (a): Jan 03: Spectre v1 (Bounds Check Bypass); Jan 03: Spectre v2 (Branch Target Injection); Jan 03: Meltdown (Rogue Data Cache Load); May 21: Spectre-NG (Speculative Store Bypass); Jun 21: TLBleed (side-channel attack over shared TLBs). Timeline of recent CPU flaws, 2018 (b): Jun 29: NetSpectre (side-channel attack over local network); Jul 10: Spectre-NG (Bounds Check Bypass Store); Aug 14: L1TF ("L1 Terminal Fault"); ...: ? What this talk is not about. Out of scope: internals of various side-channel attacks; how to exploit Meltdown & Spectre variants; details of performance implications. Related talks are in the ‘References’ section. KVM-based virtualization components: OpenStack, et al.; libguestfs (guestfish); Virt Driver; Custom Appliance; libvirtd; QMP; QEMU; VM1, VM2; Disk1, Disk2; ioctl(); Linux with KVM. -

Thermal Interface Material Comparison: Thermal Pads Vs

Thermal Interface Material Comparison: Thermal Pads vs. Thermal Grease Publication # 26951 Revision: 3.00 Issue Date: April 2004 © 2004 Advanced Micro Devices, Inc. All rights reserved. The contents of this document are provided in connection with Advanced Micro Devices, Inc. (“AMD”) products. AMD makes no representations or warranties with respect to the accuracy or completeness of the contents of this publication and reserves the right to make changes to specifications and product descriptions at any time without notice. No license, whether express, implied, arising by estoppel, or otherwise, to any intellectual property rights are granted by this publication. Except as set forth in AMD’s Standard Terms and Conditions of Sale, AMD assumes no liability whatsoever, and disclaims any express or implied warranty, relating to its products including, but not limited to, the implied warranty of merchantability, fitness for a particular purpose, or infringement of any intellectual property right. AMD’s products are not designed, intended, authorized or warranted for use as components in systems intended for surgical implant into the body, or in other applications intended to support or sustain life, or in any other application in which the failure of AMD’s product could create a situation where personal injury, death, or severe property or environmental damage may occur. AMD reserves the right to discontinue or make changes to its products at any time without notice. Trademarks AMD, the AMD Arrow logo, AMD Athlon, AMD Duron, AMD Opteron and combinations thereof, are trademarks of Advanced Micro Devices, Inc. Other product names used in this publication are for identification purposes only and may be trademarks of their respective companies. -

AMD's Llano Fusion

AMD’S “LLANO” FUSION APU. Denis Foley, Maurice Steinman, Alex Branover, Greg Smaus, Antonio Asaro, Swamy Punyamurtula, Ljubisa Bajic. Hot Chips 23, 19th August 2011. TODAY’S TOPICS: APU architecture and floorplan; CPU core features; graphics features; Unified Video Decoder features; display and I/O capabilities; power gating; Turbo Core; performance. 2 | LLANO HOT CHIPS | August 19th, 2011. ARCHITECTURE AND FLOORPLAN. A-SERIES ARCHITECTURE • Up to 4 Stars-32nm x86 cores • 1 MB L2 cache/core • Integrated Northbridge • 2 Chan of DDR3-1866 memory • 24 lanes of PCIe® Gen2 • x4 UMI (Unified Media Interface) • x4 GPP (General Purpose Ports) • x16 graphics expansion or display • 2 x4 lanes dedicated display • 2-head display controller • UVD (Unified Video Decoder) • 400 AMD Radeon™ Compute Units • GMC (Graphics Memory Controller) • FCL (Fusion Control Link) • RMB (AMD Radeon™ Memory Bus) • 227 mm², 32 nm SOI • 1.45 BN transistors. INTERNAL BUS. Fusion Control Link (FCL): 128b (each direction) path for IO access to memory; variable clock based on throughput (LCLK); GPU access to coherent memory space; CPU access to dedicated GPU framebuffer. AMD Radeon™ Memory Bus (RMB): 256b (each direction) for each channel for GMC access to memory; runs on Northbridge clock (NCLK); provides full-bandwidth path for graphics access to system memory; DRAM-friendly stream of reads and writes; bypasses coherency mechanism. [Floorplan figure: dual-channel DDR3; Unified Video Decoder (UVD); integrated Northbridge (NB); integrated GPU with graphics SIMD array, display controller and I/O controllers; 4 Stars-32nm CPU cores with 1 MB L2 cache per core; PCI Express I/O (24 lanes, optional digital display interfaces).] CPU, GPU, UVD AND IO FEATURES. STARS-32nm CPU CORE FEATURES . -

AMD EPYC™ 7371 Processors Accelerating HPC Innovation

AMD EPYC™ 7371 Processors Solution Brief: Accelerating HPC Innovation. March, 2019. AMD EPYC 7371 processors (16 core, 3.1GHz): The right choice for HPC. Designed from the ground up for a new generation of solutions, AMD EPYC™ 7371 processors (16 core, 3.1GHz) implement a philosophy of choice without compromise. The AMD EPYC 7371 processor delivers outstanding frequency for applications sensitive to per-core performance such as those licensed on a per-core basis. AMD EPYC 7371 processors are an excellent option when license costs dominate the overall solution cost. In these scenarios the performance-per-dollar of the overall solution is usually best with a CPU that can provide excellent per-core performance. AMD EPYC processors’ innovative architecture translates to tremendous performance. More importantly, the performance you’re paying for can be matched appropriately to the performance you need. Sidebar: Exceptional Memory Bandwidth: AMD EPYC server processors deliver 8 channels of memory with support for up to 2TB of memory per processor. Standards Based: AMD is committed to industry standards, offering you a choice in x86 processors with design innovations that target the evolving needs of modern datacenters. No Compromise Product Line: Compute requirements are increasing, datacenter space is not. AMD EPYC server processors offer up to 32 cores and a consistent feature set across all processor models. Power HPC Workloads: Tackle HPC workloads with leading performance and expandability. Accelerate your workloads with up to 33% more PCI Express® Gen 3 lanes. Optimize Productivity: Increase productivity with tools, resources, and communities to help you “code faster, faster code.” Boost application performance with Software Optimization Guides and Performance -

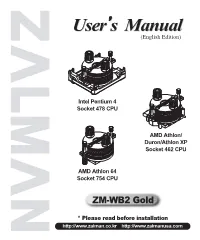

User's Manual

User’s Manual (English Edition). Intel Pentium 4 Socket 478 CPU, AMD Athlon/Duron/Athlon XP Socket 462 CPU, AMD Athlon 64 Socket 754 CPU. ZM-WB2 Gold. * Please read before installation. http://www.zalman.co.kr http://www.zalmanusa.com

1. Features

1) Gold plated pure copper base maximizes cooling performance and prevents CPU blocks from galvanic corrosion.
2) Intel Pentium 4 (Socket 478), AMD Athlon/Duron/Athlon XP (Socket 462), Athlon 64 (Socket 754) compatible design for broad compatibility.
3) Three types of compression fitting are offered for use with water tubes (8X10mm, 8X11mm, 10X13mm).

* Zalman Tech. Co., Ltd. is not responsible for any damage resulting from CPU OVERCLOCKING.

2. Specifications

| Part No | Part name | Q’ty | Weight (g) | Use |
|---|---|---|---|---|
| 1 | CPU block | 1 | 447.0 | CPU mounting |
| 2 | Hand screw | 2 | 8.0 | Clip mounting |
| 3 | Thermal grease | 1 | 1.8 | Apply to CPU core |
| 4 | Clip | 1 | 9.6 | CPU block mounting |
| 5 | User’s manual | 1 | | |
| 6 | Clip supports | 2 | 8.2 | Socket 478 |
| 7 | Clip supports A | 2 | 5.0 | Socket 462 |
| 8 | Clip supports B | 2 | 5.0 | Socket 462 |
| 9 | Bolts | 4 | 0.8 | Clip supports, A, B-type |
| 10 | Paper washer | 1 set | | 7, 8 mounting |
| 11 | Nipple | 2 | 6.2 | Socket 754 mounting |
| 12 | Back plate | | 60.0 | Socket 754 mounting |
| 13 | Fitting | 3 set | | Fitting to 8 x 10mm, 8 x 11mm, 10 x 13mm tubes |

NOTE 1) The maximum weight for the cooler is specified as 450g/450g/300g for socket 478/754/462 respectively. Special care should be taken when moving a computer equipped with a cooler which exceeds the relevant weight limit. -

Evaluation of AMD EPYC

Evaluation of AMD EPYC. Chris Hollowell, HEPiX Fall 2018, PIC Spain. What is EPYC? EPYC is a new line of x86_64 server CPUs from AMD based on their Zen microarchitecture, the same microarchitecture used in their Ryzen desktop processors. Released June 2017. First new high-performance series of server CPUs offered by AMD since 2012: the last were Piledriver-based Opterons; Steamroller Opteron products were cancelled; AMD had focused on low-power server CPUs instead (x86_64 Jaguar APUs, ARM-based Opteron A CPUs). Many vendors are now offering EPYC-based servers, including Dell, HP and Supermicro. How Does EPYC Differ From Skylake-SP? Intel’s Skylake-SP Xeon x86_64 server CPU line was also released in 2017. Both Skylake-SP and EPYC CPU dies are manufactured using a 14 nm process. Skylake-SP introduced AVX512 vector instruction support in Xeon; AVX512 is not available in EPYC. HS06 official GCC compilation options exclude autovectorization. Stock SL6/7 GCC doesn’t support AVX512 (support added in GCC 4.9+). Not heavily used (yet) in HEP/NP offline computing. Both have models supporting 2666 MHz DDR4 memory. Skylake-SP: 6 memory channels per processor, 3 TB (2-socket system, extended memory models). EPYC: 8 memory channels per processor, 4 TB (2-socket system). How Does EPYC Differ From Skylake (Cont)? Some Skylake-SP processors include built-in Omnipath networking or FPGA coprocessors; not available in EPYC. Both Skylake-SP and EPYC have SMT (HT) support: 2 logical cores per physical core (absent in some Xeon Bronze models). Maximum core count (per socket): Skylake-SP, 28 physical / 56 logical (Xeon Platinum 8180M); EPYC, 32 physical / 64 logical (EPYC 7601). Maximum socket count: Skylake-SP, 8 (Xeon Platinum); EPYC, 2. Processor interconnect: Skylake-SP, UltraPath Interconnect (UPI); EPYC, Infinity Fabric (IF). PCIe lanes (2-socket system): Skylake-SP, 96; EPYC, 128 (some used by SoC functionality), with the same number available in single-socket configuration. EPYC: MCM/SoC Design. EPYC utilizes an SoC design: many functions normally found in the motherboard chipset are on the CPU (SATA controllers, USB controllers, etc.). -

AMD Athlon II X2 270 Manual

Amd Athlon Ii X2 270 Manual Specifications. Please visit AMD Athlon II X2 215 (rev. C3) and AMD Athlon II X2 270 pages for more detailed specifications. Review, Differences, Benchmarks, Specifications, Comments Athlon II X2 270. CPUBoss recommends the AMD Athlon II X2 270 based on its. See full details. Specifications. Please visit AMD Athlon II X2 270 and AMD Athlon II X2 280 pages for more detailed specifications of both. Far Cry 4 on AMD Athlon x2 340(Dual Core) 4GB RAM HD 6570 PC Specifications. Specifications. Please visit AMD Athlon II X2 270 and AMD FX-6300 pages for more detailed specifications of both. AMD Athlon II x2 260 (3.2GHz) Although the specifications of this cpu list 74C as the max temp, i prefer to stick to the old "65C max" rule-of-thumb for amd cpus. Amd Athlon Ii X2 270 Manual Read/Download AMD Athlon II X2 270u. 2 GHz, Dual core. Front view of AMD Athlon II X2 270u. 5.9 Out of 10. VS Review, Differences, Benchmarks, Specifications, Comments. Photos of the AMD Athlon II X2 270 Black Edition from the KitGuru Price Comparison Engine. Specifications. Please visit AMD Athlon II X2 270 and AMD FX-4300 pages for more detailed specifications of both. SPECIFICATIONS : Model : AMD Athlon II X2 270. CPU Clock Speed : 3.4 GHz. Core : 2. Total L2 Cache : 2 MB Sockets : Socket AM2+,Socket AM3 Supported. up vote -5 down vote favorite. These are my specifications: AMD Athlon II x2 270 CPU, AMD 760g GPU, 4GB RAM. grand-theft-auto-5. -

Mairjason2015phd.Pdf (1.650Mb)

Power Modelling in Multicore Computing. Jason Mair. A thesis submitted for the degree of Doctor of Philosophy at the University of Otago, Dunedin, New Zealand, 2015. Abstract: Power consumption has long been a concern for portable consumer electronics, but has recently become an increasing concern for larger, power-hungry systems such as servers and clusters. This concern has arisen from the associated financial cost and environmental impact, where the cost of powering and cooling a large-scale system deployment can be on the order of millions of dollars a year. Such a substantial power consumption additionally contributes significant levels of greenhouse gas emissions. Therefore, software-based power management policies have been used to more effectively manage a system’s power consumption. However, managing power consumption requires fine-grained power values for evaluating the run-time tradeoff between power and performance. Despite hardware power meters providing a convenient and accurate source of power values, they are incapable of providing the fine-grained, per-application power measurements required in power management. To meet this challenge, this thesis proposes a novel power modelling method called W-Classifier. In this method, a parameterised micro-benchmark is designed to reproduce a selection of representative, synthetic workloads for quantifying the relationship between key performance events and the corresponding power values. Using the micro-benchmark enables W-Classifier to be application independent, which is a novel feature of the method. To improve accuracy, W-Classifier uses run-time workload classification and derives a collection of workload-specific linear functions for power estimation, which is another novel feature for power modelling. -

A Statistical Performance Model of the Opteron Processor

A Statistical Performance Model of the Opteron Processor. Jeanine Cook (New Mexico State University, Klipsch School of Electrical and Computer Engineering), Jonathan Cook (New Mexico State University, Department of Computer Science), Waleed Alkohlani (New Mexico State University, Klipsch School of Electrical and Computer Engineering). ABSTRACT: Cycle-accurate simulation is the dominant methodology for processor design space analysis and performance prediction. However, with the prevalence of multi-core, multi-threaded architectures, this method has become highly impractical as the sole means for design due to its extreme slowdowns. We have developed a statistical technique for modeling multi-core processors that is based on Monte Carlo methods. Using this method, processor models of contemporary architectures can be developed and applied to performance prediction, bottleneck detection, and limited design space analysis. To date, we have accurately modeled the IBM Cell, the Intel Itanium, and the Sun Niagara 1 and Niagara 2 processors [34, 33, 10]. ... m5 [11], Simics/GEMS [25], and more recently MARSSx86 [5], the only one supporting the popular x86 architecture. Some of these have not been validated against real hardware in the multi-core mode (SESC and M5), and the others that are validated show large errors of up to 50% [5]. All cycle-accurate multi-core simulators are very slow; a few hundred thousand simulated instructions per second is the most optimistic speed with the help of native mode execution. Further, the architectures supported by many of these simulators are now obsolete. To address these issues and to satisfy our own desire for performance models of contemporary processors, we developed ... -

AMD FX Cpus Now Faster Than Ever—Unlocked Performance with Maximum Cores

How to Sell the New AMD FX CPUs. Now Faster than Ever: Unlocked Performance with Maximum Cores. Who’s it for? Gamers and enthusiasts who want the most out of their PC. Sell it in 5 seconds. More Cores. More Speed. Unlocked.¹ More Cores > Up to 8 cores > Only 8-core desktop processor, 2 cores more than Intel¹ Maximum Speed > Up to 4.2 GHz Turbo clock speed > Latest technology and world’s highest frequencies² Unlocked & Overclockable > Get the maximum tunable performance > Go up to 5.0 GHz with aggressive cooling solutions from AMD³ Sell it in 60 seconds. Unlocked Performance for Less Money¹,⁴ Performance: Ferocious power right out of the box. > The groundbreaking AMD FX 8-Core Processor Black Edition comes unlocked. No premium to pay. No code to input. Just unrestrained, overclocked power right away.⁴ > Groundbreaking technology. Epic performance. > This next-generation architecture takes 8-core processing to a new level. Test the limits to play harder and get more done. Speed: The need for speed. AMD FX Processors: unlocked and overclocked.⁴ > Incredible speed out of the box and more in the tank; AMD Turbo CORE Technology automatically kicks in, boosting performance to a user’s varied workload. > The sky’s the limit with this processor’s massive headroom. Go up to 5.0 GHz with aggressive cooling solutions from AMD.⁵ Price: Price and performance that keeps getting better and more bang for your buck. Unlocked technology.⁴ Unbelievable pricing. > With more speed than ever and more aggressive price points, new AMD FX Processors raise the bar for 8-core computing. -

Power Modeling

CHAPTER 5: POWER MODELING. Jason Mair¹, Zhiyi Huang¹, David Eyers¹, Leandro Cupertino², Georges Da Costa², Jean-Marc Pierson² and Helmut Hlavacs³. ¹Department of Computer Science, University of Otago, New Zealand; ²Institute for Research in Informatics of Toulouse (IRIT), University of Toulouse III, France; ³Faculty of Computer Science, University of Vienna, Austria. 5.1 Introduction. Power consumption has long been a concern for portable consumer electronics, with many manufacturers explicitly seeking to maximize battery life in order to improve the usability of devices such as laptops and smart phones. However, it has recently become a concern in the domain of much larger, more power hungry systems such as servers, clusters and data centers. This new drive to improve energy efficiency is in part due to the increasing deployment of large-scale systems in more businesses and industries, which have two primary motives for saving energy. Firstly, there is the traditional economic incentive for a business to reduce their operating costs, where the cost of powering and cooling a large data center can be on the order of millions of dollars [18]. Reducing the total cost of ownership for servers could help to stimulate further deployments. As servers become more affordable, deployments will increase in businesses where concerns over lifetime costs previously prevented adoption. The second motivating factor is the increasing awareness of the environmental impact (e.g. greenhouse gas emissions) caused by power production. Reducing energy consumption can help a business indirectly reduce their environmental impact, making them more clean and green. The most commonly adopted solution for reducing power consumption is a hardware-based approach, where old, inefficient hardware is replaced with newer, more energy efficient ... COST Action 0804, edition. -

AMD (AMD.US) 18Q1 Review, July 30, 2018

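Overseas Company Report | Company Update Research. Securities Research Report. AMD (AMD.US) 18Q1 Review, July 30, 2018. AMD: best in 7 years, a 10-year turnaround; reiterate Buy, TP raised to $23. Authors: He Pianpian (何翩翩), analyst, SAC license no. S1110516080002; Lei Juncheng (雷俊成), analyst, SAC license no. S1110518060004; Ma He (马赫), analyst, SAC license no. S1110518070001; Dong Kexin (董可心), contact.

Results beat expectations; most profitable quarter in 7 years. In 18Q2 AMD delivered its most profitable quarter in 7 years: non-GAAP EPS of $0.14 and revenue of $1.76 billion, up 53% year over year, both ahead of Wall Street expectations of EPS $0.13 and revenue $1.72 billion. Computing and Graphics revenue rose 64% year over year to $1.09 billion, better than the market expectation of $1.06 billion, but fell 3% quarter over quarter as blockchain-related contributions weakened further in Q2. Mining's share of revenue fell from 10% in the prior quarter to 6% this quarter, and the company has become more cautious on second-half demand. EESC revenue rose 37% year over year to $670 million, better than the expected $661 million; EPYC is gradually entering its volume ramp, and the company maintains its forecast of reaching mid-single-digit share by year-end. Q2 gross margin rose to 37%. Q3 guidance is revenue of $1.7 billion, up 7% year over year and slightly below the market expectation of $1.76 billion, with gross margin rising to about 38%; full-year revenue growth guidance remains 25%. We believe the guidance is conservative given the high 17Q3 base, and the fading of the blockchain effect, as a one-off business, will further reduce earnings uncertainty. We expect EPYC to see a key volume ramp from the second half through Q4.

Server market: AMD's and Intel's fortunes diverge. With Cisco and HPE adopting EPYC and demand visibility from hyperscale cloud customers improving, EPYC server shipments and revenue both rose more than 50% quarter over quarter in Q2; the 5 cloud giants currently working with AMD are the main driver. We believe AMD will continue to build a price-performance advantage through high core counts and low power in single-socket servers, accelerating market penetration in the second half and eating into Intel's share, and next year awaits the launch of the 7nm second-generation EPYC. Against an Intel that has pushed 10nm Cannon Lake volume production to next year, AMD will finally overtake on process technology, accelerating growth in both volume and price and going "from zero to one" to capture more than $2 billion of market share. By contrast, Intel's Q2 data center revenue was $5.55 billion; although up 27% year over year amid strong industry conditions, it still fell short of the market expectation of $5.63 billion. On its earnings call, Intel stated more clearly that consumer 10nm products will not reach the market until the holiday season in the second half of 2019, deepening market pessimism while giving AMD an ample time window.

Ryzen continues to gain ground, further opening up the notebook market.

Related reports: 1. "AMD (AMD.US) Review: EPYC finally goes 'from zero to one', 7nm product cycle brings an all-round return to 'legend'; TP raised to $22, reiterate Buy", 2018-06-20. 2. "AMD (AMD.US) Review: 7nm GPU announced, joining the race for AI computing; the Ryzen+EPYC 'twin stars' remain the mainstay; TP raised to $18, reiterate Buy", 2018-06-08. 3. "AMD (AMD.US) 18Q1 Review: a strong start to 2018, results and guidance both beat expectations; Ryzen continues to shine steadily, EPYC still awaiting upgrade and volume ramp; reiterate Buy", 2018-04-30. 4. "Return to profit in 2017 marks an inflection point in results; 2018 poised for a breakout after a long buildup; Ryzen+EPYC twin stars continue to shine; reiterate Buy", 2018-02-01. 5. "AMD (AMD.US) Review: partnering with Intel ...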