Unclassified DSTI/ICCP(97)12/FINAL

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


COMMISSION of the EUROPEAN COMMUNITIES Brussels, 7.8.2003

COMMISSION OF THE EUROPEAN COMMUNITIES Brussels, 7.8.2003 SEC(2003) 849 COMMISSION STAFF WORKING PAPER ANNEXES TO the TEN Annual Report for the Year 2001 {COM(2003) 442 final} Data and Factsheets The present Commission Staff Working Paper is intended to complement the Trans-European Networks (TEN) Annual Report for the Year 2001 (COM(2002)344 final). It consists of ten annexes, each covering a particular information area and providing extensive information and data reference on the implementation and financing of the TEN for Energy, Transport and Telecommunications. INDEX Annex I: List of abbreviations; Annex II: Information on TEN-T Priority Projects; Annex III: Community financial support for Trans-European Network Projects in the energy sector during the period from 1995 to 2001 (from the TEN-energy budget line); Annex IV: Progress achieved on specific TEN-ISDN / Telecom projects from 1997 to 2001; Annex V: Community financial support in 2001 for the co-financing of actions related to Trans-European Network Projects in the energy sector; Annex VI: TEN-Telecom projects financed in 2001 following the 2001 call for proposals; Annex VII: TEN-T Projects/Studies financed in 2001 under Regulation 2236/95

Tale of Cities

Tale of cities: A practitioner’s guide to city recovery By Anne Power, Astrid Winkler, Jörg Plöger and Laura Lane Contents: Introduction; Industrial cities in their hey-day; Historic roles; Industrial giants; Industrial pressures; Industrial collapse and its consequences; Economic and social unravelling; Cumulative damage and the failures of mass housing; Inner city abandonment; Suburbanisation; Political unrest; Unwanted industrial infrastructure; New perspectives, new ideas, new roles; Symbols of change; Big ideas; New enterprises; Massive physical reinvestment by cities, governments and EU; Restored cities; New transport links; Practical steps towards recovery; Building the new economy (Saint-Étienne’s Design Village and the survival of small workshops; Sheffield’s Advanced Science Park and Cultural Industries Quarter; Bremen’s technology focus; Torino’s incubators for local entrepreneurs; Belfast’s innovative reuse of former docks; Leipzig’s new logistics and manufacturing centres); Building social enterprise and integration; Building skills; Upgrading local environments; Working in partnership with local communities; Have the cities turned the corner?; New image through innovative use of existing assets; Where next for the seven cities?; An uncertain future; Conclusion; Appendix. Introduction This documentary booklet traces at ground level social and economic progress of seven European cities observing dramatic changes in industrial and post-industrial economies, their booming then shrinking populations, their employment and political leadership. ... in the seven cities and elsewhere with suggestions and comments. Our contact details can be found at the back of the document. We know that we have not done justice to the scale of work that has gone

Sheffield & Rotherham Joint Employment Land Review Final

Sheffield & Rotherham Joint Employment Land Review Final Report Sheffield City Council and Rotherham Metropolitan Borough Council 15 October 2015 50467/JG/RL Nathaniel Lichfield & Partners Generator Studios Trafalgar Street Newcastle NE1 2LA nlpplanning.com This document is formatted for double sided printing. © Nathaniel Lichfield & Partners Ltd 2015. Trading as Nathaniel Lichfield & Partners. All Rights Reserved. Registered Office: 14 Regent's Wharf All Saints Street London N1 9RL All plans within this document produced by NLP are based upon Ordnance Survey mapping with the permission of Her Majesty’s Stationery Office. © Crown Copyright reserved. Licence number AL50684A Sheffield & Rotherham Joint Employment Land Review: Final Report Contents 1.0 Introduction: Scope of Study; Methodology; Structure of the Report. 2.0 Policy Review: National Documents; Sub-Regional Documents; Rotherham Documents; Sheffield Documents

Digital Intermediate

Digital Intermediate (typically abbreviated to DI) is a motion picture finishing process which classically involves digitizing a motion picture and manipulating the color and other image characteristics. Josh Haynie SVP Operations, Efilm. Member of the Worldwide Deluxe Family Team Leader: Colorists, Production, Editorial, Data Management, Scanning/Recording, Quality Control, Restoration, Vault, Security, Facilities 13 Years with Efilm Over 500 Feature Films delivered since 2003 Traditional and emerging Post Production since 1991 [email protected] efilm.com 79 Worldwide Locations 6570+ Fulltime Employees 800+ Metadata Technicians 120+ System R+D Developers 30,000 DCPs delivered per month 60,000 Digital Distribution deliveries per month Let us take a look at what we have completed and a glimpse of what we are working on… Complex Projects Overall Workflow Testing Location Services Dailies VFX Pulls/ Shots Marketing Assembly Grading Render HD/ Blu-ray HDR UHD Large Format Archiving Looks and LUTs Lighting Room Colors Costumes Locations Aspect Ratios Arri Alexa Digital Camera 35mm Film Camera Canon 5D Digital Camera/SLR Arri 65 Digital Camera Sony F65 Digital Camera GoPro Imax Film Camera Red Digital Camera Phantom Digital Camera Canon C300 Digital Camera iPhone 6S Blackmagic Digital Camera EC3: Hollywood, Location, Near Set, WW Receive, Archive and Verify Data Grade and QC each day’s footage Create Editorial Media daily Create and Distribute Studio Screening elements daily On Set Near Set Dailies Deliverables H.264 Network Deliverable Transfer Station Camera Mag Editorial Dailies Processing Colorstream NAS Storage Audio ARRI RAW Multiple LTO EC3 Archive DeBayered SAN Storage Backup I/O Station Controller Meta Data VFX Pulls Pulling frames for VFX creation during shoot Post shoot frame pulling for VFX creation.

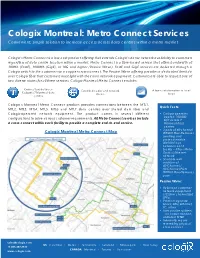

Cologix Montreal: Metro Connect Services Convenient, Simple Solution to Increase Access Across Data Centres Within a Metro Market

Cologix Montreal: Metro Connect Services. Convenient, simple solution to increase access across data centres within a metro market. Cologix’s Metro Connect is a low-cost product offering that extends Cologix’s dense network availability to customers regardless of data centre location within a market. Metro Connect is a fibre-based service that offers bandwidth of 100Mb (FastE), 1000Mb (GigE), or 10G and higher (Passive Wave). FastE and GigE services are delivered through a Cologix switch to the customer via a copper cross-connect. The Passive Wave offering provides a dedicated lambda over Cologix fibre that customers must light with their own network equipment. Customers are able to request one of two diverse routes for all three services. Cologix Montreal Metro Connect enables: • Connections between Cologix’s 7 Montreal data centres • Extended carrier and network choice • A low-cost alternative to local loops. Cologix’s Montreal Metro Connect product provides connections between the MTL1, MTL2, MTL3, MTL4, MTL5, MTL6 and MTL7 data centres over shared dark fibre and Cologix-operated network equipment. The product comes in several different configurations to solve various customer requirements. All Metro Connect services include a cross-connect within each facility to provide a complete end-to-end service. Cologix Montreal Metro Connect Map. Quick Facts: • Cologix operates approx. 100,000 SQF across 7 Montreal data centres • 2 pairs of 40-channel DWDM Mux-Demuxes (working and protect) enable 40x100 Gbps between each facility = 4Tbps Metro Optical

Public Document Pack

Public Document Pack Committee Agenda City of Westminster Title: Environment Policy & Scrutiny Committee Meeting Date: Monday 19th January, 2015 Time: 7.00 pm Venue: Rooms 5, 6 & 7 - 17th Floor, City Hall Members: Councillors: Ian Adams (Chairman) Thomas Crockett Jonathan Glanz Louise Hyams Vincenzo Rampulla Karen Scarborough Cameron Thomson Jason Williams Members of the public are welcome to attend the meeting and listen to the discussion of Part 1 of the Agenda. Admission to the public gallery is by ticket, issued from the ground floor reception at City Hall from 6.00pm. If you have a disability and require any special assistance please contact the Committee Officer (details listed below) in advance of the meeting. An induction loop operates to enhance sound for anyone wearing a hearing aid or using a transmitter. If you require any further information, please contact the Committee Officer, Jonathan Deacon, Senior Committee and Governance Officer. Tel: 020 7641 2783; email: [email protected] Corporate Website: www.westminster.gov.uk Note for Members: Members are reminded that Officer contacts are shown at the end of each report and Members are welcome to raise questions in advance of the meeting. With regard to item 2, guidance on declarations of interests is included in the Code of Governance; if Members and Officers have any particular questions they should contact the Head of Legal & Democratic Services in advance of the meeting please. AGENDA PART 1 (IN PUBLIC) 1. MEMBERSHIP To note any changes to the membership. 2. DECLARATIONS OF INTEREST To receive declarations by Members and Officers of the existence and nature of any personal or prejudicial interests in matters on this agenda.

Attendee Demographics

2019 DEMOGRAPHICS REPORT 2020 Conferences: April 18–22, 2020 Exhibits: April 19–22 Show Floor Now Open Sunday! 2019 Conferences: April 6–11, 2019 Exhibits: April 8–11 Las Vegas Convention Center, Las Vegas, Nevada USA NABShow.com ATTENDANCE HIGHLIGHTS OVERVIEW Total NAB Show registrants: 91,921 (includes BEA registrations), of which buyers 63,331 (69%), total exhibitors 24,896 (27%), other 4%. International NAB Show registrants: 24,086, from 160+ countries. Exhibiting companies: 1,635*. Net sq. ft.: 963,411* (89,503 m2). Press: 1,361. *Includes unique companies on the Exhibit Floor and those in Attractions, Pavilions, Meeting Rooms and Suites. PRIMARY BUSINESS Total Buyer Audience and Data Total Buyers: 63,331 ADVERTISING/PUBLIC RELATIONS/MARKETING 6% AUDIO PRODUCTION/POST-PRODUCTION SERVICE 21% BROADCASTING/CARRIER 19% (Cable/MSO; Satellite (Radio or Television); Internet/Social Media; Telco (Wireline/Wireless); Radio (Broadcast); Television (Broadcast)) CONTENT/CHANNEL 8% (Film/TV Studio; Podcasting; Independent Filmmaker; Gaming; Programming Network; Photography) DIGITAL MEDIA 4% DISTRIBUTOR/DEALER/RESELLER 4% EDUCATION 3% FAITH-BASED ORGANIZATION 1% FINANCIAL 1% HEALTHCARE/MEDICAL .4% SPORTS: TEAM/LEAGUE/VENUE 1% GOVERNMENT/NON-PROFIT 1% MANUFACTURER/SUPPLIER (HARDWARE) 3% PERFORMING ARTS/MUSIC/LIVE ENTERTAINMENT 1% RENTAL EQUIPMENT 1% SYSTEMS INTEGRATION 3% VIDEO PRODUCTION/POST-PRODUCTION 8% (Video Production Services/Facility; Video Post-Production Services/Facility) WEB SERVICES/SOFTWARE MANUFACTURER 8% OTHER 7%

State of Sheffield 03–16 Executive Summary / 17–42 Living & Working

State of Sheffield. Contents: Executive Summary; Living & Working (Population Growth; People & Places; Sheffield at Work; Working in the Sheffield City Region); Growth & Income (Jobs in Sheffield; Income Poverty in Sheffield); Attainment & Ambition (Early Years & Attainment; School Population; School Attainment; Young People & Their Ambitions); Health & Wellbeing (Life Expectancy; Health Deprivation; Health Inequalities; Premature Preventable Mortality; Obesity; Mental & Emotional Health; Fuel Poverty); Looking Forwards (A Growing, Cosmopolitan City; Strong and Inclusive Economic Growth; Fair, Cohesive & Just; The Environment; Leadership, Governance & Reform). Executive Summary The State of Sheffield 2016 report provides an overview of the city, bringing together a detailed analysis of economic and social developments alongside some personal reflections from members of Sheffield Executive Board to tell the story of Sheffield in 2016. Given that this is the fifth State of Sheffield report it takes a look back over the past five years to identify key trends and developments, and in the final section it begins to explore some of the critical issues potentially impacting the city over the next five years. As explored in the previous reports, Sheffield differs from many major cities such as Manchester or Birmingham, in that it is not part of a larger conurbation or metropolitan area. (Previous page photography by Amy Smith, Sheffield City College.)

Annual Report 2019–2020

Rocky Mountain Institute Annual Report 2019–2020: Energy Solutions for the Decisive Decade. Contents: Letter from Our CEO; Introducing RMI’s New Global Programs; Diversity, Equity, and Inclusion; Amory Lovins: Making the Future a Reality; Think, Do, Scale; Financials; Board of Trustees; Thank You, Donors!; Our Locations. Cover image courtesy of Unsplash/Cassie Matias. Letter from Our CEO There is no doubt that humanity has been dealt a difficult hand in 2020. A global pandemic and resulting economic instability have sown tremendous uncertainty for now and for the future. Record-breaking natural disasters (hurricanes, floods, and wildfires) have devastated communities, resulting in deep personal suffering. Meanwhile, we have entered the decisive decade for our Earth’s climate, with just ten years to halve global emissions to meet the goals set by the Paris Agreement before we cause irreparable damage to our planet and all life it supports. In spite of these immense challenges, when I reflect on this past year I am inspired by the resilience and hope we’ve experienced at Rocky Mountain Institute (RMI). This is evidenced through impact made possible by the enduring support of our donors and tenacious partnership of other NGOs, companies, cities, states, and countries working together to drive a clean, prosperous, and secure low-carbon future.

The Economic Development of Sheffield and the Growth of the Town Cl740-Cl820

The Economic Development of Sheffield and the Growth of the Town cl740-cl820 Neville Flavell PhD The Division of Adult Continuing Education University of Sheffield February 1996 Volume One THE ECONOMIC DEVELOPMENT OF SHEFFIELD AND THE GROWTH OF THE TOWN cl740-c 1820 Neville Flavell February 1996 SUMMARY In the early eighteenth century Sheffield was a modest industrial town with an established reputation for cutlery and hardware. It was, however, far inland, off the main highway network and twenty miles from the nearest navigation. One might say that with those disadvantages its future looked distinctly unpromising. A century later, Sheffield was a maker of plated goods and silverware of international repute, was en route to world supremacy in steel, and had already become the world's greatest producer of cutlery and edge tools. How did it happen? Internal economies of scale vastly outweighed deficiencies. Skills, innovations and discoveries, entrepreneurs, investment, key local resources (water power, coal, wood and iron), and a rapidly growing labour force swelled largely by immigrants from the region were paramount. Each of these, together with external credit, improved transport and ever-widening markets, played a significant part in the town's metamorphosis. Economic and population growth were accompanied by a series of urban developments which first pushed outward the existing boundaries. Considerable infill of gardens and orchards followed, with further peripheral expansion overspilling into adjacent townships. New industrial, commercial and civic building, most of it within the central area, reinforced this second phase. A period of retrenchment coincided with the French and Napoleonic wars, before a renewed surge of construction restored the impetus. -

Sheffield City Region Devolution Agreements

SHEFFIELD CITY COUNCIL Full Council Report of: Chief Executive. Report to: Full Council. Date: 18th March 2016. Subject: Sheffield City Region (SCR) Devolution Agreement: Ratification of the Proposal. Author of Report: Laurie Brennan, Policy and Improvement Officer, [email protected], 0114 2734755. Key Decision: YES. Reason Key Decision: Affects 2 or more wards. Summary: This paper sets out the proposed SCR Devolution Agreement which represents the next step towards Sheffield and Sheffield City Region (SCR) securing greater local control of the powers needed to unlock our economic potential. The proposed Agreement includes a range of vital powers and long-term funding for SCR, including a major £900m (£30m a year for 30 years) investment fund; the full devolution of adult skills; the powers to franchise the bus network in SCR (subject to the Buses Bill); and the co-commissioning of services to help people get back into work. Government made clear that access to substantial devolved powers

Endogenous City Disamenities: Lessons from Industrial Pollution in 19th Century Britain∗

Endogenous City Disamenities: Lessons from Industrial Pollution in 19th Century Britain∗ PRELIMINARY W. Walker Hanlon UCLA and NBER October 31, 2014 Abstract Industrialization and urbanization often go hand-in-hand with pollution. Growing industries create jobs and drive city growth, but may also generate pollution, an endogenous disamenity that drives workers and firms away. This paper provides an approach for analyzing endogenous disamenities in cities and applies it to study the first major episode of modern industrial pollution using data on 31 large British cities from 1851-1911. I begin by constructing a measure of industrial coal use, the largest source of industrial pollution in British cities. I show that industrial coal use was an important city disamenity; it was negatively related to measured quality-of-life in these cities and positively related to mortality. I then offer an estimation strategy that allows me to separate the negative impact of coal use on city growth through pollution from the direct positive effect of employment growth in polluting industries. My results suggest that coal-based pollution substantially impacted the growth of British cities during this period. ∗I thank Leah Boustan, Dora Costa, Matt Kahn, Adriana Lleras-Muney and Werner Troesken for their helpful comments. Reed Douglas provided excellent research assistance. Some of the data used in this study was collected using funds from a grant provided by the California Center for Population Research. Author email: [email protected]. 1 Introduction From the mill towns of 19th century England to the mega-cities of modern China, urbanization has often gone hand-in-hand with pollution.