Image Denoising by Autoencoder: Learning Core Representations

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Blind Denoising Autoencoder

1 Blind Denoising Autoencoder Angshul Majumdar In recent times a handful of papers have been published on Abstract—The term ‘blind denoising’ refers to the fact that the autoencoders used for signal denoising [10-13]. These basis used for denoising is learnt from the noisy sample itself techniques learn the autoencoder model on a training set and during denoising. Dictionary learning and transform learning uses the trained model to denoise new test samples. Unlike the based formulations for blind denoising are well known. But there has been no autoencoder based solution for the said blind dictionary learning and transform learning based approaches, denoising approach. So far autoencoder based denoising the autoencoder based methods were not blind; they were not formulations have learnt the model on a separate training data learning the model from the signal at hand. and have used the learnt model to denoise test samples. Such a The main disadvantage of such an approach is that, one methodology fails when the test image (to denoise) is not of the never knows how good the learned autoencoder will same kind as the models learnt with. This will be first work, generalize on unseen data. In the aforesaid studies [10-13] where we learn the autoencoder from the noisy sample while denoising. Experimental results show that our proposed method training was performed on standard dataset of natural images performs better than dictionary learning (K-SVD), transform (image-net / CIFAR) and used to denoise natural images. Can learning, sparse stacked denoising autoencoder and the gold the learnt model be used to recover images from other standard BM3D algorithm. -

A Comparison of Delayed Self-Heterodyne Interference Measurement of Laser Linewidth Using Mach-Zehnder and Michelson Interferometers

Sensors 2011, 11, 9233-9241; doi:10.3390/s111009233 OPEN ACCESS sensors ISSN 1424-8220 www.mdpi.com/journal/sensors Article A Comparison of Delayed Self-Heterodyne Interference Measurement of Laser Linewidth Using Mach-Zehnder and Michelson Interferometers Albert Canagasabey 1,2,*, Andrew Michie 1,2, John Canning 1, John Holdsworth 3, Simon Fleming 2, Hsiao-Chuan Wang 1,2 and Mattias L. Åslund 1 1 Interdisciplinary Photonics Laboratories (iPL), School of Chemistry, University of Sydney, 2006, NSW, Australia; E-Mails: [email protected] (A.M.); [email protected] (J.C.); [email protected] (H.-C.W.); [email protected] (M.L.Å.) 2 Institute of Photonics and Optical Science (IPOS), School of Physics, University of Sydney, 2006, NSW, Australia; E-Mail: [email protected] (S.F.) 3 SMAPS, University of Newcastle, Callaghan, NSW 2308, Australia; E-Mail: [email protected] (J.H.) * Author to whom correspondence should be addressed; E-Mail: [email protected]; Tel.: +61-2-9351-1984. Received: 17 August 2011; in revised form: 13 September 2011 / Accepted: 23 September 2011 / Published: 27 September 2011 Abstract: Linewidth measurements of a distributed feedback (DFB) fibre laser are made using delayed self heterodyne interferometry (DHSI) with both Mach-Zehnder and Michelson interferometer configurations. Voigt fitting is used to extract and compare the Lorentzian and Gaussian linewidths and associated sources of noise. The respective measurements are wL (MZI) = (1.6 ± 0.2) kHz and wL (MI) = (1.4 ± 0.1) kHz. The Michelson with Faraday rotator mirrors gives a slightly narrower linewidth with significantly reduced error. -

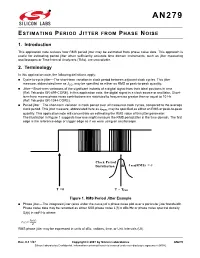

AN279: Estimating Period Jitter from Phase Noise

AN279 ESTIMATING PERIOD JITTER FROM PHASE NOISE 1. Introduction This application note reviews how RMS period jitter may be estimated from phase noise data. This approach is useful for estimating period jitter when sufficiently accurate time domain instruments, such as jitter measuring oscilloscopes or Time Interval Analyzers (TIAs), are unavailable. 2. Terminology In this application note, the following definitions apply: Cycle-to-cycle jitter—The short-term variation in clock period between adjacent clock cycles. This jitter measure, abbreviated here as JCC, may be specified as either an RMS or peak-to-peak quantity. Jitter—Short-term variations of the significant instants of a digital signal from their ideal positions in time (Ref: Telcordia GR-499-CORE). In this application note, the digital signal is a clock source or oscillator. Short- term here means phase noise contributions are restricted to frequencies greater than or equal to 10 Hz (Ref: Telcordia GR-1244-CORE). Period jitter—The short-term variation in clock period over all measured clock cycles, compared to the average clock period. This jitter measure, abbreviated here as JPER, may be specified as either an RMS or peak-to-peak quantity. This application note will concentrate on estimating the RMS value of this jitter parameter. The illustration in Figure 1 suggests how one might measure the RMS period jitter in the time domain. The first edge is the reference edge or trigger edge as if we were using an oscilloscope. Clock Period Distribution J PER(RMS) = T = 0 T = TPER Figure 1. RMS Period Jitter Example Phase jitter—The integrated jitter (area under the curve) of a phase noise plot over a particular jitter bandwidth. -

Signal-To-Noise Ratio and Dynamic Range Definitions

Signal-to-noise ratio and dynamic range definitions The Signal-to-Noise Ratio (SNR) and Dynamic Range (DR) are two common parameters used to specify the electrical performance of a spectrometer. This technical note will describe how they are defined and how to measure and calculate them. Figure 1: Definitions of SNR and SR. The signal out of the spectrometer is a digital signal between 0 and 2N-1, where N is the number of bits in the Analogue-to-Digital (A/D) converter on the electronics. Typical numbers for N range from 10 to 16 leading to maximum signal level between 1,023 and 65,535 counts. The Noise is the stochastic variation of the signal around a mean value. In Figure 1 we have shown a spectrum with a single peak in wavelength and time. As indicated on the figure the peak signal level will fluctuate a small amount around the mean value due to the noise of the electronics. Noise is measured by the Root-Mean-Squared (RMS) value of the fluctuations over time. The SNR is defined as the average over time of the peak signal divided by the RMS noise of the peak signal over the same time. In order to get an accurate result for the SNR it is generally required to measure over 25 -50 time samples of the spectrum. It is very important that your input to the spectrometer is constant during SNR measurements. Otherwise, you will be measuring other things like drift of you lamp power or time dependent signal levels from your sample. -

Estimation from Quantized Gaussian Measurements: When and How to Use Dither Joshua Rapp, Robin M

1 Estimation from Quantized Gaussian Measurements: When and How to Use Dither Joshua Rapp, Robin M. A. Dawson, and Vivek K Goyal Abstract—Subtractive dither is a powerful method for remov- be arbitrarily far from minimizing the mean-squared error ing the signal dependence of quantization noise for coarsely- (MSE). For example, when the population variance vanishes, quantized signals. However, estimation from dithered measure- the sample mean estimator has MSE inversely proportional ments often naively applies the sample mean or midrange, even to the number of samples, whereas the MSE achieved by the when the total noise is not well described with a Gaussian or midrange estimator is inversely proportional to the square of uniform distribution. We show that the generalized Gaussian dis- the number of samples [2]. tribution approximately describes subtractively-dithered, quan- tized samples of a Gaussian signal. Furthermore, a generalized In this paper, we develop estimators for cases where the Gaussian fit leads to simple estimators based on order statistics quantization is neither extremely fine nor extremely coarse. that match the performance of more complicated maximum like- The motivation for this work stemmed from a series of lihood estimators requiring iterative solvers. The order statistics- experiments performed by the authors and colleagues with based estimators outperform both the sample mean and midrange single-photon lidar. In [3], temporally spreading a narrow for nontrivial sums of Gaussian and uniform noise. Additional laser pulse, equivalent to adding non-subtractive Gaussian analysis of the generalized Gaussian approximation yields rules dither, was found to reduce the effects of the detector’s coarse of thumb for determining when and how to apply dither to temporal resolution on ranging accuracy. -

Active Noise Control Over Space: a Subspace Method for Performance Analysis

applied sciences Article Active Noise Control over Space: A Subspace Method for Performance Analysis Jihui Zhang 1,* , Thushara D. Abhayapala 1 , Wen Zhang 1,2 and Prasanga N. Samarasinghe 1 1 Audio & Acoustic Signal Processing Group, College of Engineering and Computer Science, Australian National University, Canberra 2601, Australia; [email protected] (T.D.A.); [email protected] (W.Z.); [email protected] (P.N.S.) 2 Center of Intelligent Acoustics and Immersive Communications, School of Marine Science and Technology, Northwestern Polytechnical University, Xi0an 710072, China * Correspondence: [email protected] Received: 28 February 2019; Accepted: 20 March 2019; Published: 25 March 2019 Abstract: In this paper, we investigate the maximum active noise control performance over a three-dimensional (3-D) spatial space, for a given set of secondary sources in a particular environment. We first formulate the spatial active noise control (ANC) problem in a 3-D room. Then we discuss a wave-domain least squares method by matching the secondary noise field to the primary noise field in the wave domain. Furthermore, we extract the subspace from wave-domain coefficients of the secondary paths and propose a subspace method by matching the secondary noise field to the projection of primary noise field in the subspace. Simulation results demonstrate the effectiveness of the proposed algorithms by comparison between the wave-domain least squares method and the subspace method, more specifically the energy of the loudspeaker driving signals, noise reduction inside the region, and residual noise field outside the region. We also investigate the ANC performance under different loudspeaker configurations and noise source positions. -

ECE 417 Lecture 3: 1-D Gaussians Mark Hasegawa-Johnson 9/5/2017 Contents

ECE 417 Lecture 3: 1-D Gaussians Mark Hasegawa-Johnson 9/5/2017 Contents • Probability and Probability Density • Gaussian pdf • Central Limit Theorem • Brownian Motion • White Noise • Vector with independent Gaussian elements Cumulative Distribution Function (CDF) A “cumulative distribution function” (CDF) specifies the probability that random variable X takes a value less than : Probability Density Function (pdf) A “probability density function” (pdf) is the derivative of the CDF: That means, for example, that the probability of getting an X in any interval is: Example: Uniform pdf The rand() function in most programming languages simulates a number uniformly distributed between 0 and 1, that is, Suppose you generated 100 random numbers using the rand() function. • How many of the numbers would be between 0.5 and 0.6? • How many would you expect to be between 0.5 and 0.6? • How many would you expect to be between 0.95 and 1.05? Gaussian (Normal) pdf Gauss considered this problem: under what circumstances does it make sense to estimate the mean of a distribution, , by taking the average of the experimental values, ? He demonstrated that is the maximum likelihood estimate of if Gaussian pdf Attribution: jhguch, https://commons. wikimedia.org/wik i/File:Boxplot_vs_P DF.svg Unit Normal pdf Suppose that X is normal with mean and standard deviation (variance ): Then is normal with mean 0 and standard deviation 1: Central Limit Theorem The Gaussian pdf is important because of the Central Limit Theorem. Suppose are i.i.d. (independent and identically distributed), each having mean and variance . Then Example: the sum of uniform random variables Suppose that are i.i.d. -

Topic 5: Noise in Images

NOISE IN IMAGES Session: 2007-2008 -1 Topic 5: Noise in Images 5.1 Introduction One of the most important consideration in digital processing of images is noise, in fact it is usually the factor that determines the success or failure of any of the enhancement or recon- struction scheme, most of which fail in the present of significant noise. In all processing systems we must consider how much of the detected signal can be regarded as true and how much is associated with random background events resulting from either the detection or transmission process. These random events are classified under the general topic of noise. This noise can result from a vast variety of sources, including the discrete nature of radiation, variation in detector sensitivity, photo-graphic grain effects, data transmission errors, properties of imaging systems such as air turbulence or water droplets and image quantsiation errors. In each case the properties of the noise are different, as are the image processing opera- tions that can be applied to reduce their effects. 5.2 Fixed Pattern Noise As image sensor consists of many detectors, the most obvious example being a CCD array which is a two-dimensional array of detectors, one per pixel of the detected image. If indi- vidual detector do not have identical response, then this fixed pattern detector response will be combined with the detected image. If this fixed pattern is purely additive, then the detected image is just, f (i; j) = s(i; j) + b(i; j) where s(i; j) is the true image and b(i; j) the fixed pattern noise. -

Adaptive Noise Suppression of Pediatric Lung Auscultations with Real Applications to Noisy Clinical Settings in Developing Countries Dimitra Emmanouilidou, Eric D

IEEE TRANSACTIONS ON BIOMEDICAL ENGINEERING, VOL. 62, NO. 9, SEPTEMBER 2015 2279 Adaptive Noise Suppression of Pediatric Lung Auscultations With Real Applications to Noisy Clinical Settings in Developing Countries Dimitra Emmanouilidou, Eric D. McCollum, Daniel E. Park, and Mounya Elhilali∗, Member, IEEE Abstract— Goal: Chest auscultation constitutes a portable low- phy or other imaging techniques, as well as chest percussion cost tool widely used for respiratory disease detection. Though it and palpation, the stethoscope remains a key diagnostic de- offers a powerful means of pulmonary examination, it remains vice due to its portability, low cost, and its noninvasive nature. riddled with a number of issues that limit its diagnostic capabil- ity. Particularly, patient agitation (especially in children), back- Chest auscultation with standard acoustic stethoscopes is not ground chatter, and other environmental noises often contaminate limited to resource-rich industrialized settings. In low-resource the auscultation, hence affecting the clarity of the lung sound itself. high-mortality countries with weak health care systems, there is This paper proposes an automated multiband denoising scheme for limited access to diagnostic tools like chest radiographs or ba- improving the quality of auscultation signals against heavy back- sic laboratories. As a result, health care providers with variable ground contaminations. Methods: The algorithm works on a sim- ple two-microphone setup, dynamically adapts to the background training and supervision -

Lecture 18: Gaussian Channel, Parallel Channels and Rate-Distortion Theory 18.1 Joint Source-Channel Coding (Cont'd) 18.2 Cont

10-704: Information Processing and Learning Spring 2012 Lecture 18: Gaussian channel, Parallel channels and Rate-distortion theory Lecturer: Aarti Singh Scribe: Danai Koutra Disclaimer: These notes have not been subjected to the usual scrutiny reserved for formal publications. They may be distributed outside this class only with the permission of the Instructor. 18.1 Joint Source-Channel Coding (cont'd) Clarification: Last time we mentioned that we should be able to transfer the source over the channel only if H(src) < Capacity(ch). Why not design a very low rate code by repeating some bits of the source (which would of course overcome any errors in the long run)? This design is not valid, because we cannot accumulate bits from the source into a buffer; we have to transmit them immediately as they are generated. 18.2 Continuous Alphabet (discrete-time, memoryless) Channel The most important continuous alphabet channel is the Gaussian channel. We assume that we have a signal 2 Xi with gaussian noise Zi ∼ N (0; σ ) (which is independent from Xi). Then, Yi = Xi + Zi. Without any Figure 18.1: Gaussian channel further constraints, the capacity of the channel can be infinite as there exists an inifinte subset of the inputs which can be perfectly recovery (as discussed in last lecture). Therefore, we are interested in the case where we have a power constraint on the input. For every transmitted codeword (x1; x2; : : : ; xn) the following inequality should hold: n n 1 X 1 X x2 ≤ P (deterministic) or E[x2] = E[X2] ≤ P (randomly generated codebits) n i n i i=1 i=1 Last time we computed the information capacity of a Gaussian channel with power constraint. -

Noise Reduction Through Active Noise Control Using Stereophonic Sound for Increasing Quite Zone

Noise reduction through active noise control using stereophonic sound for increasing quite zone Dongki Min1; Junjong Kim2; Sangwon Nam3; Junhong Park4 1,2,3,4 Hanyang University, Korea ABSTRACT The low frequency impact noise generated during machine operation is difficult to control by conventional active noise control. The Active Noise Control (ANC) is a destructive interference technic of noise source and control sound by generating anti-phase control sound. Its efficiency is limited to small space near the error microphones. At different locations, the noise level may increase due to control sounds. In this study, the ANC method using stereophonic sound was investigated to reduce interior low frequency noise and increase the quite zone. The Distance Based Amplitude Panning (DBAP) algorithm based on the distance between the virtual sound source and the speaker was used to create a virtual sound source by adjusting the volume proportions of multi speakers respectively. The 3-Dimensional sound ANC system was able to change the position of virtual control source using DBAP algorithm. The quiet zone was formed using fixed multi speaker system for various locations of noise sources. Keywords: Active noise control, stereophonic sound I-INCE Classification of Subjects Number(s): 38.2 1. INTRODUCTION The noise reduction is a main issue according to improvement of living condition. There are passive and active control methods for noise reduction. The passive noise control uses sound absorption material which has efficiency about high frequency. The Active Noise Control (ANC) which is the method for generating anti phase control sound is able to reduce low frequency. -

Link Budgets 1

Link Budgets 1 Intuitive Guide to Principles of Communications www.complextoreal.com Link Budgets You are planning a vacation. You estimate that you will need $1000 dollars to pay for the hotels, restaurants, food etc.. You start your vacation and watch the money get spent at each stop. When you get home, you pat yourself on the back for a job well done because you still have $50 left in your wallet. We do something similar with communication links, called creating a link budget. The traveler is the signal and instead of dollars it starts out with “power”. It spends its power (or attenuates, in engineering terminology) as it travels, be it wired or wireless. Just as you can use a credit card along the way for extra money infusion, the signal can get extra power infusion along the way from intermediate amplifiers such as microwave repeaters for telephone links or from satellite transponders for satellite links. The designer hopes that the signal will complete its trip with just enough power to be decoded at the receiver with the desired signal quality. In our example, we started our trip with $1000 because we wanted a budget vacation. But what if our goal was a first-class vacation with stays at five-star hotels, best shows and travel by QE2? A $1000 budget would not be enough and possibly we will need instead $5000. The quality of the trip desired determines how much money we need to take along. With signals, the quality is measured by the Bit Error Rate (BER).