Naoko Tosa Cross-Cultural Computing: an Artist's Journey Springer Series on Cultural Computing

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

TOM FORD Lumineuse Instantanée, Oui

ellequebec.com. SARAH-JEANNE LABROSSE. A TASTE FOR RISK. BEAUTY DIY: RECIPES FROM THE ELLE EDITORIAL TEAM. Fashion and beauty TRENDS. POSTCARDS FROM ISRAEL. REFUGEE WOMEN IN QUEBEC: THEIR STORIES. SEPTEMBER 2018. calvinklein.com/205. biotherm.ca. CHRISTY TURLINGTON-BURNS. Follow us on Facebook and Instagram. THE POWER OF NATURE INFUSED INTO THE HEART OF TARGETED ANTI-AGING SOLUTIONS. PROTECTS AND REPAIRS: for normal to dry skin. RAPID REPAIR: for all skin types. FIRMS AND REPAIRS: for all skin types. NOURISHES AND REPAIRS: for normal to dry skin. BLUE THERAPY, THE NO. 1 ANTI-AGING MOISTURIZER IN CANADA*. * No. 1 by volume, luxury skincare market in Canada, 12-month period ending December 2017 (source: NPD). Discover our skin diagnostic to find your personalized routine. Visit biotherm.ca or your nearest pharmacy and get a free sample. eau de toilette. Contents, September 2018. Denim jacket and mesh dress adorned with crystals (Dolce & Gabbana); copper earrings (Sunday Feel). CULTURE. ON THE COVER. Outings, theatre, cinema, music, books... FASHION. TIGHT PLEATS. The pleated skirt, three ways. EMERALD GREEN. The colour of the new season. ELLE MEETS. Sarah-Jeanne Labrosse leads the dance. PLAYING HOOKY. Discovering the season's new arrivals. FEATURE. Refugee women's journeys: fleeing and healing to start living again. THIS IS MY STORY. "One evening, I walked the streets." -

Fashion, Costume, and Culture: Clothing, Headwear, Body Decorations, and Footwear Through the Ages

Fashion, Costume, and Culture: Clothing, Headwear, Body Decorations, and Footwear through the Ages. Volume 2: Early Cultures Across the Globe. SARA PENDERGAST AND TOM PENDERGAST; SARAH HERMSEN, Project Editor. Project Editor: Sarah Hermsen. Editorial: Lawrence W. Baker. Permissions: Shalice Shah-Caldwell, Ann Taylor. Imaging and Multimedia: Dean Dauphinais, Dave Oblender. Product Design: Kate Scheible. Composition: Evi Seoud. Manufacturing: Rita Wimberley. ©2004 by U•X•L. U•X•L is an imprint of The Gale Group, Inc., a division of Thomson Learning, Inc. U•X•L® is a registered trademark used herein under license. Thomson Learning™ is a trademark used herein under license. For permission to use material from this product, submit your request via the Web at http://www.gale-edit.com/permissions, or you may download our Permissions Request form and submit your request by fax or mail to: Permissions Department, The Gale Group, Inc., 27500 Drake Rd., Farmington Hills, MI 48331-3535. Picture Archive/CORBIS, the Library of Congress, AP/Wide World Photos; large photo, Public Domain. Volume 4, from top to bottom, © Austrian Archives/CORBIS, AP/Wide World Photos, © Kelly A. Quin; large photo, AP/Wide World Photos. Volume 5, from top to bottom, Susan D. Rock, AP/Wide World Photos, © Ken Settle; large photo, AP/Wide World Photos. For more information, contact: -

Japonesque-2018-Print-Catalog.Pdf

ABOUT US JAPONESQUE’s founder, a professional model, was captivated by the unique makeup tools and palettes used by Japanese makeup artists in Kabuki theater in Japan. Inspired by the artistry, she brought these tools to the US where they eventually found their way to a feature story in Glamour Magazine. This article captured the attention of US makeup artists who wanted these palettes for their clients and JAPONESQUE was born. Driven by the love of creating beauty, JAPONESQUE has earned the reputation of creating the most refined, innovative and distinctive beauty accessories, makeup brushes and cosmetics in the world. Favored by celebrated makeup artists and beauty professionals worldwide, JAPONESQUE products are crafted with precision and performance in mind. Exceptional materials, superior craftsmanship and uncompromising standards have allowed us to create a line of coveted products that deliver flawless results. JAPONESQUE is trusted by makeup artists and beauty aficionados alike and has some of the most desired cosmetics and beauty accessories in the world. COSMETICS JAPONESQUE Color Cosmetics are formulated with superior quality ingredients to perform exactly as the user wishes, giving complete control to create a look that is an exceptional expression of beauty. Our pro-performance complexion cosmetics, including foundation, concealers, bronzers and highlighters deliver results with today’s most trending techniques to create a flawless canvas. Our artist inspired color palettes and lip colors offer runway beauty with the versatility to create looks ranging from soft and subtle to bold and bright. FACE COLOR CORRECTING LUMINOUS FOUNDATION VELVET TOUCH® CONCEALER CRAYON An exquisitely dewy liquid foundation for the perfect Provides superb matte coverage. -

Delft University of Technology Tatami

Delft University of Technology. Tatami. Hein, Carola. Publication date: 2016. Document version: Final published version. Published in: Kyoto Design Lab. Citation (APA): Hein, C. (2016). Tatami. In A. C. de Ridder (Ed.), Kyoto Design Lab.: The tangible and the intangible of the Machiya House (pp. 9-12). Delft University of Technology. TATAMI. Inside the Shōkin-tei, located in the garden of the Katsura Imperial Villa. A joint of three tatami. Tatami, Carola Hein. Use of the tatami mat reportedly goes back to the 8th century (the Nara period in Japan) when single mats began to be used as beds, or brought out for a high-ranking person to sit on. Over centuries it became a platform that has hosted all facets of life for generations of Japanese. From palaces to houses, from temples to spaces for martial art, the tatami has served as support element for life. -

Taosrewrite FINAL New Title Cover

Authenticity and Architecture: Representation and Reconstruction in Context. Dissertation submitted to obtain the degree of doctor at Tilburg University, on the authority of the rector magnificus, prof. dr. Ph. Eijlander, to be defended in public before a committee appointed by the Doctorate Board, in the Ruth First room of the University, on Monday 10 November 2014 at 10:15, by Robert Curtis Anderson, born on 5 April 1966 in Brooklyn, New York, USA. Supervisors: prof. dr. K. Gergen, prof. dr. A. de Ruijter. Other members of the doctoral committee: prof. dr. V. Aebischer, prof. dr. E. Todorova, dr. J. Lannamann, dr. J. Storch. Cover images (top to bottom): Fantoft Stave Church, Bergen, Norway, photo by author; Ise Shrine Secondary Building, Ise-shi, Japan, photo by author; King Håkon’s Hall, Bergen, Norway, photo by author; Kazan Cathedral, Moscow, Russia, photo by author; Walter Gropius House, Lincoln, Massachusetts, US, photo by Mark Cohn, taken from: UPenn Almanac, www.upenn.edu/almanac/volumes. Table of Contents: Abstract; Preface; 1 Grand Narratives and Authenticity; 2 The Social Construction of Architecture; 3 Authenticity, Memory, and Truth; 4 Cultural Tourism, Conservation Practices, and Authenticity; 5 Authenticity, Appropriation, Copies, and Replicas; 6 Authenticity Reconstructed: the Fantoft Stave Church, Bergen, Norway; 7 Renewed Authenticity: the Ise Shrines (Geku and Naiku), Ise-shi, Japan; 8 Concluding Discussion; Appendix I, II, and III (I: The Venice Charter, 1964; II: The Nara Document on Authenticity, 1994; III: Convention for the Safeguarding of Intangible Cultural Heritage, 2003); Bibliography; Acknowledgments. Abstract. Architecture is about aging well, about precision and authenticity.1 - Annabelle Selldorf, architect. Throughout human history, due to war, violence, natural catastrophes, deterioration, weathering, social mores, and neglect, the cultural meanings of various architectural structures have been altered. -

Folklore and Earthquakes: Native American Oral Traditions from Cascadia Compared with Written Traditions from Japan

Folklore and earthquakes: Native American oral traditions from Cascadia compared with written traditions from Japan. Article in Geological Society London Special Publications · January 2007. DOI: 10.1144/GSL.SP.2007.273.01.07. RUTH S. LUDWIN 1 & GREGORY J. SMITS 2. 1 Department of Earth and Space Sciences, University of Washington, Box 351310, Seattle, WA 98195-1310, USA (e-mail: [email protected]) 2 Department of History and Program in Religious Studies, 108 Weaver Building, The Pennsylvania State University, University Park, PA 16802, USA. With contributions from D. CARVER 3, K. JAMES 4, C. JONIENTZ-TRISLER 5, A. D. MCMILLAN 6, R. LOSEY 7, R. -

30IM-RK5501-MS04-00B-66ST Q713773.Pdf

30IM-RK5501-MS04-00B-66ST QUOTE # SPRING 2021 ALBERTSONS LLC 04' COSMETICS UNIVERSAL FIX 66" PWD TRANS PWD MATTE+PORE FIT ME PWD SUN BGE SUN PWD MATTE+PORE FIT ME QUOTE # FIT ME 1 SUPERSTAY FULL N SOFT VOL XPRS VOL EXP MASC LASH UNSTOPPABLE 11 MATTE+PORE GREAT LASH FND PORCELAN WP MASC VERY FALSIES COLOSSAL SENSATION 27 EYELINER ONYX 2 SUPERSTAY MASCARA WSH 23 19 WSHBLE BLK/BK FIT ME 41 GLAM BLK WSH VERY BLACK 33 12 49 V BLACK 37 MATTE+PORE 45 BLACK UNSTOPPABLE FND NAT IVRY 3 SUPERSTAY EYELINER TRUE BG PWD MAT ESS FITME+POREL IVRY CLS PWD MATTE+PORE FIT ME FULL N SOFT VOL XPRS LASH 28 FIT ME VOL EXP MASC ESPRESSO 13 MATTE+PORE MASCARA VERY GREAT LASH FALSIES WP COLOSSAL SENSATION FND CLS IVRY 4 SUPERSTAY BLACK VERY BLK EYE STUDIO MSTR 50 42 FIT ME MASCARA WTP WSH 24 20 VERY BLK 34 29 PREC LNR BLACK 14 38 V BLACK BROWN/BLACK MATTE+PORE 5 SUPERSTAY 46 FND NAT BGE TOTAL TEMPT VOL XPRS LASH E/S PRECISE TOFFEE PWD MATTE+PORE FIT ME NATBGE PWD MATTE+PORE FIT ME FIT ME 6 SUPERSTAY MASC WTP GREAT LASH VOL EXP MASC SENSATION SKINNY DEFINE BLK 15 MATTE+PORE FALSIES 30 FND SFT TAN VERY BLK MASCARA COLOSSAL WPF VERY WSHBLE VRY BK 35 7 43 FIT ME 51 SUPERSTAY 25 21 GLAM BLK 16 BLKST BLACK 39 MATTE+PORE 47 BLACK PRECISE INK LNR FND PURE BGE BLACK COMET 8 TOTAL TEMPT 31 SUPERSTAY SPRING 2021 LASH STILETTO TAN NAT PWD MAT ESS FITME+POREL PWD BUFF BGE MATTE+PORE FIT ME FIT ME LOTS OF VOL EXP RCKT PM0000030 PM0000030 PM0000030 PM0000030 VEX COLOSSAL MASC WSH 17 MATTE+PORE WSHBLE VERY FND SUN BGE LASHES WSHB 9 WSH MASC WSH BLKST BLK EYE STUDIO SUPERSTAY -

Sengoku Revised Edition E-Book

SENGOKU™: CHANBARA ROLEPLAYING IN FEUDAL JAPAN. Revised Edition. CREDITS. Authors: Anthony J. Bryant and Mark Arsenault. Fuzion Roleplaying Rules: David Ackerman-Gray, Bruce Harlick, Ray Greer, George MacDonald, Steve Peterson, Mike Pondsmith, Benjamin Wright. Sengoku-specific Rules: Mark Arsenault. Project Developer & Revisions: Mark Arsenault. Editorial Contributions: David Carroll, Dorian Davis, Paul Mason, Andrew Martin, Sakai Naoko. Cover Illustration: Jason A. Engle. Interior Illustrations: Paul Abrams, Mark Arsenault, Heather Bruton, Nancy Champion, Storn Cook, Audrey Corman, Steve Goss, John Grigni, Kraig Horigan, Bryce Nakagawa, J. Scott Reeves, Greg Smith, Tonya Walden. Layout Design & Graphics: Mark Arsenault. Cartography: Mark Arsenault & Anthony J. Bryant. Playtesters: Margaret Arsenault, Mark Arsenault, Andrew Bordner, Theron Bretz, Matt Converse-Willson, Josh Conway, Mark Craddock, Dorian Davis, Paul Delon, Frank Foulis, Scott Galliand, Steve B. [...] Michelle Knight, Charles Landauer, Bill Layman, Greg Lloyd, Paradise Long, Steve Long, Jonathan Luse, Kevin MacGregor, Shari MacGregor, Paul Mason, John Mehrholz, Edwin Millheim, Mike Montesa, Dale Okada, Arcangel Ortiz, Jr., Ken Pryde, Mauro Reis, David Ross, Arzhange Safdarzadeh, Rick Sagely, Janice Sellers, Matt Smith, Susan Stafford, Patrick Sweeney, Simon Taylor, Andy Vetromile, Marissa Way, Paul Wilcox, Chris Wolf. Additional Thanks: To Paul Hume, and to everyone on the Sengoku mailing list for their suggestions and encouragement, especially Dorian Davis, Anthony Jackson, Dave Mattingly, Mike Montesa, Simon Seah, and Paul Wilcox. Revised Edition Thanks: To Peter Corless for helping us realize the “new” dream, Sakai Naoko and David Carroll for editorial contributions, Kurosawa Akira and Mifune Toshirô for fueling the fire, Margaret for continued support, and to all the fans for keeping Sengoku alive! Sengoku Mailing List: To join the Sengoku e-mail list just -

Glimpses of Unfamiliar Japan Second Series by Lafcadio Hearn

Glimpses of Unfamiliar Japan, Second Series, by Lafcadio Hearn. CONTENTS: 1 IN A JAPANESE GARDEN; 2 THE HOUSEHOLD SHRINE; 3 OF WOMEN'S HAIR; 4 FROM THE DIARY OF AN ENGLISH TEACHER; 5 TWO STRANGE FESTIVALS; 6 BY THE JAPANESE SEA; 7 OF A DANCING-GIRL; 8 FROM HOKI TO OKI; 9 OF SOULS; 10 OF GHOSTS AND GOBLINS; 11 THE JAPANESE SMILE; 12 SAYONARA!; NOTES. CHAPTER ONE: In a Japanese Garden. Sec. 1. My little two-story house by the Ohashigawa, although dainty as a bird-cage, proved much too small for comfort at the approach of the hot season—the rooms being scarcely higher than steamship cabins, and so narrow that an ordinary mosquito-net could not be suspended in them. I was sorry to lose the beautiful lake view, but I found it necessary to remove to the northern quarter of the city, into a very quiet street behind the mouldering castle. My new home is a katchiu-yashiki, the ancient residence of some samurai of high rank. It is shut off from the street, or rather roadway, skirting the castle moat by a long, high wall coped with tiles. One ascends to the gateway, which is almost as large as that of a temple court, by a low broad flight of stone steps; and projecting from the wall, to the right of the gate, is a look-out window, heavily barred, like a big wooden cage.
Thence, in feudal days, armed retainers kept keen watch on all who passed by—invisible watch, for the bars are set so closely that a face behind them cannot be seen from the roadway. -

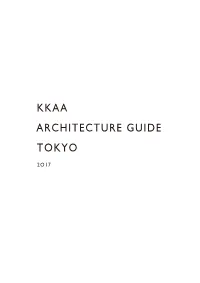

Kkaa Architecture Guide Tokyo

KKAA ARCHITECTURE GUIDE TOKYO 2017. KUMAP 1: CENTRAL TOKYO. BUILDINGS: 1 = NEZU KAMACHIKU; 2 = WITHUS NEZU; 3 = DAIWA UBIQUITOUS COMPUTING RESEARCH BUILDING; 4 = KUROGI; 5 = ASAKUSA TOURIST INFORMATION CENTER; 6 = HULIC ASAKUSABASHI BUILDING; 7 = KAYANOYA; 8 = TONETS BUILDING; 9 = KITTE; 10 = SHICHIJUNIKOU; 11 = SUSHIDOKORO JUN; 12 = TIFFANY GINZA; 13 = GINZA KABUKIZA; 14 = JUGETSUDO KABUKIZA; 15 = GINZA SHOCHIKU SQUARE; 16 = SHINONOME CANAL COURT CODAN; 17 = THE CAPITOL HOTEL TOKYU; 18 = TOKYO MIDTOWN D-NORTH WING; 19 = SUNTORY MUSEUM; 20 = LUCIEN PELLAT-FINET TOKYO MIDTOWN SHOP; 21 = KURAYAMIZAKA MIYASHITA; 22 = BAISO IN TEMPLE; 23 = ESCORTER AOYAMA; 24 = NEZU INSTITUTE OF FINE ARTS; 25 = NEZUCAFÉ; 26 = MIELE CENTER OMOTESANDO; 27 = SUNNYHILLS JAPAN; 28 = ONE OMOTESANDO; 29 = WAKETOKUYAMA; 30 = JR SHIBUYA STATION FAÇADE; 31 = SHIBUYA STATION AREA REDEVELOPMENT PROJECT; 32 = RED BULL MUSIC ACADEMY; 33 = AKAGI JINJA SHRINE; 34 = LA KAGU; 35 = TOSHIMA CITY HALL. KUMAP 2: WESTERN TOKYO. BUILDINGS: 36 = TECCHAN; 37 = M2; 38 = FOOD AND AGRICULTURE MUSEUM; 49,40 = TAMAGAWA TAKASHIMAYA S.C. -

The Development of Kaji Kito in Nichiren Shu Buddhism Kyomi J

The Development of Kaji Kito in Nichiren Shu Buddhism. Kyomi J. Igarashi. Submitted in Partial Fulfillment of the Prerequisite for Honors in Religion, April 2012. Copyright 2012 Kyomi J. Igarashi. ACKNOWLEDGEMENTS. First and foremost, I would like to thank Professor Kodera for his guidance and all that he has taught me throughout my four years at Wellesley College. I could not have written this thesis or taken on this topic of my interest without his encouragement and words of advice. I would like to acknowledge the Religion Department for funding me on my trip to Japan in December 2011 to do research for my thesis. I would also like to thank Reverend Ekyo Tsuchida for his great assistance and dedication during my trip to Japan in finding important information and setting up interviews for me, without which I could not have written this thesis. I am forever grateful for your kindness. I express my gratitude to Reverend Ryotoku Miyagawa, Professor Akira Masaki and Professor Daijo Takamori for kindly offering their expertise and advice as well as relevant sources used in this thesis. I would also like to acknowledge Reverend Honyo Okuno for providing me with important sources as well as giving me the opportunity to observe the special treasures exhibited at the Kuonji Temple on Mount Minobu. Last but not least, I would like to extend my appreciation to my father, mother and younger brother who have always supported me in all my decisions and endeavors. Thank you for the support that you have given me. ABSTRACT. While the historical and religious roots of kaji kito (“ritual prayer”) lie in Indian and Chinese Esoteric Buddhist practices, the most direct influence of kaji kito in Nichiren Shu Buddhism, a Japanese Buddhist sect founded by the Buddhist monk, Nichiren (1222-1282), comes from Shingon and Tendai Buddhism, two traditions that precede Nichiren’s time.